Air Quality Data Summit Meeting Notes

Meeting write-up submitted by L. Sweeny as a Word document, March 6.

Day 1 February 12, 2008

Summit Purpose and Objectives

Chet Wayland (EPA OAQPS) and Rich Scheffe (EPA OAQPS) welcomed participants and reviewed the purpose of the data summit:

- to convene organizations and individuals with key roles in retrieving, storing, disseminating, and analyzing air quality data in order to learn about and explore efficient means of leveraging the numerous individual efforts underway;

- to assist EPA/OAQPS in honing its role in the larger air quality data community;

- to begin to establish a community-wide strategy responsive to user-defined needs.

Data system and data processing center profiles

To enable productive discussions in subsequent sessions, air quality (AQ) data system and AQ data processing center owners briefed meeting participants on their respective systems. The following list gives each data system and data processing center reviewed during the meeting, with a link to a brief profile, a link to the presentation given at the data summit, and the name of the presenter.

Inventory of Data Systems - Dimmick

- AQS: Profile | Presentation - Miller

- CASTNET: Profile | Presentation - Lear

- AIRNow: Profile | Presentation - Dye

- NASA DAACs: Profile | Presentation - Ritchey/Closs

- NEI & EMF: NEI & EMF Profile | Presentation - Houyoux

- Air Quality Modeling Data (CMAQ): Profile | Presentation - Possiel

- NOAA systems: Presentation - Faas

- Data provider perspectives: Presentation - Felton

Inventory of Data Processing Centers - Lorang

- AIRQuest: Profile | Presentation - Dickerson

- 3D-AQS: Profile | Presentation - Engel-Cox

- HEI: Profile | Presentation - Pun

- NCAR Unidata IDD/LDM System: Profile | Presentation - Domenico

- NASA Giovanni: Profile | Presentation - Leptoukh

- RSIG: Profile | Presentation - Plessel

- Environmental GeoWeb: Profile | Presentation - Walter

- VIEWS: Profile | Presentation - McClure/Schichtel

- DataFed, ESIP, NEISGEI: DataFed Profile, ESIP Profile, NEISGEI Profile | Presentation - Husar/Falke

Identifying key challenges and needs for air quality information

Data summit participants split into four groups to discuss the key challenges and needs for air quality information in: Public Health; Air Quality Forecasting and Reanalysis for Assessment; Model/Emissions Evaluation; and Air Quality and Deposition Characterization for Trends and Accountability. This section briefly summarizes the discussion in each group. Each group set out to answer four questions:

- What trends/needs do you see, or do your users tell you about, for future business uses?

- What are the broad data related needs and capabilities required to support these uses?

- What are key non-IT requirements and challenges to delivering those needs/capabilities?

- What have we missed in this discussion?

A1: Public Health

The participants in the public health breakout session identified that public health practitioners seek:

- Consistent formatting in AQ data to enable spatial integration of information and to have the ability to scale information up and down to meet privacy standards.

- Ability to learn about and understand the composition of the AQ data, and the ability to identify co-pollutants.

Participants identified that to meet these needs the AQ data community and data providers need to:

- Create a mechanism to provide feedback to others in the AQ data community and end users of the information.

- Expose complete metadata, including information about uncertainty, quality assurance (QA), scale, measuring techniques, and detection limits.

- Provide easy access to information and guidelines for appropriate use, including links to clear documentation.

The key non-technical challenges data summit participants identified include a series of issues around archiving and maintaining information. These include:

- Clearly articulating what information needs to be archived, and the associated questions:

- How is material archived?

- How is access to archived information managed?

- How long is information kept?

- How is versioning managed?

- How are the subsets of information used in a specific analysis preserved?

- How are end users kept apprised of improvements and changes in data?

- Establishing chain of custody (pedigree of data)

- Key challenges surrounding the need for metadata include distinguishing between opinion and fact in metadata, provisioning for feedback in the metadata (for the benefit of subsequent users and the primary information provider), and enabling easy creation of metadata.

B1: Air Quality Forecasting and Reanalysis for Assessment

The data summit participants in the AQ Forecasting breakout session identified two types of business users: those doing daily forecasting and those performing long-term forecasting.

The AQ Forecasting breakout session participants identified that the greatest needs are:

- Compiling and providing information about known data sources (including a periodic, regular scan for unknown data sources) and enabling integration of additional supplementary data. Providing this information to end users is both a logistical challenge and a communications issue.

- Forecasters’ biggest data needs (because of a data gap) are for upper-level air measurements to help characterize the boundary layer, and for information about international events, including the ability not only to know when they occur but also to track them.

- For international events, it would be useful to have a “push” function that delivers this type of information to forecasters when events occur.

- Similar to the public health group, this group identified the importance of access to archived information. Of primary importance would be access to historical forecasting model runs and the ability to tag archived model runs with contextual information (e.g., a model run during a La Niña year).

The key challenges identified by the AQ Forecasting participants included:

- Having the ability (as in the public health breakout session) to gather feedback on forecast model performance, to feed into subsequent model runs or to serve as ancillary information for users of the model output.

- Getting federal agencies to collaborate to establish federal data requirements for satellite data and to begin having meaningful conversations about how to eliminate redundant investments.

To improve the forecasting model outputs, participants identified that a key non-technical challenge is ensuring that the meteorological drivers used as inputs are as good as they can be, and that all model inputs for a given run are fully characterized. Lastly, the AQ Forecasting group noted that the public currently has an inaccurate perception of the sophistication of forecasting skill and of how forecasts are being successfully used.

C1: Model/Emissions Evaluation

The Model Evaluation breakout session identified that the most important business uses are:

- The ability to characterize model uncertainty and the confidence levels in model results

- Providing information about what evaluation results mean and why a model is performing a certain way

Key needs identified by the Model Evaluation participants mirror those of the other groups. The biggest needs are for feedback and metadata, including observations metadata and model input/output metadata. For model input/output metadata, it is especially important that the community define a standard set of metadata that describes a given model run (and its output).

Key non-technical issues included:

- Enabling basic access to models in a timely fashion

- This implies that the community must establish policies for when and how models are released; participants noted that this is a unique challenge because making data available for someone else’s benefit is not part of anyone’s job description.

- Making special project and “intensive field campaign” collected data available

- The need to develop consensus protocols for how models can be compared

D1: Air Quality and Deposition Characterization for Trends and Accountability

The Air Quality and Deposition Characterization for Trends and Accountability breakout group identified the following key trend/need areas:

- International and transboundary transport issues

- Climate change

Broad data needs and capabilities identified were:

- Better shared analytical visualization across temporal and geo domains

- Better ability to communicate and collaborate with data producers and co-users

- Better ability to characterize “data quality” to establish suitability for use

Much of the best and most important data is “behind the firewall.”

Challenges and opportunities to deliver these capabilities included:

- More explicit tiering of systems to handle the different levels of user requirements, which range widely from simple interactive tools to more intensive offline analysis

- Improved metadata, perhaps through some collaborative mechanism

- Improved collaboration tools overall, looking to the open source community for examples

- Establishing a mechanism to expose descriptions of services/re-usable components that we already have

Group Discussion

Following the breakout sessions and during the report-outs, the data summit participants identified 40 key concepts. These were collated and summarized into 19 issues to address during the second-day breakout sessions. (The breakout group assigned to each issue appears in parentheses after it.)

- Design choices between end-to-end solutions or component solutions given the diversity of users and needs. (all)

- What existing policies, practices, and regulations influence what we need and what we need to do? (all)

- Building systems robust enough to accommodate future evolution and innovation

- Gaining access to partner data that is not going into our systems (e.g., field campaign data), and the associated issues (A, B)

- Standard protocols/interface for data access and service access (B)

- Design and architecture for search and discovery services (e.g., machine-readable metadata) (B)

- Data, model run, and application feedback from user community, including feedback from forecast performance (all)

- How/what do we archive? How do we inform community of changes? (A, B)

- End user support (C)

- What data can and should be shared (e.g., model outputs) (A)

- Tracking and early detection of events and pushing information (C)

- Ability to tag information related to events (C)

- Feedback on model performance (C)

- Increasing need for transboundary information (C)

- Timeliness and data availability (C)

- Building community into the mission of EPA

- Getting the right (and right level) metadata (all)

- How to use wiki (collaborative) technology for monitoring user needs, user feedback, and metadata (all)

- Reducing redundancy and redirecting resources—process and criteria (all)

Day 2 February 13, 2008

Day 1 Recap and Day 2 Introduction

Re-stating context for the meeting

Comments in this section were provided by the facilitator as a summation of issues from day one and a point of departure for discussion. Opinions in this section do not necessarily represent those of any individual participant; they are provided for discussion purposes only.

EPA, NOAA, NASA, and other funders are making, and will continue to make, funding decisions, many of which have implications for the projects of their peer agencies and across many of the air quality community’s areas of interest. They are making these decisions without an over-arching vision of the system of systems. Most of the attendees of this meeting will be directly or indirectly affected by these decisions.

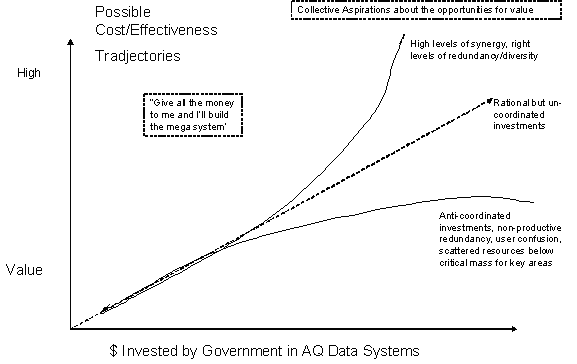

We expect that funding for air quality related projects is likely to be declining or stable. We posit that there is a wide range of cost-effectiveness trajectories for these investments. Fig 1 provides a sketch of these possibilities. They include the lower trajectory of anti-coordinated investments, the linear trajectory of rational but uncoordinated investments, and the “ideal” trajectory, where smarter investments yield high cost effectiveness. The NW quadrant depicts, for discussion purposes only, the rational but unrealistic sentiment that if all spending were somehow centralized in one or a small number of ideal systems, cost effectiveness would be high.

Figure 1 Possible Cost Effectiveness Trajectories

The question for this session is: “With what vision (of some system of systems) should those funding decisions be guided and informed?” And how should that same vision inform the implementation activities of the funding recipients?

Thus the current budget climate presents both a dilemma and an opportunity. The dilemma is that without such an over-arching, user-community-credible vision, funding agencies have to resort to a “pick the big winners” strategy, and do so on the basis of limited information and limited feedback from the user community.

The opportunity, around which this conference was organized, is that through some new forms of collaboration and coordination among all the partners in the data value chain, a credible vision for a system of systems could be established and used by funders and implementers to dramatically improve the efficiency and performance of the overall air quality value chain. Further, this credible vision would position funding agencies to make more persuasive and successful requests for resources (including joint requests, which could be especially powerful).

Assumptions about the current constellation of systems

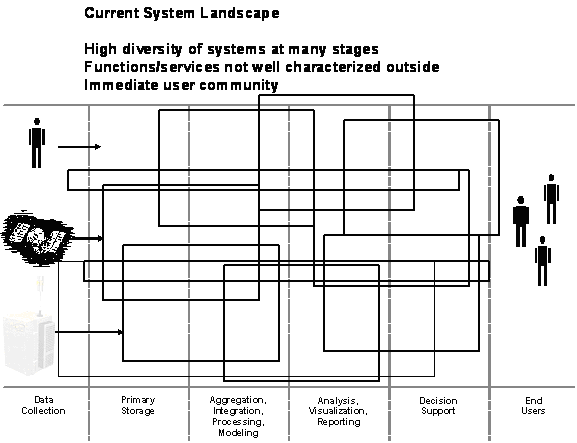

Our current constellation of systems is not well characterized. There is significant overlap among systems performing identical functions. Some of this is healthy diversity and some is redundancy, and we do not always know which is which. This is depicted in Fig 2.

Figure 2 Current System Landscape

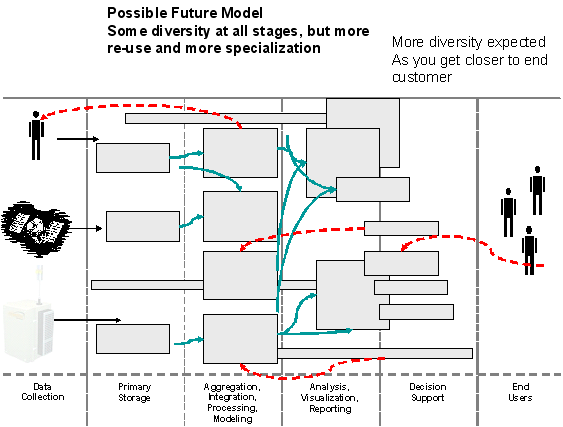

Figure 3 sketches the opportunity space we are discussing (see Rudy’s diagrams of the same concept):

Figure 3

Key attributes of this picture are:

- Fewer (but not zero) overlaps of functionality at the “data” side of the value chain. Duplications that exist are known to the community. They can serve many functions: collaboration, competition, innovation, etc.

- Greater diversity of applications (or “virtual applications”) as one gets closer to the user.

- Standard (blue line) interconnections between systems. As far as possible, these interconnections are standardized across the chain, lowering the overhead of connection.

- Improved feedback (red dashed lines) through purposeful design of “community,” supported by institutional methods for improved communication back to upstream partners.

The opportunity space identified requires design. Under the current set of incentives and guidance it will probably not reach critical mass. Under a different set of incentives and guidance there is great potential for it to be built/evolved/grown.

Principles

We had much discussion about “redundancy, diversity and innovation.” Here are some proposed common principles:

- We want a system that is, overall, more economically efficient.

- We know there will always be “redundancy,” i.e., different parties performing the same business function. The goal is not to eliminate this overlap but, as far as possible, to ensure that it exists for a healthy reason.

- Having room for innovation by definition means allowing a new (additional) way to do something already being done (and perhaps going beyond it).

- There must be cooperation and competition, simultaneously, so that systems that need each other’s inputs/outputs can co-exist, even when one may eventually displace the other.

- System diversity will always increase as one moves closer to the user; we can even envision “individually customized” applications for each user, tied to their specific business needs. Current portal technologies are suggestive of this.

- A possible indicator of success is that, over time, the majority of a system’s investment goes to providing services that are unique within some context. This is the “niche” metaphor from ecology/markets. Some niches may be small, others large.

- Success at this design will require a more active, effective community of air quality data stakeholders, operating over a broader range of issues. A critical function of this community is collecting and synthesizing feedback and “feedforward” to other parts of the system. This topic was highlighted later in the X priorities as “feedback” and in the group’s selection of “Joint/collective characterization of End User requirements.”

- The system and its supporting community need a brain trust/central nervous system of some kind. Most stakeholders must focus on their local parts of this system, but someone must be responsible (through some incentive or requirement) for looking after the operation of the system as a whole, on behalf of, and with enough legitimacy from, the stakeholder community.

- We need some institutional/community process for carrying this work forward. It is larger than any single agency or user group. [link to discussion of governance]

Technical Breakout Sessions

Participants split into three technical function areas (enabling data access, data processing and integration, and visualization/analysis) to answer:

- What are the priority information capabilities needed to achieve the key concepts identified on day one, relevant to this breakout group's area?

- What attributes, or over-arching principles should shape the systems (provide examples)?

- What actions could we take in the near term to demonstrate/validate these attributes and principles? What would be convincing/credible to your agency?

- What support do we need to accomplish these actions and organize our collective work?

A2: Enabling Data Access

Group A2 discussed priority information capabilities reflecting the breakout’s sense of which capabilities are foundational to enabling data access and either don’t exist yet or can be improved upon. An improved ‘system’ would:

- Make access to data the standard expectation for government-funded data;

- Increase access to orphan or gray data;

- Include new classes of data such as model outputs;

- Provide easy access to relevant archived/versioned data;

- Be robust and stable so that application developers and their funders would be confident in relying on such a system, even though they do not own it; and

- Be consistently described.

The overarching principles that Group A2 defined as being important to augment the five system principles identified in the introduction are:

- The system would assume that information is always accessible where data owners define it as appropriate and where ownership and stewardship responsibilities are established. This would include versioned and archived information.

- The system will enable user feedback in all parts of Data Value Chain and be consistently described.

- The system and its participants will adhere to a community defined and governed suite of standards/conventions.

Meshing the capabilities and principles above, Group A2 identified a set of actions to begin improving the system. To begin evolving to a paradigm of default access to information, the community must:

- clarify ownership and stewardship responsibilities,

- ensure that, when data is accessed, credit is given where credit is due,

- work as a community to define the expectation of data accessibility by type of data,

- establish standard mechanisms for data discovery and description, and

- identify what in the system can and should be standardized.

As part of the next steps, the community should investigate and learn from the experience of partners who have been working in these areas ahead of us. Lastly, the group noted that while archiving and versioning are important issues, an immediate next step is to define what is meant by archiving, i.e., active archiving vs. offline archiving.

B2: Data Processing and Integration

Group B2 discussed data processing and integration. Group B2 identified three priority information capabilities:

- Providing value-added functionality, including filtering, aggregation, transformation, fusion, and pre- and post-processing QA/QC

- Enabling feedback on version changes, performance measures from the community and from automated value-added services (see above), and communication of suitability-for-use assessments.

- Establishing standard protocols for data and service access. Starting points for these standards are those adopted by OpenGIS and GEOSS, including:

- Web Coverage Service (WCS)

- Web Feature Service (WFS)

- Web Map Service (WMS)
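For a sense of what a shared access protocol looks like in practice, here is a minimal Python sketch that builds a WMS 1.3.0 GetMap request URL. The parameter names follow the OGC WMS 1.3.0 specification; the function name, server address, and layer name are hypothetical placeholders, and a real server advertises its actual layers through its GetCapabilities response.

```python
from urllib.parse import urlencode


def wms_getmap_url(base_url: str, layer: str,
                   bbox: tuple[float, float, float, float],
                   width: int = 800, height: int = 400,
                   fmt: str = "image/png") -> str:
    """Build a WMS 1.3.0 GetMap URL (sketch; names are illustrative).

    bbox is (min_lon, min_lat, max_lon, max_lat) in CRS:84, which uses
    longitude/latitude axis order (EPSG:4326 in WMS 1.3.0 is lat/lon).
    """
    params = {
        "SERVICE": "WMS",
        "VERSION": "1.3.0",
        "REQUEST": "GetMap",
        "LAYERS": layer,
        "STYLES": "",  # empty value requests the server's default style
        "CRS": "CRS:84",
        "BBOX": ",".join(str(v) for v in bbox),
        "WIDTH": width,
        "HEIGHT": height,
        "FORMAT": fmt,
    }
    # urlencode percent-escapes ':', ',' and '/' in the values
    return f"{base_url}?{urlencode(params)}"


if __name__ == "__main__":
    # Hypothetical server and layer covering the contiguous US
    print(wms_getmap_url("https://example.org/wms", "pm25",
                         (-125.0, 24.0, -66.0, 50.0)))
```

Because every compliant server accepts the same request parameters, a client written once can, in principle, talk to any of them; that is the overhead reduction a standard interface is meant to provide.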

The overarching principles that Group B2 defined as important for data processing and integration are that the system must:

- Provide adequate information to end-user application developers to allow appropriate development;

- Provide knowledge and insight in post processing and analysis;

- Accommodate Metadata demands;

- Have reliable and robust value added services; and

- Be stably governed including service level agreements where necessary.

Meshing the capabilities and principles above, Group B2 identified a list of actions and next steps:

- Inreach and outreach. Inreach would increase visibility within partner agencies (EPA was specifically mentioned). Outreach would include providing ‘layman’ explanations and working to relate/link the efforts of this community to parallel groups and projects.

- Continuing this process, including implementing a governance mechanism to convene the community in a way that allows decision making.

- Identifying and executing a pilot; candidates included remounting capabilities of the Health Effects Institute or establishing a CAP for a specific AQ event class.

C2: Visualization / Analysis

Group C2 had responsibility for visualization and analysis and brainstormed a long list of desired capabilities:

- Multiplatform support (flexibility)—ability for user to use with many systems

- Standards-based spatial and temporal capability

- Ability to aggregate by user, including multiscale

- Display and communication of uncertainty

- Sufficient description/annotations of visualization (e.g., units, scales)

- Capabilities for interpolation (spatially & temporally)

- Multiple options for visualization (e.g., multiple ways for interpolation, including no interpolation)

- Recommended default with other options for more advanced users

- Tiered capabilities

- Consistent Color

- Ability to interface/export/translate between standard systems (e.g., Google)

- Ability to discover

- Means to support a defined need or user group

- Include multiple data types and forms (ground, satellite, models) and be able to work at multiple scales

- Multiple Output Forms (e.g., PNG, MPEG, KML/KMZ, Excel, PPT)

Group C2 identified a series of overarching principles that the community must use when considering ‘system’ changes and improvements:

- Diversity of tools and applications is important to assure a robust system; it appeals to specific users and provides flexibility.

- to provide flexibility, the system should place a premium on modularity and portable development.

- visualization and data provided to end users should have scientific credibility, including information on levels of quality and a documented chain of custody and data source.

- to help end users, visualization & analysis products must include interpretation and help files. This is, in part, accomplished by assuring that the ‘system’ knows its users and how they want the information. This type of interface with users could help with defining user requirements, building community, and enabling feedback (upstream and downstream).

- data should be visualized at appropriate spatial and temporal scales for analysis.

To begin making progress towards the capabilities and principles identified above, Group C2 identified several next steps and actions:

- Owners of systems should make services available outside the firewall and put WMS/WCS standards in place (e.g., DataFed, Giovanni).

- Tool providers need to ask for & include lineage of data (be explicit in presentation). Existing systems should make their info available as web services.

- EPA, NOAA, NASA, and CDC should develop an interagency agreement to define their commitment to the process.

- The community must convene to develop guidance on metadata for end users (i.e., an “idiot’s guide” to metadata).

- The community must continue to engage end users through outreach, pilots, and requirements development, and by linking end users back to data generators. As part of this outreach, the community should consider an end-user panel/advisory group.

- To energize the community, and especially the federal contingent, partners should enact/reenergize/update interagency MOUs and begin briefings within agencies, including successful examples/models and an inventory/evaluation of existing systems.

Group Discussion (Top Priorities)

Following the breakout sessions and the report out from the breakout sessions, the group identified common themes and the top priority areas for the community to consider. The participants were each asked to identify up to five themes and priority areas. Participant responses are summarized below.

Metadata

The need for and use of metadata was a predominant theme in the breakout groups and during plenary discussion. The group identified two types of metadata that are important to the community: innate and emergent. Innate metadata is provided by the data provider, needs to be standardized for the entire community, and must be propagated by the system (supplied unchanged on demand). Emergent metadata is user-generated information and may include opinions. The group agreed that, whatever the community ultimately decides, the metadata requirement should leverage what partners have already implemented, should be facilitated by metadata generation tools, and should not be unduly burdensome (“not a huge monster”). The meeting participants identified that a next step would be to investigate and document existing efforts in partner federal agencies (EPA was specifically mentioned).
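The innate/emergent distinction can be sketched as a small data structure. This is a minimal, hypothetical Python illustration, not a schema the summit agreed on: the class names, fields, and example values are all assumptions. Innate metadata is copied once and exposed read-only, so it travels through downstream systems unchanged; emergent annotations are appended separately and flagged as opinion or fact, echoing the public health group's concern about separating the two.

```python
from dataclasses import dataclass
from types import MappingProxyType
from typing import Mapping


@dataclass(frozen=True)
class Annotation:
    """Emergent metadata: a user-contributed note (hypothetical shape)."""
    author: str
    text: str
    is_opinion: bool  # lets later users separate opinion from fact


class MetadataRecord:
    """Hypothetical record pairing innate and emergent metadata."""

    def __init__(self, dataset_id: str, innate: Mapping[str, str]) -> None:
        self.dataset_id = dataset_id
        # Snapshot the provider's metadata and expose it read-only, so
        # later edits to the source dict or by users cannot alter it.
        self._innate = MappingProxyType(dict(innate))
        self._emergent: list[Annotation] = []

    @property
    def innate(self) -> Mapping[str, str]:
        return self._innate

    @property
    def emergent(self) -> tuple[Annotation, ...]:
        return tuple(self._emergent)

    def annotate(self, author: str, text: str, is_opinion: bool = True) -> None:
        """Append user feedback; never touches the innate metadata."""
        self._emergent.append(Annotation(author, text, is_opinion))


if __name__ == "__main__":
    record = MetadataRecord("example-pm25-hourly",
                            {"units": "ug/m3", "detection_limit": "2.0"})
    record.annotate("analyst_a", "Values look high during a wildfire week.")
    print(record.innate["units"], len(record.emergent))
```

Keeping the two kinds in separate containers means the provider's description never needs to be reconciled with user commentary, while the annotation list gives a natural home for the feedback other groups asked for.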

Standards

It was clear that an important next step for the community is to establish a small set of data and technical standards that would enable consistent implementations and ‘blue line’ development/enhancement. The group consensus was that it was important to do this as soon as possible, since having something, even if not a comprehensive list, is better than nothing. The meeting participants identified that an appropriate next step might be to establish and convene a small group of scientific and IT individuals to evaluate data standards, data formats, and technical standards. This group would leverage partner experience (e.g., NOAA, EPA, WMO) and prepare a recommendation for the community to consider.

End User Requirements Development

The summit participants felt that a concerted, increased, and focused effort to include end users in requirements development would substantially benefit the AQ data community. This could take several paths, including hosting meetings between system developers and end users, establishing user groups with connections to application developers, and creating a mechanism to include end users in evaluating overall system performance. This would be a small part of a larger effort to include the end user in more and more of the AQ data community’s work. The group identified that a next step could be to work with the AQ data community to identify end users and pilot one joint requirements development effort, such as the Health Effects Institute remount described above.

Feedback

Every technical breakout group identified the importance of feedback, albeit each with a different flavor. The feedback the AQ community is seeking is person-to-person, agency-to-agency, and system-to-system across multiple subjects, including feedback on data (usage, quality, gaps), models (performance, results, gaps, usability), and systems (performance, development, enhancements, gaps, issues). Each of these feedback relationships implies a different solution and a different set of roles and responsibilities. The group's consensus was that this was extremely important but must be thought out and formalized. The group spent some time discussing the efficacy of the wiki as a tool to enable feedback and concluded that more information was needed.

Sustainability

During the discussion of sustainability, the participants’ consensus was that maintaining some forum like the air quality data summit is very important. The group agreed that one aspect of sustainability is enabling the community to self-organize and operate under the principle of openness. Everybody agreed that establishing a common vision/framework from which community members can make decisions is the only way to ensure that the most prudent decisions (those that benefit the community as a whole) are consistently made. Participants felt that the most pressing next step is to make sure the momentum created by this meeting continues. Next steps could include:

- Investigate Wikis and establishing an ongoing ‘place’ for the community to self-organize.

- Execute an inventory and assessment of existing systems to establish baseline information, e.g., lifecycle, users, ROI to users/agency, data sources, redundancy, and cost.

- Establish a mechanism for community strategic planning and vet a vision for the AQ community with the AQ community.

Governance

The group discussed that, along with the need to build a community that would operate on a voluntary “opt-in” basis, there would also need to be some body constituted with enough decision-making authority to establish stability and confidence in the standards and protocols on which the system would rely. The group discussed a wide variety of possible structures.

Part of the vision is housecleaning, not only money; this gives some credibility to the argument of need. How do we do something formal and keep the right people driving the issue?

Governance Models:

- FACA (new or existing)

- Does EPA already have a FACA that could do that?

- NADP

- CASAC: Clean Air Scientific Advisory Committee (existing FACA)

- Steering committee on ambient methods

- ESIP

- EPA R10: Air consortia (mesoscale modeling through UW and AQ modeling through WSU)

- IMPROVE: Steering committee that governs the IMPROVE program (voluntary)

- NARSTO: Interagency Private Sector Consortium – legal entity with Charter
