Difference between revisions of "WCS Access to netCDF Files"

- Project on SourceForge: http://sourceforge.net/p/aq-ogc-services/home/

- HTAP Network Pilot Final Report: http://geo-aq-cop.org/content/htap-data-network-pilot

| + | ---- | ||

| + | * [[WCS Access to netCDF Files]]: WCS Access to netCDF Files | ||

* [[WCS_Wrapper_Configuration| WCS Wrapper Configuration]]: Common information about the server | * [[WCS_Wrapper_Configuration| WCS Wrapper Configuration]]: Common information about the server | ||

* [[WCS_Wrapper_Configuration_for_Point_Data]]: How to serve SQL-based point data. | * [[WCS_Wrapper_Configuration_for_Point_Data]]: How to serve SQL-based point data. | ||

* [[WCS_Wrapper_Configuration_for_Cubes]]: How to serve NetCDF-CF based cube data. | * [[WCS_Wrapper_Configuration_for_Cubes]]: How to serve NetCDF-CF based cube data. | ||

* [[#Installation_Guides| Installation on Windows and Linux]] | * [[#Installation_Guides| Installation on Windows and Linux]] | ||

| + | * [[WCS_Wrapper_Installation_WindowsOP]] | ||

| + | |||

* [[Creating_NetCDF_CF_Files| How to pack your data to NetCDF Files]] | * [[Creating_NetCDF_CF_Files| How to pack your data to NetCDF Files]] | ||

| − | + | * [[Glossary_for_WCS|Glossary for WCS]] | |

| + | * [[WCS_Development_Issues|Unedited conversations around the design, development and deployment issues]] | ||

* [[WCS_NetCDF_Development]]: Old development page, moving to sourceforge. | * [[WCS_NetCDF_Development]]: Old development page, moving to sourceforge. | ||

| + | * [[WCS_NetCDF-CF_Updates]] | ||

| Line 46: | Line 53: | ||

=== Client WCS 1.1.2 interface === | === Client WCS 1.1.2 interface === | ||

| − | + | '''GetCapabilites'''. The first query is GetCapabilities which returns a Capabilities XML document. It gives a list of heterogeneous coverages. Coverages can have metadata such as keywords representing the coverage (layer). A coverage is a collection of homogenous data. Each coverage has a | |

| − | + | * Coverage Name | |

| − | + | * Metadata and | |

| − | + | * Bounding box | |

| − | * | ||

| − | * | ||

| − | * | ||

| − | |||

| − | |||

| − | |||

| − | * The third call is GetCoverage. It gets you a subset of a coverage, from which coverage, which fields and which spatial-temporal-other dimension subsetting. That query is passed into the 1.1.2 server and it returns the data. | + | The client can use the Capabilities document to select a coverage for further examination. |

| + | |||

| + | '''DescribeCoverage'''. The second query is DescribeCoverage which describes a coverage, it's grids size, time dimension, for points location dimension, and other dimensions like elevation or wavelength. DescribeCoverage is used mainly by the client software to aid accessing the data through GetCoverage. | ||

| + | * Each field contain metadata such as units. | ||

| + | * A coverage can have multiple homogenous fields, which share the same dimensionality. The fields are parameters like PM10 or PM2.5 | ||

| + | * Each field share the grid bounding box and grid size. | ||

| + | * Each field share the time dimension bounding box and grid size. | ||

| + | * It is possible to have a field that is missing a dimension: A field can be aggregated over elevation, wavelength or time, but it will still be matching with the dimensionality of other data. | ||

| + | * It is possible to have a field that has completely different wavelength dimension or elevation dimension. You probably should create a different coverage in this case, since parameters measured on different elevations are not really sharing the dimensionality. | ||

| + | |||

| + | |||

| + | '''GetCoverage'''. The third call is GetCoverage. It gets you a subset of a coverage, from which coverage, which fields and which spatial-temporal-other dimension subsetting. That query is passed into the 1.1.2 server and it returns the data. | ||

WCS 1.1.2 servers can be implemented by whatever means needed. Data can be stored in binary cubes, sql database,... The DataFed server database stores grid data in netCDF cubes and point data in sql database. The return types are netCDF CF 1.0 for grid data and CSV, Comma Separater Values for points. CF 1.5 stationTimeSeries support is coming for point data. | WCS 1.1.2 servers can be implemented by whatever means needed. Data can be stored in binary cubes, sql database,... The DataFed server database stores grid data in netCDF cubes and point data in sql database. The return types are netCDF CF 1.0 for grid data and CSV, Comma Separater Values for points. CF 1.5 stationTimeSeries support is coming for point data. | ||

| Line 64: | Line 76: | ||

===Networking using the netCDF-CF and WCS Protocols=== | ===Networking using the netCDF-CF and WCS Protocols=== | ||

| − | The combination of netCDF-CF and WCS protocols offers the means to create agile, loosely coupled data flow networks based on Service Oriented Architecture (SOA). The netCDF-CF data | + | The combination of netCDF-CF and WCS protocols offers the means to create agile, loosely coupled data flow networks based on Service Oriented Architecture (SOA). The netCDF-CF data format provides a compact, standards-based self-describing data format for transmitting Earth Science data pockets. The OGC WCS protocol supports the Publish, Find, Bind, operations required for service oriented architecture. A network of interoperable nodes can be established for each of the nodes complies with the above standard protocls. |

An important (incomplete) initial set of nodes for the HTAP information network already exist as shown in Figure 3. Each of these nodes is, in effect, is a portal to an array of datasets that they expose through their respective interfaces. Thus, connecting these existing data portals would provide an effective initial approach of incorporating a large fraction of the available observational and model data into the HTAP network. The US nodes DataFed, NEISGEI and Giovanni are already connected through standard (or pseudo-standard) data access services. In other words, data mediated through one of the nodes can be accessed and utilized in a companion node. Similar connectivity is being pursued to the European data portals Juelich, AeroCom, EMEP and others. | An important (incomplete) initial set of nodes for the HTAP information network already exist as shown in Figure 3. Each of these nodes is, in effect, is a portal to an array of datasets that they expose through their respective interfaces. Thus, connecting these existing data portals would provide an effective initial approach of incorporating a large fraction of the available observational and model data into the HTAP network. The US nodes DataFed, NEISGEI and Giovanni are already connected through standard (or pseudo-standard) data access services. In other words, data mediated through one of the nodes can be accessed and utilized in a companion node. Similar connectivity is being pursued to the European data portals Juelich, AeroCom, EMEP and others. | ||

| Line 114: | Line 126: | ||

* The folder becomes a provider. For example in http://128.252.202.19:8080/NASA?service=WCS&version=1.1.2&Request=GetCapabilities the folder is named NASA and all the netcdf files are put into it. | * The folder becomes a provider. For example in http://128.252.202.19:8080/NASA?service=WCS&version=1.1.2&Request=GetCapabilities the folder is named NASA and all the netcdf files are put into it. | ||

* Each NetCDF file becomes a coverage. In this case, they are firepix.nc, INTEX-B-2006.nc, MISR.nc and modis4.nc. Each of these files are completely different in dimensionality. | * Each NetCDF file becomes a coverage. In this case, they are firepix.nc, INTEX-B-2006.nc, MISR.nc and modis4.nc. Each of these files are completely different in dimensionality. | ||

| − | * Each variable in a netcdf file becomes a field. | + | * Each variable in a netcdf file becomes a field. They automatically share the dimensions. |

* it is possible to store netcdf files elsewhere, or even have each day in a different file. The installation instructions contain an example how to configure it. | * it is possible to store netcdf files elsewhere, or even have each day in a different file. The installation instructions contain an example how to configure it. | ||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

== Multiple Servers, Multiple Clients, connected with WCS protocol == | == Multiple Servers, Multiple Clients, connected with WCS protocol == | ||

| Line 140: | Line 146: | ||

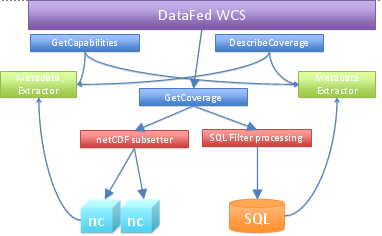

Queries, Metadata Extractors, Subsetters, Backends | Queries, Metadata Extractors, Subsetters, Backends | ||

| + | |||

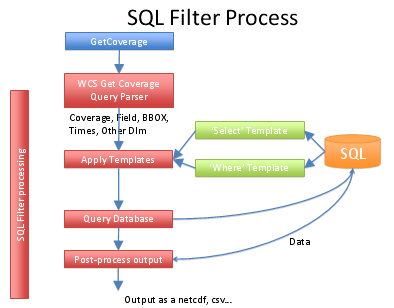

| + | Data Flow for SQL-based Point Data: | ||

| + | |||

| + | [[Image:Point_flow_v1.png|datafed Point WCS data flow]] | ||

| + | |||

| + | |||

| + | |||

| + | Obsolete image: | ||

[[Image:WCS_service.png|datafed WCS structure]] | [[Image:WCS_service.png|datafed WCS structure]] | ||

| Line 164: | Line 178: | ||

</Axis> | </Axis> | ||

| − | According the standard, <Key> element should contain just a loc_code, in this case it contains WFS GetFeature query for the whole location table. Since point data is relative novelty in WCS, this could become a convention for points. The | + | According the standard, <Key> element should contain just a loc_code, in this case it contains WFS GetFeature query for the whole location table. Since point data is relative novelty in WCS, this could become a convention for points. The "Meaning" node is used to indicate, that this is a location dimension. |

* GetCoverage | * GetCoverage | ||

| Line 192: | Line 206: | ||

* [[WCS_Wrapper_Installation_LinuxOP|Installation on Linux]] | * [[WCS_Wrapper_Installation_LinuxOP|Installation on Linux]] | ||

| − | ** [http://pocus.wustl.edu:81 Test server Linux platform (this will link to a live server) ] | + | |

| + | OUT OF DATE, BEING UPDATED | ||

| + | |||

| + | ** [http://pocus.wustl.edu:81 Test server Linux platform (this will link to a live server) ] | ||

**[http://pocus.wustl.edu:81/HTAP?service=WCS&acceptversions=1.1.2&Request=GetCapabilities GetCapabilities] | **[http://pocus.wustl.edu:81/HTAP?service=WCS&acceptversions=1.1.2&Request=GetCapabilities GetCapabilities] | ||

**[http://niceguy.wustl.edu:81/HTAP?service=WCS&version=1.1.2&Request=DescribeCoverage&identifier=GEMAQ-v1p0 DescribeCoverage] | **[http://niceguy.wustl.edu:81/HTAP?service=WCS&version=1.1.2&Request=DescribeCoverage&identifier=GEMAQ-v1p0 DescribeCoverage] | ||

Latest revision as of 12:07, July 29, 2012

Back to Interoperability of Air Quality Data Systems

- WCS Access to netCDF Files: WCS Access to netCDF Files

- WCS Wrapper Configuration: Common information about the server

- WCS_Wrapper_Configuration_for_Point_Data: How to serve SQL-based point data.

- WCS_Wrapper_Configuration_for_Cubes: How to serve NetCDF-CF based cube data.

- Installation on Windows and Linux

- WCS_Wrapper_Installation_WindowsOP

- How to pack your data to NetCDF Files

- Glossary for WCS

- Unedited conversations around the design, development and deployment issues

- WCS_NetCDF_Development: Old development page, moving to sourceforge.

- WCS_NetCDF-CF_Updates

Introduction

The purpose of this effort is to create a portable software template for accessing netCDF-formatted data using the WCS protocol. Using that protocol allows any WCS-compliant client software to access the stored data. It is hoped that the standards-based data access service will promote the development and use of distributed data processing and analysis tools.

The initial effort is focused on developing and applying the WCS wrapper template to the HTAP global ozone model outputs created for the HTAP global model comparison study. These model outputs are being managed by Martin Schultz's group at Forschungs Zentrum Juelich, Germany. It is hoped that following the successful implementation at Juelich, the WCS interface could also be implemented at Michael Schulz's AeroCom server that archives the global aerosol model outputs.

It is proposed that the HTAP data information system adopt the Web Coverage Service (WCS) as the standard data query language. The adoption of a set of interoperability standards is a necessary condition for building an agile data system from loosely coupled components for HTAP. During 2006/2007, members of the HTAP TF made considerable progress in evaluating and selecting suitable standards. They also participated in the extension of several international standards, most notably standard names (CF Convention), data formats (netCDF-CF) and a standard data query language (OGC Web Coverage Service, WCS).

A recent addition (July 16th, 2010) implements point data as a supported data type. If the data is collected at regular intervals from a network of stations and is stored in a SQL database, the implementation provides templates for configuring the WCS interface.

The netCDF-CF Data Format

The netCDF-CF file format is a common way of storing and transferring gridded meteorological and air quality model results. The CF convention for structuring and naming of netCDF-formatted data further enhances the semantics of the netCDF files. Most of the recent model outputs are conformant with netCDF-CF. The netCDF-CF convention is a key step toward standard-based storage and transmission of Earth Science data.

The netCDF-CF data format is supported by a robust set of well-documented and maintained low-level libraries for creating, maintaining and accessing data in that format on multiple platforms (Linux, Windows). The low-level libraries provided by UNIDATA also offer a clear application programming interface (API). On the server side, the libraries can be used to create and to subset the netCDF data files. On the client side, the libraries allow easy access to the transmitted netCDF contents. Thus, both the data servers and the application developers are enabled by the robust netCDF libraries.

The existing names for atmospheric chemicals in the CF convention were inadequate to accommodate all the parameters used in the HTAP modeling. In order to remedy this shortcoming, the list of standard names was extended by the HTAP community under the leadership of C. Textor. She also became a member of the CF convention board that is the custodian of the standard names. The standard names for HTAP models were developed using a collaborative wiki workspace. It should be noted, however, that at this time the CF naming convention has only been developed for the model parameters and not for the various observational parameters. (See Textor, need a better paragraph). The naming of individual chemical parameters will follow the CF convention used by the Climate and Forecast (CF) communities.

The netCDF CF data format is most useful for the exchange of multidimensional gridded model data. It was also demonstrated that the netCDF format is well suited for the encoding and transfer of station monitoring data. Traditionally, satellite data were encoded and transferred using the HDF format. The new netCDF version 4 (beta) library provides a common API for netCDF and HDF-5 data formats.

Data access through OGC WCS protocol

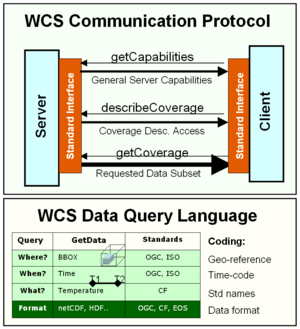

The WCS protocol consists of a communications protocol and a data query language. The WCS data access protocol is defined by the international Open Geospatial Consortium (OGC), which is also the key organization responsible for interoperability standards in GEOSS. Since the WCS protocol was originally developed for the GIS community, it was necessary to adapt it to the needs of "Fluid Earth Sciences". The Earth Science Community has actively pursued the adaptation and testing of the WCS interoperability standards, spearheaded by the Unidata-driven GALEON interoperability program.

The WCS communications protocol consists of three service calls: getCapabilities, describeCoverage, getCoverage. The combination of the three services permits the linking of WCS clients and servers using loosely coupled connections consistent with Service Oriented Architecture (SOA). The client first invokes the getCapabilities service, which returns an XML file listing the datasets (coverages) offered by the server. Given the list, the client then selects a particular coverage and issues a describeCoverage request to the server. The returned XML file describes the specific coverage and also contains specific data access instructions for that coverage. The main data query service is getCoverage, in which the user specifies the desired data subsets as well as the desired data format. In our case, the return format is netCDF-CF, which can be accessed and manipulated by the client-side netCDF libraries.

The WCS getCoverage service incorporates a data query language to request specific data from the server. The data queries are formulated in terms of physical coordinates, i.e. a space-time query consisting of (1) the geographic bounding box, (2) the time range, (3) other dimensions like wavelength, elevation or, for point data, location, and (4) the parameter (coverage).
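The three service calls described above can be sketched as plain HTTP GET URLs. This is a minimal illustration, not part of the wrapper software: the base URL `http://example.org/HTAP` and the helper name `wcs_url` are hypothetical, while the parameter names and sample values are taken from the sample calls quoted elsewhere on this page.

```python
from urllib.parse import urlencode


def wcs_url(base, request, **params):
    """Build a WCS 1.1.2 key-value-pair URL (hypothetical helper)."""
    query = {"service": "WCS", "version": "1.1.2", "Request": request}
    query.update(params)
    # Keep the delimiters used inside WCS values readable instead of
    # percent-encoding them.
    return base + "?" + urlencode(query, safe=",:/[];*")


BASE = "http://example.org/HTAP"  # hypothetical server and provider

# 1. List the coverages offered by the server.
capabilities_url = wcs_url(BASE, "GetCapabilities")

# 2. Describe one coverage chosen from the Capabilities document.
describe_url = wcs_url(BASE, "DescribeCoverage", identifiers="GEMAQ-v1p0")

# 3. Request a space-time subset of that coverage as netCDF.
coverage_url = wcs_url(
    BASE,
    "GetCoverage",
    identifier="GEMAQ-v1p0",
    BoundingBox="-180,-90,180,90,urn:ogc:def:crs:OGC:2:84",
    TimeSequence="2001-01-01/2001-06-01",
    RangeSubset="*",
    format="image/netcdf",
)

print(capabilities_url)
print(describe_url)
print(coverage_url)
```

Each URL can be pasted into a browser or fetched by any HTTP client, which is what keeps the client and server loosely coupled.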

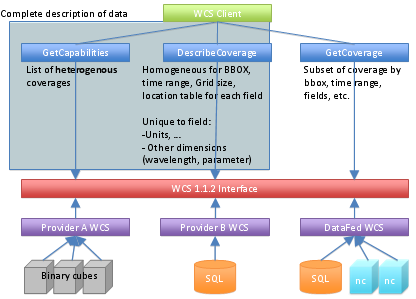

Client WCS 1.1.2 interface

GetCapabilities. The first query is GetCapabilities, which returns a Capabilities XML document. It gives a list of heterogeneous coverages. Coverages can have metadata such as keywords representing the coverage (layer). A coverage is a collection of homogeneous data. Each coverage has a

- Coverage Name

- Metadata and

- Bounding box

The client can use the Capabilities document to select a coverage for further examination.

DescribeCoverage. The second query is DescribeCoverage, which describes a coverage: its grid size, its time dimension, for points its location dimension, and other dimensions like elevation or wavelength. DescribeCoverage is used mainly by the client software to aid in accessing the data through GetCoverage.

- Each field contains metadata such as units.

- A coverage can have multiple homogeneous fields, which share the same dimensionality. The fields are parameters like PM10 or PM2.5.

- Each field shares the grid bounding box and grid size.

- Each field shares the time dimension bounding box and grid size.

- It is possible to have a field that is missing a dimension: a field can be aggregated over elevation, wavelength or time, and it will still match the dimensionality of the other data.

- It is possible to have a field that has a completely different wavelength or elevation dimension. In this case you should probably create a separate coverage, since parameters measured at different elevations do not really share the dimensionality.

GetCoverage. The third call is GetCoverage. It returns a subset of a coverage; the query names the coverage, the fields, and the spatial, temporal and other dimension subsetting. The query is passed to the 1.1.2 server, which returns the data.

WCS 1.1.2 servers can be implemented by whatever means needed. Data can be stored in binary cubes, a SQL database, and so on. The DataFed server stores grid data in netCDF cubes and point data in a SQL database. The return types are netCDF CF 1.0 for grid data and CSV (Comma Separated Values) for points. CF 1.5 stationTimeSeries support is coming for point data.

Networking using the netCDF-CF and WCS Protocols

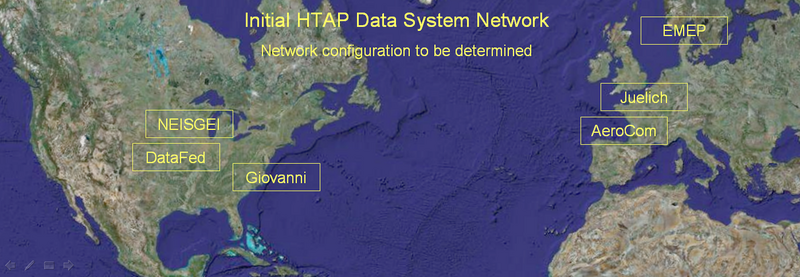

The combination of netCDF-CF and WCS protocols offers the means to create agile, loosely coupled data flow networks based on Service Oriented Architecture (SOA). The netCDF-CF data format provides a compact, standards-based, self-describing data format for transmitting Earth Science data packets. The OGC WCS protocol supports the Publish, Find, Bind operations required for service oriented architecture. A network of interoperable nodes can be established if each of the nodes complies with the above standard protocols.

An important (incomplete) initial set of nodes for the HTAP information network already exists, as shown in Figure 3. Each of these nodes is, in effect, a portal to an array of datasets that it exposes through its interface. Thus, connecting these existing data portals would provide an effective initial approach to incorporating a large fraction of the available observational and model data into the HTAP network. The US nodes DataFed, NEISGEI and Giovanni are already connected through standard (or pseudo-standard) data access services. In other words, data mediated through one of the nodes can be accessed and utilized in a companion node. Similar connectivity is being pursued to the European data portals Juelich, AeroCom, EMEP and others.

Figure 3. Initial HTAP Information Network Configuration

(Here we could say a few words about each of the main provider nodes) Federated Data System DataFed; NASA Data System Giovanni; Emission Data System NEISGEI; Juelich Data System; AeroCom; EMEP.

Design goals of WCS-netCDF Wrapper:

- Promote WCS as standard interface.

- Promote NetCDF and CF-1.x conventions

- Make it easy to deliver your data via WCS from your own server or workstation.

- No intrusion: You can have the NetCDF files where you want them to be.

- A normal PC is enough, no need to have a server.

- Minimal configuration

The purpose of the wrapper software is to respond to three HTTP GET service queries.

The WCS-netCDF wrapper software was developed for DataFed using Python 2.6, C++ and lxml 2.2.4, numpy 1.4.1, HDF5 1.8.4 and NetCDF 4.1.2-beta1 libraries. Linux port is mainly developed at Juelich adding PyNIO 1.2.3.

Public test servers have been prepared for both platforms. The software is free and open source, licensed under the MIT License, which does not require a lawyer to interpret it for you.

Wrapper software description and installation

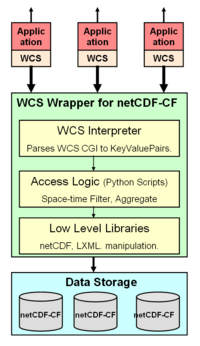

The WCS wrapper for netCDF software has a triple functionality:

- Accessing netCDF-CF file contents over the HTTP GET Internet protocol

- Imposing a standard data query language using the WCS standard

- Allowing easy (non-intrusive) adaptation to evolving standards.

The low-level netCDF libraries provided by UNIDATA offer an Application Programming Interface (API) for netCDF files. These libraries can be called from application development programming languages such as Java and C. The API facilitates creating and accessing netCDF data files within an operating environment but not over the internet. In other words, the API is a standard library interface, not a web service interface to netCDF data.

However, such libraries are not adequate to access user-specified data subsets over the Web. For standardized web-based access, another layer of software is required to connect the high-level user queries to the low-level interface of the netCDF libraries. We call this interface the WCS-netCDF Wrapper.

The main components of the wrapper software are shown schematically in the figure. At the lowest level are open source libraries for accessing netCDF and XML files. At the next level are Python scripts for extracting spatially subset slices for specific parameters and times. At the third level is the WCS interpreter that parses the WCS URL.

The Capabilities and Description files are created automatically from the NetCDF files, but you can provide a template containing information about your organization, contacts and other metadata.

The library also has some useful features for Python programmers who need access to NetCDF files. The datafed.nc3 module provides wrappers to all the essential C calls, and the module datafed.iso_time helps in interpreting ISO 8601 time range encoding.
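To illustrate what interpreting an ISO 8601 time range involves, here is a minimal sketch using only the Python standard library. It is not the datafed.iso_time API, whose functions are not documented on this page; the function name `parse_time_range` is hypothetical. It handles the `start/end` form used in the TimeSequence examples and a single instant, but not ranges with a periodicity part.

```python
from datetime import datetime


def parse_time_range(text):
    """Parse 'start/end' or a single instant into (start, end) datetimes.

    Hypothetical helper for illustration only.
    """
    def parse_instant(value):
        # datetime.fromisoformat rejects a trailing 'Z' before Python 3.11,
        # so drop it; the result is a naive datetime understood as UTC.
        return datetime.fromisoformat(value.rstrip("Z"))

    start_text, _, end_text = text.partition("/")
    start = parse_instant(start_text)
    end = parse_instant(end_text) if end_text else start
    return start, end


start, end = parse_time_range("2001-01-01/2001-06-01")
print(start, end)

instant = parse_time_range("2007-04-07T00:00:00Z")
print(instant)
```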

Sample Configuration of NetCDF files.

All you need is a bunch of netCDF files following CF convention.

- The folder becomes a provider. For example in http://128.252.202.19:8080/NASA?service=WCS&version=1.1.2&Request=GetCapabilities the folder is named NASA and all the netcdf files are put into it.

- Each NetCDF file becomes a coverage. In this case, they are firepix.nc, INTEX-B-2006.nc, MISR.nc and modis4.nc. Each of these files is completely different in dimensionality.

- Each variable in a netcdf file becomes a field. They automatically share the dimensions.

- It is possible to store netCDF files elsewhere, or even have each day in a different file. The installation instructions contain an example of how to configure this.
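The folder-to-provider and file-to-coverage mapping above can be sketched with the standard library alone. This is an illustration of the naming convention only, not the wrapper's own metadata extractor: the function name `discover_coverages` is hypothetical, and the mapping of variables to fields is left out because it requires opening the files with a netCDF library.

```python
from pathlib import Path


def discover_coverages(root):
    """Map each provider folder under root to its coverage names.

    A coverage name is taken to be the netCDF file name without the
    '.nc' extension (an assumption made for this sketch).
    """
    providers = {}
    for folder in sorted(Path(root).iterdir()):
        if folder.is_dir():
            providers[folder.name] = sorted(p.stem for p in folder.glob("*.nc"))
    return providers


if __name__ == "__main__":
    # Prints something like {'NASA': ['INTEX-B-2006', 'MISR', ...]} when
    # the current directory contains a NASA folder with netCDF files.
    print(discover_coverages("."))
```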

Multiple Servers, Multiple Clients, connected with WCS protocol

Interoperability can be achieved via a good standard, one that addresses the important issues and is not too complicated to implement. WMS has achieved this goal, and WCS can achieve it too.

High level view of WCS protocol

- With GetCapabilities and DescribeCoverage queries the client can get all the metadata, so that the GetCoverage query can be made without guesswork. The client can zoom in to the desired area, time, and parameter, without searching.

- WCS Client can be anything that understands WCS 1.1.2: a human with web browser, data consuming application, datafed viewer.

- The most important standard here is the return data format: regardless of whether the data is stored in netCDF-CF files, netCDF files with another convention, binary cubes, a SQL DBMS or something else, the returned data is formatted according to a precise standard. For grids it is CF 1.0 or later, for points CF 1.5 stationTimeSeries.

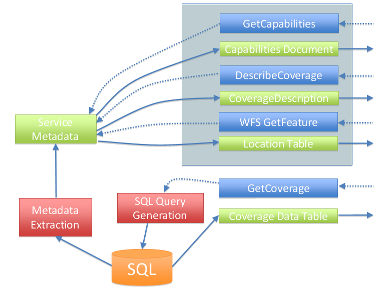

Architecture of datafed WCS server

Queries, Metadata Extractors, Subsetters, Backends

Data Flow for SQL-based Point Data:

Obsolete image:

- Backends, the system supports 2 data storages.

- netCDF-CF for grids, access library written by Unidata.

- Any SQL DBMS for points.

- They are nothing else, just plain data. For SQL there's no requirement for a standard schema. Adding SQL views you can configure the database to match an existing access component, and if that's not possible, a custom access component can be written.

- Metadata Extraction

- For netCDF-CF the built-in utility reads through all the files and stores all the metadata in a file for fast retrieval.

- If there was a CF-like convention for SQL DB schema, it would be possible to extract also point metadata automatically. Currently the hand-edited configuration just lists all the metadata: coverages, fields, dimensions, keywords, datatypes, units and access information. If the data is stored in netCDF CF 1.5 stationTimeSeries format, automatic extraction is possible.

- DescribeCoverage Convention for Point Data

- Using the extracted metadata, the WCS server can create the coverage descriptions. DescribeCoverage contains all the metadata necessary for creating proper filters for execution.

- The standard describes enumerated dimensions with the dimension values only. Point data locations need to have loc_code, lat and lon fields too, and WCS does not have a good place for them. The datafed WCS uses the Web Feature Service, WFS, to publish the location table:

<Axis identifier="location">

<AvailableKeys>

<Key>http://128.252.202.19:8080/point?service=WFS&Version=1.0.0&Request=GetFeature&typename=SURF_MET&outputFormat=text/csv</Key>

</AvailableKeys>

<ows11:Meaning>location</ows11:Meaning>

</Axis>

According to the standard, the <Key> element should contain just a loc_code; in this case it contains a WFS GetFeature query for the whole location table. Since point data is a relative novelty in WCS, this could become a convention for points. The "Meaning" node is used to indicate that this is a location dimension.

- GetCoverage

- For netCDF the extractor subsets the file and returns exactly the same kind of file with all the metadata, with dimensions trimmed according to the filters and with only the selected variables.

- With points using SQL as backend the WCS query is turned into an SQL query.

Processing of custom SQL database

- The query is parsed. The GetCoverage query is parsed and the result is a query object, with filters for each dimension in an easy to access format. This is a generic operation, not implementation specific.

- The select templates are generated. The simplest way to create a template is to create a view in the DB that lists all the result fields: loc_code, lat, lon, datetime, data field, data flags. In this manner the template is simply the field name, and the engine can just write select loc_code, lat, lon, [datetime], dewpoint from dewpoint_view. If there is no such view, then the template must contain the data table and the field name, so that a proper join can be generated.

- The filter templates are generated. Again, if there is a flat view of the data, the engine can just write where [datetime] between '2008-05-01' and '2008-06-01'

- Now the SQL query is ready and can be executed, resulting in a set of rows.

- These rows are then forwarded to a post-processing component, that writes either netCDF, CSV, XML or whatever the user requested.

- Note: For point data, the location dimension is filtered like &RangeSubset=SO4f[location[ACAD1;GRSM1;YELL1]]. This means "Get data field SO4f filtered by location dimension, select locations ACAD1, GRSM1 and YELL1.". This is according to standard, same form as filtering by any other dimension like elevation or wavelength.
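The parse, select-template and filter-template steps above can be sketched as follows. This is a simplified illustration and not the datafed engine: the function names and the view name `SO4f_view` are hypothetical (modelled on the `dewpoint_view` example), only a single field with at most one location filter is handled, and the field and view names are assumed to come from trusted server configuration because SQL identifiers cannot be bound as parameters.

```python
import re


def parse_range_subset(text):
    """Parse 'FIELD' or 'FIELD[axis[k1;k2;...]]' into (field, filters)."""
    match = re.fullmatch(r"(\w+)(?:\[(\w+)\[([^\]]*)\]\])?", text)
    if match is None:
        raise ValueError("unsupported RangeSubset: %r" % text)
    field, axis, keys = match.groups()
    filters = {axis: keys.split(";")} if axis else {}
    return field, filters


def build_point_query(field, view, time_range, filters):
    """Return (sql, params) for a flat view with the columns listed above."""
    # Select template: with a flat view it is just the field name.
    sql = (
        "select loc_code, lat, lon, [datetime], %s from %s"
        " where [datetime] between ? and ?" % (field, view)
    )
    params = list(time_range)
    # Filter template for the location dimension, if requested.
    locations = filters.get("location", [])
    if locations:
        sql += " and loc_code in (%s)" % ", ".join("?" for _ in locations)
        params.extend(locations)
    return sql, params


field, filters = parse_range_subset("SO4f[location[ACAD1;GRSM1;YELL1]]")
sql, params = build_point_query(
    field, "SO4f_view", ("2008-05-01", "2008-06-01"), filters
)
print(sql)
print(params)
```

Passing the time range and location codes as bound parameters rather than pasting them into the SQL text keeps user-supplied query values out of the statement itself.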

Installation Guides

OUT OF DATE, BEING UPDATED

Reports

HTAP Network Project: June-July 2009 Progress Report by CAPITA

The initial effort was focused on developing and applying the WCS wrapper template to the HTAP global ozone model outputs created for the HTAP global model comparison study. These model outputs are being managed by Martin Schultz's group at Forschungs Zentrum Juelich, Germany.

In May, June and July 2009, WUSTL and FZ Juelich collaborated on the implementation of the WCS service wrapper for the model data curated in Juelich. The WUSTL data wrapper code was ported to a server at Juelich and debugged over several iterations. However, several inconsistencies were found that required reconciliation. The co-development of the WCS wrapper code was facilitated by a shared programming environment with automatic synchronization of the developing code.

Michael Decker of the Juelich group has added significant improvements and adaptations to the wrapper code that made it consistent with the Juelich model netCDF files. As an outcome of this first phase, a test model dataset has been published on the Juelich server. Subsequently, the dataset was successfully registered on DataFed and made accessible for browsing and further processing.

In July, contact was also established with Greg Leptoukh at NASA Goddard, for expanding the WCS - netCDF implementation to the satellite datasets served through the Giovanni node of the HTAP data sharing network. In particular, the Giovanni Team was invited to participate in the collaborative development and testing of the WCS services. The open collaborative workspace for this HTAP Network effort is accessible through the ESIP Wiki http://wiki.esipfed.org/index.php?title=WCS_Access_to_netCDF_Files

Evaluation of Juelich WCS: August 2009 Report by Giovanni

The focus of this study is to evaluate and compare WCS standards used by the Open Geospatial Consortium (OGC), Juelich, DataFed, and Giovanni. A minimum set of WCS standards is to be developed in conjunction with Juelich and DataFed. Click on Giovanni's current Media:Minimum Set WCS Standards.doc.

Juelich’s “HTAP server project” monthly progress report (July 2009) provides the following URL for accessing the Juelich server: http://htap.icg.kfa-juelich.de:58080? Michael Decker provided instructions to explore Juelich’s “HTAP_monthly” test dataset. The following are sample calls to:

GetCapabilities

http://htap.icg.kfa-juelich.de:58080/HTAP_monthly?service=WCS&version=1.1.0&Request=GetCapabilities

DescribeCoverage

http://htap.icg.kfa-juelich.de:58080/HTAP_monthly?service=WCS&version=1.1.0&Request=DescribeCoverage&identifiers=ECHAM5-HAMMOZ-v21_SR1_tracerm_2001

and GetCoverage

http://htap.icg.kfa-juelich.de:58080/HTAP_monthly?service=WCS&version=1.1.0&Request=GetCoverage&identifier=ECHAM5-HAMMOZ-v21_SR1_tracerm_2001&BoundingBox=-180,-90,180,90,urn:ogc:def:crs:OGC:2:84&TimeSequence=2001-01-01/2001-06-01&RangeSubset=*&format=image/netcdf&store=true
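
The three sample calls above share the same key-value-pair layout, so they can be assembled programmatically. The following is a minimal sketch, not part of the wrapper code: the helper name `build_wcs_url` is hypothetical, and the endpoint and parameter values are copied from the sample GetCoverage call.

```python
from urllib.parse import urlencode

# Endpoint taken from the sample calls above.
BASE_URL = "http://htap.icg.kfa-juelich.de:58080/HTAP_monthly"


def build_wcs_url(base_url, request, **params):
    """Return a WCS 1.1.0 key-value-pair URL for the given request type.

    build_wcs_url is a hypothetical helper, not part of the wrapper.
    """
    query = {"service": "WCS", "version": "1.1.0", "Request": request}
    query.update(params)
    # Leave ',', ':', '/' and '*' unescaped so the URL reads like the samples.
    return base_url + "?" + urlencode(query, safe=",:/*")


get_coverage_url = build_wcs_url(
    BASE_URL,
    "GetCoverage",
    identifier="ECHAM5-HAMMOZ-v21_SR1_tracerm_2001",
    BoundingBox="-180,-90,180,90,urn:ogc:def:crs:OGC:2:84",
    TimeSequence="2001-01-01/2001-06-01",
    RangeSubset="*",
    format="image/netcdf",
    store="true",
)
print(get_coverage_url)
```

Calling `build_wcs_url(BASE_URL, "GetCapabilities")` yields the GetCapabilities URL in the same way.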

The Juelich WCS test server may also be accessed through the DataFed client (http://webapps.datafed.net/datafed.aspx?dataset_abbr=HTAPTest_G). A sample GetCoverage URL may be obtained by selecting the “WCS Query” button:

http://webapps.datafed.net/HTAPTest_G.ogc?SERVICE=WCS&REQUEST=GetCoverage&VERSION=1.0.0&CRS=EPSG:4326&COVERAGE=NCAS_vmr_no2&TIME=2001-05-01T00:00:00&BBOX=-180,-90,180,90,0.996998906135559,0.00460588047280908&WIDTH=10000&HEIGHT=10000&DEPTH=1&FORMAT=NetCDF

Sample calls for non-HTAP Giovanni data are as follows:

GetCapabilities:

http://gdata1.gsfc.nasa.gov/daac-bin/G3/giovanni-wcs.cgi?SERVICE=WCS&WMTVER=1.0.0&REQUEST=GetCapabilities

DescribeCoverage:

http://gdata1.gsfc.nasa.gov/daac-bin/G3/giovanni-wcs.cgi?SERVICE=WCS&WMTVER=1.0.0&REQUEST=DescribeCoverage&COVERAGE=MYD08_D3.005::Optical_Depth_Land_And_Ocean_Mean

GetCoverage:

http://gdata1.gsfc.nasa.gov/daac-bin/G3/giovanni-wcs.cgi?SERVICE=WCS&WMTVER=1.0.0&REQUEST=GetCoverage&COVERAGE=MYD08_D3.005::Optical_Depth_Land_And_Ocean_Mean&BBOX=-90.0,-45.0,90.0,45.0&TIME=2007-04-07T00:00:00Z&FORMAT=NetCDF

Action Item [Giovanni/Juelich/DataFed]: Use the same CRS URN (Uniform Resource Name) in all servers and clients. There are two major inconsistencies in the current WCS server/client implementations. The first is the incorrect axis order for latitude and longitude. The first axis of EPSG:4326 is latitude, not longitude (see EPSG 6422 for the Ellipsoidal CS definition). Thus, when this CRS is used, the bounding box values should be listed as "minLat,minLon,maxLat,maxLon". The second is the exact location of the bounding box values. WCS version 1.1.x explicitly states that bounding box values define the locations of grid POINTS, not the edges of grid cells. Thus the WGS 84 bounding box for a data set of 180 rows by 360 columns with 1 degree spacing is "-179.5, -89.5, 179.5, 89.5", NOT "-180,-90,180,90". The latter would require the data to have 181 rows and 361 columns. This interpretation should also apply to WCS v1.0.0, although it is not explicitly stated in the v1.0.0 specification.
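
The arithmetic behind the grid-point bounding box can be checked with a short sketch. It assumes a global, regularly spaced grid whose points sit at cell centers; the function names are hypothetical and only illustrate the two conventions discussed above (point locations versus cell edges, and longitude-first versus latitude-first order).

```python
def grid_point_bbox(n_rows, n_cols, spacing, west_edge=-180.0, south_edge=-90.0):
    """Bounding box of the grid POINTS of a regular grid, longitude first.

    Assumes the points are cell centers, so the first point lies half a
    grid spacing inside the western and southern edges.
    """
    min_lon = west_edge + spacing / 2.0
    min_lat = south_edge + spacing / 2.0
    max_lon = min_lon + (n_cols - 1) * spacing
    max_lat = min_lat + (n_rows - 1) * spacing
    return (min_lon, min_lat, max_lon, max_lat)


def to_lat_lon_order(bbox):
    """Reorder (minLon, minLat, maxLon, maxLat) for a latitude-first CRS."""
    min_lon, min_lat, max_lon, max_lat = bbox
    return (min_lat, min_lon, max_lat, max_lon)


bbox = grid_point_bbox(n_rows=180, n_cols=360, spacing=1.0)
print(bbox)                    # (-179.5, -89.5, 179.5, 89.5)
print(to_lat_lon_order(bbox))  # (-89.5, -179.5, 89.5, 179.5)
```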

Action Item [Giovanni]: Change WMTVER keyword to VERSION on the server side.

Action Item [Giovanni]: Add "CRS=EPSG:4326" in the request when Giovanni acts as a client or always require the KVP when acting as a server.

Initial comparisons between Juelich, DataFed, and Giovanni’s GetCoverage reveal that while DataFed and Giovanni use WCS VERSION 1.0.0, the Juelich server utilizes 1.1.0. Furthermore, the most obvious result of our comparison is that the Juelich server does not return a NetCDF file. Instead, a small XML envelope is returned, which contains metadata and a pointer to the result. This occurs because Juelich’s server currently only supports a value of “true” for the STORE parameter. Juelich has noted that in the future “store=false” may be required to return a MIME multipart message with both the XML and the NetCDF included, from which the client could extract the NetCDF file. To accommodate the current configuration, Giovanni may opt to simply set “store=true” in the GetCoverage call. Additionally, WCS version checking will become mandatory to ensure backward compatibility.

Action Item [Giovanni]: Place “store=true” in GetCoverage call.

Action Item [Giovanni]: Implement a WCS version checking mechanism.

Michael Decker, of Juelich, acknowledges the need to rectify the SERVICE parameter being ignored in GetCoverage.

Action Item [Juelich/DataFed]: Include SERVICE parameter in GetCoverage.

The CRS value of EPSG:4326 is utilized by all three parties. However, the Juelich server follows the 1.1.0 convention and appends the CRS to the end of the BoundingBox value.

A difference in the parameter naming convention between WCS 1.0.0 and 1.1.0 is BBOX/BoundingBox. A discrepancy with respect to longitudes was noticed while probing a NetCDF file that was obtained by accessing the URL that contains the link to a Juelich test file. As recommended for the “HTAP_monthly” test dataset, “BoundingBox” was set to “-180,-90,180,90”. However the file revealed that only longitudes of 0 through 180 were embedded in the file. In order to obtain all longitudes, the start and end values have to be set to 0 and 360. This is because a majority of Juelich files are on a 0 to 360 degree grid. DataFed states that plans are underway to support both longitude styles of 0 to 360 and -180 to 180 regardless of how the data is stored. The CRS specifications for the Juelich server will need to be modified in order to be consistent with their longitude value range.

Action Item [Juelich/DataFed]: The CRS specifications need to be modified to correctly set the longitude value range. Currently, the BoundingBox value contains WGS84 in the URN, which implies that the longitude range is from -180 to 180 (see EPSG code 1262 for detailed information). There are two alternatives to correct this: 1) use a CRS URN that defines the longitude range as [0,360]; or 2) change the server to advertise, to accept client requests, and to label the output using the [-180,+180] longitude range. Option (2) is perhaps the easier way to go. If (2) is used, the server doesn't have to rotate the original data array, but it must return the output coverage using the [-180,+180] range.
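
Option (2) amounts to two small conversions: relabeling stored longitudes into the signed range, and translating a signed request into the stored 0 to 360 range. The sketch below illustrates both under the assumption of a simple longitude axis in degrees; the function names are hypothetical and this is not the server's actual implementation.

```python
def to_signed_longitude(lon):
    """Relabel a longitude in degrees into the half-open range [-180, 180)."""
    return (lon + 180.0) % 360.0 - 180.0


def storage_intervals(min_lon, max_lon):
    """Intervals on a 0..360 stored grid that cover a request in [-180, 180].

    A request that crosses the 0-degree meridian needs two intervals,
    because the stored array starts at 0 rather than at -180.
    """
    if min_lon >= 0:
        return [(min_lon, max_lon)]
    if max_lon <= 0:
        return [(min_lon + 360.0, max_lon + 360.0)]
    return [(min_lon + 360.0, 360.0), (0.0, max_lon)]


print(to_signed_longitude(190.0))        # -170.0
print(storage_intervals(-180.0, 180.0))  # [(180.0, 360.0), (0.0, 180.0)]
print(storage_intervals(-30.0, 40.0))    # [(330.0, 360.0), (0.0, 40.0)]
```

This also shows why a request of -180 to 180 against the unconverted 0 to 360 grid returned only longitudes 0 through 180: only the second interval overlaps the stored range.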

Both Juelich and DataFed use the dataset as the WCS server name for the COVERAGE/identifier parameter value. Both Hualan and Wenli suggest that Giovanni WCS either use a look-up table or specify how to link the coverage name used in WCS and the actual product or data files in the archive. The result file naming convention appears to be the same for the original HTAP files that exist in Giovanni’s local directory and those returned from the Juelich server. The following format was verified by Michael Decker: ${model}_${scenario}_${filetype}_${year}.
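
As an illustration of the naming convention, the sketch below splits a coverage identifier into its four parts and resolves it through a look-up table. The helper name, the table, and the archive path are hypothetical; only the identifier and the ${model}_${scenario}_${filetype}_${year} pattern come from the text.

```python
from collections import namedtuple

CoverageName = namedtuple("CoverageName", "model scenario filetype year")


def parse_coverage_name(identifier):
    """Split '${model}_${scenario}_${filetype}_${year}' into its four parts."""
    # Split from the right so the model part stays whole even if it were to
    # contain underscores (an assumption; the sample model name has none).
    model, scenario, filetype, year = identifier.rsplit("_", 3)
    return CoverageName(model, scenario, filetype, int(year))


# Hypothetical look-up table from coverage identifier to archive file.
COVERAGE_FILES = {
    "ECHAM5-HAMMOZ-v21_SR1_tracerm_2001":
        "/hypothetical/archive/ECHAM5-HAMMOZ-v21_SR1_tracerm_2001.nc",
}

name = parse_coverage_name("ECHAM5-HAMMOZ-v21_SR1_tracerm_2001")
print(name.model, name.scenario, name.filetype, name.year)
print(COVERAGE_FILES["ECHAM5-HAMMOZ-v21_SR1_tracerm_2001"])
```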

Action Item [Giovanni]: Giovanni WCS should either use a look-up table or specify how to link the coverage name used in WCS and the actual product or data files in the archive.

One of the differences between WCS 1.0.0 and 1.1.0 is the TIME (1.0.0) / TimeSequence (1.1.0) parameter. While DataFed uses a single value, Juelich specifies a range. In our sample, the time format is ${yyyy-mm-dd/yyyy-mm-dd}. Michael Decker added that TimeSequence may also be specified as a single point in time, a list of points in time separated by “,”, or time ranges (with the option to specify periodicity). He further states that TimeSequence follows ISO 8601. Both the original files that Giovanni holds locally and the files returned via WCS from the Juelich server contain one time value for each month. This was validated with testing. The tests revealed that whether the start and end times spanned a month or several days (with or without a specified periodicity), the output is valid for a single day, which implies that interpolation is occurring. The output time stamp is valid for the day interpolated to instead of for the requested time period. Furthermore, a single month or year (e.g. TimeSequence=2001-01 or TimeSequence=2001) returns an error stating that a period must be used. If coverage data is a yearly value, it should use ${yyyy}. If it is a monthly value, it should use ${yyyy-mm}. Similarly, daily products should be advertised in ${yyyy-mm-dd} format. The complexity of the time definition and the lack of documentation in the current specification cause this time discrepancy, so clarification is required and a change request to the specification is necessary.
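
The three TimeSequence forms described by Michael Decker can be sketched as small string builders. The function names are hypothetical, and the placement of the periodicity as a third "/"-separated ISO 8601 duration (for example P1M) is an assumption about the KVP syntax that should be checked against the specification.

```python
from datetime import date


def time_point(day):
    """A single point in time, e.g. '2001-05-01'."""
    return day.isoformat()


def time_list(days):
    """A list of points in time separated by ','."""
    return ",".join(day.isoformat() for day in days)


def time_range(start, end, periodicity=None):
    """A 'start/end' range; the optional periodicity is appended after a
    second '/' (assumed syntax, given as an ISO 8601 duration)."""
    parts = [start.isoformat(), end.isoformat()]
    if periodicity:
        parts.append(periodicity)
    return "/".join(parts)


print(time_point(date(2001, 5, 1)))                          # 2001-05-01
print(time_list([date(2001, 1, 1), date(2001, 2, 1)]))       # 2001-01-01,2001-02-01
print(time_range(date(2001, 1, 1), date(2001, 6, 1)))        # 2001-01-01/2001-06-01
print(time_range(date(2001, 1, 1), date(2001, 6, 1), "P1M")) # 2001-01-01/2001-06-01/P1M
```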

Action Item [Juelich/DataFed]: Clarification (definition and documentation) is required in the current TimeSequence specification.

The “Minimum Set WCS Standards” document notes the need to resolve case sensitivity in the value for the FORMAT parameter. Giovanni currently supports “format=HDF” and “format=NetCDF”. Juelich supports “image/netcdf”. Although it is not necessary, Giovanni would like Juelich to change to “format=image/NetCDF”. Michael Decker stated that this should not be a problem but that DataFed needs to be contacted regarding this since they are responsible for the installation.

Optional Item [Juelich/DataFed]: Although not significant, Giovanni asks that the Juelich server change “format=image/netcdf” to “format=NetCDF”.

The STORE parameter is used only by Juelich. Since Juelich is using WCS 1.1.0 and only supports “store=true”, Giovanni will need to implement an extra step to parse the URL link to the NetCDF file from the XML returned from the GetCoverage call.

Action Item [Giovanni]: Implement a step to parse out the URL link to the NetCDF file from the XML returned from the GetCoverage call.
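
A possible shape for this parsing step is sketched below. The exact layout of the XML envelope returned by the Juelich server is not reproduced here, so the sample document is hypothetical and simplified; the sketch only assumes that the pointer to the result is carried in an xlink:href attribute and that the result URL ends in ".nc".

```python
import xml.etree.ElementTree as ET

# Attribute name of xlink:href in ElementTree's namespace notation.
XLINK_HREF = "{http://www.w3.org/1999/xlink}href"


def extract_hrefs(xml_text):
    """Return every xlink:href value in the document, in document order."""
    root = ET.fromstring(xml_text)
    return [el.attrib[XLINK_HREF] for el in root.iter() if XLINK_HREF in el.attrib]


def find_netcdf_url(xml_text, suffix=".nc"):
    """Return the first href ending in `suffix` (assumed), or None."""
    for href in extract_hrefs(xml_text):
        if href.lower().endswith(suffix):
            return href
    return None


# Hypothetical, simplified envelope; the real response will differ.
SAMPLE_RESPONSE = """<Coverages xmlns:xlink="http://www.w3.org/1999/xlink">
  <Coverage>
    <Reference xlink:href="http://example.org/results/output.xml"/>
    <Reference xlink:href="http://example.org/results/output.nc"/>
  </Coverage>
</Coverages>"""

print(find_netcdf_url(SAMPLE_RESPONSE))  # http://example.org/results/output.nc
```

Matching on the attribute rather than on element names keeps the sketch independent of the namespaces used in the envelope.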

The RangeSubset parameter is utilized only by Juelich because WCS 1.1.0 allows multiple range fields for multiple variables (each of which may require its own interpolation method). RangeSubset is not allowed in v1.0.0 (individual variables only). The RangeSubset parameter is utilized to specify which variable(s) the user is interested in. If RangeSubset is not provided then all variables in the file are returned. The same is true if “RangeSubset=*”. Variable names that are requested are separated by “;”.
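
The RangeSubset rules above reduce to a one-line join. In this sketch the helper name and the variable names are hypothetical placeholders.

```python
def range_subset(variables=None):
    """Build a RangeSubset value; None or an empty list requests all variables."""
    if not variables:
        return "*"
    return ";".join(variables)


print(range_subset())                      # *
print(range_subset(["vmr_no2", "vmr_o3"]))  # vmr_no2;vmr_o3
```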

Note that data slicing is available for subsetting latitudes/longitudes, time, and for obtaining variable(s), but is not available for vertical levels. Additionally, the data is specified on sigma levels. Juelich will need to pre-process the data in order to interpolate this vertical data from sigma levels to pressure levels. Additional data improvements, such as setting appropriate fill (missing) values (for specific models) and how to resolve surface levels when they are beneath the physical surface after interpolation, are needed in pre-processing.

Action Item [Giovanni]: Provide Juelich with email describing the data improvements necessary for pre-processing.

Action Item [Giovanni]: Document (model by model) the differences between the various models' data on the ESIP wiki page.

Action Item [Juelich/DataFed]: Pre-process the HTAP model output data to: a) interpolate from sigma levels to pressure, b) set correct fill values, c) rectify under-the-surface cases.
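
The three pre-processing steps can be illustrated for a single vertical column. This is a simplified sketch and not the method used by any of the groups: it assumes pure sigma coordinates (p = sigma * surface pressure), linear interpolation in pressure, and a hypothetical fill value, whereas the HTAP models may use hybrid coordinates and model-specific fill values.

```python
FILL_VALUE = -9999.0  # hypothetical fill (missing) value


def sigma_to_pressure(sigma_levels, surface_pressure):
    """Pressure at each sigma level, assuming pure sigma: p = sigma * p_surface."""
    return [sigma * surface_pressure for sigma in sigma_levels]


def interpolate_column(values, pressures, targets, fill_value=FILL_VALUE):
    """Linearly interpolate one column onto target pressure levels.

    `pressures` needs at least two strictly increasing entries (top of the
    atmosphere first). Targets outside the column, including levels that lie
    beneath the surface, receive `fill_value`.
    """
    result = []
    for target in targets:
        if target < pressures[0] or target > pressures[-1]:
            result.append(fill_value)
            continue
        for i in range(len(pressures) - 1):
            p0, p1 = pressures[i], pressures[i + 1]
            if p0 <= target <= p1:
                weight = (target - p0) / (p1 - p0)
                result.append(values[i] + weight * (values[i + 1] - values[i]))
                break
    return result


pressures = sigma_to_pressure([0.25, 0.5, 1.0], surface_pressure=1000.0)
print(pressures)  # [250.0, 500.0, 1000.0]
# The 1050 hPa target lies beneath the 1000 hPa surface and is filled.
print(interpolate_column([10.0, 20.0, 30.0], pressures, [375.0, 750.0, 1050.0]))
# [15.0, 25.0, -9999.0]
```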

Optional Item [Juelich/DataFed]: If upgrading to WCS 1.1.2, allow for vertical slicing.

Team members propose that Giovanni provide another independent version 1.1.2 server. If this occurs then there is no backward compatibility issue, which minimizes the impact to existing users (most of the work will be done in DescribeCoverage).

Optional Item [Giovanni]: Provide another, independent WCS version 1.1.2 server.

HTAP model comparison spreadsheet: October 21, 2009 Report by Giovanni

Giovanni is re-posting the HtapModelComparison.xls spreadsheet which was created to better understand the differences and similarities between the HTAP models. These differences complicate model pre-processing from sigma to uniform pressure levels. The spreadsheet highlights the differences between the HTAP models with respect to vertical levels, time, zero fields, and missing values. We are looking for an ongoing discussion on how to understand these models better. Please note if you see something that is incorrect. Media:HtapModelComparison.xls