Difference between revisions of "NASA ACCESS09: Tools and Methods for Finding and Accessing Air Quality Data"

Revision as of 13:49, June 8, 2009

Tools and Methods for Finding and Delivering Air Quality Data

Short: Tools for Finding and Delivering Air Quality Data

This proposal responds to the solicitation: Advancing Collaborative Connections for Earth System Science (ACCESS) 2009. In particular, it focuses on providing "means for users to discover and use services being made available by NASA, other Federal agencies, academia, the private sector, and others". The proposal offers tools and methods for data access and discovery services for Air Quality-related datasets. However, the tools, methods and infrastructure should be applicable to other domains of science and application.

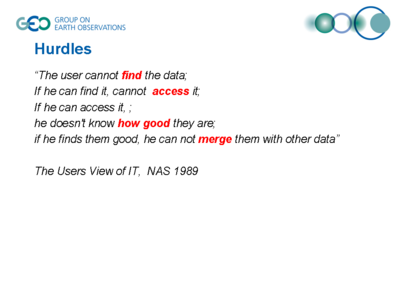

Both data providers and users encounter abundant impediments to seamless and effective data usage. The impediments from the user's point of view are succinctly stated in the report by NAS (1989). In short: the user cannot find the data; if she can find them, she cannot access them; if she can access them, she does not know how good they are; if she finds the data good, she cannot merge them with other data. The data provider faces a similar set of hurdles: the provider cannot find the users; if she can find users, she does not know how to seamlessly deliver the data; if she can deliver, she does not know how to make them more valuable to the users. This project intends to provide support to overcome the first two hurdles: finding the user/provider and accessing/delivering the desired data.

Approach

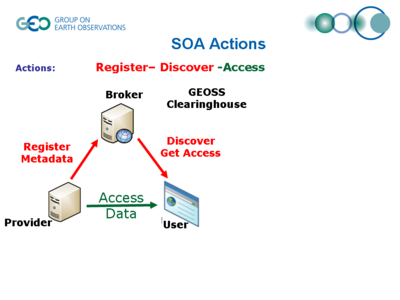

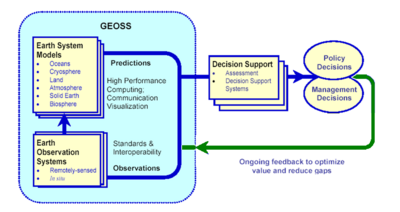

Architectural Approach: The architectural basis of the proposed work is Service Oriented Architecture (SOA) for the publishing, finding, binding to and delivery of data as services. The critical aspect of SOA is the loose coupling between service providers and service users. Loose coupling is accomplished through plug-and-play connectivity facilitated by standards-based data access service protocols. SOA is the only architecture that we are aware of that allows seamless connectivity of data between a rich set of provider resources and a diverse array of users.

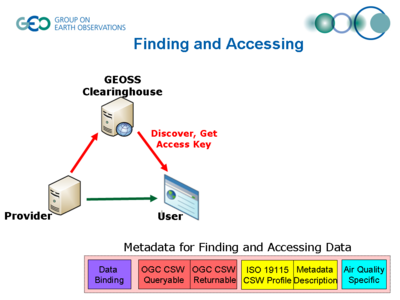

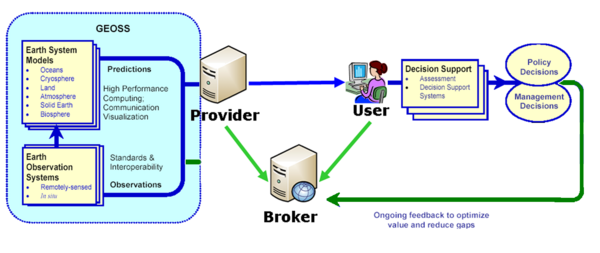

Data reuse is possible through the service oriented architecture of GEOSS.

- Service providers register services in the GEOSS Clearinghouse.

- Users discover the needed service and access the data.

The result is a dynamic binding mechanism for the construction of loosely-coupled work-flow applications.
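The publish-find-bind cycle described above can be sketched in a few lines of Python. This is only an illustrative toy, not the GEOSS Clearinghouse interface: the `Clearinghouse` and `ServiceRecord` classes, their fields, and the sample services are all hypothetical, and the `fetch` callable stands in for a call to a real data access service.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class ServiceRecord:
    """Metadata a provider publishes about one data service (hypothetical fields)."""
    name: str
    keywords: List[str]
    fetch: Callable[[], dict]  # stand-in for a request to the real service endpoint


@dataclass
class Clearinghouse:
    """Toy in-memory registry; a real clearinghouse is a remote catalog service."""
    records: Dict[str, ServiceRecord] = field(default_factory=dict)

    def publish(self, record: ServiceRecord) -> None:
        # Publish: the provider registers its service description.
        self.records[record.name] = record

    def find(self, keyword: str) -> List[ServiceRecord]:
        # Find: the user discovers services by keyword (case-insensitive).
        key = keyword.lower()
        return [r for r in self.records.values()
                if key in (k.lower() for k in r.keywords)]


def bind(record: ServiceRecord) -> dict:
    # Bind: the user calls the discovered service and receives the data.
    return record.fetch()


registry = Clearinghouse()
registry.publish(ServiceRecord(
    "pm25_surface", ["air quality", "PM2.5"],
    lambda: {"parameter": "PM2.5", "values": [12.0, 15.5]}))
registry.publish(ServiceRecord(
    "sst_satellite", ["ocean"],
    lambda: {"parameter": "SST", "values": [290.1]}))

matches = registry.find("air quality")
data = bind(matches[0])
```

The provider and the user never reference each other directly; they share only the registry, which is the loose coupling the architecture relies on.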

Engineering and Implementation: The three major components of this SOA-based project are (1) facilities for publishing data services, (2) facilities for finding data services and (3) facilities for accessing data services. Everything is a service, which enables mash-ups with other systems and connectivity through workflows. Each of these components will be supported by a set of tools and methods.

Technology Approach:

Service technologies

workflow engines

caching.

Management Approach: Core group

Community - Find-bind ... was developed and tested during AIP2 by the community

Collaborative,

Publishing

The primary purpose of the metadata is to facilitate finding and accessing the data, which addresses the first two hurdles that users face. Clearly, the air quality specific metadata such as sampling platform, data domain and measured parameters need to be defined by air quality users. The hurdles of data quality and multi-sensor data integration are topics of future efforts.

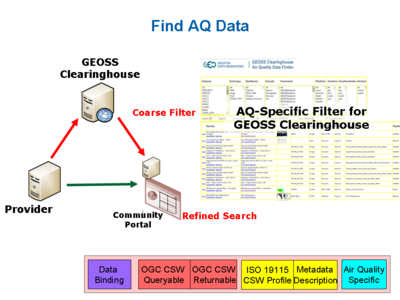

The finding of air quality data is accomplished in two stages.

- the data are filtered through the generic discovery mechanism of the clearinghouse

- then air quality specific filters such as sampling platform and data structure are applied
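As a rough sketch of the two-stage search above, the following Python fragment first applies a generic theme filter and then narrows the result with air quality specific facets. The catalog entries, the field names (`theme`, `platform`, `structure`) and the function names are illustrative assumptions, not the clearinghouse schema.

```python
from typing import Dict, List

Record = Dict[str, str]

# Hypothetical catalog entries; a real clearinghouse would return these from a query.
CATALOG: List[Record] = [
    {"title": "Surface PM2.5 network", "theme": "air quality",
     "platform": "surface", "structure": "point"},
    {"title": "Satellite AOD grid", "theme": "air quality",
     "platform": "satellite", "structure": "grid"},
    {"title": "Sea surface temperature", "theme": "ocean",
     "platform": "satellite", "structure": "grid"},
]


def generic_discovery(catalog: List[Record], theme: str) -> List[Record]:
    """Stage 1: generic clearinghouse-style filter on a broad theme."""
    return [r for r in catalog if r["theme"] == theme]


def air_quality_filter(records: List[Record], **facets: str) -> List[Record]:
    """Stage 2: keep only records matching every air quality specific facet."""
    return [r for r in records
            if all(r.get(key) == value for key, value in facets.items())]


def find_air_quality_data(catalog: List[Record], **facets: str) -> List[Record]:
    """Run both stages in sequence."""
    return air_quality_filter(generic_discovery(catalog, "air quality"), **facets)


hits = find_air_quality_data(CATALOG, platform="satellite", structure="grid")
```

Keeping the stages separate means the generic stage can be served by any catalog, while the domain facets stay under the control of the air quality community.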

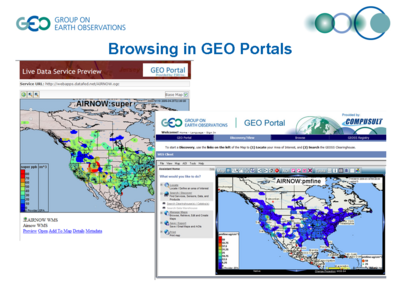

Once the data are accessible through standard service protocols and discoverable through the clearinghouse they can be incorporated and browsed in any application including the ESRI and Compusult GEO Portals.

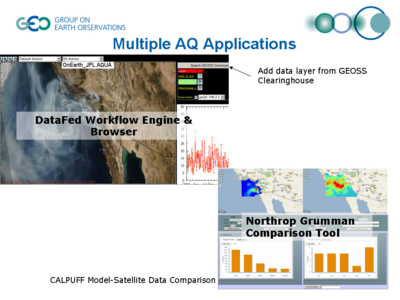

The registered datasets are also directly accessible to air quality specific, work-flow based clients which can perform value-adding data processing and analysis.

The loose coupling between the growing data pool in GEOSS and workflow-based air quality client software shows the benefits of the Service Oriented Architecture to the Air Quality and Health Societal Benefit Area.

- proposing soa b/c has components for publish, find, bind

- paragraph on publish, find and bind

- paragraph on community, openness of system

From ACCESS solicitation: Many existing web-based services in NASA are applicable to Earth Science (ES) but are not well exposed and not easily discoverable by potential users. Furthermore, users searching for NASA ES data often do not have broad knowledge of the data pertinent to their interest.

Proposals should address this information gap by providing tools and methods for discovery and use of data services provided by NASA, other Federal agencies, academia..and components for persistent availability of these services through machine and GUI interfaces.

Background

Recent developments offer outstanding opportunities to fulfill the information needs for Earth Sciences and support for many societal benefit areas. The satellite sensing revolution of the 1990s now yields near-real-time observations of many Earth System parameters. The data from surface-based monitoring networks now routinely provide detailed characterization of atmospheric and surface parameters. The ‘terabytes’ of data from these surface and remote sensors can now be stored, processed and delivered in near-real time, and the instantaneous ‘horizontal’ diffusion of information via the Internet now permits, in principle, the delivery of the right information to the right people at the right place and time. Standardized computer-computer communication languages and the emerging Service-Oriented information systems now facilitate the flexible processing of raw data into high-grade scientific or ‘actionable’ knowledge. Last but not least, the World Wide Web has opened the way to generous sharing of data and tools, leading to faster knowledge creation through collaborative analysis in real and virtual workgroups.

Nevertheless, Earth scientists and societal decision makers face significant hurdles. The production of Earth observations and models is rapidly outpacing the rate at which these observations are assimilated and metabolized into actionable knowledge that can produce societal benefits. The “data deluge” problem is especially acute for analysts interested in climate change and atmospheric processes. These processes are inherently complex, the numerous relevant data range from detailed surface-based chemical measurements to extensive satellite remote sensing, and the integration of these requires the use of sophisticated models. As a consequence, Earth Observations (EO) are under-utilized in science and for making societal decisions.

Approach

- Service Orientation, while accepted, has not been widely adopted for serving NASA products

- SOA allows the creation of loosely coupled, agile, data systems

- SOA -> requires ability to Publish, Find, Bind (Register, Discover, Access)

- Stages: Acquisition, Repackaging, Usage

- Acquisition is a stovepipe

- Usage is value chain

- In-between is the 'market'

Catalog Services

Web Services

A Web Service is a URL-addressable resource that returns requested data, e.g. current weather or the map for a neighborhood. Web Services use standard web protocols (HTTP, XML, SOAP, WSDL) that allow computer-to-computer communication regardless of language or platform. Web Services are reusable components, like ‘LEGO blocks’, that allow agile development of richer applications with less effort. Visionaries (e.g. Berners-Lee, the ‘father’ of the World Wide Web) argue that Web services can transform the web from a medium for viewing and downloading into one for distributed data/knowledge exchange and computing.

Enabling Protocols of the Web Services architecture:

- Connect: Extensible Markup Language (XML) is the universal data format that makes data and metadata sharing possible.

- Communicate: Simple Object Access Protocol (SOAP) is the W3C protocol for data communication, e.g. making and responding to requests.

- Describe: Web Service Description Language (WSDL) describes the functions, parameters and the returned results from a service.

- Discover: Universal Description, Discovery and Integration (UDDI) is a broad OASIS effort for locating and understanding web services.

Service Oriented Architecture (SOA) provides methods for systems development and integration where systems package functionality as interoperable services. SOA allows different applications to exchange data with one another. Service-orientation aims at a loose coupling of services with operating systems, programming languages and other technologies that underlie applications. These services communicate with each other by passing data from one service to another, or by coordinating an activity between two or more services. SOA can be seen as part of a continuum, from older concepts of distributed computing and modular programming through to current practices of mashups and Cloud Computing.

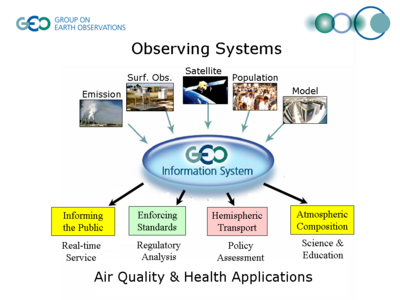

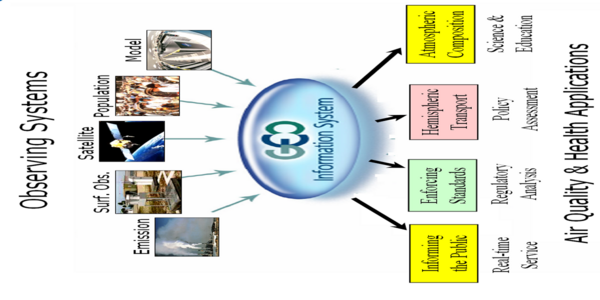

There are numerous Earth Observations that are available and in principle useful for air quality applications such as informing the public and enforcing AQ standards. However, connecting a user to the right observations or models is accompanied by an array of hurdles.

The GEOSS Common Infrastructure allows the reuse of observations and models for multiple purposes

Even in the narrow application of Wildfire smoke, observations and models can be reused.

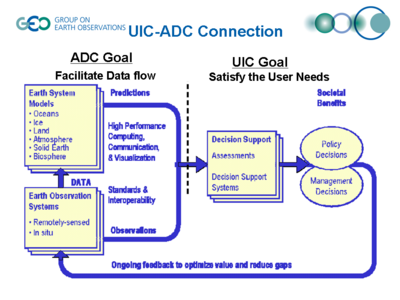

The ADC and UIC are both participating stakeholders in the functioning of the GEOSS information system that overcomes these hurdles. The UIC is in position to formulate questions and the ADC can provide infrastructure that delivers the answers.

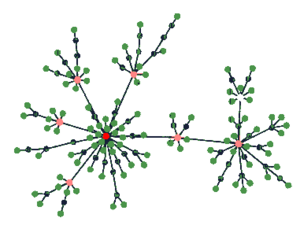

The Network

- Fan-In, Fan-Out

- (so is GCI) not central

- holarchy: data up into the pool through the aggregator network and down the disaggregator/filter network

- Data distributed through Scale-free aggregation network. Metadata contributed along the line of usage. Homogenized and shared.

This proposal...application of the GEOSS concepts in the federated data system, DataFed. The proposal focuses on the SOA aspects of publish, find, bind. ...a contribution to the emerging architecture of GEOSS. It is recognized that it represents just one of the many configurations consistent with the loosely defined concept of GEOSS.

The implementation details and the various applications of DataFed are reported elsewhere [4]-[6].

Data Value Chain Stages: Acquisition - Mediation - Application

- Acquisition: Data from Sensor -> CalVal -> Data exposed

- Mediation: Accessible/Reusable -> Leverageable

- Application: Processed -> LeveragedSynergy -> Productivity

Provider and User Oriented Designs

- Provider offers its wares ... to reach the maximum number of users in many applications

Community

Data as Service

- Wrappers, reusable tools and methods (wrapper classes for: SeqImage/Seq File, SQL, netCDF) into WCS and WMS

- Unidata (netCDF), CF (CF working group; Naming); GALEON (WCS-netCDF) building on top of...

Metadata as Service

- Metadata not service: readme file

- Metadata as service: Capability

WCS/WMS GetCapabilities Conventions that allow metadata reuse

- WCS Capabilities expanded -> WMS (combination of WCS and Render): build metadata in two steps, first from WCS, then augmented with WMS fields

- WMS GetCapabilities > ISO Maker Tool publish metadata
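A minimal sketch of the two ideas above: requesting service metadata through GetCapabilities, and building the WMS description by augmenting the WCS description with rendering fields. The `service` and `request` query parameters follow the OGC key-value convention; the endpoint URL, the metadata field names and both helper functions are hypothetical, and the DataFed and ISO Maker tools may work differently.

```python
from urllib.parse import urlencode


def get_capabilities_url(endpoint: str, service: str) -> str:
    """Build a GetCapabilities request URL for an OGC service (WCS or WMS)."""
    query = urlencode({"service": service, "request": "GetCapabilities"})
    separator = "&" if "?" in endpoint else "?"
    return f"{endpoint}{separator}{query}"


def build_wms_metadata(wcs_metadata: dict, render_fields: dict) -> dict:
    """Step 2: reuse the WCS description and add WMS-only rendering fields."""
    merged = dict(wcs_metadata)   # copy so the WCS record stays unchanged
    merged.update(render_fields)  # rendering fields extend the WCS fields
    return merged


# Hypothetical endpoint and metadata values, for illustration only.
ENDPOINT = "http://example.org/ows"
wcs_url = get_capabilities_url(ENDPOINT, "WCS")

wcs_metadata = {"coverage": "pm25", "time_range": "2008-01-01/2008-12-31"}
wms_metadata = build_wms_metadata(wcs_metadata, {"style": "color_scale", "format": "image/png"})
```

Because the WMS record is derived from the WCS record, the descriptive metadata is entered once and reused, which is the point of the convention.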

To illustrate the Network, Coding (faceting) through metadata, WMS to show data

- WCS next

- Binding to data through standard data access protocols, publishing and finding requires metadata system

- Google Analytics sensors - where do we put them so that we can identify user patterns?

Community

Data Discovery

- Semantic Mediation - Repackaging/homogenizing metadata - added value comes from incorporating user actions back into the semantic relationships.

- Metadata system for publishing and finding content has to be jointly developed between data providers and users.

- Generic catalog systems - metadata collection of not only what provider has done but also tracking what users need

- Collecting and Enhancing Metadata from observing Users

- Communication along the value chain, in both direction;

- Metadata the glue and the message

- Market approach; many providers; many users; many products

- Faceted search

- user is happy

- Search by usage data

Description on how users will discover and use services provided by NASA, other Agencies, academia..

- Detail on discovery services

- System components for persistent availability of these services

- machine-to-machine interface

- GUI interface

Classes of Users

- by value chain

- by level of experience

- by ...

Data Access and Usage

Provider Oriented Catalog:

- All data from a provider (subset of all data)

- Metadata only (Standard protocol)

- Provider metadata (meta-meta-meta data)

User Oriented Catalog:

- The right data to the right user at the right time (subset of all data)

- Seamlessly accessible (Standard protocol)

- Complete Metadata (meta-meta-meta data)

- Has to handle derived data (Raw-processed Pyramid --- less along the value chain-network)

Performance Measurement and Feedback

Metadata from Providers

- Provide discovery, access information

- Providers can also provide information about how users behave once they are at their site

Metadata from Users

- Google Analytics/Google Sitemap - provides feedback and helps market process by improving "shopping" experience for users - creates values to both users and producers.

- Amazon - collects data on user actions in order to help the next user navigate to books of interest. Also collects data on the text in the book in order to relate books to one another

- Recently Viewed widget - tracks the last items that the user viewed

Metadata from Mediators

- Alexa Amazon Web Information Service provides analytics about another site. This is good for us b/c we could access analytics about distributed data access and show them uniformly in the catalog (Site Overview pages, Traffic Detail pages and Related Links pages)

- Alexa also example of site pulling in information about one URL from multiple sources. (Amazon example)

- perform collaborative filtering based upon data collected from more than one [data provider]

- Avinash Kaushik - Evangelist for Google Analytics

- Key Performance Indicators -

- Where do people come from? search, other links - % of visits across all traffic source

- Bounce rate - Came to one page and left immediately (Good for us b/c it helps direct people to the right information/right place/right time)

- Combining where people come from and how many people from that source bounce lets you know if you are targeting the right people...

- Wrong audience

- Wrong Landing page

- Visitor loyalty - # that come in a given duration

- Recency of visit - do you retain people over time

- What are the key outcomes you want people to do (i.e. subscribe to feed, click on data link, click on metadata link...)

- What is the top content on site?
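Two of the indicators listed above, share of visits per traffic source and bounce rate per source, can be computed from a simple visit log. The sketch below assumes a hypothetical log of `(source, pages_viewed)` pairs and treats a visit with exactly one page view as a bounce; an analytics product may define these measures differently.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple

Visit = Tuple[str, int]  # (traffic source, number of pages viewed in the visit)


def source_share(visits: Iterable[Visit]) -> Dict[str, float]:
    """Fraction of all visits that arrived from each traffic source."""
    counts: Dict[str, int] = defaultdict(int)
    for source, _pages in visits:
        counts[source] += 1
    total = sum(counts.values())
    return {source: n / total for source, n in counts.items()}


def bounce_rate_by_source(visits: Iterable[Visit]) -> Dict[str, float]:
    """Fraction of visits from each source that viewed one page and left."""
    totals: Dict[str, int] = defaultdict(int)
    bounces: Dict[str, int] = defaultdict(int)
    for source, pages in visits:
        totals[source] += 1
        if pages == 1:
            bounces[source] += 1
    return {source: bounces[source] / totals[source] for source in totals}


# Hypothetical visit log, for illustration only.
VISITS: List[Visit] = [
    ("search", 1), ("search", 3), ("search", 1), ("search", 1),
    ("link", 4), ("link", 2),
]

shares = source_share(VISITS)
rates = bounce_rate_by_source(VISITS)
```

Reading the two results together supports the diagnosis in the list: a source that brings many visits but has a high bounce rate suggests either the wrong audience or the wrong landing page.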

Management

Community approach:

- ESIP AQ Workgroup with links to

- GEO CoP -> Linking multi-region (global), multi SBA

- Agency (Air Quality Information Partnership (AQIP))

- EPA

- NASA

- NOAA

- DOE ....

- ESIP

- Semantic Cluster

- Offer: a rich highly textured data needing semantics

- Needed: Semantics of the data descriptions and finding

- Web Services and Orchestration Cluster

- Offer: A rich array of WCS data access services

- Different workflow & orchestration clients

- Meetings

- Winter

- Summer

- Telecons

- OGC WCS netCDF

- Stefano

- Ben

- Max Cugliano

- Other Related Proposals/Projects

- Show our CC proposal to AQWG

- Ask them if they have a way to use this CC as a testbed

- Add a paragraph to the proposal to indicate the way theirs fits in

links

ESIP

- Data_System_Governance_and_Sustainability and Synery

- Interoperability_of_Air_Quality_Data_Systems

- Data_Systems_Architecture

- Desired_Characteristics_of_Air_Quality_Data_Systems

- Air_Quality_Data_Processing_and_Portal_Systems

- Air_Quality_Data_Providers

- AQ_Data_System_Applications Clients_and_User_Groups

- Community_Air_Quality_Data_Systems_Strategy

- AQ_Data_System_Strategy_Introduction_and_Purpose

DataFed wiki

- Interoperability_in_DataFed_Service-based_AQ_Analysis_System

- 2008-07-07_EPA_Interoperability

- HTAP Interoperability Husar060126_HTAP_Geneva

- 2008-02-11:_EPA_DataFed_Presentation

- DataFed Collaboration Projects

- 2008-02-29:_Data_&_Knowledge_Differences_on_Interop_Stack

- 2006-06-28_ESTO,_College_Park

NASA Existing Component - Links

- Atmosphere Data Reference Sheet - Datasets identified to be relevant to atmospheric research.

- Giovanni - provides a simple and intuitive way to visualize, analyze, and access vast amounts of Earth science remote sensing data without having to download the data. GIOVANNI metadata briefly describes each parameter.

- parameter information pages - provide short descriptions of important geophysical parameters; information about the satellites and sensors which acquire data relevant to these parameters; links to GES DAAC datasets which contain these parameters; and external data source links where data or information relevant to these parameters can be found.

- Mirador - new search-and-order Web interface that employs the Google Mini appliance for metadata keyword searches. Other features include quick response, a data file hit estimator, a gazetteer (geographic search by feature name), and event search.

- Frosty

- GCMD - Has selected Air Quality datasets; provides extensive discovery metadata (keywords, citation, etc.) but lacks standard data access.

- A-Train Data Depot - to process, archive, allow access to, visualize, analyze and correlate distributed atmospheric measurements from A-Train instruments.

- Atmospheric Composition Data and Information Service Center - is a portal to the Atmospheric Composition (AC) specific, user driven, multi-sensor, on-line, easy access archive and distribution system employing data analysis and visualization, data mining, and other user requested techniques for the better science data usage.

- WIST - Warehouse Inventory Search Tool. This search-and-order tool is the primary access point to 2,100 EOSDIS and other Earth science data sets.

- FIND - The FIND Web-based system enables users to locate data and information held by members of the Federation (DAACs are Type 1 ESIPs.) FIND incorporates EOSDIS data available from the DAAC Alliance data centers as well as data from other Federation members, including government agencies, universities, nonprofit organizations, and businesses.

- SESDI Semantically-Enabled Science Data Integration | Vision - ACCESS Project, Peter Fox - will demonstrate how ontologies implemented within existing distributed technology frameworks will provide essential, re-useable, and robust, support for an evolution to science measurement processing systems (or frameworks) as well as for data and information systems (or framework) support for NASA Science Focus Areas and Applications.