WCS Server Software

Kari Hoijarvi (WUSTL), Michael Decker (FZ Juelich)

Original Documentation: WCS Access to netCDF Files

Quick Links: Access to source code and documentation @SourceForge, Introduction and quick-start presentation

Introduction and Design

This section describes the design and implementation of portable WCS server software for station-point data types. The server software constitutes the main contribution of this Pilot to HTAP and to GEOSS. The purpose of the software is to minimize the most significant impediment to networking of air quality data systems: data access through standards-based web services. Since 2005 a number of demonstration projects have been conducted to show the potential benefits of the WCS data access protocol for accessing and using remote air quality data. The developments were reported in a series of GEOSS workshops: User and the GEOSS Architecture. Although several organizations have committed considerable effort and attention over the past five years, there are only a few operating WCS data servers that deliver air quality-relevant Earth observations or models. The number of client nodes that consume WCS services is even smaller. As a consequence, in 2010 Service Oriented Architecture (SOA) for agile application building remains a vision.

The implementation of truly interoperable WCS server software has proven to be a notoriously difficult task. There are several servers that implement the WCS protocol for data access. However, it is impossible to write a generic client that can access any service. The problem is not that the services have errors, but that the standards admit multiple correct interpretations and the services return a multitude of file formats.

WMS has been a success in this respect. For WCS, it is difficult to pinpoint a particular impediment that is responsible for this slow progress. It appears that there are significant obstacles within each layer of the inter-operability stack. At the lowest storage and formatting level the problem lies in the diversity of data models and data storage formats. This necessitated the development of custom programs to access each dataset. Many of the legacy data systems archived or exposed their multi-dimensional data in file-based granules, which means that sub-setting multi-dimensional data was either prohibitively slow or impossible.

On the next level of the inter-operability stack the impediment is the poor semantic characterization of the data. The heterogeneity of the data models and naming conventions prevents a clear description of the data that can be unambiguously interpreted by the client. As a consequence, users were not able to understand the meaning of the received data.

At the third level of the inter-operability stack is the ability of the clients to specify and request a specific subset of the server's data holdings. Data extraction via spatial-temporal subsetting lacked a language common to both servers and clients.

These impediments are not unique to air quality data access. The general problems of scientific data sharing are concisely expressed in the 1989 NAS report “User and Information”: the user cannot find the data; if he can find it, he cannot access it; if he can access it, he cannot understand it; if he understands it, he cannot combine it with other data.

Design: The design philosophy of the data networking software is:

- Approach each of the data networking impediments individually.

- Seek out and implement interoperability standards that are relevant for each impediment in each interoperability layer.

- Simultaneously develop client software that uses the full interoperability stack.

While pursuing this design approach one encounters considerable challenges. The identification and delineation of the interoperability layers is a non-trivial task. What is the distinction between the lower two layers, and where is the boundary? In other words, what is the syntax or physical structure of the data, and what is the semantics that conveys the meaning? Similarly, how does one specify a subset of multi-dimensional data that is both useful for the client and understandable by the users? How does one combine the standards developed by a diverse set of organizations while retaining the integrity of the entire interoperability stack? How does one design the data system interoperability so that it is responsive to changes in standards and user requirements?

Following extensive exploration and development since 2005, it was concluded that for gridded environmental data the combination of the CF-netCDF data model and the WCS 1.1 web service protocol satisfies the above design goals. For station point monitoring data the corresponding set includes data storage in an SQL server, data semantics following the CF convention for point data and WCS as the data access protocol.

For these two data types, the server software was developed such that data access was executed through the standard netCDF libraries and the SQL database query language. Consequently, the service software does not require any development for low level data access. The semantics for netCDF grid files is incorporated in the netCDF files themselves by applying the CF convention for metadata. Given the semantically rich CF-netCDF data files the server software can automatically configure the WCS data extraction protocol. Hence, for CF-netCDF encoded grid data the server software can execute a WCS data service without custom programming. All the service instance-specific data are contained in configuration data files that are prepared by the server administrator. The avoidance of low-level custom programming at the server makes porting the software code to different servers and different operating systems a modest effort.

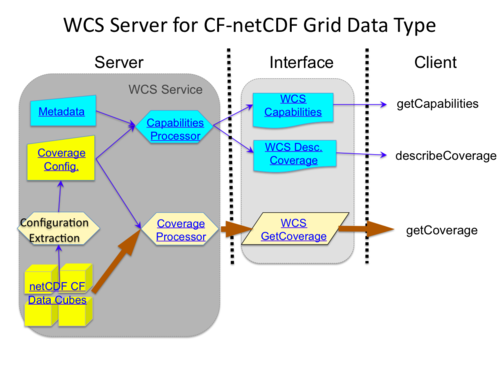

WCS Server for CF-netCDF Grid Data Type

The architecture of the WCS server software for the grid data type is shown in Figure 4.1. The interface to the WCS service consists of two XML documents that describe the service. The Capabilities document, accessible through the getCapabilities call, describes the service offerings, consisting of a list of coverages. Following the selection of a specific coverage by the user, the describeCoverage call returns the Coverage description with more detailed semantic and data access information for the specified coverage. With the information in the describeCoverage response the client software can formulate a query requesting a subset of the server's data offerings. The third call, getCoverage, consists of an array of query parameters encoded as key-value pairs. Both the names and permissible values of the query variables are strictly controlled by the WCS protocol (currently using WCS 1.1).

Fig 4.1 WCS server software flow diagram for Grid data type in CF-netCDF files

The functionality of the server software consists of preparing the two metadata XML documents and implementing the coverage processor, which reads the getCoverage query, reformulates the query for local data access, extracts the requested data subset, reformats the data as a CF-netCDF data package and returns the requested payload to the client.

In the flow diagram, Figure 4.1, the thin blue lines represent the flow of metadata while the heavy brown arrows indicate the flow of data. The NetCDF files themselves have only structure with syntactic meaning. A variable has a datatype, shape (array of dimensions) and attributes, but there is no knowledge of what that actually means. The semantic richness comes from CF-netCDF, and from the compatibility of the CF convention with the WCS 1.1 protocol. Since the CF-netCDF files contain all the necessary information, it is possible to automatically extract the Coverage Configuration. The CF-netCDF convention does not specify how to publish keywords or a contact person, so the metadata file must be filled in separately.

With these two metadata sources the system can create WCS capabilities and coverage description documents, and can execute given filters in getCoverage request. Based on this server design, all the local effort for a given server can be focused on the preparation of suitably configured CF-netCDF data files. The automated maintenance of the WCS server for continuously evolving data sets is also supported. For instance, as new observations are acquired, the new time slice is appended to the CF-netCDF files, and the configuration extraction code automatically updates the WCS capabilities and coverage documents.

The CF-netCDF 1.5 convention document is over 30 pages long, but fortunately only a handful of attributes are actually needed to make a netCDF file CF compatible. The necessary attributes are:

- Global Attributes:

  - "Conventions" must be set to a value from CF-1.0 to CF-1.5

  - "title" becomes the human-readable name of the coverage

  - "comment" becomes the abstract

- Coordinate Variables:

  - longitude: axis=X or standard_name=longitude, units=degrees_east

  - latitude: axis=Y or standard_name=latitude, units=degrees_north

  - time: axis=T or standard_name=time, units such as "hours since 1990-01-01"

  - elevation/depth: axis variable with axis=Z set

- Data Variables:

  - optionally, the standard_name and units attributes are set if applicable.
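The attribute list above can be turned into a simple check. The following is only a sketch: it works on a hypothetical in-memory description of a file (`EXAMPLE_FILE`) rather than on a real netCDF file, the function names are invented for illustration, and it assumes that only the longitude and latitude coordinate variables are mandatory while time is checked only when present.

```python
# Hypothetical in-memory description of one file: global attributes plus
# per-variable attribute dictionaries. A real server would read these
# through a netCDF library instead.
EXAMPLE_FILE = {
    "globals": {
        "Conventions": "CF-1.5",
        "title": "Example ozone model output",
        "comment": "Hourly surface ozone on a regular grid.",
    },
    "variables": {
        "lon": {"axis": "X", "units": "degrees_east"},
        "lat": {"standard_name": "latitude", "units": "degrees_north"},
        "time": {"axis": "T", "units": "hours since 1990-01-01"},
        "o3": {"standard_name": "mole_fraction_of_ozone_in_air", "units": "1e-9"},
    },
}

# CF-1.0 through CF-1.5, as listed in the text.
ACCEPTED_CONVENTIONS = {"CF-1.%d" % minor for minor in range(6)}


def find_axis(variables, axis, standard_name):
    """Return the name of the first variable tagged as the given axis, or None."""
    for name, attrs in variables.items():
        if attrs.get("axis") == axis or attrs.get("standard_name") == standard_name:
            return name
    return None


def check_cf_minimum(description):
    """Return a list of human-readable problems; an empty list means OK."""
    problems = []
    global_attrs = description.get("globals", {})
    variables = description.get("variables", {})

    if global_attrs.get("Conventions") not in ACCEPTED_CONVENTIONS:
        problems.append("Conventions must be one of CF-1.0 to CF-1.5")
    for required in ("title", "comment"):
        if not global_attrs.get(required):
            problems.append("missing global attribute: " + required)

    # Assumption: horizontal coordinates are mandatory for a grid coverage.
    for axis, standard_name, units in (
        ("X", "longitude", "degrees_east"),
        ("Y", "latitude", "degrees_north"),
    ):
        name = find_axis(variables, axis, standard_name)
        if name is None:
            problems.append("no %s coordinate variable" % standard_name)
        elif variables[name].get("units") != units:
            problems.append("%s units must be %s" % (name, units))

    # Assumption: time is optional, but if present its units need a reference date.
    time_name = find_axis(variables, "T", "time")
    if time_name is not None and " since " not in variables[time_name].get("units", ""):
        problems.append("%s units must look like 'hours since 1990-01-01'" % time_name)

    return problems
```

Calling `check_cf_minimum(EXAMPLE_FILE)` returns an empty list for the example description; any missing or mismatched attribute is reported as one entry in the returned list.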

Description of Grid Data Server Components

Metadata: This is a text file filled out by a person. Contains Abstract, Contact Information, Keywords and any other documentation that is needed in classifying or finding the service. This file is used by the Capabilities Processor which incorporates this metadata into capabilities and coverage description documents.

Coverage Configuration for Grids: This is an automatically created Python dictionary. It contains coverages, fields, dimensions, units and other descriptions. All this information is automatically extracted from the CF-netCDF files: each file becomes a coverage and each variable becomes a field. The Capabilities Processor for Cubes uses this configuration information.

Capabilities Processor: This is a component that produces the standard XML Capabilities and Data Description documents for WCS and WFS services. As input, it reads the Metadata and the Cube or Point Data Configuration. It is used to produce the results for GetCapabilities, DescribeCoverage and DescribeFeatureType queries.

Coverage Processor: This component extracts a subset out of a CF-netCDF cube file. It finds the source file, reads the requested subset, and writes that into a CF-netCDF file. The output looks exactly like the original file, except it is a filtered subset. It is used in the WCS GetCoverage query.

CF-netCDF: Climate and Forecast Metadata for netCDF files. Names in netCDF files have no meaning associated with them; the CF conventions standardize the semantic meaning of names and physical dimensions. CF-netCDF files can therefore be read with semantic meaning associated with the data.

WCS GetCapabilities: This is the top-level document of a service. It contains Metadata Data and a list of offered WCS Coverages. It is produced by the Capabilities Processor and used by clients.

WCS DescribeCoverage: This document is the detailed description of a WCS coverage. It lists the geo and time dimensions of the coverage, fields, additional dimensions of the fields, and field information like units. The Capabilities Processor produces this at the client's request.

WCS GetCoverage: This is the main data query for a WCS service. The request contains Coverage identifier, Geographical BoundingBox and optionally, TimeSequence, fields, and filters for fields. The Coverage Processor for Cubes or Points processes the query and produces data returned to the client.
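As an illustration of the key-value-pair encoding, the sketch below assembles a GetCoverage URL from the parameters named above. The host name, coverage identifier, field name and output format are hypothetical placeholders; the exact BoundingBox syntax (for example a trailing CRS URN) and the accepted `format` values depend on the server, so treat them as assumptions.

```python
from urllib.parse import urlencode, urlsplit, parse_qs


def build_getcoverage_url(base_url, identifier, bbox,
                          time_sequence=None, range_subset=None,
                          version="1.1.2", fmt="application/x-netcdf"):
    """Build a WCS GetCoverage URL using key-value-pair encoding.

    bbox is (min_lon, min_lat, max_lon, max_lat). The format default is an
    assumption; check the server's capabilities for the supported values.
    """
    params = [
        ("service", "WCS"),
        ("version", version),
        ("request", "GetCoverage"),
        ("identifier", identifier),
        ("BoundingBox", ",".join(str(value) for value in bbox)),
    ]
    # TimeSequence and RangeSubset are optional filters.
    if time_sequence is not None:
        params.append(("TimeSequence", time_sequence))
    if range_subset is not None:
        params.append(("RangeSubset", range_subset))
    params.append(("format", fmt))
    return base_url + "?" + urlencode(params)


# Hypothetical server, coverage and field names.
EXAMPLE_URL = build_getcoverage_url(
    "http://example.org/ows",
    identifier="model_output",
    bbox=(-125.0, 25.0, -65.0, 50.0),
    time_sequence="2009-01-01/2009-01-31",
    range_subset="o3",
)
```

`urlencode` percent-encodes the commas and slashes in the values; a server decodes them back when parsing the query string.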

Implementation of WCS Server for CF-netCDF Grid Data

Mapping Between CF-netCDF and WCS 1.1.x

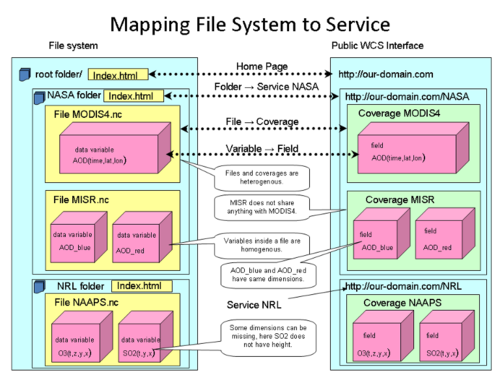

The implementation of the WCS grid data server can be made robust if there is a clear convention for mapping the file/directory structure to the WCS coverage and Field structure.

WCS provides a query language through which clients formulate their requests for data. The WCS protocol uses a four-layer hierarchical structure for describing data: service, coverage, field and attribute. A WCS web service is at the top of the hierarchy. A server publishes its service through an XML document. A service may incorporate multiple coverages. Each coverage contains any number of fields. Any given field may have a number of attributes.

The mapping between these structural elements and Earth Observations is not explicitly specified by the WCS standard. However, based on experience and community-wide discussion, the following convention has been adopted for the WCS encoding of air quality observations:

Attributes correspond to units, flags, and other attributes of Earth Observation parameters. Attributes are attached to Fields.

Fields correspond to specific observations such as ambient concentration of a given pollutant.

Coverages are groups of observations that have the same coverage in space and time. Typically, a coverage contains the data from a specific monitoring network, a numerical model or a remote sensing satellite.

A service may contain a number of coverages, each coverage representing different kinds of observations and spatial-temporal domains. For instance, an organization offering multiple data sets may publish their offerings as coverages in a single web service.

Fig 4.3 Example mapping of WCS service, coverage, and field to the file system.

In the file system, the root folder contains project folders. A project folder contains CF-netCDF files. Each netCDF file contains a set of variables. In a netCDF file, data variables share dimensions. In the data access service, WCS 1.1.2, the computer can contain services. A service contains coverages and each coverage contains fields. In a coverage, fields share dimensions. A particular server computer can host several OGC services, which are not necessarily related to each other. Within a given WCS service, the coverages can be independent, and may have different dimensions and units. Inside a coverage, all the fields have to share the same space and time dimensions. This yields a natural mapping in which a folder becomes a service, a file becomes a coverage and a variable becomes a field.
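The folder-to-service and file-to-coverage part of this mapping can be sketched with the standard library alone. The function and folder names below are hypothetical, and the sketch stops at the coverage level: listing the fields would require opening each file with a netCDF library, which is left out here.

```python
import tempfile
from pathlib import Path


def map_folders_to_services(root):
    """Return {service_name: [coverage_name, ...]} for a root folder.

    Each project folder under root becomes a service and each *.nc file
    inside it becomes a coverage named after the file (without extension).
    """
    services = {}
    for project_dir in sorted(p for p in Path(root).iterdir() if p.is_dir()):
        services[project_dir.name] = sorted(f.stem for f in project_dir.glob("*.nc"))
    return services


def demo():
    """Build a throwaway folder tree with empty placeholder files and map it."""
    with tempfile.TemporaryDirectory() as root:
        for project, files in {
            "HTAP": ["model_a.nc", "model_b.nc"],
            "satellite": ["aot.nc", "readme.txt"],
        }.items():
            folder = Path(root) / project
            folder.mkdir()
            for name in files:
                (folder / name).touch()
        return map_folders_to_services(root)
```

In this sketch the non-netCDF file `readme.txt` is ignored, so the `satellite` service offers a single coverage.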

Best Practices:

Inside a file, each data variable should have identical dimensions, optionally missing a dimension.

If you want to publish two different resolutions of the same data, make two files, which make two separate coverages.

Temperature and relative humidity variables could be 3-dimensional, T(time,lat,lon) and RH(time,lat,lon), while the ground elevation variable can be 2-dimensional, elevation(lat,lon). This makes a lot of sense, since temperature is often calibrated with elevation. The system supports this: the time filter is ignored for elevation in this case.
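The first rule above ("identical dimensions, optionally missing a dimension") can be expressed as a small check. This is a sketch under the assumption that the variable with the most dimensions defines the dimensions of the coverage; the function name is hypothetical.

```python
def find_incompatible_variables(variables):
    """Return names of variables whose dimensions do not fit the coverage.

    variables maps a variable name to its tuple of dimension names.
    A variable fits if its dimensions are the coverage dimensions in the
    same order, possibly with some of them left out.
    """
    coverage_dims = max(variables.values(), key=len)
    incompatible = []
    for name, dims in variables.items():
        remaining = iter(coverage_dims)
        # "dim in remaining" consumes the iterator, so order is enforced.
        if not all(dim in remaining for dim in dims):
            incompatible.append(name)
    return incompatible


GOOD_FILE = {
    "T": ("time", "lat", "lon"),
    "RH": ("time", "lat", "lon"),
    "elevation": ("lat", "lon"),
}
```

For `GOOD_FILE` the result is an empty list; a variable on a different grid, for example with dimensions `("time", "y", "x")`, would be reported and belongs in a separate file and coverage.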

Software Implementation (Kari Hoijarvi, Michael Decker)

The OWS Web Service Framework

OWS as described here is a Python framework for creating OGC Web Services. The framework itself takes care of HTTP-related network issues: reading the request and returning the result over the network. Each service can be implemented as a library component. This separation of network-related issues from web-service-related issues is very important. Other network transport mechanisms like SOAP can be added without changing any of the implemented web services. The actual web services can be developed, tested and deployed independently.

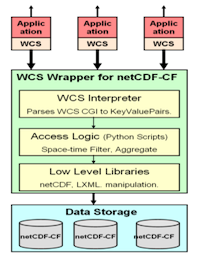

The WCS-netCDF Wrapper

The WCS wrapper for netCDF software has three layers. The low-level netCDF libraries provided by UNIDATA supply an Application Programming Interface (API) for netCDF files. These libraries can be called from application development programming languages such as Java and C. The API facilitates creating and accessing netCDF data files within an operating environment but not over the internet. In other words, the API is a standard library interface, not a web service interface to netCDF data.

However, such libraries are not adequate to access user-specified data subsets over the Web. For standardized web-based access, another layer of software is required to connect the high-level user queries to the low-level interface of the netCDF libraries. We call this interface the WCS-netCDF Wrapper. The main components of the wrapper software are shown schematically in Figure 4.4.

Fig 4.4 Layers of the WCS-netCDF Wrapper

At the lowest level are open source libraries for accessing netCDF and XML files. At the middle level are Python scripts for extracting spatial/temporal subset slices for specific parameters. At the third level is the WCS interpreter, which parses the WCS query from the URL and produces XML envelopes for returning data to the client.

The Capabilities and Description files are created automatically from the NetCDF files, but you can provide a template containing information about your organization, contacts and other metadata.

The WCS wrapper also has some useful libraries for Python programmers who need access to NetCDF files. The datafed.nc3 module provides wrappers to all the essential C calls, and the module datafed.iso_time helps in interpreting ISO 8601 time range encoding.

The service component is identified from the query parameter service=WCS or service=WFS. The framework parses the query, finds the service component, the WCS-netCDF wrapper, and passes the request to it. The service component now processes the query, creates the result, and returns it to the framework, which makes the actual http response.
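The dispatch step described above can be sketched as a registry lookup. This is not the framework's actual code: the component functions, the `SERVICES` registry and the `ServiceNotFound` exception are hypothetical names used only to show the separation between the framework and the service components.

```python
from urllib.parse import parse_qs


class ServiceNotFound(Exception):
    """Raised when the query names a service with no registered component."""


def wcs_component(params):
    # Placeholder for the WCS-netCDF wrapper; returns a description only.
    return "WCS handled request=%s" % params.get("request", "")


def wfs_component(params):
    # Placeholder for a WFS component.
    return "WFS handled request=%s" % params.get("request", "")


# Registry of service components, keyed by the value of the service parameter.
SERVICES = {"WCS": wcs_component, "WFS": wfs_component}


def dispatch(query_string):
    """Parse the query, find the service component and pass the request on."""
    raw = parse_qs(query_string)
    # OGC key-value-pair parameter names are case-insensitive, so the keys
    # are normalized to lower case; values are kept as sent.
    params = {key.lower(): values[0] for key, values in raw.items()}
    service = params.get("service", "")
    try:
        component = SERVICES[service]
    except KeyError:
        raise ServiceNotFound("no component for service=%r" % service)
    return component(params)
```

Because the components only receive a dictionary of parameters and return a result, they can be unit tested without any network code, which is the point of the separation.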

Python itself, as well as the libraries used, is portable between Windows and Linux, so the system was designed to be portable from the beginning. To avoid issues with small differences between the platforms, unit tests are regularly run under Windows and two Linux variants.

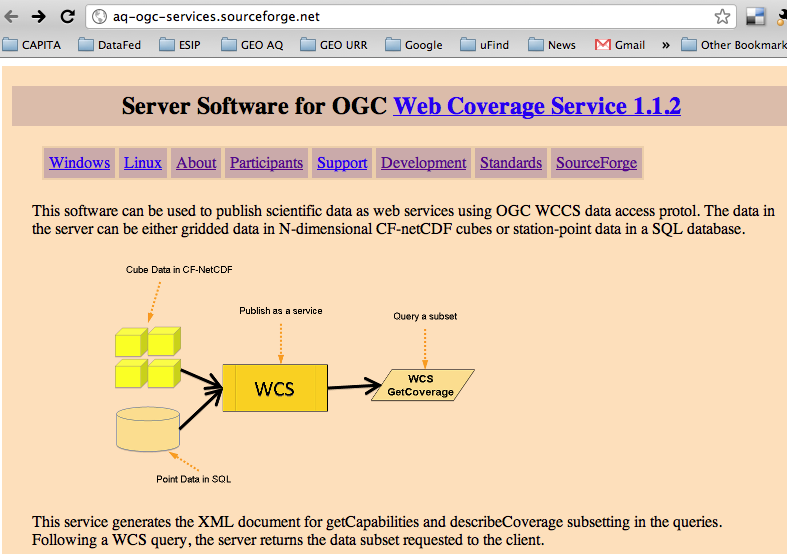

The software is hosted at http://aq-ogc-services.sourceforge.net/ and contains all the standard tools: downloads, tickets and discussions. The development page describes the use of darcs which is the version control system, and how to participate in the actual codebase.

The project has been developed mostly in test-driven development (TDD) fashion. First you write a test, then just enough code to make the test pass, and finally you refactor: find possible simplifications, especially code duplications, and reduce the code complexity to a minimum. TDD fits very well in distributed, cross-platform, stateless server software development, since it reaches nearly 100% line coverage in unit tests, with the following benefits:

- Helps catch your own logic errors.

- Helps catch porting errors due to differences between Windows and Linux variants.

- Helps communication between developers in a distributed team. A unit test is an executable document of expected behavior.

- Helps in refactoring, continuously keeping the code simple.

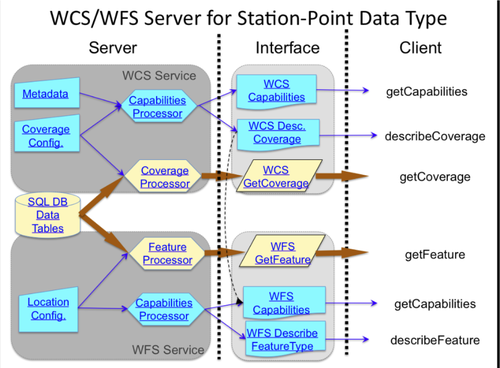

WCS Server for Station-Point Data Type

The WCS server for station-point monitoring data follows the same general pattern as the WCS server for grid data. The differences are highlighted below.

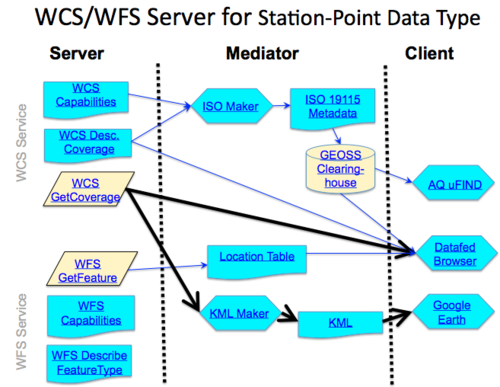

The architecture of the WCS server software for the station-point data type is shown in Figure 4.5. As in the case of the grid data type, the interface to the WCS service consists of two XML documents that describe the service. The client-server interaction is almost identical to that for the grid data type. However, WCS 1.1 has no good place for the Location Table in the describeCoverage response. Fortunately another service, WFS (Web Feature Service), is ideally designed to return the geographical metadata from surface-based monitoring networks consisting of fixed stations. WFS returns static geographical features and supports filters like "stations that record Ozone". With the information in the describeCoverage response and the location table, the client software can formulate a query for a subset of the monitoring stations' data. The getCoverage call consists of an array of query parameters encoded as key-value pairs, including station identifiers for the requested data, currently using WCS 1.1.

The functionality of the WCS/WFS server software consists of capabilities processors, which execute the capabilities/describeCoverage/describeFeature queries by turning the metadata, coverage configuration and location configuration into XML documents, and coverage/feature processors, which read the getCoverage/getFeature query, reformulate the query for local SQL data access, extract the requested data subset, reformat it as a CF-netCDF or CSV data package and return the requested payload to the client.

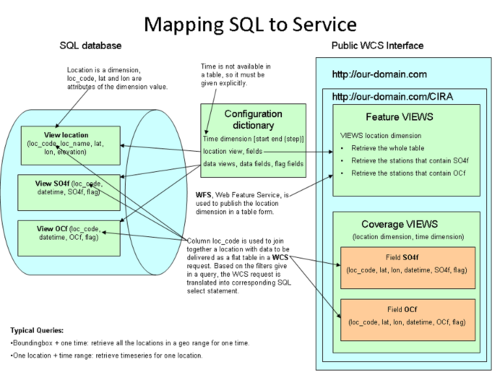

In the flow diagram, Figure 4.5, the thin blue lines represent the flow of metadata while the heavy brown arrows indicate the flow of data. Unlike the CF-netCDF data files, SQL databases do not have a corresponding CF convention. Consequently, the time dimension, location dimension table, data variable tables and fields, data variable units etc. must be provided by a separate configuration file.

Fig 4.5 WCS server software flow diagram for Station-Point data in a SQL database.

Further rationale for combining WCS and WFS for point data is given by Kari.

Description of Station-Point Data Server Components

Metadata: This is a text file filled out by a person. Contains Abstract, Contact Information, Keywords and any other documentation that is needed in classifying or finding the service. This file is used by the Capabilities Processor which incorporates this metadata into capabilities and coverage description documents.

Coverage Configuration for Points: This is a Python dictionary filled out by a person. It contains coverage names, the fields of each coverage, and the SQL table and column names for each field. The Capabilities Processor and the Coverage Processor use this configuration information.

Capabilities Processor: This is a component that produces the standard XML Capabilities and Data Description documents for WCS and WFS services. As input, it reads the Metadata and the Point Data Configuration. It is used to produce the results for GetCapabilities, DescribeCoverage and DescribeFeatureType queries.

Coverage Processor: This component parses the WCS query, translates it into SQL, extracts a subset of the data, and writes that into a CF-netCDF or CSV file. It is used in the WCS GetCoverage query.
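The translation from a WCS query to SQL can be sketched with an in-memory SQLite database. Everything specific here is an assumption made for illustration: the shape of the `POINT_CONFIG` dictionary, the table and column names (`location`, `o3_data`, `obs_time`, `o3_value`), and the sample rows. The actual configuration format is described in the project documentation, not here.

```python
import sqlite3

# Hypothetical coverage configuration: table and column names per field.
POINT_CONFIG = {
    "ozone_network": {
        "location_table": "location",
        "fields": {"o3": {"table": "o3_data", "column": "o3_value"}},
    }
}


def build_query(coverage, field, bbox, time_range):
    """Translate coverage, field, bounding box and time range into SQL."""
    config = POINT_CONFIG[coverage]
    field_config = config["fields"][field]
    # Table and column names come from the trusted configuration;
    # all values supplied by the client are bound as parameters.
    sql = (
        "SELECT d.loc_code, l.lat, l.lon, d.obs_time, d.{column} "
        "FROM {table} AS d JOIN {location} AS l ON l.loc_code = d.loc_code "
        "WHERE l.lon BETWEEN ? AND ? AND l.lat BETWEEN ? AND ? "
        "AND d.obs_time BETWEEN ? AND ? "
        "ORDER BY d.loc_code, d.obs_time"
    ).format(column=field_config["column"], table=field_config["table"],
             location=config["location_table"])
    min_lon, min_lat, max_lon, max_lat = bbox
    start, end = time_range
    return sql, (min_lon, max_lon, min_lat, max_lat, start, end)


def demo():
    """Run the generated query against a small made-up database."""
    connection = sqlite3.connect(":memory:")
    connection.executescript("""
        CREATE TABLE location (loc_code TEXT PRIMARY KEY, lat REAL, lon REAL);
        CREATE TABLE o3_data (loc_code TEXT, obs_time TEXT, o3_value REAL);
        INSERT INTO location VALUES ('A1', 38.6, -90.2), ('B2', 50.9, 6.4);
        INSERT INTO o3_data VALUES
            ('A1', '2009-01-01T00:00:00', 21.0),
            ('A1', '2009-02-01T00:00:00', 25.0),
            ('B2', '2009-01-01T00:00:00', 30.0);
    """)
    sql, args = build_query("ozone_network", "o3", (-125, 25, -65, 50),
                            ("2009-01-01T00:00:00", "2009-01-31T23:59:59"))
    rows = connection.execute(sql, args).fetchall()
    connection.close()
    return rows
```

In the demo, only the January observation of station A1 falls inside both the bounding box and the time range. Storing times as ISO 8601 text makes the `BETWEEN` comparison work on plain strings, which is a simplification a production database would likely replace with a native date type.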

WCS GetCapabilities: This is the top-level document of a service. It contains Metadata Data and a list of offered WCS Coverages. It is produced by the Capabilities Processor and used by clients.

WCS DescribeCoverage: This document is the detailed description of a WCS coverage. It lists the geo range and the location and time dimensions of the coverage, fields, and field information like units. The Capabilities Processor produces this at the client's request.

WCS GetCoverage: This is the main data query for a WCS service. The request contains Coverage identifier, Geographical BoundingBox and optionally, TimeSequence, fields, and filters for fields. The Coverage Processor for Points processes the query and produces data returned to the client.

SQL DB Data Tables: If you store your station point data in a normalized manner, and you have your database indexed, you most likely can serve the WCS data as is. The WCS Wrapper Configuration for Point Data explains with detailed examples how to create views in your station point database so that it can be served by simply aliasing the tables and fields to coverages. It also shows how to create views to the location and data tables.

Location Configuration: This is a Python dictionary created by a person. It contains the name and columns of the Location Table for Points. It is used by the Feature Processor for Points.

Feature Processor: This component reads the location table out of an SQL database. It gets the requested feature from the query and gets the relevant SQL table and column names from the Location Configuration information. Then it creates an SQL query, which is executed in the SQL database engine, and the resulting rows are written to a CF-netCDF or CSV file. Used for station point data in WFS GetFeature Query.

WFS Capabilities: This is the top-level document of a station point data location dimension. It contains Metadata Data and a list of location dimensions of offered WCS Coverages. It is produced by the Capabilities Processor and used by clients.

WFS DescribeFeature: The document describes the location table of a coverage in detail, containing the field names and types. It is produced by the Capabilities Processor and used by a client.

WFS GetFeature: This request produces the location table for a coverage, by way of the Feature Processor for Points. It can be used by any client.

Implementation of WCS Server for Station-Point Data

Point monitoring data is typically stored in relational databases using SQL servers. The database schemas are designed to accommodate the needs of each data system and vary considerably in design. However, in most SQL servers it is possible to query the database for the spatial and temporal subsetting required by WCS. In this sense, the SQL server serves the same purpose as the netCDF file system and associated libraries for gridded data described above.

The location dimension is a table or view which contains at least three fields: loc_code, lat and lon.

The time dimension has typically no table associated in the database, so it has to be stated explicitly:

- Periodic: The Web Map Service 1.3 convention start/end/period is used, for example 1995-04-22/2000-06-21/P1D. This type is recommended if all the stations have the same regular reporting schedule. If data is missing for some periods, the time coordinate is still there, but the data is missing.

- Enumerated: All the time dimension coordinates are given, for example 2009-01-01,2009-04-01,2009-07-01,2009-10-01. This type is recommended if all the stations have the same reporting times, but the intervals are irregular.

- Range: Just start/end, for example 2008-01-01/2008-12-13. This type must be used if the reporting times differ from station to station. Given only the range, there is no way to tell when the coverage actually has data. This means that in a WCS getCoverage request the TimeSequence parameter must be a time range.
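The three time dimension encodings can be told apart by their separators, as the following sketch shows. It is deliberately limited: it assumes date-only coordinates (`YYYY-MM-DD`) and only understands periods made of whole days and hours such as `P1D` or `PT6H`. The function names are hypothetical and this is not the datafed.iso_time module.

```python
import re
from datetime import datetime, timedelta

# Only day and hour periods are handled in this sketch, e.g. P1D, PT6H, P1DT12H.
_PERIOD = re.compile(r"^P(?:(\d+)D)?(?:T(?:(\d+)H)?)?$")


def parse_period(text):
    """Convert a simple ISO 8601 period into a timedelta."""
    match = _PERIOD.match(text)
    if not match or not any(match.groups()):
        raise ValueError("unsupported period: %r" % text)
    days, hours = (int(group) if group else 0 for group in match.groups())
    step = timedelta(days=days, hours=hours)
    if step <= timedelta(0):
        raise ValueError("period must be positive: %r" % text)
    return step


def parse_date(text):
    return datetime.strptime(text, "%Y-%m-%d")


def expand_time_dimension(text):
    """Return (kind, datetimes) for a time dimension declaration.

    periodic   -> every coordinate from start to end, inclusive
    enumerated -> the listed coordinates
    range      -> only the two end points, since the coordinates are unknown
    """
    if "," in text:
        return "enumerated", [parse_date(part) for part in text.split(",")]
    parts = text.split("/")
    if len(parts) == 3:
        start, end = parse_date(parts[0]), parse_date(parts[1])
        step = parse_period(parts[2])
        times = []
        current = start
        while current <= end:
            times.append(current)
            current += step
        return "periodic", times
    if len(parts) == 2:
        return "range", [parse_date(parts[0]), parse_date(parts[1])]
    raise ValueError("unrecognized time dimension: %r" % text)
```

For a range declaration the function can only return the end points, which mirrors the point made above: the client cannot know where the data actually is and has to request a time range.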

Fig 4.5 Mapping a SQL database of station time series data to WFS/WCS services.

Due to the flexibility of SQL, it is possible to take a properly normalized database and augment it with SQL views, after which configuring a coverage is simple: just point to a view, and give the field names that need to be queried. Based on this server design, virtually all the local effort for a given server can be focused on the preparation of a suitably configured SQL database.
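As a small illustration of aliasing with a view, the sketch below exposes an existing table under the column names loc_code, lat and lon. The source table `sites` and its columns are made up for the example, and SQLite is used only because it ships with Python; the same `CREATE VIEW` idea applies to other SQL servers.

```python
import sqlite3


def demo_location_view():
    """Create a view that renames existing columns to loc_code, lat, lon."""
    connection = sqlite3.connect(":memory:")
    connection.executescript("""
        -- Hypothetical existing table with its own naming scheme.
        CREATE TABLE sites (
            site_id TEXT PRIMARY KEY,
            latitude REAL,
            longitude REAL,
            site_name TEXT
        );
        INSERT INTO sites VALUES ('A1', 38.6, -90.2, 'Example site');

        -- The view aliases the columns without copying or changing the data.
        CREATE VIEW location AS
            SELECT site_id AS loc_code,
                   latitude AS lat,
                   longitude AS lon,
                   site_name
            FROM sites;
    """)
    cursor = connection.execute("SELECT * FROM location")
    columns = [column[0] for column in cursor.description]
    rows = cursor.fetchall()
    connection.close()
    return columns, rows
```

The original table stays untouched, so existing applications keep working while the view presents the location dimension in the expected shape.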

The maintenance of the WCS server for continuously evolving data sets is also automatic. For instance, as new observations are acquired the new time slice is appended to the SQL database and the configuration extraction code automatically updates the WCS capabilities and coverage documents.

A more detailed description of how to configure point data for WCS is in WCS Wrapper Configuration for Point Data.

Best Practices:

If your monitoring network has the same collection locations, and the collection interval is the same, make each collection parameter a field in the same coverage. The time dimension must be the same. The location dimension must have the same stations, but it is possible to use WFS to filter out stations that do not collect a certain data field.

If you have hourly data and daily aggregated data, make them two separate coverages.

If you have data from two different location networks, make them separate coverages.

Additional Services

The WCS server package also provides additional services that support interfacing the WCS data access service with catalogs, workflow engines for processing and visualization services.

Detailed Descriptions of Individual Components.

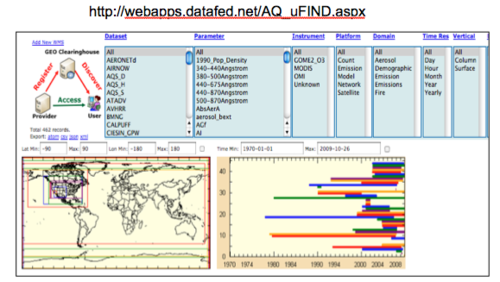

GEOSS Clearinghouse is the engine that drives the entire system. It connects directly to the various GEOSS components and services, collects and searches their information and distributes data and services. The AQ Community records can be found in the clearinghouse, and AQ applications like uFIND search the clearinghouse.

Location Table: This table describes the location dimension of station point data. It contains at least the loc_code, lat and lon columns and, optionally, more detailed information. The data tables can then be normalized; they only need the loc_code to identify the location from which the data came. The Feature Processor for Points returns this location table. The Location Configuration for Points publishes this table to the framework.

KML Maker: This is a DataFed service that produces KML out of grid, point, or image data. The data can be produced with WCS, WMS or any other service that produces results via HTTP GET. The resulting KML can be viewed with Google Earth.

KML is a file format used to display geographic data in an Earth browser such as Google Earth, Google Maps, and Google Maps for mobile. KML uses a tag-based structure with nested elements and attributes and is based on the XML standard. All tags are case-sensitive and must appear exactly as they are listed in the KML Reference. The Reference indicates which tags are optional. Within a given element, tags must appear in the order shown in the Reference.
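To make the tag-based structure concrete, the sketch below writes a minimal KML document with one Placemark per station using Python's standard XML library. It is not the DataFed KML Maker; the function name and the station tuple layout (loc_code, lat, lon) are assumptions for illustration.

```python
import xml.etree.ElementTree as ET

KML_NS = "http://www.opengis.net/kml/2.2"


def stations_to_kml(stations):
    """Return a KML string with one Placemark per (loc_code, lat, lon) tuple."""
    # Serialize the KML namespace as the default namespace (no prefix).
    ET.register_namespace("", KML_NS)
    kml = ET.Element("{%s}kml" % KML_NS)
    document = ET.SubElement(kml, "{%s}Document" % KML_NS)
    for loc_code, lat, lon in stations:
        placemark = ET.SubElement(document, "{%s}Placemark" % KML_NS)
        # Tag names are case-sensitive: "name", "Point", "coordinates".
        ET.SubElement(placemark, "{%s}name" % KML_NS).text = loc_code
        point = ET.SubElement(placemark, "{%s}Point" % KML_NS)
        # KML coordinates are written as longitude,latitude.
        ET.SubElement(point, "{%s}coordinates" % KML_NS).text = "%s,%s" % (lon, lat)
    return ET.tostring(kml, encoding="unicode")


EXAMPLE_KML = stations_to_kml([("A1", 38.6, -90.2)])
```

Note the coordinate order: KML expects longitude before latitude, which is the opposite of how the location table columns are usually listed.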

Google Earth lets you fly anywhere on Earth to view satellite imagery, maps, terrain, 3D buildings, from galaxies in outer space to the canyons of the ocean. You can explore rich geographical content, save your toured places, and share with others.

AQ uFIND: This is a web program used to find WMS and WCS services. It gets its contents by reading ISO 19115 metadata documents from the GEOSS Clearinghouse Service Registry. The user interface filters the service list by keywords such as Parameter, Domain and Originator. The DataFed Browser can then be used to view the service data.

DataFed Browser is a web program for viewing WCS and WMS services.

Hosting the Open-Source WCS Server Project at SourceForge

The approach of this software project is to promote the idea of standards based co-operation instead of closed software development. To emphasize common ownership, the project is hosted at sourceforge.net, which is a popular place to manage open-source projects. Some of the benefits of having the project at SourceForge.net are:

Trustworthiness. SourceForge takes viruses and malware very seriously, and users give feedback. Downloading an established project with plenty of good comments is safer than downloading code from some unknown site.

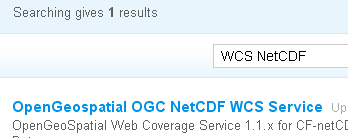

Visibility. If you search SourceForge with ?q=WCS+NetCDF you will find this project.

Project Web Pages. SourceForge hosts the project pages at a neutral web address. Any project developer can edit these.

The address is http://aq-ogc-services.sourceforge.net/

These project pages provide the complete documentation of the project itself.

Project Home Page. This is the starting point to the pages that are used in the technical participation itself.

http://sourceforge.net/p/aq-ogc-services/home/

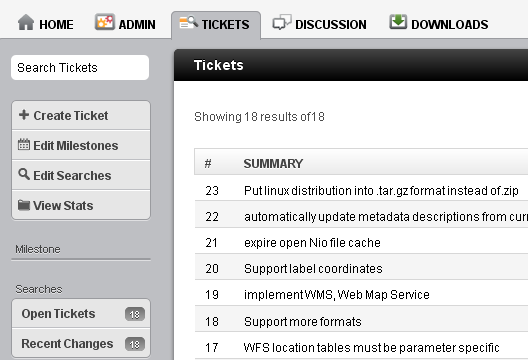

Tickets: Every project needs issue tracking. Whether you are a developer, who needs to communicate with other developers, or just a casual user who has a feature request or a defect to report, this is the place to go. Explore the open and closed issues.

The WCS ticket system http://sourceforge.net/p/aq-ogc-services/tickets/ is used actively by the developers.

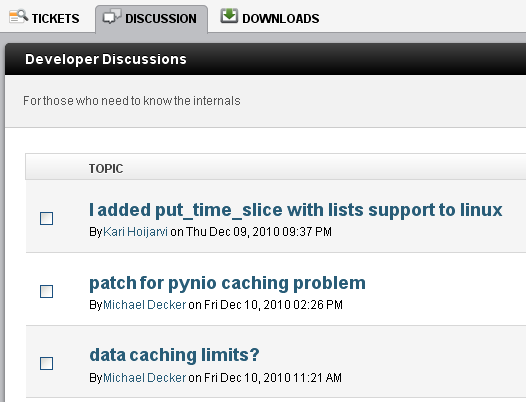

Discussions: Email gets lost. From a mailing list archive you can search relevant information.

Discussions home is http://sourceforge.net/p/aq-ogc-services/discussion/. There are three discussion lists:

- General is a place to ask any question.

- Developer discussions are for brainstorming about deep technical details.

- Announcements is a low-traffic list to inform about new versions.

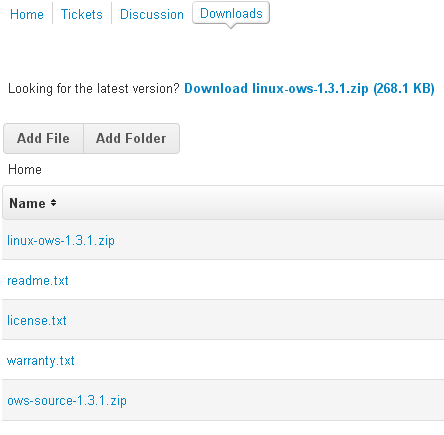

Downloads: Here you can download the actual code packages. The download center http://sourceforge.net/projects/aq-ogc-services/files/ contains zipped files for those who want to install the system. Depending on whether you are running Windows or Linux, and serving cube data or point data, you can choose the download package that fits best.

SourceForge is a large-scale replication system that puts the project zip files on numerous mirror computers. This improves availability: you can download the binary even if sourceforge.net itself is down for maintenance.

SourceForge requires an open-source license. The WCS service is released under the MIT License, which is a simple and permissive license: you can use this code in any project, including proprietary software.