NASA ROSES08: Regulatory AQ Applications Proposal - Technical Approach


Technical Approach

Description of EE DSS

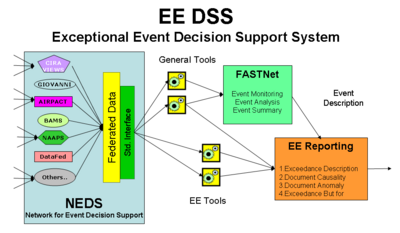

The main purpose of this project is to support the implementation of EPA's new Exceptional Event (EE) Rule by developing and delivering a suitable Exceptional Event Decision Support System (EE DSS). The functionality of the system includes: (1) detection and description of EEs, (2) preparation of EE flag justification reports, and (3) evaluation and approval of the EE flags by Regional and Federal EPA. The corresponding activities supported by the EE DSS are accessing and processing data, analyzing events and preparing EE justification reports. These tasks will be accomplished by the three major components of the EE DSS listed below:

- A data network, the Network for Event Decision Support (NEDS): an infrastructure for accessing and integrating distributed EE-relevant data and models

- A networked community of analysts, FASTNET, for detecting, analyzing and describing exceptional events

- The EE Reporting Facility, a comprehensive set of tools and methods for preparing and evaluating EE justification reports.

Working together, the three components of the EE DSS constitute an end-to-end information processing system that takes observations as inputs and produces "actionable" knowledge necessary for EE decision making. The knowledge derived from observations and models is in the form of evidence that an exceedance would not have occurred but for the impact of the exceptional event. Unlike a traditional monolithic, closed "stove pipe" DSS, the proposed networked system will follow a Service Oriented Architecture (SOA). In fact, the EE DSS will be a "network of networks": in NEDS, the data will be distributed through a network of providers; in FASTNET, the collaborating analysts will form a network of analysts. It is also anticipated that, with time, the EE regulatory process will include a network of State agencies and Regional and Federal EPA offices.

Major components of the proposed EE DSS have been developed over the past decade in several projects. At Washington University, these projects include DataFed (supported by NSF, EPA and NASA), FASTNET (supported by the RPOs) and SHAIRED (refs), as well as work conducted by CAPITA while supporting EPA in the preparation of the EE Rule itself. Similar developments by the Co-Investigators and collaborating partners have also produced an impressive stock of data, tools and methods relevant to the EE DSS. The new components of the proposed system are the EE-specific tools in the and the Exceptional Event Reporting Facility. (note from Rich's letter)

Given the years of experience in developing and using these components, the main challenge of this ambitious project will be "connecting the pieces" and enabling the networks of autonomous nodes to produce societal benefits in the form of better air quality management. In this sense, the proposed DSS is a contribution toward the implementation of the Global Earth Observing System of Systems (GEOSS). In fact, Exceptional Events is an air quality scenario in the 2008 GEOSS Architecture Implementation Pilot (ref). The EE DSS will facilitate integrating and utilizing multi-sensor monitoring networks and will enhance the connections among the key U.S. agencies: NASA (science and technology), NOAA (operations) and EPA (regulation). Equally important will be the connections and knowledge sharing among the people involved: data providers, air quality analysts and regulators.

Network for Event Decision Support (NEDS)

The data required for Exceptional Event analysis will be linked and federated using NEDS. The key roles of the federation infrastructure are to (1) facilitate registration of the distributed data in a user-accessible catalog; (2) ensure data interoperability using international standard protocols; and (3) provide a set of basic tools for data exploration and analysis.

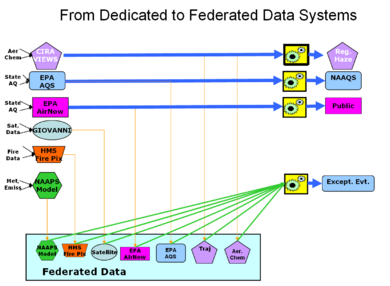

Data Federation Architecture

Data federation is accomplished by turning data stored on and exposed through a server into a data service. Exposing data as a service makes it accessible to other computers through standard interfaces and communication protocols. Data providers "publish" data in a catalog, users "find" data in the catalog and, when ready, they connect or "bind" to the selected data access service. In NEDS, federating data resources can and will be pursued as a gradual, non-disruptive process in which providers expose a self-determined fraction of their data resources as web services. Users of the federated data can then access the federated resource pool through suitable catalogs, as shown in Fig... (add catalog to pic). From the user's perspective, federating the data makes the physical location irrelevant. This loosely coupled networked architecture is consistent with the "publish-find-bind" triad of Service Oriented Architecture and also supports the GEOSS motto: "Any Single Problem Requires Many Data Sets. Any Single Data Set Serves Many Applications." (REF - GEO Sec. ). In the case of NEDS, for example, all the data needed for EE analysis are accessed from the federated data pool; the EE program does not hold any data of its own.
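To make the "publish-find-bind" triad concrete, the following is a minimal in-memory sketch in Python. It is an illustration under stated assumptions, not the NEDS or DataFed catalog: the `Catalog` and `ServiceRecord` classes, their method names and the `demo_pm25` dataset are all hypothetical, and a real catalog would be a network service whose `bind` step returns a connection to a remote WMS/WCS endpoint rather than a local function.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class ServiceRecord:
    """Catalog entry describing one published data-access service (hypothetical schema)."""
    dataset_id: str
    provider: str
    keywords: List[str]
    access: Callable[..., dict]  # the data-access service a user binds to


class Catalog:
    """Toy catalog illustrating publish-find-bind; not the NEDS/DataFed API."""

    def __init__(self) -> None:
        self._records: Dict[str, ServiceRecord] = {}

    def publish(self, record: ServiceRecord) -> None:
        # Provider registers its service; the data itself stays with the provider.
        self._records[record.dataset_id] = record

    def find(self, keyword: str) -> List[str]:
        # User searches the catalog by keyword and gets back dataset identifiers.
        return sorted(r.dataset_id for r in self._records.values() if keyword in r.keywords)

    def bind(self, dataset_id: str) -> Callable[..., dict]:
        # User connects to the selected service; its physical location is hidden.
        return self._records[dataset_id].access


def demo_pm25_service(bbox, time):
    # Hypothetical stand-in for a remote provider: echoes the query, returns no real data.
    return {"dataset": "demo_pm25", "bbox": bbox, "time": time}


catalog = Catalog()
catalog.publish(ServiceRecord("demo_pm25", "hypothetical provider", ["PM2.5", "surface"], demo_pm25_service))
hits = catalog.find("PM2.5")
service = catalog.bind(hits[0])
result = service(bbox=(-125, 24, -66, 50), time="2000-01-01")  # illustrative query
```

The point the sketch tries to capture is that the catalog stores only descriptions and access points; the data stay with the provider, which is what makes physical location irrelevant to the user.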

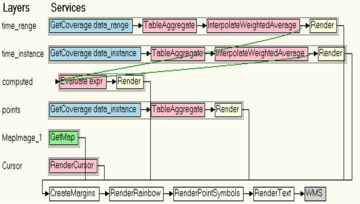

Fig.xx The services are organized as a stack of workflow chains. Each row is a data layer whose displayed values are computed through a service chain, which starts with data access, continues with several processing services and ends with a rendering service.

The Service Oriented Architecture (SOA) of DataFed is used to build web applications by connecting web service components (e.g. services for data access, transformation, fusion, rendering, etc.) in a Lego-like assembly, as illustrated in Fig. 2b. The generic web tools created in this manner include data browsers for spatial-temporal exploration and tools for transport analysis and spatial-temporal pattern analysis. The proposed tools specific to the EE Rule will also be assembled through flexible web service composition.
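The Lego-like composition of services can be sketched as plain function composition, where each service consumes the output of the previous one. The three component services below (`access`, `filter_exceedances`, `render_text`) are hypothetical stand-ins for DataFed's access, processing and rendering services, and the site values are illustrative, not measurements.

```python
from functools import reduce
from typing import Callable


def compose_chain(*services: Callable) -> Callable:
    """Return a service that pipes its input through `services` left to right."""
    return lambda data: reduce(lambda acc, service: service(acc), services, data)


# Hypothetical component services; each consumes the previous output.
def access(query):
    # Stand-in for data access: fixed illustrative site values (ug/m3), not real data.
    return [("site_a", 12.0), ("site_b", 48.0), ("site_c", 37.5)]


def filter_exceedances(records, threshold=35.0):
    # Processing service: keep samples above the daily standard.
    return [(site, value) for site, value in records if value > threshold]


def render_text(records):
    # Rendering service: a text "layer" instead of an image.
    return "\n".join(f"{site}: {value:.1f}" for site, value in records)


exceedance_layer = compose_chain(access, filter_exceedances, render_text)
output = exceedance_layer({"layer": "demo"})
```

Because every service shares the same calling convention, a new data layer is just a different selection or ordering of services, which mirrors the stack of workflow chains in the figure.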

The software connections for NEDS will be established using standard interfaces, including OGC WMS for image data and WCS for point and grid datasets. This universal access is accomplished by 'wrapping' the heterogeneous data, a process that turns data access into a standardized web service. Through the wrappers, datasets can be queried by spatial and temporal attributes and processed into higher-grade data products. Connectivity will be established either directly, through peer-to-peer connections, or through mediators such as DataFed or GIOVANNI. Due attention will be given to the sensitivities of the data providers, such as proper attribution and use constraints. The needs of the data users will be addressed by proper metadata, including data lineage, data quality and other detailed information. The creation of user-contributed metadata and the communication between data providers and data users will be facilitated through metadata workspaces, i.e. hybrid (structured/unstructured) wiki pages dedicated to each dataset.
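As an example of what a standard interface provides, the sketch below assembles an OGC WMS 1.1.1 GetMap request, the kind of URL a wrapped image dataset would answer. The endpoint `https://wms.example.org/wms` and the layer name `hypothetical_aod_layer` are placeholders, not actual NEDS services; the parameter names follow the WMS 1.1.1 specification, and the optional `TIME` parameter only works if the server advertises a time dimension for the layer.

```python
from urllib.parse import urlencode


def wms_getmap_url(endpoint, layer, bbox, time=None, width=800, height=400):
    """Build an OGC WMS 1.1.1 GetMap request URL.

    bbox is (minx, miny, maxx, maxy) in EPSG:4326 longitude/latitude.
    TIME is the optional WMS time dimension; servers honour it only for
    layers that declare a time dimension in their capabilities.
    """
    params = {
        "SERVICE": "WMS",
        "VERSION": "1.1.1",
        "REQUEST": "GetMap",
        "LAYERS": layer,
        "STYLES": "",  # required parameter; empty means default style
        "SRS": "EPSG:4326",
        "BBOX": ",".join(str(v) for v in bbox),
        "WIDTH": width,
        "HEIGHT": height,
        "FORMAT": "image/png",
    }
    if time is not None:
        params["TIME"] = time
    return f"{endpoint}?{urlencode(params)}"


# Placeholder endpoint and layer; the date is illustrative.
url = wms_getmap_url("https://wms.example.org/wms", "hypothetical_aod_layer",
                     (-125, 24, -66, 50), time="2000-01-01")
```

A WCS GetCoverage request for gridded data follows a similar key-value pattern with its own set of parameters.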

Further development of the general NEDS infrastructure is beyond the scope of this project, since that development can be leveraged from other ongoing projects, such as SHAiRED, the NASA REASON project at Washington University. However, special effort will be devoted to the core NEDS data-sharing network, a subset of the available pooled AQ data resources that contains the data-serving nodes of particular relevance to exceptional event analysis. In this core subset, the data flow will be harmonized by eliminating connectivity glitches and will be well tested for persistence and robustness. Such a robust network can be the basis for distributed, compound applications built through the combined effort of multiple organizations. Outstanding examples of such compound applications include the Combined Aerosol Trajectory Tool (CATT) and the MODIS-Airnow tool in GIOVANNI. Details on the architecture can be found in a recent paper: DataFed: An Architecture for GEOSS.

Collaborating Participants in NEDS

The EE DSS project will achieve its goals primarily by linking, harmonizing, integrating and otherwise 'connecting the pieces' contributed by its autonomous core partners, represented by the projects GIOVANNI, NAAPS, VIEWS, AIRPACT, BAMS and DataFed. Working together, these NEDS nodes supply the observations and model results from which the EE DSS derives evidence for EE decision making.

Loosely coupled connections to a network of data providers and data analysts along with an open, inclusive approach will promote the creation of an agile, responsive DSS that is capable of responding to the challenging and varied requirements of the Exceptional Event regulatory process. In order to satisfy the operational requirements of the end-user organizations (States, Regional and Federal EPA), the open, loosely-coupled networks will be fortified by a core data network and a core analyst network that can deliver required data, tools and analysis products to the EE DSS customers. The core networks will be composed of the co-investigators and collaborators of this project.

Co-Investigators

VIEWS: The Visibility Information Exchange Web System (VIEWS) is an online decision support system developed to help federal land managers (FLMs) and states evaluate air quality and improve visibility in federally protected ecosystems according to the stringent requirements of EPA's Regional Haze Rule and the National Ambient Air Quality Standards. The Technical Support System (TSS) is an extended suite of analysis and planning tools designed to help planners develop long-term emissions control strategies for achieving natural visibility conditions in Class I Areas by 2064. VIEWS/TSS integrates numerous air quality and emission datasets into a single, highly optimized data warehouse that enables users to explore, merge and analyze diverse datasets. For this EE DSS project, the VIEWS/TSS program will make available the Air Quality data ???… and may participate in event analyses (?? as desired, no hard commitment) and provide some of its decision support services/tools (?? as desired, no hard commitment).

NAAPS: The Navy Aerosol Analysis and Prediction System (NAAPS) is a global operational aerosol, air quality and visibility forecast model that generates six-day forecasts of sulfate, dust and smoke and the resulting visibility conditions worldwide. NAAPS is particularly useful for forecasting dust events downwind of the large deserts and the transport of large-scale smoke plumes originating from boreal and tropical forests and savannas. NAAPS includes innovative assimilation of MODIS, Deep Blue, AERONET and CALIPSO data. NAAPS' strength is in forecasting and simulating the timing of events, which is useful both to the AQ forecaster, in understanding today's conditions and forecasting tomorrow's air quality, and to the analyst studying an EE. Simulations that identify the contribution from outside the US will also be considered for providing boundary conditions to regional AQ models such as AIRPACT-3 or CMAQ.

GIOVANNI: The GES DISC (Goddard Earth Sciences Data and Information Services Center) Interactive Online Visualization ANd aNalysis Infrastructure (GIOVANNI) is a Web-based application that provides standards-based access (WMS, WCS, OPeNDAP) to NASA Earth science data, including MODIS, MISR, TOMS, MLS, CALIOP and GOCART. GIOVANNI will enable air quality scientists to identify regional air pollution sources and sinks, and will help in tracking the intercontinental transport of atmospheric trace gases and aerosols from industrial pollution plumes, smoke or dust. GIOVANNI is also developing tools for vertically resolved visualization of aerosol pollution by combining MODIS AOD and CALIOP extinction data; this vertical distribution will help air quality scientists understand the vertical transport of aerosols.

AIRPACT: The AIRPACT-3 daily air-quality forecasting system offers an excellent resource for the EE DSS in analyzing exceptional events such as smoke from wildfires or dust storms. To support the development of the proposed EE DSS, Vaughan will make AIRPACT-3 results available for the evaluation of air-quality events of interest as potential exceptional events. Vaughan will also participate in the analysis of candidate events by operating the AIRPACT-3 modeling system with alternative emissions scenarios or boundary conditions, and in the evaluation of the contribution of specific sources of interest. For example, for a candidate EE involving wildfires in the Northwest, AIRPACT-3 can provide air-quality simulation results for scenarios both including and excluding regional forest fires, whose emissions are already automatically included in AIRPACT-3 simulations.
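The "including and excluding fires" comparison amounts to a brute-force (zero-out) sensitivity calculation: the fire contribution in each grid cell is the difference between the two scenario runs. The sketch below assumes the two AIRPACT-3 outputs have already been read into dictionaries keyed by grid cell; the cell names and concentrations are illustrative, not model results, and the function names are hypothetical.

```python
def fire_contribution(with_fires, without_fires):
    """Per-cell PM2.5 (ug/m3) attributed to fires: run with fires minus run without."""
    return {cell: with_fires[cell] - without_fires[cell] for cell in with_fires}


def fire_share(with_fires, without_fires):
    """Fraction of modeled PM2.5 attributed to fires (0.0 where the modeled total is zero)."""
    contrib = fire_contribution(with_fires, without_fires)
    return {cell: (contrib[cell] / with_fires[cell]) if with_fires[cell] else 0.0
            for cell in contrib}


# Illustrative scenario outputs for two hypothetical grid cells.
with_fires = {"cell_1": 30.0, "cell_2": 12.0}
without_fires = {"cell_1": 10.0, "cell_2": 11.0}
contribution = fire_contribution(with_fires, without_fires)
share = fire_share(with_fires, without_fires)
```

Because PM chemistry is nonlinear, the difference between the two runs is the change caused by removing the fires, which need not equal the fire-emitted mass; it is, however, the counterfactual quantity that the "but for" test asks about.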

BAMS: Baron Advanced Meteorological Systems (BAMS) is developing a mission-critical, commercial, national-scale air quality forecast decision support system (AQF-DSS). Operational forecasts are produced using the MM5/SMOKE/MAQSIP-RT and CMAQ models, which include simultaneous assimilation of real-time satellite and surface aerosol observations. The new MODIS Deep Blue retrievals will improve initial and boundary conditions over CONUS, while the land-surface modeling system will better characterize the surface relative humidity critical to hygroscopic aerosol effects. The model simulations will improve the ability to distinguish exceptional events from events that are rightly classified as violations of the standard. BAMS will make model results available for the evaluation of possible exceptional events and will develop a boundary-condition interface to NAAPS to better capture the effect of long-range transport from outside the regional-to-local domain where the event occurred.

DataFed: DataFed is a distributed web-services-based computing environment for accessing, processing and rendering environmental data in support of air quality management and science. The flexible, adaptive environment facilitates the creation of user-driven data processing value chains. DataFed non-intrusively wraps datasets for access by standards-based web services. Its federated data pool consists of over 100 datasets and the tools have been applied in several air pollution projects. DataFed contributes air quality data (as services) to the shared data pool through the GEOSS Common Infrastructure. It also hosts a Decision Support System (DSS) for Exceptional Event analysis.

Collaborators

PULSENet: PULSENet is a standards-based sensor web framework for access, display, processing and dissemination of sensor data and for tasking control of sensors. PULSENet is part of a NASA ESTO project titled Sensor-Analysis-Model Interoperability Technology Suite (SAMITS), which is developing a package of standards, technologies, methods, use cases and guidance for implementing networked interaction between sensor webs and forecast models. PULSENet will augment the EE DSS by providing standards-based interfaces to services suitable for workflow chaining in advanced application testing that ties together atmospheric, air quality and fire sensors with smoke forecasting models.

Hazard Mapping System: The NOAA/NESDIS Hazard Mapping System (HMS) integrates fire observations from multiple satellite sensors, human observations and other sources. The HMS dataset is particularly useful for determining the extents of major fires and the spatial extent of smoke plumes identified by human interpretation of satellite images. These data are provided as a web service by NOAA's National Geophysical Data Center.

BlueSky: BlueSky is a fire and smoke prediction tool used by land managers to facilitate wildfire containment and prescribed burning programs while minimizing impacts to human health and scenic vistas. BlueSky links computer models of fuel consumption and emissions, fire, weather, and smoke dispersion into a system for predicting the cumulative impacts of smoke from prescribed fires, wildfires, and agricultural fires. For the EE DSS, BlueSky may provide fire location and smoke forecasts that are prepared routinely as part of the interagency fire management program.

National Park Service: The National Park Service (NPS) has conducted air quality monitoring for the past 25 years for the purpose of protecting visual air quality near national parks. NPS is also performing extensive source and receptor analyses to establish the contribution of different sources. For the EE DSS the NPS contributions would include access to the IMPROVE data, air mass back trajectories for each of the monitoring sites, plume simulations of the smoke dispersion as well as other analysis tools and products. The data gathered through the EE DSS may also be beneficial for the air quality assessments and decision-making processes conducted in NPS.

End Users

State/RPOs: The States perform the flagging of EE-influenced samples and also prepare the EE flag justification reports. Hence, the States are the most important users of EE DSS. Their inputs into the design, implementation and testing of the EE DSS will be crucial. In order to address more complex and/or regional issues, a group of States may cluster and form Regional Organizations such as the Regional Planning Organizations (RPOs) for Regional Haze.

Regional EPA: The monitoring data samples that are flagged as exceptional will be evaluated by Regional EPA based on the EE Flag Justification reports submitted by the States. The flag evaluation will also incorporate the use of the EE DSS.

Federal EPA: EPA is the driver for the introduction of the Exceptional Event Rule and also the evaluator of its implementation. Throughout the development of the EE DSS and its adoption by the States and Regional EPA offices, EPA will provide both guidance and evaluation. The Federal EPA also ensures consistency among the Regional EPA evaluations.

GEOSS: The data-sharing infrastructure of GEOSS is a mechanism that will allow the publication, discovery and reuse of Earth Observation resources at an international scale. The role of GEOSS in this project is to provide an architectural framework, through the GEOSS Common Infrastructure, as well as GEOSS ideals for sharing and developing trust. Conversely, the EE DSS, and in particular NEDS, will serve as an important example of the system-of-systems approach.

FASTNET: Community Event Analysis Network

Full understanding and characterization of air pollution events is a very labor-intensive, subjective and sporadic process. Collecting and harmonizing the variety of data sources, describing events in a coherent, compatible manner and ensuring that significant events do not 'fall through the cracks' is a challenging task for research groups, and even more so for State and Regional air quality analysts. Short-term events are detected and characterized by monitoring a wide range of observations from real-time surface and satellite sensors, air quality simulations and forecast models. The distributed data, along with the tools for data exploration and processing, are provided through the constituent nodes of NEDS.

Initial event analysis can be performed in real time while, by necessity, the detailed event characterization that includes slower data streams is conducted post facto. Conceivably, the event analyses performed in the community workspace could serve as triggers and guides for the States in deciding which station data to flag. Real-time continuous PM monitoring provides the record for short-term event detection. Time-integrated and less frequent speciated PM samples provide the chemical signatures of specific aerosol types, such as smoke or dust. Satellite images delineate both the synoptic-scale and the fine-scale features of PM events under cloud-free conditions. The full integration of these diverse PM data from a variety of measurements is still a major challenge for the data analyst. Air quality models that assimilate the various observations could serve as an effective data integration platform. Unfortunately, the science and technology of such data assimilation are not yet available to the modeling and data analysis communities.
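Short-term event detection from a continuous PM record can be illustrated with a simple spike detector. This is a sketch of one common approach (a trailing median baseline with a median-absolute-deviation threshold), not a method prescribed by FASTNET; the window length, the multiplier `k` and the `min_excess` floor are illustrative assumptions, and the series is synthetic.

```python
from statistics import median


def detect_spikes(series, window=7, k=3.0, min_excess=10.0):
    """Return indices where a value jumps well above its trailing baseline.

    Baseline = median of the previous `window` values; spread = median
    absolute deviation (MAD) of that window. A value is flagged when its
    excess over the baseline exceeds both k * MAD and `min_excess` (ug/m3).
    All defaults are illustrative, not tuned values.
    """
    flags = []
    for i in range(window, len(series)):
        past = series[i - window:i]
        baseline = median(past)
        mad = median([abs(v - baseline) for v in past])
        if series[i] - baseline > max(k * mad, min_excess):
            flags.append(i)
    return flags


hourly_pm25 = [10.0] * 10 + [60.0] + [10.0] * 3  # synthetic, not real data
spikes = detect_spikes(hourly_pm25)
```

Median statistics keep a single spike from inflating the baseline and spread used to judge the following hours, which a running mean and standard deviation would not.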

We propose that general event analysis be conducted by a virtual community of analysts. The FASTNET (Fast Aerosol Sensing and Tools for Natural Event Tracking) concept was introduced by Poirot et al. (2005). It began as an air pollution event detection and characterization project that includes a set of tools and methods as well as a community of analysts. FASTNET was initially developed and supported by the Regional Planning Organizations (RPOs) for the characterization of major natural events relevant to the Regional Haze Rule. Forest fire smoke and windblown dust are particularly interesting events due to their large emission rates over short periods of time, continental- and global-scale impacts and unpredictable, sporadic occurrence. Such dust and smoke events are also the dominant causes of Exceptional Events under the EE Rule.

The FASTNET concept will be adapted to the specific needs of the EE Rule. The FASTNET for the EE DSS will consist of a core group of analysts whose effort will ensure that:

- Major EEs with exceptional impacts on many sites will be analyzed and described so that individual States can use well-documented, authoritative event descriptions.

- The core group will be available for consultations or to perform special analyses for difficult EE cases identified by the State, Regional or Federal offices.

- The core group will also guide the development of additional tools and methods for the general characterization of EEs by identifying new data sources, combining and fusing multi-sensory data and interacting with the event modelers and forecasters.

Community interaction for event characterization is particularly vital since aerosol events are being identified, recorded and, to various degrees, analyzed by diverse groups for many different purposes. We propose to "harvest" the event analyses conducted by these groups and to combine them with the analyses done by the FASTNET community serving NEDS. In the past, the virtual community of analysts has been gathered by ad hoc means. It is hoped that, through this project, the virtual workgroups will receive more extensive and powerful tools and technical support in the form of Analyst Consoles, anomaly detection tools, collaboration space and more effective communication. This collaboration support will allow better harvesting of the experience and insights of the broader interested community.

The FASTNET virtual community of analysts will conduct much of its business on an open wiki workspace. In the FASTNET workspace, each event will be assigned an EventSpace, which will combine information on data, interpretation, discussion and a community-produced event summary (see example event workspace). A classic event workspace is the one for the 1998 Asian Dust Event (Ref- asian dust). EventSpaces for more recent events include Georgia Smoke (ref) and Southern California Fires (ref).

The searchable Event Catalog facilitates the finding and reuse of past event analyses. The organization, statistics and spatial-temporal display of past aerosol events by type are also helpful in developing a long-term climatology of events. For example, the Regional Haze Rule requires the establishment of natural haze conditions, which are to be attained by 2064; a climatology of natural smoke and dust events can inform that estimate. [ Add links out to ..]
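A searchable Event Catalog and a first step toward an event climatology can be sketched with a simple record type and two queries. The `EventSpace` fields and the event names, years and states below are hypothetical placeholders, not entries from the actual FASTNET catalog.

```python
from collections import Counter
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class EventSpace:
    """Hypothetical catalog record for one community-analyzed event."""
    name: str
    event_type: str   # e.g. "smoke", "dust"
    year: int
    states: List[str]


def search(catalog, event_type: Optional[str] = None, state: Optional[str] = None):
    """Return names of events matching the optional type and state filters."""
    return [e.name for e in catalog
            if (event_type is None or e.event_type == event_type)
            and (state is None or state in e.states)]


def climatology(catalog):
    """Count events per (event_type, year): the seed of an event climatology."""
    return Counter((e.event_type, e.year) for e in catalog)


# Placeholder entries for illustration only.
catalog = [
    EventSpace("smoke_event_a", "smoke", 2005, ["GA", "FL"]),
    EventSpace("smoke_event_b", "smoke", 2005, ["CA"]),
    EventSpace("dust_event_c", "dust", 2006, ["TX"]),
]
```

For example, `search(catalog, state="CA")` returns the events touching California, and `climatology(catalog)` tallies events by type and year.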

The outputs of the community event analysis include event characterizations contributed by the joint effort of the participating community. These event descriptions are integrative and general-purpose, so that they are applicable to many users, for example for informing the public, improving model forecasts and advancing atmospheric science. The community-based event descriptions are also necessary in the formal EE DSS, since many events extend well beyond the territory of any single State; they provide the broader context required for event justification.

The organizational challenges for FASTNET will be numerous. Who should package the EE descriptions? Who should receive, or be notified of, the EE description/trigger? Which agencies and organizations should be most encouraged to participate? What should be the governance structure for EE detection and description? In resolving these challenges we will draw upon the broad past experience of the proposing team.

EE Reporting Facility

The EE Reporting Facility is devoted to satisfying the needs of EE Rule implementation and constitutes the main development activity of the proposed project. The facility will be used by the States to prepare the flag justification reports, which are then submitted to Regional EPA. The facility will also be used by Regional and Federal EPA to evaluate the submitted flag justifications. In the initial application, the EE Reporting Facility would be used to prepare reports months after the event has occurred. In the future, however, this reporting facility could perform some of its functions in near real time (see transition approach).

The EE Reporting Facility includes a comprehensive set of tools and methods for preparing and evaluating EE justification reports. It draws upon the resources and tools of NEDS and FASTNET (Section X).

The EE Flag Justifications have to provide evidence in accordance with the four clauses, A-D, expressly stated in the Exceptional Event Rule (section..).

A. The event satisfies the criteria set forth in 40 CFR 50.1(j). In the first step, it is established whether a site is in potential violation of the PM2.5 standard: is the concentration over the 15 ug/m3 annual or the 35 ug/m3 daily standard? Only samples that are in non-compliance qualify for an EE flag. Next, qualitative or quantitative evidence is gathered and presented showing that the event could have been caused by a source that is not reasonably controllable or preventable.

B. The main analysis step provides key quantitative information demonstrating a clear causal relationship between the measured exceedance value and the exceptional event.

C. Next, the sample is evaluated to determine whether the measured high value is in excess of normal, historical values. If not, the sample is not exceptional.

D. Finally, the contribution of the exceptional source to the sample is compared to that of 'normal' anthropogenic sources. Only samples for which the exceedance would not have occurred but for the contribution of the exceptional source qualify for an EE flag.
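Clauses A, C and D each contain a numeric test that can be pre-screened, whereas clause B is a weight-of-evidence argument that does not reduce to a single number. The sketch below assumes the exceptional-source contribution has already been estimated (for example from model scenarios) and that the analyst supplies a site-specific threshold for "normal, historical" values; the names and example values are hypothetical. It covers only the concentration part of clause A, not the 40 CFR 50.1(j) criteria.

```python
from dataclasses import dataclass

DAILY_PM25_STANDARD = 35.0  # ug/m3, 24-hour PM2.5 standard cited in the text


@dataclass
class Screening:
    exceeds_standard: bool   # clause A, concentration part only
    above_historical: bool   # clause C
    but_for: bool            # clause D

    @property
    def flag_candidate(self) -> bool:
        return self.exceeds_standard and self.above_historical and self.but_for


def screen_sample(observed, exceptional_contribution, historical_threshold,
                  standard=DAILY_PM25_STANDARD):
    """Numeric pre-screen of one 24-h sample against clauses A, C and D.

    `exceptional_contribution` is an externally estimated share of the sample;
    `historical_threshold` is an analyst-chosen upper bound of normal values.
    """
    exceeds = observed > standard
    return Screening(
        exceeds_standard=exceeds,
        above_historical=observed > historical_threshold,
        # "But for": without the exceptional share, the sample would meet the standard.
        but_for=exceeds and (observed - exceptional_contribution) <= standard,
    )


result = screen_sample(observed=52.0, exceptional_contribution=25.0, historical_threshold=30.0)
```

A sample passing this screen is only a candidate; the clause B causal argument still has to be made from the multiple lines of evidence.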

A: Exceedance Description

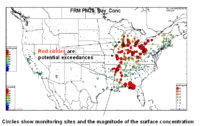

The purpose of this DSS component is to demonstrate that the event satisfies the criteria set forth in 40 CFR 50.1(j), i.e. that there is a potential pollutant source that is not controllable or preventable, such as forest fires, dust storms or pollution from other, extrajurisdictional regions. It is also necessary to establish whether a site is in potential violation of the PM2.5 daily (35 ug/m3) or annual (15 ug/m3) standard. Only samples that are in non-compliance qualify for an EE flag.

Approach: The evidence needed for this component is gathered from multiple sources, each responding to a different requirement: the event description, the presumed uncontrollable source and the potential violation of the NAAQS.

Reports of the Event from Media and General Public; event description from FASTNET.

Measured PM2.5 Concentration Showing Exceedance (> 15 ug/m3 or > 35 ug/m3) - Site Specific. The first step is to establish that a sample is a likely contributor to noncompliance. A site is in noncompliance with the daily standard if the three-year average of the annual 98th percentile PM2.5 concentrations is over 35 ug/m3. Hence, a site may still be in compliance even if individual samples exceed 35 ug/m3, provided that such values occur less than 2 percent of the time.
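As we read 40 CFR Part 50, Appendix N, the 24-hour design value is the three-year average of the annual 98th-percentile values, each found by sorting a year's samples in ascending order and taking the value at rank floor(0.98 n) + 1. The sketch below shows why a few days above 35 ug/m3 need not cause a violation. It omits Appendix N's data-completeness and rounding rules, the ranking rule should be checked against the regulation before use, and the sample years are synthetic.

```python
from statistics import mean


def annual_p98(daily_values):
    """98th percentile of one year's 24-h PM2.5 samples (ug/m3).

    Ranking rule as we read 40 CFR Part 50, Appendix N (verify before use):
    sort ascending, let i = floor(0.98 * n), take the (i+1)-th value.
    Integer arithmetic avoids floating-point error in 0.98 * n.
    """
    values = sorted(daily_values)
    if not values:
        raise ValueError("no samples for this year")
    i = (98 * len(values)) // 100
    return values[i]  # 0-based index i is the (i+1)-th smallest value


def daily_design_value(three_years):
    """Three-year average of annual 98th percentiles (completeness/rounding omitted)."""
    if len(three_years) != 3:
        raise ValueError("expected exactly three years of samples")
    return mean([annual_p98(year) for year in three_years])


def violates_daily_standard(three_years, standard=35.0):
    return daily_design_value(three_years) > standard


# Synthetic years of 365 daily samples: 8 vs. 7 days at 40 ug/m3.
year_8_high = [10.0] * 357 + [40.0] * 8
year_7_high = [10.0] * 358 + [40.0] * 7
```

With 365 samples the 98th percentile is the 8th-highest value, so a year with seven samples at 40 ug/m3 has a 98th percentile of 10 ug/m3, while an eighth such sample lifts it to 40 ug/m3.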

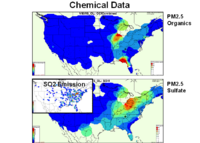

Measured PM2.5 Speciation Data for the Event Day - Site Specific (EC/OC/SO4...). A compelling line of evidence for establishing a causal relationship is the chemical fingerprint of aerosol samples. Speciated aerosol monitoring data can indicate exceptional status through unusual chemical composition, e.g. organics for smoke and soil components for wind-blown dust, whereas sulfates point to non-exceptional sources. Speciated aerosol composition data provide strong evidence for the impact of smoke on ambient concentrations. The figures below show the chemical composition data for sulfate and organics, respectively, for May 24 and May 27. Challenges: such analyses are currently difficult for the States; with FASTNET, a general event description is provided.
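The chemical-fingerprint argument can be sketched as a classification by component share. The component names and the share thresholds (50% organic carbon for smoke-like, 40% soil for dust-like) are illustrative assumptions, not established criteria, and the sample values are made up.

```python
def classify_sample(species, oc_share_smoke=0.5, soil_share_dust=0.4):
    """Label a speciated PM2.5 sample by its dominant chemical signature.

    `species` maps component name to concentration (ug/m3). The component
    names and share thresholds are illustrative assumptions.
    """
    total = sum(species.values())
    if total <= 0:
        raise ValueError("sample has no mass")
    oc = species.get("organic_carbon", 0.0) / total
    soil = species.get("soil", 0.0) / total
    sulfate = species.get("sulfate", 0.0) / total
    if oc >= oc_share_smoke:
        return "smoke-like"
    if soil >= soil_share_dust:
        return "dust-like"
    if sulfate > 0 and sulfate >= max(oc, soil):
        return "sulfate-dominated"  # points to non-exceptional sources
    return "mixed"


label = classify_sample({"organic_carbon": 30.0, "sulfate": 5.0, "soil": 2.0, "other": 3.0})
```

In practice the comparison would be against the site's own typical composition rather than fixed shares, which is the "unusual" part of the argument.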

B: Clear Causal Relationship between the Data and the Event

The purpose of this component is to demonstrate that there is a clear causal relationship between the measurement under consideration and the event claimed to have affected the air quality in the area. The main scientific and technical challenge arises from the requirement of clause B: establishing a clear causal relationship. For PM pollution, for example, the difficulty stems from the fact that many different source types contribute to PM2.5 concentrations. Some sources are anthropogenic, others are natural; some are located nearby, others can be far away. Emissions from natural and 'extrajurisdictional' sources, such as biomass fires, windblown dust or intercontinental pollution transport, can contribute to severe episodic PM events, i.e. short-term concentration spikes. However, methods for accounting for the contributions of these extrajurisdictional events in the implementation of the NAAQS are still under development.

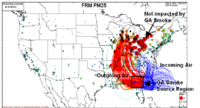

Approach: The evidence includes (1) backtrajectory analysis to establish whether the air masses associated with the exceedance passed through the source region of the exceptional source; (2) speciated aerosol data showing unusual chemical composition, e.g. organics for smoke, soil components for wind-blown dust and potassium for July 4th fireworks; (3) forward model simulations, which can also indicate a causal relationship; and (4) temporal signatures (spikes), which may yield additional evidence. While no single line of evidence provides proof, the combination of evidence from multiple independent perspectives can provide sufficient weight for decision making. Hence, the purpose of this report section and these tools is to illustrate the multiple lines of evidence and how to combine them into a strong argument. The selection of datasets and tools, as well as the presentation of the evidence, is in the hands of the analysts.
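One way to keep the multiple lines of evidence visible side by side is a simple weighted tally. This is a hypothetical bookkeeping aid, not a scoring scheme proposed here; the weights are placeholders that an analyst would choose, consistent with the point that the selection and presentation of evidence rest with the analysts.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Evidence:
    line: str        # name of the line of evidence
    supports: bool   # does it point to the exceptional source?
    weight: float    # analyst-assigned weight (hypothetical scale)


def weight_of_evidence(evidence: List[Evidence]) -> Tuple[float, List[str]]:
    """Return the supporting share of total weight and the supporting lines."""
    total = sum(e.weight for e in evidence)
    if total == 0:
        return 0.0, []
    support = sum(e.weight for e in evidence if e.supports)
    return support / total, [e.line for e in evidence if e.supports]


# Placeholder judgments for the four lines of evidence listed above.
evidence = [
    Evidence("backtrajectory", True, 1.0),
    Evidence("speciation", True, 1.0),
    Evidence("forward model", False, 0.5),
    Evidence("temporal signature", True, 0.5),
]
share, supporting = weight_of_evidence(evidence)
```

The tally does not replace the argument; it only makes explicit which independent lines agree and how much the analyst relies on each.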

Satellites: Under cloud-free conditions, near-real-time satellite images and data products are useful for the identification of exceptional events such as forest and agricultural fires and wind-blown dust events. The fire pixels, obtained from satellite and other observations, provide the most direct evidence for the existence and location of major fires. In the above map of fire pixels, the cluster of fires in southern Georgia is evident. The true color MODIS images from Terra (11am) and Aqua (1pm) show a rich texture of clouds, smoke/haze and land. Inspection of the images shows evidence of smoke/haze, while the Aerosol Optical Thickness (AOT) product derived from the MODIS sensors (Terra and Aqua satellites) shows the column concentration of particles. The Absorbing Aerosol Index provided by the OMI sensor (on the Aura satellite) reveals the smoke in the immediate vicinity of the fire pixels as well as the transported smoke. The lack of an OMI smoke signal further away from the fires suggests an absence of smoke, but the smoke may also be below the cloud layer and therefore not visible from the satellite. Also, the OMI smoke signal is most sensitive to elevated smoke layers, while near-surface smoke is barely detected by the Absorbing Aerosol Index.

Combined Air Quality Trajectory Tool: One line of evidence for a causal relationship comes from combining the observed source of an exceptional event with backtrajectories of high-concentration events. In the figures below, the color-coded concentration samples are shown along with the backtrajectories, which trace the air mass transport pathway. (CATT Manual, CATT Ref)
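A minimal sketch of this check, assuming each backtrajectory is a list of (lat, lon) endpoints and each fire pixel a (lat, lon) point: a trajectory is counted as passing through the source region if any endpoint lies within a chosen radius of a fire pixel. The 50 km radius, the function names and the coordinates are assumptions for illustration, not CATT's actual algorithm.

```python
import math


def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance in km between two points given in decimal degrees."""
    r = 6371.0  # mean Earth radius, km
    p1, p2 = math.radians(lat1), math.radians(lat2)
    dp = p2 - p1
    dl = math.radians(lon2 - lon1)
    a = math.sin(dp / 2) ** 2 + math.cos(p1) * math.cos(p2) * math.sin(dl / 2) ** 2
    return 2 * r * math.asin(math.sqrt(a))


def trajectory_hits_fires(trajectory, fire_pixels, radius_km=50.0):
    """True if any trajectory endpoint lies within radius_km of any fire pixel."""
    return any(
        haversine_km(tlat, tlon, flat, flon) <= radius_km
        for tlat, tlon in trajectory
        for flat, flon in fire_pixels
    )


# Hypothetical coordinates: a trajectory ending at Atlanta, traced back toward southern Georgia.
traj = [(33.7, -84.4), (32.5, -83.5), (31.0, -82.5)]
fires = [(30.8, -82.4)]
print(trajectory_hits_fires(traj, fires))  # True
```

The radius stands in for trajectory uncertainty, which grows with travel time; a real tool would likely make it a user setting.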

Model simulations and forecasts: Models may also provide evidence for exceptional events. For example, the ability of regional and global-scale models to forecast wind-blown dust events is continuously improving, as evidenced by the good performance of the Naval Research Laboratory NAAPS global dust model. The simulation and forecasting of major smoke events is much more difficult because the location, timing and injection height of biomass smoke emissions are unpredictable. Hence, reliable and tested smoke forecast models do not currently exist; however, models such as BAMS' CMAQ, which will ingest Deep Blue and surface data with boundary conditions to be linked with NAAPS, show promise. Thus, some model simulations can provide useful additional evidence for the cause of high PM levels.

VIEWS Speciation Data - Carbon: On May 24, the highest sulfate concentration was recorded just north of the Ohio River Valley, whereas the highest organic carbon concentrations were measured along the stretch from Georgia/Alabama to Wisconsin. This spatial separation of sulfate and organics indicates different source regions. Based on the combined chemical data and backtrajectories, it is evident that the high PM concentrations observed along the western edge of the red trajectory path are due to the impact of the Georgia smoke. On the other hand, the high PM2.5 concentrations just north of the Ohio River Valley are primarily due to known, controllable sulfate sources.

Challenges: Establishing causality between alleged sources and site exceedances is a scientifically challenging task, since it requires a quantitative and defensible source-receptor relationship. For EEs, establishing such a relationship is further complicated by the unpredictable location and timing of emissions and by the usually complex transport processes. The organizational challenges stem primarily from the need to acquire observations from many organizations. The key implementation challenge is the proper integration of the multiple lines of evidence for estimating the causal impact of the anomalous source.

C: The Event is in Excess of the "Normal" Values

The purpose of this component is to demonstrate that the event is associated with a measured concentration in excess of normal historical fluctuations, including background.

Approach: The magnitude of normal, historical values can be established through many different statistical measures. The air pollution pattern varies in space and time, and it also depends on the pollutant. In the case of PM, it further depends on the species in the PM chemical mix; the sulfate pattern, for example, is very different from that of nitrate, organics or dust. Thus, metrics that meaningfully describe the "normal" historical pattern require many parameters, including space, time and composition, along with parametric and/or nonparametric statistics.

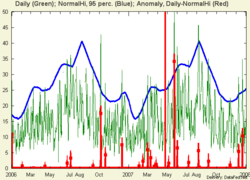

Spatial Normals - PM2.5, Chemical Constituents: A useful measure of the "normal" high concentration at a given station is a high percentile, say the 95th. In the illustration below, a time window of ±15 days (a one-month window) was chosen. This period is longer than a typical exceptional event, but sufficiently short to preserve seasonality. To establish the normal values, the concentrations can be aggregated over multiple years for the given time window, measured in Julian days. Hence, a particular sample is considered anomalously high (deviating from the normal) if its value is substantially higher than the 95th percentile of the multi-year measurements for that "month" of the year. See Help:Using the Concentration Anomaly Tool to learn how to change these parameters.
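The window-based normal described above can be sketched as follows, assuming a station's record is a list of (date, concentration) pairs. All samples within ±15 days of the target day of year, from all years, are pooled and their 95th percentile is taken; a circular day distance lets the window wrap across the new year. The function names are hypothetical, leap days are ignored, and the percentile interpolation (`statistics.quantiles` with `method="inclusive"`) is one of several reasonable choices.

```python
import statistics
from datetime import date


def day_distance(doy_a, doy_b, year_len=365):
    """Circular distance in days between two day-of-year values (leap days ignored)."""
    d = abs(doy_a - doy_b) % year_len
    return min(d, year_len - d)


def seasonal_normal(records, target_doy, half_window=15, pct=95):
    """pct-th percentile of all multi-year samples within +/- half_window days of target_doy."""
    pool = [c for d, c in records
            if day_distance(d.timetuple().tm_yday, target_doy) <= half_window]
    if len(pool) < 2:
        raise ValueError("not enough samples in the seasonal window")
    # quantiles(n=100) returns the 99 cut points for percentiles 1..99
    return statistics.quantiles(pool, n=100, method="inclusive")[pct - 1]


def excess_over_normal(value, normal):
    """Concentration anomaly: how far a sample lies above (or below) the normal high."""
    return value - normal


# Illustrative record: one May 24 sample per year for 20 years, plus an out-of-window sample.
records = [(date(2000 + i, 5, 24), float(i + 1)) for i in range(20)]
records.append((date(2005, 1, 1), 500.0))  # excluded: far outside the window
p95 = seasonal_normal(records, target_doy=144)  # May 24 in a non-leap year
print(round(p95, 2), round(excess_over_normal(30.0, p95), 2))  # 19.05 10.95
```

How large the excess must be to count as "substantially higher" is left open here, as it is in the text.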

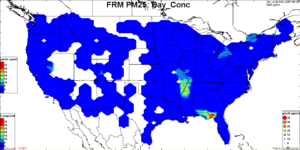

In the figures below, the concentration and anomaly patterns are illustrated for two days, 2007-05-24 and 2007-05-27. For each day, the leftmost figure shows the measured daily average PM2.5 concentration; the circles are color coded using the same coloring scheme as the contours of the concentration field. The middle figure shows the contour field of the 95th percentile PM2.5 concentrations, with the color-coded circles still representing the concentrations for the selected day. The rightmost figure shows the concentration anomaly, i.e. the excess of the current day's values over the 95th percentile values. While the rightmost figures show that the excess concentrations are high, these by themselves cannot establish whether the excess originates from controllable or exceptional sources.


The FRM PM2.5 data show that on May 24 the high excess concentrations over the median were confined to a well-defined plume north of the Ohio River Valley. The deep blue areas adjacent to the PM plume indicate that large portions of the eastern U.S. were near or below their median values.

While the excess concentrations are high, these by themselves cannot establish whether the origin of the excess is from controllable or exceptional sources.

Temporal Normals

Challenges: In the project, it will be necessary to decide (1) what the specific metric for the "normal" high concentration should be, and (2) how far a value must exceed the normal high to qualify as exceptionally high. With these settings specified, the tool can be designed to automatically detect whether a station's anomaly is exceptional. Detecting anomalies requires a meaningful metric that quantifies the "normal" values. Unfortunately, quantifying the normal value of air pollutants is difficult and ambiguous, since pollutant concentrations vary in time and space much more than the ... Hence, the key challenge is to devise suitable metrics for normal, normal-high, etc. The organizational challenge is to devise a metric for the normal that incorporates the requirements of both the regulatory agency, EPA, and the scientific community familiar with the nature of pollutant variability. Once suitable metrics for the normal are derived, it is desirable to develop tools that automatically calculate the anomalies and display them through appropriate visualizations of spatial and temporal anomalies.
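Once the two settings named above are decided, the detection step itself is simple. The sketch below makes both explicit as fields of a hypothetical `NormalMetric` settings object: the metric for the normal high (a percentile, or mean plus k standard deviations) and the required excess above it. The field names and defaults are assumptions, not values agreed between EPA and the scientific community.

```python
import statistics
from dataclasses import dataclass


@dataclass
class NormalMetric:
    """Hypothetical settings for the two decisions: the normal-high metric and the excess."""
    kind: str = "percentile"  # "percentile" or "mean_sd"
    pct: int = 95             # used when kind == "percentile"
    k_sd: float = 1.0         # used when kind == "mean_sd"
    excess: float = 0.0       # required excess above the normal high, same units as the data


def normal_high(history, m):
    """Normal-high value of a station's historical samples under metric m."""
    if m.kind == "percentile":
        return statistics.quantiles(history, n=100, method="inclusive")[m.pct - 1]
    if m.kind == "mean_sd":
        return statistics.mean(history) + m.k_sd * statistics.stdev(history)
    raise ValueError(f"unknown metric kind: {m.kind}")


def is_exceptional(value, history, m):
    """True if value exceeds the normal high by more than the required excess."""
    return value > normal_high(history, m) + m.excess


history = [10.0, 12.0, 14.0, 16.0, 18.0]  # illustrative values only
print(is_exceptional(18.0, history, NormalMetric()))                              # True
print(is_exceptional(18.0, history, NormalMetric(kind="mean_sd", excess=5.0)))    # False
```

Exposing both settings as parameters is one way to provide the flexibility the text asks for, while keeping the agreed values in a single place.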

D: The Exceedance or Violation would not Occur, But For the Exceptional Event

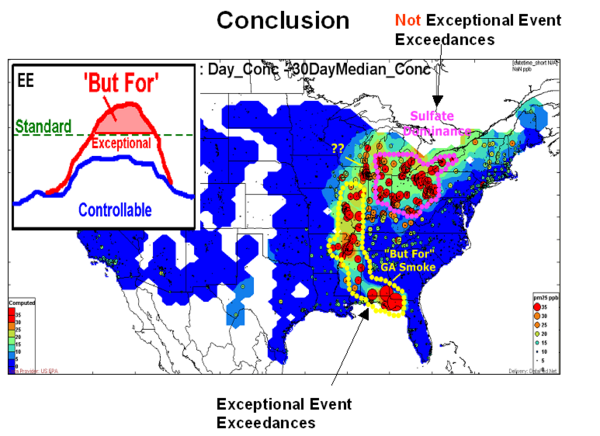

The ultimate test of whether a sample can be flagged is the “but for” condition, i.e. the exceedance would not have occurred “but for” the presence of the exceptional event. Without the additional excess concentration there would not have been an exceedance (See inset Fig….). “But for” is a very stringent but doable condition to satisfy. For example, if an exceedance would have occurred without the exceptional contribution, there is no EE flag. Conversely, if adding the exceptional concentration does not result in an exceedance, there is no EE flag.

The “but for” condition also places extreme demands on the analysts and the DSS for gathering the supportive evidence. In essence, it is required to perform a source apportionment of the measured ambient concentration that separates the “normal” and the exceptional source contributions. The “normal” includes the industrial and normal natural contributions from nearby sources.

At this time, practical, reliable and generally applicable tools for producing “but for” evidence do not exist. EE flags are being ex… semi-qualitative by consolidating and weighing a variety of corroborating evidence. The “but for” requirement opens up the need for research on hybrid source-receptor methods. There would have been no exceedance or violation but for the event. Finally, the contribution of the exceptional source to the sample is compared to that of 'normal' anthropogenic sources. Only samples whose exceedances would not have occurred but for the contribution of the exceptional source qualify for an EE flag.

According to the EE Rule, observations can be EE-flagged if the violation is caused by the exceptional event. Considering the subtleties of the EE Rule, below are graphical illustrations of the Exceptional Event criteria:

- The leftmost figure shows a case where the 'exceptional' concentration raises the level above the standard. This is a valid EE and should be flagged.

- In the next case, the concentration from controllable sources is sufficient to cause the exceedance. This is not a 'but for' case and should not be flagged.

- In the third case, there is no exceedance. Hence, there is no justification for an EE flag.
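The three cases above reduce to a simple rule, provided the measured concentration can first be split into a 'normal' part and an exceptional part (the source apportionment discussed earlier, which is the hard step). In this sketch the function name, the enum and the 35 µg/m3 default (the 24-hour PM2.5 NAAQS level) are illustrative assumptions; regulatory compliance is actually judged on multi-year design values, not on single samples.

```python
from enum import Enum


class FlagDecision(Enum):
    FLAG = "exceedance but for the event: valid EE flag"
    CONTROLLABLE = "exceedance even without the event: no EE flag"
    NO_EXCEEDANCE = "no exceedance: no EE flag"


def but_for_decision(normal_conc, exceptional_conc, standard=35.0):
    """Classify one sample given its apportioned contributions (same units as standard).

    normal_conc: controllable plus normal natural contributions
    exceptional_conc: contribution attributed to the exceptional event
    """
    total = normal_conc + exceptional_conc
    if total <= standard:
        return FlagDecision.NO_EXCEEDANCE  # third case
    if normal_conc > standard:
        return FlagDecision.CONTROLLABLE   # second case
    return FlagDecision.FLAG               # first case


# Illustrative contributions in ug/m3, one per case.
for normal, exceptional in [(20.0, 25.0), (40.0, 10.0), (10.0, 10.0)]:
    print(normal, exceptional, but_for_decision(normal, exceptional).value)
```

The rule itself is trivial; the uncertainty lies entirely in the two inputs, which is why the supporting evidence carries so much weight.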

Conclusion: On May 24, the exceedances north of the Ohio River Valley were caused by the known anthropogenic sulfur emissions. This is deduced from the high sulfate concentrations and from airmass trajectories that passed over the high-emission regions. The region where the exceedances would not have occurred but for the presence of the exceptional Georgia smoke is highlighted with yellow dots. The evidence arises primarily from (1) the high concentration of aerosol organics, (2) the backtrajectories to exceeding sites passing over the known fire region, (3) model simulations of sulfate and smoke and (4) numerous qualitative reports of smoke in the region. The level of sulfate was sufficiently low (< 10 µg/m3) and the level of organics sufficiently high that the violation would not have occurred but for the smoke organics.


- The general goal and framework need to satisfy the EE Rule.

- Methods of EE flagging can be developed based on the EE Rule and constrained by the available data and analysis resources.

- The EE DSS consists of:

  - An EE flagging template, which lays out the sequence and possible lines of evidence

  - Tools (based on the template) that help with preparing flags (States), approving them (EPA Regions) and deciding on them (Federal EPA)

The DSS needs to be robust and up to date, operating either continuously or in batch mode. EPA will need to be asked to expose the FRM PM2.5 and ozone data, say every 3 months after sampling. Members of the 'Core' network need to agree to provide robust data and tool services.

Tools for EE Report Preparation

Standards-based data access permits the development of generic tools for data exploration, processing and visualization: the same tool is applicable to all datasets that are standards-compliant. Below is a short listing and description of a generic data exploration tool and three tools to be fully developed for the Exceptional Event Rule implementation. All the tools leverage the benefits of an OGC standards-based service oriented architecture: each tool is applicable to multiple datasets; service orchestration makes it easy to create new tools; and the shared web-based tools promote collaboration and communal data analysis. While the tools listed below are built on the DataFed infrastructure, efforts will be made to use the web-based tools of other partners in the network.
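To illustrate why standards-based access makes a single tool reusable, the sketch below builds an OGC WCS 1.0.0 GetCoverage request in key-value form; the same function serves any compliant dataset by changing only the coverage name. The endpoint URL and coverage names are hypothetical placeholders, and the output formats a server accepts are server-specific, so `NetCDF` here is an assumption.

```python
from urllib.parse import urlencode


def wcs_getcoverage_url(endpoint, coverage, bbox, time, fmt, crs="EPSG:4326",
                        width=600, height=400):
    """Build an OGC WCS 1.0.0 GetCoverage request URL (key-value pair encoding).

    bbox: (min_lon, min_lat, max_lon, max_lat) in the given CRS.
    """
    params = {
        "SERVICE": "WCS",
        "VERSION": "1.0.0",
        "REQUEST": "GetCoverage",
        "COVERAGE": coverage,
        "CRS": crs,
        "BBOX": ",".join(str(v) for v in bbox),
        "TIME": time,
        "WIDTH": width,
        "HEIGHT": height,
        "FORMAT": fmt,
    }
    return f"{endpoint}?{urlencode(params)}"


# Hypothetical endpoint and coverage names: one function, two datasets.
ENDPOINT = "https://example.org/wcs"
for cov in ["FRM_PM25_daily", "MODIS_AOT"]:
    print(wcs_getcoverage_url(ENDPOINT, cov, (-100, 25, -60, 50), "2007-05-24", "NetCDF"))
```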

The DataFed Browser/Editor is the primary tool for exploring the spatial-temporal patterns of pollutants. The multi-dimensional data are sliced and displayed in spatial views (maps) and temporal views (time series). Each data view also accepts user input for point-and-click navigation in the data space. The cyclic view displays diurnal, weekly and seasonal cycles at a given location or within a user-defined bounding box. The DataFed browser is also an editor for data processing workflows, using a dedicated SOAP-based workflow engine. A typical workflow for a map view is shown in Fig. XX. The Google Earth Data Browser is a software mashup between DataFed and Google Earth. The two applications are dynamically linked, and the user can select and browse the spatial views of any federated dataset. The Google Earth user interface is particularly suitable for overlaying and displaying overlapping, multi-sensor data. Temporal animation of sequential data in Google Earth is also instructive for visualizing air pollutant dynamics and transport.
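The cyclic view amounts to grouping a time series by a cycle key and averaging each group. A minimal sketch, assuming samples are (datetime, value) pairs at a single location; the function names and values are hypothetical, and DataFed's own implementation may differ.

```python
from collections import defaultdict
from datetime import datetime
from statistics import mean


def cyclic_profile(samples, key):
    """Average (datetime, value) samples grouped by a cycle key, sorted by key."""
    groups = defaultdict(list)
    for t, value in samples:
        groups[key(t)].append(value)
    return {k: mean(v) for k, v in sorted(groups.items())}


def hour_of_day(t):    # diurnal cycle
    return t.hour


def day_of_week(t):    # weekly cycle, Monday == 0
    return t.weekday()


def month_of_year(t):  # seasonal cycle
    return t.month


samples = [
    (datetime(2007, 5, 21, 8), 10.0),   # Monday 08:00
    (datetime(2007, 5, 22, 8), 20.0),   # Tuesday 08:00
    (datetime(2007, 5, 21, 14), 30.0),  # Monday 14:00
]
print(cyclic_profile(samples, hour_of_day))  # {8: 15.0, 14: 30.0}
print(cyclic_profile(samples, day_of_week))  # {0: 20.0, 1: 20.0}
```

Passing the cycle as a key function keeps one aggregation routine for all three cycles.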

Analyst Console: An Analyst Console (or dashboard) is a facility for displaying the state of the current aerosol system. It is anticipated that the Analyst Consoles will be the key dashboards for establishing the emergence, evolution and dispersal of exceptional events. Through a collection of synchronized views, data from a variety of disparate providers are brought together; the sampling time and spatial subset (zoom rectangle) of each dataset are synchronized; and the user can customize the console’s data content and format. Using these tools, the analyst community will make decisions regarding specific events.
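The synchronization described above can be modeled as a single shared state (sampling time and zoom rectangle) that notifies every registered view when it changes. This is a minimal observer-pattern sketch with hypothetical names; each view is just a callback here, standing in for a map or time-series panel.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Tuple

BBox = Tuple[float, float, float, float]  # (min_lon, min_lat, max_lon, max_lat)
View = Callable[[str, BBox], None]


@dataclass
class ConsoleState:
    """Shared sampling time and zoom rectangle for all views in a console."""
    time: str
    bbox: BBox
    views: List[View] = field(default_factory=list)

    def subscribe(self, view: View) -> None:
        self.views.append(view)
        view(self.time, self.bbox)  # render immediately with the current state

    def update(self, time=None, bbox=None) -> None:
        """Change time and/or zoom rectangle, then redraw every view."""
        if time is not None:
            self.time = time
        if bbox is not None:
            self.bbox = bbox
        for view in self.views:
            view(self.time, self.bbox)


log = {"satellite": [], "surface": []}
state = ConsoleState("2007-05-24", (-95.0, 25.0, -75.0, 45.0))
state.subscribe(lambda t, b: log["satellite"].append((t, b)))
state.subscribe(lambda t, b: log["surface"].append((t, b)))
state.update(time="2007-05-27")
print(log["surface"][-1])
```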

Concentration Anomaly Tool: This tool is to be developed and used operationally by the States and EPA to automatically calculate the normal pattern of air quality and the deviation from the normal (the concentration anomaly). A useful measure of the "normal" concentration is the 84th percentile (+1 sigma) for a given station. To establish the normal values, the concentrations can be aggregated over multiple years for a given time window measured in Julian days, e.g. days 160 to 190. Hence, a particular sample is considered anomalously high (deviating from the normal) if its value is substantially higher than the 84th percentile of the multi-year measurements for that "month" of the year. There is considerable need for flexibility in defining the 'normal' when calculating the deviation above normal.
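The "84th percentile (+1 sigma)" equivalence holds exactly only for a normal distribution, which is one reason flexibility in defining the normal matters. The sketch below checks the equivalence with Python's `statistics.NormalDist`, then compares both measures on a small, right-skewed sample of hypothetical values restricted to the Julian-day window 160 to 190.

```python
from statistics import NormalDist, mean, quantiles, stdev

# For a normal distribution, mean + 1 sigma sits at about the 84.13th percentile.
print(round(NormalDist().cdf(1.0), 4))  # 0.8413


def in_julian_window(doy, start=160, end=190):
    """True if a day-of-year falls inside the (inclusive) seasonal window."""
    return start <= doy <= end


# Hypothetical multi-year samples as (day_of_year, concentration in ug/m3).
samples = [(161, 5), (165, 6), (170, 6), (172, 7), (175, 7), (178, 8),
           (180, 9), (183, 12), (186, 20), (189, 45), (120, 80), (250, 3)]
window = [c for doy, c in samples if in_julian_window(doy)]
p84 = quantiles(window, n=100, method="inclusive")[83]
mean_plus_sd = mean(window) + stdev(window)
print(round(p84, 2), round(mean_plus_sd, 2))
```

On skewed data a single large value inflates the standard deviation, so mean + 1 sigma lands well above the 84th percentile; the two definitions can flag different samples.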

Combined Air Quality Trajectory Tool (CATT): Backtrajectory analysis can be used to establish whether the air masses associated with an exceedance passed through the source region of the exceptional source. One approach is to combine the observed source of an exceptional event with backtrajectories of high-concentration events: color-coded concentration samples are displayed along with the backtrajectories, which show the air mass transport pathway. Given the availability of FRM PM2.5 concentrations, it is instructive to examine the backtrajectories (air mass histories) associated with above-standard concentrations. If those backtrajectories pass through areas of known exceptional sources (forest fires, dust storms), then the corresponding high concentrations may be attributed to that event.
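A minimal sketch of this screening step, assuming each FRM PM2.5 sample carries its backtrajectory as (lat, lon) endpoints and the exceptional source region is approximated by a bounding box. The 35 µg/m3 threshold is the 24-hour PM2.5 standard; the bounding box, field names and sample values are hypothetical, and a sample that passes this screen is only a candidate for attribution, not proof.

```python
def in_bbox(lat, lon, bbox):
    """bbox = (min_lon, min_lat, max_lon, max_lat) in decimal degrees."""
    min_lon, min_lat, max_lon, max_lat = bbox
    return min_lat <= lat <= max_lat and min_lon <= lon <= max_lon


def candidate_ee_samples(samples, source_bbox, standard=35.0):
    """IDs of above-standard samples whose backtrajectory crosses the source region."""
    return [
        s["id"] for s in samples
        if s["pm25"] > standard
        and any(in_bbox(lat, lon, source_bbox) for lat, lon in s["trajectory"])
    ]


fire_region = (-83.5, 30.3, -81.5, 31.5)  # hypothetical box over southern Georgia
samples = [
    {"id": "A", "pm25": 48.0, "trajectory": [(33.7, -84.4), (31.0, -82.5)]},
    {"id": "B", "pm25": 50.0, "trajectory": [(40.0, -83.0), (41.0, -85.0)]},
    {"id": "C", "pm25": 20.0, "trajectory": [(32.0, -83.0), (31.0, -82.5)]},
]
print(candidate_ee_samples(samples, fire_region))  # ['A']
```

Sample B exceeds the standard but its air mass never crossed the fire region, and sample C crossed it without exceeding; only A passes both screens.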