NASA ROSES08: Regulatory AQ Applications Proposal- Technical Approach


Event types

- Dust

- Smoke

- LRTP

- July 4th

Technical Approach

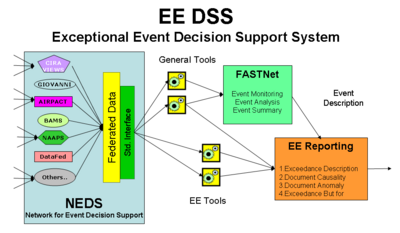

Description of DSS

The main purpose of this project is to support the implementation of EPA's new Exceptional Event (EE) Rule by developing and delivering a suitable Decision Support System (DSS), Networks for Exceptional Event Decision Support (NEEDS). The functionality of the system includes detecting and describing EEs, flagging and justifying EE-influenced samples by the States, and evaluating the EE flags by Regional and Federal EPA offices.

The main functionality of NEEDS includes accessing and processing data, analyzing events and preparing EE justification reports, which is accomplished by: (1) a data network, DataFed, for accessing and integrating EE-relevant data; (2) FASTNET, a networked community of analysts for detecting, analyzing and describing exceptional events; and (3) a comprehensive set of tools and methods for preparing and evaluating EE justification reports.

These major components can be viewed as a stack of three layers that constitute NEEDS. DataFed is an infrastructure layer for connecting and accessing distributed data and models. The FASTNET layer is a data analysis "middleware" for performing general-purpose analysis that is useful for many AQ management, science, education and other applications. The third, application component produces the outputs specifically required by the EE Rule.

The three components of NEEDS working together constitute an end-to-end information processing system that takes observations as inputs and produces "actionable" knowledge necessary for air quality decision making. However, the proposed DSS will not be a monolithic "stove pipe". Rather, NEEDS follows a "network-centric" architecture and implementation in which data access and processing are distributed. Furthermore, NEEDS will consist of a network of collaborating analysts. Hence, the EE flag justification process is supported by a pair of networks: one for sharing data and another for sharing and creating event-related knowledge. A third key feature of NEEDS is an open, participatory approach that encourages the inclusion of new nodes in both the data and the analysis networks.

In order to satisfy the operational requirements of the end-user organizations (States, Regional and Federal EPA), the open, loosely coupled networks will be fortified by a core data network and a core analyst network that can deliver the required data, tools and analysis products to NEEDS customers. The core networks will be composed of the co-investigators and collaborators of this project.

Major parts of the proposed EE DSS are composed of components that have been developed over the past decade in two projects, DataFed and FASTNET, which are described in detail elsewhere. The new components of NEEDS include the EE-specific tools as well as the Exceptional Event Reporting Facility.

DataFed: IT Architecture and Data Network Implementation

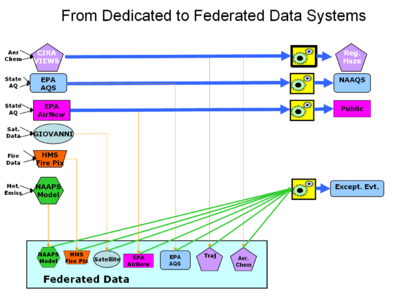

The data required for Exceptional Event analysis will be integrated using the federated data system DataFed (Need REF). The key roles of the federation infrastructure are to (1) facilitate registration of the distributed data in a user-accessible catalog; (2) ensure data interoperability using international standard protocols; and (3) provide a set of basic tools for data exploration and analysis.

Federation occurs by connecting to existing data systems and providing them with standard data access interfaces. In effect, providing a standard interface constitutes a federation of distributed data, provided that there is a data catalog. From the user's perspective, the physical location of the data becomes irrelevant. This design architecture is consistent with the GEOSS motto: "Any data is applicable to multiple benefit areas. Any benefit area needs multiple data. (look up)". In fact, both the EE DSS and FASTNET use only federated data provided by other sources.

Current data sharing situation:

Distributed data arising from air quality surface observations, satellites, emissions and models are "federated" by applying standard interfaces. This universal access is accomplished by ‘wrapping’ the heterogeneous data, a process that turns data access into a standardized web service. The result is an array of homogeneous, virtual datasets that can be queried by spatial and temporal attributes and processed into higher-grade data products. The Service Oriented Architecture (SOA) of DataFed is used to build web applications by connecting the web service components (e.g. services for data access, transformation, fusion, rendering, etc.) in Lego-like assembly. The generic web tools created in this fashion include data browsers for spatial-temporal exploration, transport analysis, spatial-temporal pattern analysis, ...; other tools are specific to the Exceptional Event Rule.

The special aspects of the NEEDS data sharing network are that (1) the nodes have EE-relevant content; (2) the data flow is harmonized and well-tested; and (3) the members of NEEDS are pursuing collective value creation by combining... producing composite end products that would not be possible by any node alone. The software connections for NEEDS will be established through DataFed, using standard interfaces including OGC WMS for image data and WCS for point and grid datasets. The connectivity architecture will be either through mediators such as DataFed or GIOVANNI or directly through peer-to-peer connections. Due attention will be given to the sensitivities of the data providers, such as proper attribution and use constraints. The needs of the data users will be represented by proper metadata, including data lineage, data quality and other detailed information. The creation of user-contributed metadata and the communication between data providers and data users will be facilitated through metadata workspaces, i.e. hybrid (structured/unstructured) wiki pages dedicated to each dataset. This proposed project is not the developer of the data network. The network development is supported by individual projects, including the REASON grant, SHAiRED, at Washington University.
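To illustrate what "standard interfaces" means in practice, the Python sketch below builds an OGC WMS 1.1.1 GetMap request (image data) and a WCS 1.0.0 GetCoverage request (grid data). The endpoint URLs, layer and coverage names, and output formats are hypothetical placeholders, not actual DataFed or GIOVANNI identifiers; the parameter names follow the OGC specifications.

```python
from urllib.parse import urlencode

BBox = tuple[float, float, float, float]  # (min_lon, min_lat, max_lon, max_lat)


def _bbox(bbox: BBox) -> str:
    """Serialize a lon/lat bounding box as the comma-separated BBOX value."""
    return ",".join(f"{v:g}" for v in bbox)


def wms_getmap_url(endpoint: str, layer: str, bbox: BBox, time: str,
                   width: int = 800, height: int = 400) -> str:
    """Build a WMS 1.1.1 GetMap request that returns a rendered map image."""
    params = {
        "SERVICE": "WMS",
        "VERSION": "1.1.1",
        "REQUEST": "GetMap",
        "LAYERS": layer,
        "STYLES": "",          # empty = server default style
        "SRS": "EPSG:4326",    # WMS 1.1.1 with EPSG:4326 uses lon,lat axis order
        "BBOX": _bbox(bbox),
        "WIDTH": width,
        "HEIGHT": height,
        "FORMAT": "image/png",
        "TIME": time,          # ISO 8601 date or time
    }
    return f"{endpoint}?{urlencode(params)}"


def wcs_getcoverage_url(endpoint: str, coverage: str, bbox: BBox, time: str,
                        width: int = 100, height: int = 50,
                        fmt: str = "NetCDF") -> str:
    """Build a WCS 1.0.0 GetCoverage request that returns the data values."""
    params = {
        "SERVICE": "WCS",
        "VERSION": "1.0.0",
        "REQUEST": "GetCoverage",
        "COVERAGE": coverage,
        "CRS": "EPSG:4326",
        "BBOX": _bbox(bbox),
        "TIME": time,
        "WIDTH": width,        # grid requests need WIDTH/HEIGHT or RESX/RESY
        "HEIGHT": height,
        "FORMAT": fmt,         # accepted format names are server-specific
    }
    return f"{endpoint}?{urlencode(params)}"
```

Because both requests are plain URLs, a client such as a data browser can access any compliant node the same way, which is what makes the federation transparent to the user.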

Core Data for NEEDS

Data Providers: AQS, VIEWS, Airnow, NOAA HMS, GIOVANNI, PULSENet, BlueSky, AIRPACT, NAAPS, BAAMS

For the FASTNET project, 14 specific datasets are highlighted, including various surface-based aerosol data (EPA fine mass, speciated aerosol composition from the EPA and IMPROVE networks), hourly surface meteorology and visibility data, aerosol forecast model results, and various satellite data and images. Many of these data are available in near-real time, while others (for example the IMPROVE filter-based aerosol chemistry data and associated backtrajectories) are available with a time lag of about one year. Analysts access the desired data through the DataFed Data Catalog (Figure 2). The selected data are automatically loaded into a web-based data browser designed for easy exploration of spatiotemporal patterns. Semantic data homogenization assures that all datasets can be properly overlaid in space and time views (Figure 2).

Tools for Data Processing, Exploration and Visualization

Standards-based data access permits the development of generic tools for data exploration, processing and visualization. The same tool is then applicable to all standards-compliant datasets. Below is a short description of the generic tools developed for browsing and processing the datasets registered in DataFed. In addition, several tools are listed that were developed specifically to support implementation of the Exceptional Event Rule.

DataFed Browser/Editor: The DataFed browser/editor is the primary tool for exploring spatial-temporal patterns. The multi-dimensional data are sliced and displayed in spatial views (maps) and temporal views (time series). Each data view also accepts user input for point-and-click navigation in the data space. The cyclic view displays diurnal, weekly and seasonal cycles at a given location or within a user-defined bounding box.
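The cyclic view reduces a time series to average values per position in a cycle. A minimal Python sketch of that aggregation, assuming the samples for the chosen location or bounding box have already been selected as (timestamp, value) pairs; the names `cycle_means`, `DIURNAL`, `WEEKLY` and `SEASONAL` are hypothetical, not part of DataFed:

```python
from collections import defaultdict
from datetime import datetime
from operator import attrgetter, methodcaller
from statistics import mean
from typing import Callable, Hashable, Iterable, Optional

# Cycle keys: each maps a timestamp to its position in the cycle.
DIURNAL = attrgetter("hour")        # 0..23
WEEKLY = methodcaller("weekday")    # 0 = Monday .. 6 = Sunday
SEASONAL = attrgetter("month")      # 1..12


def cycle_means(samples: Iterable[tuple[datetime, Optional[float]]],
                key: Callable[[datetime], Hashable]) -> dict:
    """Average the samples that fall at the same position in the cycle."""
    groups = defaultdict(list)
    for ts, value in samples:
        if value is not None:       # skip missing observations
            groups[key(ts)].append(value)
    return {k: mean(v) for k, v in sorted(groups.items())}
```

The same function serves all three cycles; only the key changes, which mirrors how one view can display diurnal, weekly or seasonal patterns.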

The DataFed browser is also an editor for data processing workflows, using a dedicated SOAP-based workflow engine. A typical workflow for the map view is shown in Fig. XX. The services are organized as a stack of workflow chains. Each row is a data layer whose displayed values are computed through a service chain that starts with a data access service, continues through several processing services and ends with a rendering service.
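The layer-as-service-chain idea can be sketched in-process in Python. This is only an illustration of the composition pattern, not the SOAP workflow engine itself: `access_pm25`, `drop_missing` and `render_text` are hypothetical stand-ins for remote access, processing and rendering services.

```python
from functools import reduce
from typing import Any, Callable

Service = Callable[[Any], Any]


def chain(*services: Service) -> Service:
    """Compose services left to right: access -> processing... -> rendering."""
    def run(data: Any) -> Any:
        return reduce(lambda acc, service: service(acc), services, data)
    return run


def access_pm25(query: dict) -> dict:
    # Hypothetical access service; a real one would query a remote dataset
    # using the query (date, bounding box). Fixed values for illustration.
    return {"site_a": 12.0, "site_b": 41.5, "site_c": None}


def drop_missing(data: dict) -> dict:
    """Processing step: remove stations without a valid value."""
    return {site: v for site, v in data.items() if v is not None}


def render_text(data: dict) -> str:
    """Rendering step: a text stand-in for drawing the layer on a map."""
    return "; ".join(f"{site}={v:.1f}" for site, v in sorted(data.items()))


# One row (data layer) of the workflow stack; a map view is a list of such rows.
pm25_layer = chain(access_pm25, drop_missing, render_text)
map_view = [pm25_layer]
```

For example, `pm25_layer({"date": "2007-05-24"})` yields `"site_a=12.0; site_b=41.5"`. Keeping each step as an independent callable is what allows steps to be swapped or reused across layers.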

Google Earth Data Browser: A recently developed tool is a mashup between DataFed, which provides the data access and processing services, and Google Earth, which provides the data display and user interface. The two applications are dynamically linked, and the user can select and browse any dataset and spatial data view accessible through DataFed. The Google Earth user interface is particularly suitable for the overlay and display of overlapping, multi-sensory data. The temporal animation of sequential data in Google Earth is also instructive for visualizing air pollutant transport.

Analyst Console: An Analyst Console (or dashboard) is a facility to display the current state of the aerosol system. It is anticipated that the Analyst Consoles will be the key dashboards for establishing the emergence, evolution and decay of aerosol events. The analyst community, using these tools, will make operational decisions regarding specific events.

Each Analyst Console provides a collection of views, each representing a different aspect of the aerosol-relevant system. Key features of these consoles are that data from a variety of disparate providers are brought together, that the sampling time and spatial subset (zoom rectangle) of each dataset are synchronized, and that the user can customize the console's data content and format. Analyst Consoles are collections of related geo-time-referenced data views shown on a single web page. The views may contain data overlays. The synchronized views of distributed data allow limited navigation over the spatial and temporal domain of the data.
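The synchronization feature can be sketched as a console that owns the shared time and zoom rectangle and pushes them to every view. The `View` and `AnalystConsole` classes and the dataset names are hypothetical; this only illustrates the shared-state design, not the actual console implementation.

```python
from dataclasses import dataclass, field
from datetime import date
from typing import Optional

BBox = tuple[float, float, float, float]  # (west, south, east, north)


@dataclass
class View:
    dataset: str
    kind: str = "map"                 # e.g. "map" or "timeseries"
    time: Optional[date] = None
    bbox: Optional[BBox] = None


@dataclass
class AnalystConsole:
    views: list[View] = field(default_factory=list)
    time: Optional[date] = None
    bbox: Optional[BBox] = None

    def add(self, view: View) -> None:
        """Add a view and align it with the console's current time and zoom."""
        view.time, view.bbox = self.time, self.bbox
        self.views.append(view)

    def navigate(self, time: Optional[date] = None,
                 bbox: Optional[BBox] = None) -> None:
        """Update the shared state and propagate it to every view."""
        if time is not None:
            self.time = time
        if bbox is not None:
            self.bbox = bbox
        for view in self.views:
            view.time, view.bbox = self.time, self.bbox
```

Keeping time and bounding box on the console, rather than on each view, is what guarantees that navigating in one view moves all of them together.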

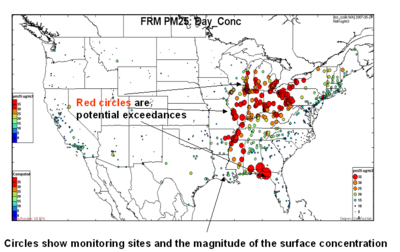

PM2.5 Exceedance Screening Tool: The PM2.5 samples that are potential contributors to non-compliance can be determined visually and qualitatively by the PM2.5 Exceedance Screening Tool. The data browser tool has a map view and a time series view. The map view shows the PM2.5 concentration as colored circles for each station for a specific date. The time view shows the concentration time series for a selected site. The time for the map view can be selected by entering the desired date in the date box or by clicking the date in the time view. The station for the time series is selected either by choosing it from the station list box or by clicking on the station in the map view. (Note: Better description of generic DataFed browser needed). The coloring of the PM2.5 concentration values (circles) is adjusted such that concentrations above 35 ug/m3 are shown in red. This provides an easy and obvious way to identify the candidate samples for noncompliance.
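The screening step itself is a threshold filter over the day's samples. A minimal Python sketch, assuming the samples are available as hypothetical `(site, day, pm25)` records rather than through the browser interface:

```python
from typing import Iterable, NamedTuple

DAILY_STANDARD_UGM3 = 35.0  # 24-hour PM2.5 standard used for the red coloring


class Sample(NamedTuple):
    site: str
    day: str      # ISO date, e.g. "2007-05-24"
    pm25: float   # ug/m3


def candidate_exceedances(samples: Iterable[Sample],
                          threshold: float = DAILY_STANDARD_UGM3) -> list[Sample]:
    """Return the samples that would be drawn in red, highest first."""
    return sorted((s for s in samples if s.pm25 > threshold),
                  key=lambda s: s.pm25, reverse=True)
```

The result is only a candidate list; whether a candidate actually contributes to non-compliance depends on the multi-year statistic discussed in Section A.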

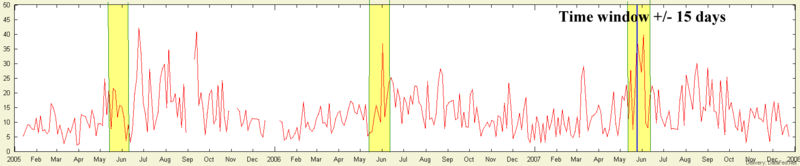

Concentration Anomaly Tool: A useful measure of the "normal" concentration for a given station is the 84th percentile (+1 sigma). A concentration anomaly is a deviation from the normal, and this tool permits the calculation of concentration anomalies. In the illustration below, a time window of +/- 15 days (a one-month window) was chosen. This period is longer than a typical exceptional event, but it is sufficiently short to preserve seasonality. To establish the normal values, the concentrations can be pooled over multiple years for the given time window measured in Julian days, e.g. days 160 to 190. Hence, a particular sample is considered anomalously high (deviating from the normal) if its value is substantially higher than the 84th percentile of the multi-year measurements for that "month" of the year. There is also a need for flexibility in defining the 'normal' when calculating the deviation above normal. For example, in the figures below, the excess concentration is plotted based on a 'normal' defined as the 50th, 84th (+1 sigma) and 95th percentile of the distribution. Clearly, on a given day, the excess above the 95th percentile is much smaller than the excess above the 50th percentile.
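The anomaly calculation can be sketched in Python as follows. Assumptions: the multi-year history is a list of `(date, value)` pairs for one station, the percentile uses linear interpolation (the same convention as NumPy's default), and the ±15-day window wraps across the year boundary using a 365-day year; the actual tool's conventions may differ.

```python
from datetime import date
from typing import Iterable


def percentile(values: Iterable[float], p: float) -> float:
    """Linear-interpolation percentile for p in [0, 100]."""
    xs = sorted(values)
    if not xs:
        raise ValueError("no values in the window")
    k = (len(xs) - 1) * p / 100.0
    lo = int(k)
    hi = min(lo + 1, len(xs) - 1)
    return xs[lo] + (xs[hi] - xs[lo]) * (k - lo)


def day_gap(a: int, b: int, year_len: int = 365) -> int:
    """Circular day-of-year distance, so windows wrap across 31 Dec / 1 Jan."""
    d = abs(a - b) % year_len
    return min(d, year_len - d)


def excess_above_normal(history: list[tuple[date, float]], day: date,
                        value: float, half_window: int = 15,
                        p: float = 84.0) -> tuple[float, float]:
    """Return (excess, normal): the sample's excess over the p-th percentile of
    all multi-year values within +/- half_window days of the same day of year."""
    target = day.timetuple().tm_yday
    window = [v for d, v in history
              if day_gap(d.timetuple().tm_yday, target) <= half_window]
    normal = percentile(window, p)
    return value - normal, normal
```

Passing `p=50.0`, `84.0` or `95.0` reproduces the three definitions of 'normal' compared in the text; a higher reference percentile gives a smaller excess for the same sample.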

Trajectory Analysis Tool: Backtrajectory analysis can be used to establish whether the air masses associated with an exceedance passed through the source region of the exceptional event. One approach is to combine the observed source of an exceptional event with the backtrajectories of high-concentration events. In the figures below, we show the color-coded concentration samples along with the backtrajectories, which show the air mass transport pathway. Given the availability of FRM PM2.5 concentrations, it is instructive to examine the backtrajectories (air mass histories) associated with above-standard concentrations. If those backtrajectories pass through areas of known exceptional sources (forest fires, dust storms), then the corresponding high concentrations may be attributed to that event.

On the other hand, if the backtrajectories pass through known anthropogenic emission regions, then those sources are likely responsible. Also, if the backtrajectories indicate slow air mass motion in the vicinity of the receptor, then atmospheric stagnation may be responsible for the accumulated high values. The figures below show the backtrajectories to those sites that have PM2.5 concentrations in excess of 35 ug/m3. The backtrajectories to all sites with concentrations below 35 ug/m3 were suppressed in order to highlight the transport pattern to the potentially violating sites.
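The stagnation criterion can be made concrete by measuring how far the air mass traveled just before arriving at the receptor. A Python sketch, assuming hourly backtrajectory points given as `(lon, lat)` with the receptor first; the 300 km in 24 h threshold (about 3.5 m/s mean transport speed) is a hypothetical value chosen for illustration, not a criterion from this proposal.

```python
from math import asin, cos, radians, sin, sqrt

EARTH_RADIUS_KM = 6371.0


def haversine_km(lon1: float, lat1: float, lon2: float, lat2: float) -> float:
    """Great-circle distance between two (lon, lat) points in kilometres."""
    dlon = radians(lon2 - lon1)
    dlat = radians(lat2 - lat1)
    a = sin(dlat / 2) ** 2 + cos(radians(lat1)) * cos(radians(lat2)) * sin(dlon / 2) ** 2
    return 2 * EARTH_RADIUS_KM * asin(sqrt(a))


def path_length_km(points: list[tuple[float, float]]) -> float:
    """Total distance along consecutive trajectory points."""
    return sum(haversine_km(*p, *q) for p, q in zip(points, points[1:]))


def looks_stagnant(traj: list[tuple[float, float]], hours: int = 24,
                   max_km: float = 300.0) -> bool:
    """True if the air mass moved less than max_km in the last `hours` hours.

    traj: hourly (lon, lat) points, receptor first, going back in time.
    max_km is a hypothetical threshold, not a regulatory value.
    """
    window = traj[: hours + 1]  # hours + 1 points span `hours` hourly steps
    return path_length_km(window) < max_km
```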


FASTNET: Community Event Analysis Network

FASTNET, the NEEDS community analysis network, is the "middleware" layer for performing general-purpose analysis that is useful for many other applications. Together with the DataFed infrastructure layer and the application component that produces the outputs specifically required by the EE Rule, it forms an end-to-end information processing system that, using a networked approach, takes observations as inputs and produces "actionable" knowledge necessary for decision making.

The analyst chooses the tool and the data for the tool to operate on. A single tool can provide multiple kinds of evidence and can work with different datasets, so the analyst also chooses which type of evidence the tool should provide. The analyst then synthesizes an array of evidence for the same line of justification, combining the different kinds of evidence for reasons that the analyst states. The resulting evidence is used in the flag justification report.

General event analysis will be conducted by a virtual community of analysts. It will consist of a core group of analysts whose efforts will ensure that:

- Major EEs with exceptional impacts on many sites are analyzed and described, so that individual States can use that general, authoritative event description.

- The core group will be available for consultations or to perform special analyses for difficult EE cases identified by the State, Regional or Federal offices.

- The core group will also continue the development of tools and methods for the general characterization of EEs by identifying new data sources, combining and fusing multi-sensory data, and interacting with the event forecast modelers.

The core group will include representation from Federal, Regional and State agencies, NASA, ...

Ad hoc members of the virtual community may include ... but harvesting without the active "participation" ....

Full characterization of events is a very labor-intensive, subjective and sporadic process. Collecting and harmonizing the variety of data sources, analyzing the various events in a coherent, compatible manner and assuring that significant events do not ‘fall through the cracks’ is a challenging task for research groups and for the community at large.

FASTNET (Fast Aerosol Sensing and Tools for Natural Event Tracking) (Poirot et al., 2005) is an air pollution event detection and characterization project, which includes a set of tools, methods and a community of analysts. FASTNET was initially developed and supported by the Regional Planning Organizations (RPOs) for the characterization of major natural events. Natural aerosol events from forest fire smoke and windblown dust are particularly interesting because of their large emission rates over short periods of time, their continental- and global-scale impacts, and their unpredictable, sporadic occurrence. Event analysis is performed in real time, while the detailed event characterization is, by necessity, conducted post facto.

The detection and characterization of short-term events is performed by monitoring a wide range of observations from real-time surface and satellite sensors, air quality simulations and forecast models, as well as more detailed data obtained by slower sensors and used in post-analysis. The gathering of the distributed data and the tools for data exploration and processing are provided by a companion project, DataFed, described in section..

Community interaction for event characterization is particularly vital, since aerosol events are being identified, recorded and, to various degrees, analyzed by diverse groups for many different purposes (see List of Analysis groups). We propose to harvest the event analyses being conducted by these groups and combine them with the analyses done by the FASTNET community. The community event analysis workspace, the Interactive Virtual Workgroup (IVW), is an open facility that allows active participation of this diverse virtual community in the acquisition, interpretation and discussion of aerosol events, and it also serves as an archive for cataloging past event analyses and descriptions. The searchable event catalog facilitates the finding and reuse of past analyses. The organization, statistics and spatial-temporal display of past aerosol events by type are also helpful in developing a long-term climatology of events. For example, the Regional Haze Rule requires the establishment of natural haze conditions, which are to be attained by 2064. [Add links out to ..] Conceivably, the event analyses performed in the community workspace could serve as triggers and guides to the States in deciding which station data to flag.

The outputs of the community event analysis also include event characterizations contributed by the joint effort of the participating community (see section..). These event descriptions are integrative and general-purpose, so that they are applicable to many users, such as informing the public, improving the model forecasts, as well as ... The community-based event descriptions are also necessary in the formal EE DSS, since many events extend well beyond the territory of any single State. Thus, they provide the broader context that is required for event justification. Further discussion of the role of the community event analysis in the EE DSS is given in section.. States have a local (site-specific) perspective; Regional and Federal offices have both a site-specific and a broader perspective.

Harvesting Community Analysis

Potential Analysts: VIEWS, Airnow, NPS, NOAA OESI, BlueSky, AIRPACT, States, NASA Earth Observatory, MODIS Rapid Response, NASA Gateway to Astronaut Photography, Texas Natural Resource Conservation Commission (TNRCC)

Analysis and Methods for EE Rule

When an event occurs, analysts are notified in real time through AQ monitoring consoles as well as by news, blog and media reports. Analysts choose tools and data and perform further analysis. The evidence (higher-quality integrated data) produced by the analysts is published with a description in near-real time as an event characterization, to be used by other applications including the EE DSS. When the EE DSS is triggered and the State analyst chooses to flag the station for a given date, further analysis is needed using both EE-specific and generic tools; the analyst chooses the tools that provide the specific evidence required.

The EE process starts with a trigger, either from a prominent event or from a high PM value. The analyst monitoring console allows analysts to identify potential EEs and to explore the evidence for whether an event is an EE (event monitoring and screening of observations for potential events); this requires a monitoring and screening tool (Part A). Station-centric views show concentration spikes (e.g. the anomaly tool displayed in Google Earth as an animation, with the anomaly expressed through an index for aerosol characterization).

A: Exceedance Description

The purpose of this DSS component is to demonstrate that the event satisfies the criteria set forth in 40 CFR 50.1(j). The legal definition of Exceptional Events is also reproduced in Section XXXX. In this component, one needs to establish that there is a potential pollutant source that is not controllable or preventable, such as forest fires, dust storms, or pollution from other, extra-jurisdictional regions, and that the site in question was non-compliant. In this step it is also necessary to establish whether a site is in potential violation of the PM2.5 standard: is the concentration over the 15 ug/m3 annual or the 35 ug/m3 daily standard? Only samples that contribute to non-compliance qualify for an EE flag. Next, evidence is gathered and presented showing that the event could have been caused by a source that is not reasonably controllable or preventable. The EE Rule identifies different categories of uncontrollable events: (a) exceedances due to transported pollution (transported African and Asian dust; smoke from Mexican fires; smoke and dust from mining and agricultural emissions); (b) natural events (natural disasters; high wind events; wildland fires; stratospheric ozone; prescribed fires); and (c) chemical spills and industrial accidents, structural fires, and terrorist attacks.

A.1 Approach:

The evidence needed for this component is gathered from multiple sources, each responding to a different requirement: the event description, the demonstration that the source is uncontrollable, the demonstration that the site is in potential violation of the NAAQS, etc.

Reports of the Event from Media and General Public. The general public provides additional qualitative observations of exceptional events shared through internet-accessible blog posts, photos through Flickr and videos through YouTube.

Exceptional events are inherently noticeable because of the intensity of their short-term emissions and the unusual impacts they have on the atmospheric environment. The recent proliferation of continuously recording webcams, individual digital photographs and home videos, as well as personal blog reports, now constitutes a significant new information source. Most of these observations are placed on the internet almost immediately, shared through internet-based repositories such as YouTube for videos, Flickr for images and blogs for personal accounts. Given the high density and short response time of these "sensors" to exceptional events, it is said that the Earth has now acquired a "skin" for detecting changes in the environment.

Measured PM2.5 Concentration Showing Exceedance (>15 ug/m3 or >35 ug/m3) - Site specific. The first step is to establish that a sample is a likely contributor to noncompliance. A site is in noncompliance with the daily standard if the three-year average of the annual 98th percentile of 24-hour PM2.5 concentrations exceeds 35 ug/m3. Hence, a site may be in compliance even if some samples exceed 35 ug/m3, provided that such values occur on less than about 2 percent of the sampled days.
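The daily-standard arithmetic can be sketched in Python. This is a simplified sketch, not a regulatory calculation: it assumes the rank convention of 40 CFR Part 50 Appendix N (the annual 98th percentile is the value at ascending position int(0.98 n) + 1), and it omits Appendix N's data-completeness and rounding rules, which should be checked before any regulatory use.

```python
from typing import Sequence

DAILY_STANDARD_UGM3 = 35.0


def annual_98th_percentile(daily_values: Sequence[float]) -> float:
    """Rank-based annual 98th percentile (assumed Appendix N convention)."""
    xs = sorted(daily_values)
    if not xs:
        raise ValueError("no daily values for the year")
    i = (98 * len(xs)) // 100  # integer part of 0.98 * n, computed exactly
    return xs[i]               # 0-based index i = 1-based position i + 1


def daily_design_value(years: Sequence[Sequence[float]]) -> float:
    """Average of the annual 98th percentiles over the years supplied (three)."""
    values = [annual_98th_percentile(y) for y in years]
    return sum(values) / len(values)


def violates_daily_standard(years: Sequence[Sequence[float]],
                            standard: float = DAILY_STANDARD_UGM3) -> bool:
    return daily_design_value(years) > standard
```

With 365 daily samples, the 98th percentile is the 8th-highest value, so a year with seven days at 50 ug/m3 and the rest at 10 ug/m3 has an annual 98th percentile of 10 ug/m3, while an eighth high day raises it to 50 ug/m3. This is why individual samples above 35 ug/m3 need not cause non-compliance.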

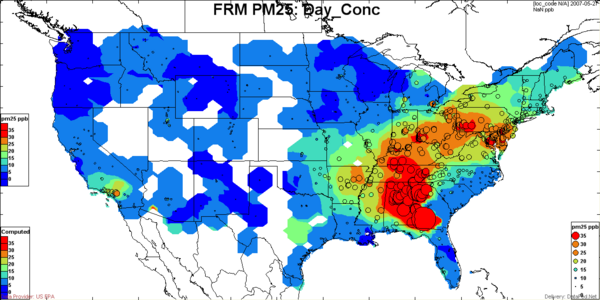

Screening for Potential PM2.5 Exceedances: The PM2.5 samples that are potential contributors to non-compliance can be determined visually and qualitatively with the PM2.5 data browser (the PM2.5 Exceedance Screening Tool described above). Its map view shows the PM2.5 concentration at each station as a colored circle for a selected date, and its time view shows the concentration time series for a selected site. Concentrations above 35 ug/m3 are shown in red, which provides an easy way to identify the candidate samples for noncompliance.

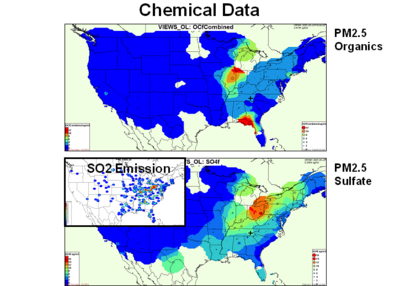

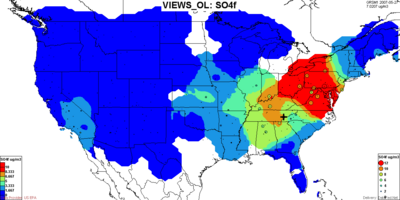

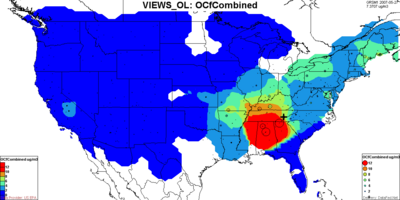

Measured PM2.5 Speciation Data for the Event Day - Site Specific (EC/OC/SO4...). A compelling line of evidence for establishing a causal relationship is the chemical fingerprint of the aerosol samples. Speciated aerosol monitoring data can indicate exceptional status through unusual chemical composition, e.g. organics for smoke and soil components for wind-blown dust, whereas sulfates point to non-exceptional sources. Speciated aerosol composition data thus provide strong evidence for the impact of smoke on ambient concentrations. The figures below show the chemical composition data for sulfate and organics for May 24 and May 27, respectively.
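A very simple form of the chemical-fingerprint reasoning is to compute mass fractions and check which component dominates. The Python sketch below does only that; the species keys, the mapping to source labels and the 50 percent dominance threshold are hypothetical simplifications for illustration, not a validated source-apportionment method.

```python
# Hypothetical mapping from a dominant component to a tentative source label.
SIGNATURES = {
    "OC": "smoke (organics)",
    "soil": "wind-blown dust",
    "SO4": "non-exceptional (sulfate)",
}


def mass_fractions(species: dict[str, float]) -> dict[str, float]:
    """Fraction of the speciated mass contributed by each component."""
    total = sum(species.values())
    if total <= 0:
        raise ValueError("total speciated mass must be positive")
    return {name: mass / total for name, mass in species.items()}


def tentative_signature(species: dict[str, float],
                        min_fraction: float = 0.5) -> str:
    """Label the sample by its dominant component, if one clearly dominates."""
    fractions = mass_fractions(species)
    name, frac = max(fractions.items(), key=lambda kv: kv[1])
    if frac >= min_fraction and name in SIGNATURES:
        return SIGNATURES[name]
    return "mixed / inconclusive"
```

Such a label is one line of evidence only; it would be weighed together with the trajectory, satellite and model evidence discussed in Section B.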

Location of Flagged Monitor Site and Suspected Source Area. Need maps of source and monitor site.

A.2 Challenges:

Science-technical Challenges

- What are key EE indicator parameters? (a) Satellite images, data (b) Airnow PM2.5, O3; (c) Folk-sensors

- When to gather the descriptive info on the EE? (a) Realtime, (b) Post-analysis, (c) Both?

- How to gather EE descriptor info? (a) dedicated sensors (b) (c) virtual monitoring dashboard/console of relevant parameters

Organizational Challenges

- Who should package the EE description? (a) A designated, national EE 'descriptor', (b) Individual State 'observer/descriptor', (c) Scientists/analysts as a virtual workgroup/wiki?

- Who should be receiving/notified with the EE description/trigger? (a) State analysts (b) public (c) Virtual workgroup

- Which agencies, orgs should be in; what governance on the EE detection/description? (a) fully open, all orgs, any time (b) (c) Core EE group + ad-hoc

Implementation Challenges

- What systems architecture? (a) ad-hoc, by State (b) dedicated EE detection system (c) system of systems

- Who develops, implements and applies the tools and methods?

- How is the entire activity to be 'connected'?

B: Clear Causal Relationship between the Data and the Event

The purpose of this component is to demonstrate that there is a clear causal relationship between the measurement under consideration and the event that is claimed to have affected the air quality in the area. There are multiple lines of evidence that can support the relationship between observations and the exceptional event. These include (1) backtrajectory analysis, to establish whether the air masses associated with the exceedance passed through the source region of the exceptional event; (2) speciated aerosol monitoring data, which can indicate exceptional status through unusual chemical composition, e.g. organics for smoke and soil components for wind-blown dust; (3) forward model simulations, which can also indicate a causal relationship; and (4) temporal signatures (spikes), which may yield additional evidence.

None of the methods providing evidence for causal relationship can provide 100 percent proof. However, the combination of evidence from multiple independent perspectives can provide sufficient weight for decision making. Hence, the purpose of this section is to illustrate the multiple lines of evidence and how to combine these for making an argument.
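One way to keep track of multiple lines of evidence is to record each finding with the line of evidence it belongs to and whether it supports the causal claim. The Python sketch below is a hypothetical bookkeeping structure, not a decision rule: it tallies distinct supporting and contradicting lines, so that repeated findings from the same line (e.g. several backtrajectories) are not counted as independent evidence.

```python
from dataclasses import dataclass
from enum import Enum


class Support(Enum):
    SUPPORTS = "supports"
    NEUTRAL = "neutral"
    CONTRADICTS = "contradicts"


@dataclass(frozen=True)
class Evidence:
    line: str          # e.g. "backtrajectory", "speciation", "forward model"
    support: Support
    note: str = ""


def summarize(evidence: list[Evidence]) -> dict[str, list[str]]:
    """List the distinct lines of evidence that support or contradict the claim."""
    def lines(kind: Support) -> list[str]:
        return sorted({e.line for e in evidence if e.support is kind})
    return {"supporting": lines(Support.SUPPORTS),
            "contradicting": lines(Support.CONTRADICTS)}
```

The weighing itself remains the analyst's judgment; the summary only makes explicit how many independent perspectives point the same way.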

B.1 Approach:

Impact on Ambient Concentration: Satellites

- Does the plume cross monitor locations?

- Are elevated PM readings observed there?
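Both questions can be checked mechanically once a plume outline is available as a polygon, for example one digitized from satellite imagery. The Python sketch uses the standard ray-casting point-in-polygon test in planar lon/lat coordinates (adequate for small plumes); the monitor records, the polygon and the 35 ug/m3 threshold default are illustrative assumptions.

```python
def point_in_polygon(lon: float, lat: float,
                     polygon: list[tuple[float, float]]) -> bool:
    """Ray-casting test: count edge crossings of a ray running east of the point."""
    inside = False
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]
        # Does this edge straddle the horizontal line through the point?
        if (y1 > lat) != (y2 > lat):
            x_cross = x1 + (lat - y1) * (x2 - x1) / (y2 - y1)
            if lon < x_cross:
                inside = not inside
    return inside


def elevated_monitors_under_plume(monitors: dict[str, tuple[float, float, float]],
                                  plume: list[tuple[float, float]],
                                  threshold: float = 35.0) -> list[str]:
    """monitors: name -> (lon, lat, pm25). Names inside the plume with pm25 > threshold."""
    return sorted(name for name, (lon, lat, pm25) in monitors.items()
                  if point_in_polygon(lon, lat, plume) and pm25 > threshold)
```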

Screening for Causes: Satellite images and satellite-derived aerosol products are useful for identifying exceptional events such as biomass burning (forest and agricultural fires) and wind-blown dust events. Both the satellite images and the numeric data products are generally available in near-real time. A limitation of the satellite data is that they are semi-quantitative, particularly for estimating surface concentrations. Furthermore, satellite observations of near-surface aerosols are only available under cloud-free conditions.

The fire pixels, obtained from satellite and other observations, provide the most direct evidence for the existence and location of major fires. In the above map of fire pixels, the cluster of fires in southern Georgia is evident.

The true color MODIS images from Terra (11am) and Aqua (1pm) show a rich texture of clouds, smoke/haze and land. The clouds over Georgia are clearly evident. Inspection of the images shows evidence of smoke/haze along the Mississippi River as well as over the Great Lakes.

The Aerosol Optical Thickness (AOT), derived from the MODIS sensors (Terra and Aqua satellites), shows a data void over Georgia due to clouds.

The Absorbing Aerosol Index provided by the OMI satellite shows intense smoke in the immediate vicinity of the fire pixels. The lack of OMI smoke signal further away from the fires indicates an absence of smoke. However, it is also possible that the smoke is below the cloud layer and therefore not visible from the satellite. Also, the OMI smoke signal is most sensitive to elevated smoke layers, while near-surface smoke is barely detected.

Auxiliary Observations

In many areas quantitative observations of PM2.5 are available through additional monitoring networks. In some areas there are also local monitoring stations that can augment the large-scale observations. Sun photometers, measuring the vertical aerosol optical thickness, fall in this category.

The goal of this step is to quantify a clear causal relationship between the exceedance at a given site and the source of the exceptional event.

Trajectory Analysis Background: One line of evidence for a causal relationship is to combine the observed source of an exceptional event with the backtrajectories of high-concentration events. Backtrajectory analysis can be used to establish whether the air masses associated with the exceedance passed through the source region of the exceptional event. In the figures below, we show the color-coded concentration samples along with the backtrajectories, which show the air mass transport pathway. Given the availability of FRM PM2.5 concentrations, it is instructive to examine the backtrajectories (air mass histories) associated with above-standard concentrations. If those backtrajectories pass through areas of known exceptional sources (forest fires, dust storms), then the corresponding high concentrations may be attributed to that event.

On the other hand, if the backtrajectories pass through known anthropogenic emission regions, then those sources are likely responsible. Also, if the backtrajectories indicate slow air mass motion in the vicinity of the receptor, then atmospheric stagnation may be responsible for the accumulated high values. The figures below show the backtrajectories to those sites that have PM2.5 concentrations in excess of 35 ug/m3. The backtrajectories to all sites with concentrations below 35 ug/m3 were suppressed in order to highlight the transport pattern to the potentially violating sites.

There are several limitations of the trajectory analyses presented here:

- The backtrajectories used in this analysis were calculated using the ATAD algorithm developed at NOAA ARL. They are derived from the observed wind fields of the radiosonde network and were pre-computed at CIRA as part of the VIEWS operational activity. The ATAD algorithm provides only two-dimensional trajectories by estimating the "characteristic" transport within the boundary layer. Consequently, ATAD trajectories cannot be used to estimate transport that occurs during three-dimensional meteorological situations, e.g., strong subsidence or significant convective lifting above the boundary layer. In several intercomparison studies, the ensemble ATAD trajectories were evaluated against the more elaborate HYSPLIT trajectory algorithm (BRAVO study).

- The pre-computed backtrajectories used in this analysis are only available for IMPROVE and STN sites. Since there are many more FRM PM2.5 sites, many monitoring stations do not have backtrajectories. As a result, some sites with red circles (>35 ug/m3) have no backtrajectories for air mass transport analysis.

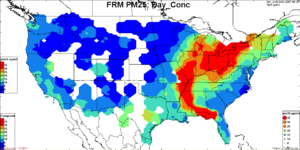

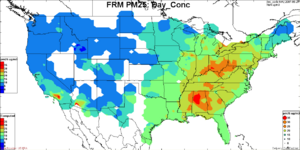

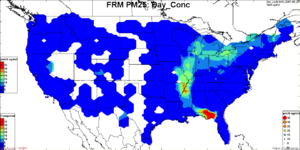

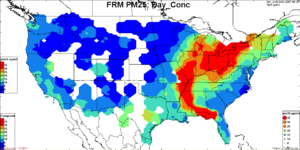

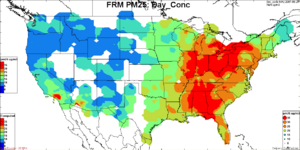

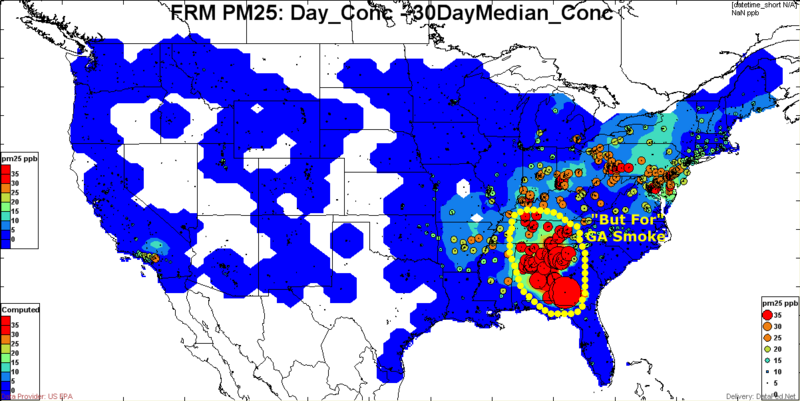

The images below show the FRM PM2.5 concentration as circles at each monitoring site that reported data on a given day. The diameter of each circle is proportional to the concentration. The concentration is also color coded in the interior of the circles: blue represents the lower end of the concentration scale, while red indicates the higher end. Because the scale maximum is set to 35 ug/m3, the red circles represent samples that may potentially be in non-compliance with the daily PM2.5 standard.
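The symbol encoding described above can be sketched minimally. The sketch assumes a simple linear blue-to-red ramp that saturates at the 35 ug/m3 scale maximum. The actual palette and size scaling used in the maps are not specified here, so `circle_style` and `px_per_unit` are hypothetical.

```python
def circle_style(conc: float, scale_max: float = 35.0,
                 px_per_unit: float = 0.5) -> tuple[float, str]:
    """Return (diameter_px, hex_color) for one monitoring site.

    The diameter is proportional to concentration. The color runs linearly from
    blue (0) to red (at or above scale_max), so red marks potential exceedances
    of the daily standard.
    """
    diameter = px_per_unit * max(conc, 0.0)
    frac = min(max(conc / scale_max, 0.0), 1.0)  # clamp to the color scale
    red = round(255 * frac)
    blue = round(255 * (1.0 - frac))
    return diameter, f"#{red:02x}00{blue:02x}"
```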

Analysis Approach: In the analysis below, the pattern of PM2.5 concentration is displayed to identify the monitoring sites that exhibit concentrations above 35 ug/m3 (red). In the next steps, two different trajectory analyses are applied to delineate which of the monitoring sites are likely to be impacted by the smoke. In the primary trajectory analysis, the smoke source area is delineated by the black rectangle, centered on the Okefenokee fire location. All backtrajectories that pass through that "source rectangle" are made visible, while the other trajectories are suppressed. The segments of individual trajectories prior to entering the source rectangle are drawn as thin blue lines. During and after passage through the source rectangle, the trajectory line thickness and color are set according to the concentration at the receptor site. By following the trajectories leaving the source rectangle, it is possible to delineate the regions of potential smoke impact. Sites in that region may potentially satisfy the "but for" condition. Monitoring sites whose backtrajectories do not pass through the source region do not qualify for the "but for" condition. Standard backtrajectory analysis can be applied to ascertain that the air mass did not pass through the fire zone.
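The source-rectangle test at the core of the primary trajectory analysis can be sketched as shown here. The sketch assumes trajectory endpoints ordered forward in time (oldest first, receptor last). It tests only the endpoints, so a trajectory that clips a corner of the rectangle between two hourly endpoints would be missed. The `SourceRect` bounds in the example are illustrative values roughly around the Okefenokee area, not the rectangle used in the figures.

```python
from dataclasses import dataclass
from typing import Optional

LatLon = tuple[float, float]


@dataclass(frozen=True)
class SourceRect:
    lat_min: float
    lat_max: float
    lon_min: float
    lon_max: float

    def contains(self, p: LatLon) -> bool:
        lat, lon = p
        return self.lat_min <= lat <= self.lat_max and self.lon_min <= lon <= self.lon_max


def split_at_source(points: list[LatLon],
                    source: SourceRect) -> Optional[tuple[list[LatLon], list[LatLon]]]:
    """Split a forward-time trajectory at its first endpoint inside the source rectangle.

    The upwind part (drawn as a thin blue line) ends at the entry point. The
    downwind part (styled by receptor concentration) runs from entry to the
    receptor. Returns None if no endpoint falls inside, i.e. the trajectory
    is suppressed.
    """
    for i, p in enumerate(points):
        if source.contains(p):
            return points[: i + 1], points[i:]
    return None


# Illustrative bounds only (hypothetical), roughly around the Okefenokee area.
okefenokee_box = SourceRect(30.0, 31.5, -83.0, -81.5)
```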

Evidence of high carbon concentrations, relative to typical and extreme historical levels.

Carbon Model Simulations. Model simulations and forecasts may also provide evidence for exceptional events. For example, the ability of regional and global-scale models to forecast wind-blown dust events is continuously improving, as evidenced by the good performance of the Naval Research Laboratory NAAPS global dust model. The simulation and forecasting of major smoke events is much more difficult due to the unpredictable location, timing, and injection height of biomass smoke emissions. Hence, reliable and tested smoke forecast models do not currently exist. Nevertheless, some model simulations provide useful additional evidence for the cause of the high PM levels.

VIEWS Speciation Data - Carbon

Browser for: VIEWS May 24

On May 24, the highest sulfate concentration was recorded just north of the Ohio River Valley. In contrast, the highest organic carbon concentrations were measured along a stretch from Georgia/Alabama to Wisconsin. This spatial separation of sulfate and organics indicates different source regions. Based on the combined chemical data and backtrajectories, it is evident that the high PM concentrations observed along the western edge of the red trajectory path are due to the impact of the Georgia smoke. In contrast, the high PM2.5 concentrations just north of the Ohio River Valley are primarily due to known, controllable sulfate sources.

On May 27, the spatial separation of sulfate and organics over the Eastern US is even more evident. Sulfate peaks over Virginia/Ohio/Pennsylvania, while the high-concentration patch of organics is located over the southern states, Georgia/Alabama.

For local event, was the concentration higher than surrounding monitors? For regional event, were ambient concentrations consistently high?

- Show PM2.5 mass measured at nearby monitors on that day

- Display in map form if possible
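One way to answer the local-versus-regional question is to compare a site with the monitors around it on the same day. The sketch assumes same-day (lat, lon, concentration) tuples and a hypothetical 150 km neighborhood radius. A large positive difference from the neighbor median points to a local event. A high fraction of neighbors above 35 ug/m3 points to a regional one.

```python
import math
from statistics import median
from typing import Optional

Obs = tuple[float, float, float]  # (lat, lon, daily PM2.5 in ug/m3)


def haversine_km(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance in kilometres."""
    p1, l1, p2, l2 = map(math.radians, (lat1, lon1, lat2, lon2))
    a = math.sin((p2 - p1) / 2) ** 2 + math.cos(p1) * math.cos(p2) * math.sin((l2 - l1) / 2) ** 2
    return 2 * 6371.0 * math.asin(math.sqrt(a))


def neighbor_summary(site: Obs, others: list[Obs], radius_km: float = 150.0,
                     standard: float = 35.0) -> Optional[dict]:
    """Compare one site with same-day monitors within radius_km.

    `others` must not include the site itself. Returns None if no neighbors are found.
    """
    lat0, lon0, c0 = site
    near = [c for lat, lon, c in others if haversine_km(lat0, lon0, lat, lon) <= radius_km]
    if not near:
        return None
    return {
        "n_neighbors": len(near),
        "excess_over_neighbor_median": c0 - median(near),
        "fraction_neighbors_above_standard": sum(c > standard for c in near) / len(near),
    }
```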

Here, the same monitoring data for May 24, 2007 are presented as contour maps of daily average PM2.5 mass concentration. On this day, a large swath of the eastern U.S. had PM2.5 concentrations above 35 ug/m3. However, the PM2.5 mass measurements alone do not reveal whether these potential exceedances were due to known controllable emissions or to exceptional causes.

B.2 Challenges

Science-technical Challenges

Establishing causality between alleged sources and site exceedances is a scientifically challenging task, since it requires a quantitative and defensible source-receptor relationship. Establishing such a relationship for EEs is further complicated by the unpredictable location and timing of the emissions and by the usually complex transport processes.

Organizational Challenges

The organizational challenges stem primarily from the need to acquire observations from many organizations.

Implementation Challenges

The key implementation challenge is the proper integration of the multiple lines of evidence for estimating the causality of the anomalous source impact.

C: The Event is in Excess of the "Normal" Values

- Other anomalies/checks: a value cannot differ by more than x% from the nearest neighbor or by more than y% from the previous hour.
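The two consistency checks in the note above can be written as a small function. The thresholds x and y are left as parameters because the text does not fix them. Measuring the percent difference relative to the neighbor or previous-hour value is an assumption of this sketch.

```python
def pct_diff(value: float, reference: float) -> float:
    """Absolute percent difference of value relative to reference."""
    if reference == 0:
        return 0.0 if value == 0 else float("inf")
    return abs(value - reference) / abs(reference) * 100.0


def passes_consistency_checks(value: float, nearest_neighbor: float, previous_hour: float,
                              x_pct: float, y_pct: float) -> bool:
    """True if value is within x% of the nearest neighbor and within y% of the previous hour."""
    return (pct_diff(value, nearest_neighbor) <= x_pct
            and pct_diff(value, previous_hour) <= y_pct)
```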

The purpose of this component is to demonstrate that the event is associated with a measured concentration in excess of normal historical fluctuations, including background. The sample is evaluated to determine whether the measured high value is in excess of the normal, historical values. If not, the sample is not exceptional. The magnitude of normal, historical values can be established through many different statistical measures. The air pollution pattern varies in space and time and also depends on the pollutant. In the case of PM, it also depends on the species in the PM chemical mix. The sulfate pattern, for example, is very different from that of nitrate, organics or dust. Thus, metrics that meaningfully describe the pattern require many parameters, including space, time and composition, along with those from parametric and/or nonparametric statistics.

C.1 Approach

Spatial Normals - PM2.5, Chemical Constituents. A useful measure of the "normal" concentration is the 84th percentile (+1 sigma) for a given station. In the illustration below, a time window of +/- 15 days (a one-month window) was chosen. This period is longer than a typical exceptional event, but it is sufficiently short to preserve seasonality. To establish the normal values, the concentrations can be aggregated over multiple years for the given time window measured in Julian days, e.g., days 160 to 190. Hence, a particular sample is considered anomalously high (deviating from the normal) if its value is substantially higher than the 84th percentile of the multi-year measurements for that "month" of the year. See Help:Using the Concentration Anomaly Tool to learn how to change these parameters.
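A sketch of the windowed "normal" computation for a single station follows. It assumes a dictionary of multi-year daily values keyed by date. The function pools all values whose day of year lies within +/- 15 days of the target, wrapping across the year boundary. It then takes the 84th percentile with the standard library's `statistics.quantiles` (inclusive method, i.e., linear interpolation). Leap-day offsets are ignored. Whether to exclude the year being evaluated from the pool is a design choice left to the caller.

```python
from datetime import date
from statistics import quantiles


def doy_distance(a: int, b: int, year_len: int = 365) -> int:
    """Distance between two days of year, wrapping across the year boundary."""
    d = abs(a - b)
    return min(d, year_len - d)


def window_normal(history: dict[date, float], target_doy: int,
                  half_window: int = 15, q: int = 84) -> float:
    """q-th percentile of all multi-year values within +/- half_window days of target_doy."""
    sample = [v for day, v in history.items()
              if doy_distance(day.timetuple().tm_yday, target_doy) <= half_window]
    # quantiles(n=100) returns the 1st..99th percentiles; "inclusive" = linear interpolation
    return quantiles(sample, n=100, method="inclusive")[q - 1]


def concentration_anomaly(value: float, normal_high: float) -> float:
    """Excess of the day's value over the normal-high (e.g., 84th percentile) level."""
    return value - normal_high
```

For the window quoted in the text, the call would use `target_doy=175` with the default `half_window=15`, which covers days 160 to 190.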

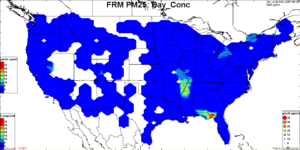

In the figures below, the concentration and anomaly patterns are illustrated for two days, 2007-05-24 and 2007-05-27. For each day, the leftmost figure shows the measured daily average PM2.5 concentration. Its circles are color coded using the same scheme as the contours of the concentration field. The middle figure shows the contour field of the 84th percentile PM2.5 concentrations, with the color-coded circles still representing the concentration on the selected day. The rightmost figure shows the concentration anomaly, i.e., the excess of the current day's values over the 84th percentile values.

It will be necessary to decide (1) what the specific metric for the "normal" high concentration should be, and (2) how far above the normal high a value must be to qualify as exceptionally high. With these settings specified, this tool can be used to automatically detect whether a station's anomaly is exceptional.
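The two decisions named above map naturally onto two settings. In the sketch below, a hypothetical `AnomalySettings` record holds them. A station is flagged when its value exceeds the chosen percentile of its historical window by at least `min_excess` ug/m3. No default is given for `min_excess` because the text leaves that choice open. The percentile defaults to the 84th, as used in the illustrations, and can be switched to the 95th.

```python
from dataclasses import dataclass
from statistics import quantiles


@dataclass(frozen=True)
class AnomalySettings:
    min_excess: float     # decision (2): required excess above the normal high, ug/m3
    percentile: int = 84  # decision (1): percentile used as the "normal" high


def flag_exceptional(stations: dict[str, tuple[float, list[float]]],
                     settings: AnomalySettings) -> dict[str, bool]:
    """stations maps station_id -> (value on the day, historical window values)."""
    flags = {}
    for station_id, (value, window) in stations.items():
        normal_high = quantiles(window, n=100, method="inclusive")[settings.percentile - 1]
        flags[station_id] = value - normal_high >= settings.min_excess
    return flags
```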

While the rightmost figures show that the excess concentrations are high, these by themselves cannot establish whether the origin is from controllable or exceptional sources.

May 24, 2007

Browser for: FRMPM25_Day | FRMPM25_84perc | FRMPM25_diff

84th Percentile

95th Percentile

The FRM data show that on May 24, the high excess concentrations over the median were confined to a well-defined plume north of the Ohio River Valley. The deep blue areas adjacent to the PM plume indicate that large portions of the eastern U.S. were near or below their median values.


Sulfate Model Simulations

C.2. Challenges:

Science-technical Challenges

EEs require that a given sample be anomalous compared to historical values. Detecting anomalies requires a meaningful metric that quantifies the "normal" values. Unfortunately, quantifying the normal value of air pollutants is difficult and ambiguous, since pollutant concentrations vary in time and space much more than the ... Hence, the key challenge is to devise suitable metrics for normal, normal-high, etc.

Organizational Challenges

The organizational challenge is to devise a suitable metric for the normal that incorporates both the requirements of the regulatory agency, EPA, and those of the scientific community that is familiar with the nature of pollutant variability.

Implementation Challenges

Once suitable metrics for the normal are derived, it is desirable to develop tools that automatically calculate the anomalies and display them through appropriate visualizations of spatial and temporal anomalies.

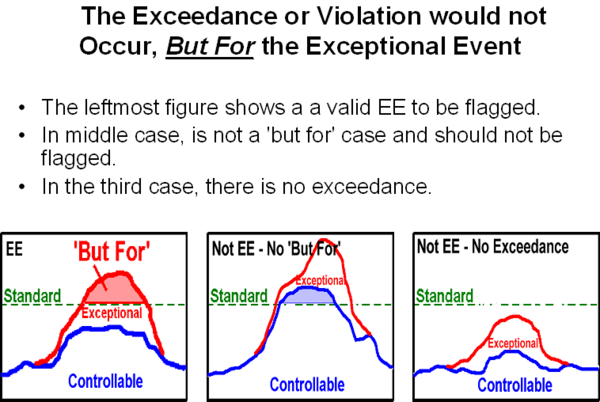

D: The Exceedance or Violation would not Occur, But For the Exceptional Event

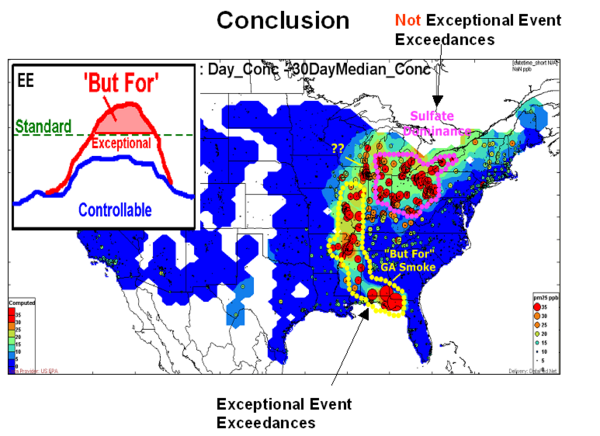

There would have been no exceedance or violation but for the event. Finally, the contribution of the exceptional source to the sample is compared to that of 'normal' anthropogenic sources. Only samples for which the exceedance would not have occurred but for the contribution of the exceptional source qualify for an EE flag.

According to the EE Rule, observations can be EE-flagged if the violation is caused by the exceptional event. Given the subtleties of the EE Rule, the Exceptional Event criteria are illustrated graphically as follows.

- The leftmost figure shows a case in which the 'exceptional' concentration raises the level above the standard. This is a valid EE to be flagged.

- In the next case, the concentration from controllable sources is sufficient to cause the exceedance. This is not a 'but for' case and should not be flagged.

- In the third case, there is no exceedance. Hence, there is no justification for an EE flag.
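Once a sample has been split into a controllable and an exceptional contribution, the three cases above reduce to a simple decision rule. The sketch assumes that this split is already available and additive, which is precisely the scientifically hard part. The 35 ug/m3 default reflects the daily PM2.5 standard used throughout this page. `ButForCase` and `classify_but_for` are names introduced for illustration.

```python
from enum import Enum


class ButForCase(Enum):
    VALID_EE_FLAG = "exceptional contribution raises the level above the standard"
    CONTROLLABLE_EXCEEDS = "controllable sources alone exceed the standard; not a 'but for' case"
    NO_EXCEEDANCE = "no exceedance; no justification for an EE flag"


def classify_but_for(controllable: float, exceptional: float,
                     standard: float = 35.0) -> ButForCase:
    """Apply the three cases to an (assumed additive) split of the sample, in ug/m3."""
    if controllable + exceptional <= standard:
        return ButForCase.NO_EXCEEDANCE
    if controllable > standard:
        return ButForCase.CONTROLLABLE_EXCEEDS
    return ButForCase.VALID_EE_FLAG
```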

D.1 Approach:

D.2 Challenges:

Science-technical Challenges

Organizational Challenges

Implementation Challenges

Based on the above analysis, it is now possible to delineate the regions of the Eastern U.S. in which the potential exceedances would not have occurred but for the presence of the Georgia smoke, which caused an exceptional event.

Conclusion: On May 24, the exceedances north of the Ohio River Valley were caused by the known anthropogenic sulfur emissions. This is deduced from the high sulfate concentrations and from air mass trajectories that passed over the high-emission regions. The region over which the exceedances would not have occurred but for the presence of the exceptional Georgia smoke is highlighted with yellow dots. The evidence arises primarily from (1) the high concentration of aerosol organics, (2) the backtrajectories to exceeding sites passing over the known fire region, (3) model simulations of sulfate and smoke, and (4) numerous qualitative reports of smoke in the region. The level of sulfates was sufficiently low (< 10 ug/m3) and the level of organics sufficiently high that the violation would not have occurred but for the smoke organics.


Conclusion: On May 27, the exceedances were clustered downwind of the GA smoke source. The multi-state region over parts of GA, AL, TN had low SO4 concentration (< 6 ug/m3), high OC concentration in excess of 10 ug/m3 and backtrajectories pointing toward the GA fires as the source of the organics. Thus, it is concluded that these exceedances over GA, AL, TN would not have occurred but for the impact of the GA smoke plume.
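The May 27 criteria can be expressed as a screening rule over per-site records. The sketch is illustrative only. It assumes that FRM PM2.5, speciated SO4 and OC, and a trajectory-through-source flag are already matched for each site. In practice, FRM and speciation sites are often not co-located. The defaults are the thresholds quoted in the conclusion above (SO4 < 6 ug/m3, OC > 10 ug/m3). `SiteDay` and `smoke_but_for_sites` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class SiteDay:
    site_id: str
    pm25: float                # FRM daily PM2.5, ug/m3
    so4: float                 # sulfate, ug/m3
    oc: float                  # organic carbon, ug/m3
    traj_through_source: bool  # backtrajectory passes the fire source region


def smoke_but_for_sites(days: list[SiteDay], standard: float = 35.0,
                        so4_max: float = 6.0, oc_min: float = 10.0) -> list[str]:
    """Sites that exceed the standard with low sulfate, high organics and a fire-origin trajectory."""
    return [d.site_id for d in days
            if d.pm25 > standard and d.so4 < so4_max and d.oc > oc_min and d.traj_through_source]
```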

- The general goal and framework need to satisfy the EE Rule

- Methods of EE flagging can be developed based on the EE Rule and constrained by the available data/analysis resources

- EE DSS consists of:

- EE Flagging template - lays out sequence and possible lines of evidence

- Tools (based on the template) that help in preparing flags (States), approving them (EPA Regions), and deciding on them (Federal EPA)

The DSS needs to be robust and up to date, operating either continuously or in batch mode. EPA will need to be asked to expose the FRM PM2.5 and ozone data, e.g., every 3 months after sampling.

Members of the 'Core' network need to agree to provide robust service of data and tools.

Application to Other DSS's and Significance

Summary and Significance

A statement of the perceived significance of the proposed work to the objectives of the solicitation and to NASA interests and programs in general.

- Better air quality management decisions

- Wider distribution of NASA products

- Demonstration of GEOSS concept

The objectives and expected significance of the proposed research, especially as related to the objectives given in the NRA;

The relevance of the proposed work to past, present, and/or future NASA programs and interests or to the specific objectives given in the NRA;

EE emission estimation from iterative observation-model emission refinement; better forecasts; better estimation of the SIP baseline.

Other DSSs: VIEWS, Airnow, PULSENet, BlueSky, AIRPACT, BAMS

From NRA

As the main body of the proposal, this section should cover the following material:

- Objectives of the proposed activity and relevance to NASA’s Strategic Goals and Outcomes given in Table 1 in the Summary of Solicitation of this NRA;

- Strategic Subgoal 3A: Study planet Earth from space to advance scientific understanding and meet societal needs. - 3A.7 Expand and accelerate the realization of societal benefits from Earth system science.

- Methodology to be employed, including discussion of the innovative aspects and rationale for NASA Earth research results to be integrated;

- Systematic approach to integrate Earth science results into the decision-making activity (existing or new) and to develop and test the integrated system and address integration problems (technical, computational, organizational, etc.);

- Approach to quantify improvements in the system performance, including characterization of risk and uncertainties;

- Approach to quantify (or quantitatively estimate) the socioeconomic value and benefits from the resulting improvements in decision-making;

- Challenges and risks affecting project success (technical, policy, operations, management, etc.) and the approach to address the challenges and risks; and

- Relevant tables/figures that demonstrate key points of the proposal.

Proposals seeking to create a new decision-making activity should describe the tool, system, assessment, etc. in detail, including the decision analysis, factors, unique roles for Earth science research results, and other pertinent information.

From NASA Proposer Guidebook - Technical/Science/Management Section

[Ref.: Appendix B, Parts (c)(4), (c)(5), and in-part (c)(6)] As the main body of the proposal, this section must cover the following topics in the order given, all within the specified page limit. Unless specified otherwise in the NRA, the limit is 15 pages using the default values given in Section 2.3.1:

- The objectives and expected significance of the proposed research, especially as related to the objectives given in the NRA;

- The technical approach and methodology to be employed in conducting the proposed research, including a description of any hardware proposed to be built in order to carry out the research, as well as any special facilities of the proposing organization(s) and/or capabilities of the Proposer(s) that would be used for carrying out the work. (Note: ref. also Section 2.3.10(a) concerning the description of critical existing equipment needed for carrying out the proposed research and the Instructions for the Budget Justification in Section 2.3.10 for further discussion of costing details needed for proposals involving significant hardware, software, and/or ground systems development, and, as may be allowed by an NRA, proposals for flight instruments);

- The perceived impact of the proposed work to the state of knowledge in the field and, if the proposal is offered as a direct successor to an existing NASA award, how the proposed work is expected to build on and otherwise extend previous accomplishments supported by NASA;

- The relevance of the proposed work to past, present, and/or future NASA programs and interests or to the specific objectives given in the NRA;

- To facilitate data sharing where appropriate, as part of their technical proposal, the Proposer shall provide a data-sharing plan and shall provide evidence (if any) of any past data-sharing practices.

The Scientific/Technical/Management Section may contain illustrations and figures that amplify and demonstrate key points of the proposal (including milestone schedules, as appropriate). However, they must be of an easily viewed size and have self-contained captions that do not contain critical information not provided elsewhere in the proposal.