Difference between revisions of "WCS Access to netCDF Files"


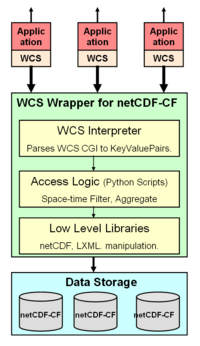

Revision as of 16:23, November 13, 2007

Wrapper software for WCS access to netCDF files

Background

The netCDF-CF file format is a common way of storing and transferring gridded meteorological and air quality model results. Conformance with the CF convention further enhances the semantics of netCDF files, and most recent model outputs conform to netCDF-CF. The netCDF-CF convention is a key step toward standards-based storage and transmission of Earth Science data.

The low-level libraries provided by Unidata offer a clear API for creating netCDF files and accessing the data in them. However, such libraries alone are not adequate for accessing user-specified data subsets over the Web. For standardized web-based access, another layer of software is required to connect the high-level user queries to the low-level interface of the netCDF libraries. We call this interface the WCS-netCDF Wrapper.

The initial effort is focused on developing and applying the WCS wrapper template to the HTAP global ozone model outputs created for the HTAP global model comparison study. These model outputs are being managed by Martin Schultz's group at Forschungszentrum Jülich, Germany. It is hoped that, following a successful implementation at Jülich, the WCS interface could also be implemented at Michael Schulz's AeroCom server, which archives the global aerosol model outputs.

Data access through OGC WCS protocol

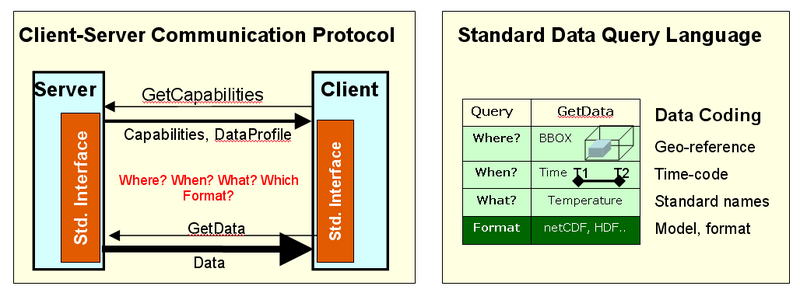

The WCS protocol uses a physical coordinate system for accessing data: it explicitly supports space-time queries in which a geographic bounding box and a time range define the data access request. The purpose of this effort is to create a portable software template for accessing netCDF-formatted data using the WCS protocol. Using that protocol will allow any WCS-compliant client software to access the stored data. It is hoped that the standards-based data access service will promote the development and use of distributed data processing and analysis tools.
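As an illustration of such a space-time query, the following minimal sketch builds a getCoverage URL from a bounding box and a time range. It assumes WCS 1.0.0-style key-value parameters (the page does not state which WCS version the wrapper implements); the server address, the coverage name `ozone`, the `start/end` encoding of the time range, and the function name `build_getcoverage_url` are all hypothetical.

```python
from urllib.parse import urlencode, urlsplit, parse_qs


def build_getcoverage_url(base_url, coverage, bbox, time_range, fmt="NetCDF"):
    """Build a GetCoverage URL from a bounding box and a time range.

    bbox is (min_lon, min_lat, max_lon, max_lat) in degrees;
    time_range is (start, end) as ISO 8601 date strings.
    """
    min_lon, min_lat, max_lon, max_lat = bbox
    if min_lon > max_lon or min_lat > max_lat:
        raise ValueError("bounding box corners are out of order")
    params = {
        "SERVICE": "WCS",
        "VERSION": "1.0.0",          # assumed protocol version
        "REQUEST": "GetCoverage",
        "COVERAGE": coverage,        # hypothetical coverage name
        "CRS": "EPSG:4326",          # plain longitude/latitude coordinates
        "BBOX": ",".join(str(v) for v in bbox),
        "TIME": "/".join(time_range),  # assumed start/end encoding
        "FORMAT": fmt,
    }
    # urlencode percent-escapes commas, slashes and colons in the values.
    return base_url + "?" + urlencode(params)


url = build_getcoverage_url(
    "http://example.org/wcs",        # placeholder server address
    "ozone",
    (-10.0, 35.0, 30.0, 60.0),
    ("2001-06-01", "2001-06-30"),
)
# Decode the query again to confirm the parameters survive the round trip.
query = parse_qs(urlsplit(url).query)
print(query["BBOX"][0])
print(query["TIME"][0])
```

Because the request is expressed in physical coordinates, the client needs no knowledge of the array indices or file layout on the server.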

The adoption of a set of interoperability standards is a necessary condition for building an agile data system from loosely coupled components for HTAP. During 2006/2007, members of HTAP TF have made considerable progress in evaluating and selecting suitable standards. They also participated in the extension of several international standards, most notably standard names, data formats and a standard data query language.

The naming of individual chemical parameters will follow the CF convention used by the Climate and Forecast (CF) communities. The existing names for atmospheric chemicals in the CF convention were inadequate to accommodate all the parameters used in the HTAP modeling. To remedy this shortcoming, the list of standard names was extended by the HTAP community under the leadership of C. Textor. She also became a member of the CF convention board that is the custodian of the standard names. The standard names for HTAP models were developed using a collaborative wiki workspace. It should be noted, however, that at this time the CF naming convention has only been developed for the model parameters and not for the various observational parameters. (See Textor, need a better paragraph.)

For modeling data, the use of netCDF-CF as a standard format is recommended. The use of a standard physical data format and the CF naming conventions allows, in principle, a semantically well-defined data transfer between data provider and consumer services. The netCDF-CF data format is most useful for the exchange of multidimensional gridded model data. It was also demonstrated that the netCDF format is well suited for the encoding and transfer of station monitoring data. Traditionally, satellite data have been encoded and transferred using the HDF format.

Figure 2. Interoperability protocols and standard query language

The third aspect of data interoperability is a standard data query language through which user services request specific data from the provider services. It is proposed that the HTAP data information system adopt the Web Coverage Service (WCS) as the standard data query language. The WCS data access protocol is defined by the international Open Geospatial Consortium (OGC), which is also the key organization responsible for interoperability standards in GEOSS. Since the WCS protocol was originally developed for the GIS community, it was necessary to adapt it to the needs of "Fluid Earth Sciences". Members of the HTAP group have been actively participating in the adaptation and testing of the WCS interoperability standards.

Wrapper software description and installation

Design goals of Datafed WCS Wrapper server:

- Promote WCS as a standard interface.

- Promote NetCDF and CF-1.0 conventions

- Make it easy to deliver your data via WCS from your own server or workstation.

- No intrusion: You can have the NetCDF files where you want them to be.

- A normal PC is enough, no need to have a server.

- Minimal configuration

The WCS protocol consists of three service calls:

- getCapabilities

- describeCoverage

- getCoverage

The purpose of the wrapper software is to respond to these three HTTP GET queries. The main query is getCoverage, which returns the requested data subset. getCapabilities returns an XML document that lists the datasets offered by the server. describeCoverage describes each data layer in more detail.
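A minimal sketch of how a server might route these three queries is given below. It is not the wrapper's actual code: the handler functions are hypothetical placeholders that return strings instead of XML or netCDF, and the case-insensitive handling of the `REQUEST` parameter is an assumption.

```python
from urllib.parse import parse_qs


def get_capabilities(params):
    # Placeholder: a real server would return the Capabilities XML.
    return "capabilities document (placeholder)"


def describe_coverage(params):
    # Placeholder: a real server would return the coverage description XML.
    return "description of coverage %s (placeholder)" % params.get("coverage", "?")


def get_coverage(params):
    # Placeholder: a real server would return the requested data subset.
    return "data subset of coverage %s (placeholder)" % params.get("coverage", "?")


HANDLERS = {
    "getcapabilities": get_capabilities,
    "describecoverage": describe_coverage,
    "getcoverage": get_coverage,
}


def dispatch(query_string):
    """Route an HTTP GET query string to one of the three service calls."""
    # parse_qs maps each key to a list of values; keep the first value
    # and lower-case the keys so parameter names match in any case.
    raw = parse_qs(query_string)
    params = {key.lower(): values[0] for key, values in raw.items()}
    request = params.get("request", "").lower()
    handler = HANDLERS.get(request)
    if handler is None:
        raise ValueError("unsupported or missing REQUEST: %r" % request)
    return handler(params)


print(dispatch("SERVICE=WCS&REQUEST=GetCapabilities"))
print(dispatch("service=WCS&request=DescribeCoverage&coverage=ozone"))
```

Keeping the handlers in a lookup table means an unknown request name fails in one place, with a single error message.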

The WCS-netCDF wrapper software was developed for DataFed using Python 2.5, C++, and the lxml 1.3.6 and NetCDF 3.6.1 beta 1 libraries. It has been tested on Windows and Linux operating systems, and public test servers have been prepared for both platforms.

The main components of the wrapper software are shown schematically in the Figure below. At the lowest level are open source libraries for accessing netCDF and XML files. At the next level are Python scripts for extracting spatially subset slices for specific parameters and times. At the third level is the WCS interpreter that parses the WCS URL. Administrative tools are also provided for creating the Capabilities and the Description documents.
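The spatial subsetting step at the second level can be sketched as follows. This is an illustration under simplifying assumptions, not the wrapper's own scripts: plain Python lists stand in for a netCDF variable and its coordinate arrays, both coordinate axes are assumed to be sorted in ascending order, and the function names are hypothetical.

```python
from bisect import bisect_left, bisect_right


def index_range(coords, low, high):
    """Return (start, stop) such that coords[start:stop] lies in [low, high].

    coords must be sorted in ascending order.
    """
    start = bisect_left(coords, low)    # first index with coords[i] >= low
    stop = bisect_right(coords, high)   # one past the last coords[i] <= high
    return start, stop


def subset_grid(grid, lats, lons, bbox):
    """Cut the rows and columns of a lat/lon grid that fall inside bbox.

    grid is indexed as grid[lat_index][lon_index];
    bbox is (min_lon, min_lat, max_lon, max_lat).
    """
    min_lon, min_lat, max_lon, max_lat = bbox
    row_start, row_stop = index_range(lats, min_lat, max_lat)
    col_start, col_stop = index_range(lons, min_lon, max_lon)
    return [row[col_start:col_stop] for row in grid[row_start:row_stop]]


# A toy 4 x 5 grid whose cell values encode their own row and column.
lats = [30.0, 40.0, 50.0, 60.0]
lons = [-20.0, -10.0, 0.0, 10.0, 20.0]
grid = [[10 * r + c for c in range(len(lons))] for r in range(len(lats))]

subset = subset_grid(grid, lats, lons, (-10.0, 35.0, 10.0, 55.0))
print(subset)  # [[11, 12, 13], [21, 22, 23]]
```

Translating the bounding box into index ranges first means only the requested slice has to be read, which matters when the underlying files are large.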