HTAP Report, Sub-Chap. 6 - Data/Info System

This wiki page is intended to be the collaborative workspace for Task Force members interested in the HTAP Information System development, implementation and use. The contents can be freely edited by anyone who is logged in. Comments and feedback regarding the wiki page or its content can also be sent by email to rhusar@me.wustl.edu.

Introduction

The purpose of Chapter 6 is to discuss the need to integrate information from observations, models, and emissions inventories to better understand intercontinental transport. A draft outline of Chapter 6 is also on this wiki. This section focuses on the information system in support of the integration of observations, emissions and models for HTAP.

The previous chapters have assessed the current knowledge of HTAP through the review of existing literature and through a set of model intercomparison studies. These assessments highlight the complexities of atmospheric transport and our limited ability to consistently estimate the magnitude of hemispheric transport. A reconciliation of the observations with the current set of models is an even larger challenge.

Recent developments in air quality monitoring, modeling and information technologies offer outstanding opportunities to fulfill the information needs for the HTAP integration effort. The data from surface-based air pollution monitoring networks now routinely provide spatio-temporal and chemical patterns of ozone and PM. Satellite sensors with global coverage and kilometer-scale spatial resolution now provide real-time snapshots which depict the pattern of industrial haze, smoke, dust, as well as some gaseous species in stunning detail. Detailed physico-chemical models are now capable of simulating the spatio-temporal pollutant pattern on regional and global scales. The ‘terabytes’ of data from observations and models can now be stored, processed and delivered in near-real time. The instantaneous ‘horizontal’ diffusion of information via the Internet now permits, in principle, the delivery of the right information to the right people at the right place and time. Standardized computer-computer communication languages and Service-Oriented Architectures (SOA) now facilitate the flexible access, quality assurance and processing of raw data into high-grade ‘actionable’ knowledge suitable for HTAP policy decisions. Last but not least, the World Wide Web has opened the way to generous sharing of data, models and tools leading to collaborative analysis in virtual workgroups. Nevertheless, air quality data analyses and data-model integration face significant hurdles. The section below presents an architectural framework, an implementation strategy, and a set of action-oriented recommendations for the proposed HTAP information system.

Interdependence of Emissions, Models and Observations

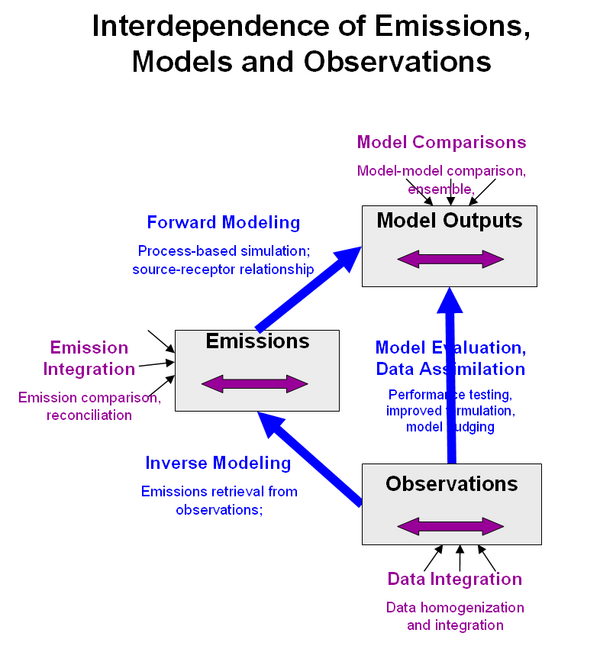

The assessment of the current observational evidence (Chapter 3), emission inventories (Chapter 4) and global chemical transport modeling (Chapter 5) indicates that these three aspects of HTAP are interdependent. A particularly burning issue is the large uncertainty in emissions for some species. The linkages and dependencies for observations, emission inventories and modeling are shown schematically in Figure 1. The boxes represent information resources from observations, emission inventories and modeling. The purple arrows indicate connections and operations within each of the three boxes, such as inter-comparisons, reconciliation and homogenization of data.

The blue arrows represent the major connections and operations that link observations, emission inventories and modeling. Observations provide the data for model validation as well as for real-time or retrospective assimilation into the models (reanalysis). Through inverse modeling, observations also allow emission estimation for sources that do not have reliable emission inventories (e.g. sulfur and nitrogen emissions in developing countries, biomass burning, dust emissions, biogenics). In many areas of the world such poorly characterized emissions dominate the concentration of the species. Lacking reliable emission inventories, chemical models cannot provide simulations or forecasts that are verifiable by observations.

The second problem associated with model verification is the lack of global-scale air quality data suitable for model validation. At this time, only satellite data for aerosols, NO2, CO, and formaldehyde are available with the necessary spatial and temporal coverage. However, such satellite data represent columnar integrals through the atmosphere and therefore do not help in verifying at which elevation a pollutant resides. In the case of aerosols, a further limitation is that the aerosol optical thickness (AOT) retrieved from the satellites includes all chemical species regardless of their source and composition. Hence, even a matching AOT between model and observations is no guarantee that the chemical apportionment is correct.
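The two limitations can be stated compactly. As a sketch using standard radiative-transfer notation (the symbols below are illustrative and do not appear elsewhere in this report), the column AOT at wavelength λ is the vertical integral of the extinction coefficients of all aerosol species i, from the surface to the top of the atmosphere:

```latex
\tau(\lambda) = \int_{0}^{z_{\mathrm{TOA}}} \sum_{i} \sigma_{\mathrm{ext},i}(\lambda, z)\, \mathrm{d}z
```

Because both the integral over height z and the sum over species i collapse into a single number, different vertical profiles and different chemical splits can yield the same AOT.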

Complete aerosol chemical composition data are available only for limited sampling locations in a few regions of the world. Fortunately, in the US territories, rich datasets are available from the IMPROVE aerosol chemistry network (over 200 sites, since the 1980s) and from the EPA Speciation Trends Network (about 150 sites, since 2002). Hourly surface ozone data from 600+ stations along with limited speciated gas chemistry data are available for model validation. The emission inventories for anthropogenic pollutants over North America are also reasonably well established. Given the dual difficulty of poor emission inventories and poor observational coverage, it is recommended that the systematic chemical model validation be initiated using the 350-station aerosol chemistry data and the 600-station surface ozone monitoring data over the US. Within Europe, extensive chemical monitoring is being conducted, ..... model evaluation with European data (expand European model-data description?). Subsequently, the verification could be expanded to other global locations with sparser data coverage.

It is evident that a full reconciliation will require an integrated approach where the best available knowledge from observations, emissions and models is combined. Furthermore, reconciliation will probably require considerable iteration until the deviations are minimized. Thus, the supporting information system will need to connect and facilitate the data flow of observations, emissions and models.

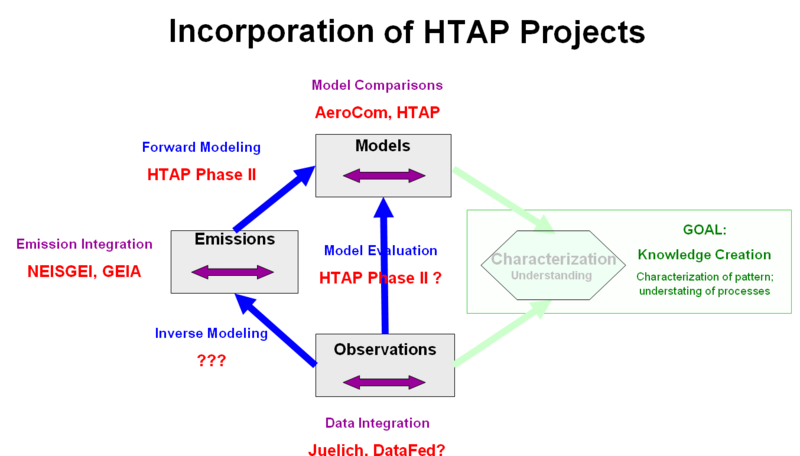

Within HTAP, a number of integration and reconciliation projects are already under way....need more on this??...(a chart like the one below would convey the diverse projects that emerged through or in response to HTAP)

HTAP Information System (HTAP IS)

The HTAP Information System has to facilitate communication, provide a shared workspace and offer tools and methods for data/model analysis. The HTAP IS needs to facilitate open communication between the collaborating analysts. The IT technologies may include wikis and other groupware, social software, science blogs, Skype, etc., along with the traditional communication channels. The HTAP TF also needs to have a workspace where the tools and artifacts of the TF are housed. The most important component of the HTAP IS is the HTAP Integrated Data System (HTAP IDS) discussed below.

The HTAP IS will consist of a connectivity infrastructure (cyberinfrastructure), as well as a set of user-centric tools to empower the collaborating analysts. The HTAP IS will not compete with existing tools of its members. Rather it will embrace and leverage those resources through an open, collaborative federation philosophy. Its main contribution is to connect the TF participating analysts, their information resources and their tools.

A focal point of HTAP IS is the integrated dataset to be used for model evaluation and pollutant characterization. The front end of the data system is designed to produce this high quality integrated dataset from the available observational, emission and modeling resources. The back end of the data system is aimed at deriving knowledge from the data, i.e. a variety of comparisons: model-model, observation-observation, model-observation, etc.

The primary goal of the data system is to allow the flow and integration of observational, emission and model data. Model evaluation requires that both the observations and, if possible, the emissions are fixed. For this reason it is desirable to prepare an integrated observational database against which the various model implementations can be compared. The integrated dataset should be as inclusive as possible, but this goal needs to be tempered by the many limitations that preclude a broad, inclusive data integration. The proposed HTAP data system will be a hybrid combination of both distributed and fixed components.

HTAP Integrated Data System (HTAP IDS)

The HTAP IDS will (1) facilitate seamless access to distributed data, (2) allow easy connectivity of data processing components through standard interfaces, and (3) provide a set of basic tools for data processing, integration, and comparisons.

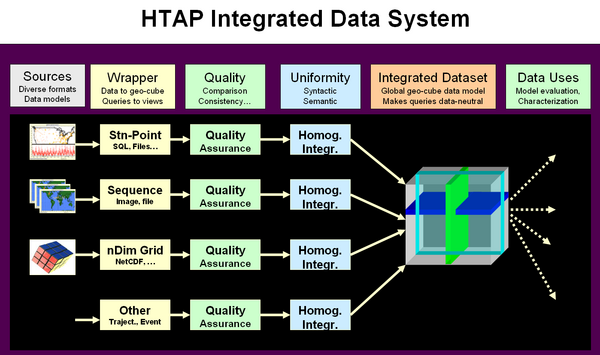

The architectural framework for processing and integrating the HTAP data is patterned after the IGACO framework. The specific expansion of that generic framework consists of specifying the multiple HTAP datasets and models that need to be integrated and reconciled.

Figure 1a. Architecture of the HTAP Integrated Data System.

Figure 1b. Reference Framework of IGACO.

Traditionally, data processing, integration, and model comparisons have been performed using dedicated software tools that were handcrafted for specific applications. The Earth observations and modeling of hemispheric transport are currently pursued by individual projects and programs in the US and Europe. These constitute autonomous systems with well defined purpose and functionality. The key role of the Task Force is to assess the contributions of the individual systems and to integrate those into a system of systems.

Both the data providers and the HTAP analyst-users will be distributed. However, they will be connected through an integrated HTAP database which should be a stable, virtually fixed database. The section below describes the main components of this distributed data system.

The multiple steps that are required to prepare the integrated dataset are shown on the left. The sequence of operations can be viewed as a value chain that transforms the raw input data into a highly organized integrated dataset.

The operations that prepare the integrated dataset can be broken into distinct services that sequentially operate on the data stream. Each service is defined by its functionality and by a firmly specified service interface. In principle, the standards-based interface allows linking of service chains using formal workflow software. The main benefit of such a Service Oriented Architecture (SOA) is the ability to build agile application programs that can respond to changes in the data input conditions as well as the output requirements.
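The idea of sequential services behind a fixed interface can be illustrated with a minimal Python sketch. It assumes that every service accepts and returns a list of records; the two services shown (`drop_negative`, `ppm_to_ppb`) and the record fields are hypothetical placeholders, not actual HTAP processing steps, and in a real SOA each step would be a remote web service rather than a local function.

```python
from typing import Any, Callable, Dict, List

Record = Dict[str, Any]
# The shared service interface: a list of records in, a list of records out.
Service = Callable[[List[Record]], List[Record]]


def chain(*services: Service) -> Service:
    """Compose services so that the output of one feeds the next."""
    def run(records: List[Record]) -> List[Record]:
        for service in services:
            records = service(records)
        return records
    return run


def drop_negative(records: List[Record]) -> List[Record]:
    """Hypothetical QA step: discard physically impossible negative values."""
    return [r for r in records if r["value"] >= 0]


def ppm_to_ppb(records: List[Record]) -> List[Record]:
    """Hypothetical homogenization step: convert mixing ratios from ppm to ppb."""
    return [{**r, "value": r["value"] * 1000.0, "units": "ppb"} for r in records]


# Illustrative input records (made-up values).
raw = [
    {"station": "A", "value": 0.04, "units": "ppm"},
    {"station": "B", "value": -1.0, "units": "ppm"},
]

pipeline = chain(drop_negative, ppm_to_ppb)
integrated = pipeline(raw)
```

Because every step shares the same interface, a step can be replaced, reordered or supplied by a different provider without changing the others, which is the agility the paragraph above refers to.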

The flexibility offered by chaining and orchestrating distributed, loosely coupled web services provides the architectural basis for building agile data systems in support of future demanding HTAP applications.

The service oriented software architecture is an implementation of the System of Systems approach, which is the design philosophy of GEOSS. Each service can be operated by autonomous providers and the "system" that implements the service is behind the service interface. Combining the independent services constitutes a System of Systems. In other words, following the SOA approach, not only the data providers but also the processing services can be distributed and executed by different participants. This flexible approach to distributed computing allows the distribution of labor and the easy creation of different processing configurations.

The part of the integrated data system to the right of the integrated dataset (Figure 1) aids the analysts in performing high level analyses such as data-data, ..., and in particular, the comparison of models and observations. The service oriented architecture of HTAP IS is well suited for the rapid implementation of model intercomparison techniques. At this time, neither the specific model evaluation protocols nor the supporting information system is well defined. It is anticipated, however, that the observational and modeling members of the HTAP TF will develop such protocols soon.

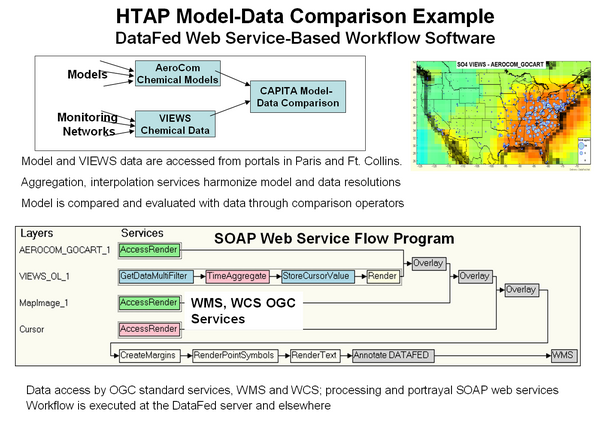

Figure 2. Example Data Processing Service Chain for Comparison of Observational and Model Values.
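Since the model evaluation protocols are not yet defined, the following Python sketch is only one plausible form of such a comparison chain: the model grid is sampled at the nearest grid cell to each station, and the paired values are summarized by mean bias and root-mean-square error. The function names, the nearest-cell sampling and the choice of statistics are assumptions made for illustration, and all numbers are made up.

```python
import math
from typing import List, Sequence, Tuple


def nearest_index(axis: Sequence[float], x: float) -> int:
    """Index of the grid coordinate closest to x."""
    return min(range(len(axis)), key=lambda i: abs(axis[i] - x))


def sample_model(grid: Sequence[Sequence[float]], lats: Sequence[float],
                 lons: Sequence[float], lat: float, lon: float) -> float:
    """Model value at the grid cell nearest to a station (grid[lat][lon])."""
    return grid[nearest_index(lats, lat)][nearest_index(lons, lon)]


def bias_and_rmse(pairs: List[Tuple[float, float]]) -> Tuple[float, float]:
    """Mean bias and RMSE of (observed, modeled) pairs; bias = model - obs."""
    diffs = [model - obs for obs, model in pairs]
    n = len(diffs)
    bias = sum(diffs) / n
    rmse = math.sqrt(sum(d * d for d in diffs) / n)
    return bias, rmse


# Illustrative 2 x 2 model grid and two stations as (lat, lon, observed value).
lats, lons = [30.0, 40.0], [-100.0, -90.0]
grid = [[10.0, 20.0], [30.0, 40.0]]
stations = [(31.0, -99.0, 12.0), (39.0, -91.0, 38.0)]

pairs = [(obs, sample_model(grid, lats, lons, lat, lon))
         for lat, lon, obs in stations]
bias, rmse = bias_and_rmse(pairs)
```

In a service chain, the sampling and the statistics would be separate services, so that a different collocation method or metric could be substituted once the TF agrees on a protocol.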

Interoperability Work within HTAP TF

The adoption of a set of interoperability standards is a necessary condition for building an agile data system from loosely coupled components for HTAP. During 2006/2007, members of HTAP TF have made considerable progress in evaluating and selecting suitable standards. They also participated in the extension of several international standards, most notably standard names and a standard data query language.

Methods and tools for model intercomparisons were the subject of a productive workshop at JRC Ispra in March 2006. European and American members of the HTAP TF presented their approaches to model and data intercomparisons as part of their respective projects ENSEMBLES, Eurodelta, ACCENT, AEROCOM, GEMS, and DataFed. Several recommendations were made to improve future use of intercomparison data. The most important recommendation was to adopt a common data format, netCDF with the CF conventions, and to develop a list of Standard Names.

The naming of individual chemical parameters will follow the CF convention used by the Climate and Forecast (CF) communities. The existing names for atmospheric chemicals in the CF convention were inadequate to accommodate all the parameters used in the HTAP modeling. In order to remedy this shortcoming, the list of standard names was extended by the HTAP community under the leadership of C. Textor. She also became a member of the CF convention board that is the custodian of the standard names. The standard names for HTAP models were developed using a collaborative wiki workspace. It should be noted, however, that at this time the CF naming convention has only been developed for the model parameters and not for the various observational parameters. (See Textor, need a better paragraph).

For modeling data, the use of netCDF-CF as a standard format is recommended. The use of a standard physical data format and the CF naming conventions allows, in principle, a semantically well-defined data transfer between data provider and consumer services. The netCDF-CF data format is most useful for the exchange of multidimensional gridded model data. It was also demonstrated that the netCDF format is well suited for the encoding and transfer of station monitoring data. Traditionally, satellite data were encoded and transferred using the HDF format.

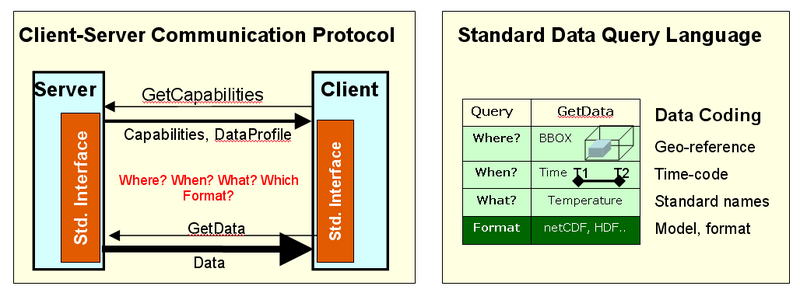

Figure 2. Interoperability protocols and standard query language

The third aspect of data interoperability is a standard data query language through which user services request specific data from the provider services. It is proposed that the HTAP information system adopt the Web Coverage Service (WCS) as the standard data query language. The WCS data access protocol is defined by the international Open Geospatial Consortium (OGC), which is also the key organization responsible for interoperability standards in GEOSS. Since the WCS protocol was originally developed for the GIS community, it was necessary to adapt it to the needs of "Fluid Earth Sciences". Members of the HTAP group have been actively participating in the adaptation and testing of the WCS interoperability standards.
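To make the notion of a standard data query concrete, the sketch below assembles a WCS GetCoverage request as a URL in Python. The parameter names follow the key-value-pair encoding of WCS 1.0.0 as commonly used; other WCS versions name some parameters differently. The endpoint `https://example.org/wcs` and the coverage name `ozone_surface` are hypothetical, and the adaptations of WCS discussed above may require additional or different parameters.

```python
from typing import Sequence
from urllib.parse import parse_qs, urlencode, urlparse


def wcs_getcoverage_url(endpoint: str, coverage: str, bbox: Sequence[float],
                        time: str, width: int, height: int,
                        fmt: str = "NetCDF") -> str:
    """Build a WCS 1.0.0-style GetCoverage URL (a sketch, not a full client)."""
    params = {
        "SERVICE": "WCS",
        "VERSION": "1.0.0",
        "REQUEST": "GetCoverage",
        "COVERAGE": coverage,                    # which dataset/parameter
        "CRS": "EPSG:4326",                      # lat/lon coordinates
        "BBOX": ",".join(str(v) for v in bbox),  # minx,miny,maxx,maxy
        "TIME": time,                            # ISO 8601 time stamp
        "WIDTH": width,                          # output grid size
        "HEIGHT": height,
        "FORMAT": fmt,                           # requested return format
    }
    # Keep commas and colons readable instead of percent-encoding them.
    return endpoint + "?" + urlencode(params, safe=",:")


# Hypothetical endpoint and coverage name, for illustration only.
url = wcs_getcoverage_url("https://example.org/wcs", "ozone_surface",
                          bbox=(-130, 20, -60, 55),
                          time="2006-07-01T00:00:00Z",
                          width=140, height=70)

# Round trip: a provider service would parse the same query string.
query = parse_qs(urlparse(url).query)
```

The same request template works against any provider that honors the interface, which is what allows a consumer service to switch data sources by changing only the endpoint and coverage name.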

Wrapping

The first stage of IDS is wrapping the existing data with standard interfaces for data access. Standards-based data access can be accomplished by ‘wrapping’ the heterogeneous distributed data into standardized web services, callable through well-defined Internet protocols (SOAP, REST). Homogenization can also be accomplished by physically transferring and uniformly formatting data in a central data warehouse. The main benefit of virtually or physically homogeneous data access is that further processing can be performed through reusable processing components that are loosely coupled. The result of this ‘wrapping’ process is an array of homogeneous, virtual datasets that can be accessed through a standard query language, with the returned data packaged in a standard format, directly usable by the consuming services. This is the approach taken in the federated data system DataFed. Given a standard interface to all datasets, the Quality Assurance service can be performed by another provider that is seamlessly connected to the data access service. Similarly, the service that prepares a dataset for integration can be provided by another service in the data flow network. These value adding steps have to be performed for each candidate dataset that is to be used in the integrated dataset.
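The wrapping step can be pictured as an adapter layer. The Python sketch below assumes two made-up native formats (a CSV text and a list of dictionaries with different field names) and wraps both behind one hypothetical `query` interface that returns uniform `Observation` records. It is not how DataFed is implemented; it only illustrates why consumers no longer need to know the native formats.

```python
import csv
import io
from dataclasses import dataclass
from typing import Any, Dict, List, Protocol


@dataclass(frozen=True)
class Observation:
    """Uniform record returned by every wrapped dataset."""
    station: str
    time: str        # ISO 8601
    parameter: str
    value: float


class DataAccess(Protocol):
    """The common interface that each wrapper exposes."""
    def query(self, parameter: str) -> List[Observation]: ...


class CsvWrapper:
    """Wraps a CSV source with columns station,time,parameter,value."""
    def __init__(self, text: str) -> None:
        self._rows = list(csv.DictReader(io.StringIO(text)))

    def query(self, parameter: str) -> List[Observation]:
        return [Observation(r["station"], r["time"], r["parameter"],
                            float(r["value"]))
                for r in self._rows if r["parameter"] == parameter]


class DictWrapper:
    """Wraps a source whose records use the keys site, t, species, conc."""
    def __init__(self, records: List[Dict[str, Any]]) -> None:
        self._records = records

    def query(self, parameter: str) -> List[Observation]:
        return [Observation(str(r["site"]), str(r["t"]), str(r["species"]),
                            float(r["conc"]))
                for r in self._records if r["species"] == parameter]


def collect(sources: List[DataAccess], parameter: str) -> List[Observation]:
    """A consumer that sees only the common interface."""
    result: List[Observation] = []
    for source in sources:
        result.extend(source.query(parameter))
    return result


# Illustrative data in the two native formats (made-up values).
csv_text = ("station,time,parameter,value\n"
            "A,2006-07-01T00:00Z,ozone,41.5\n"
            "A,2006-07-01T00:00Z,pm25,12.0\n")
dict_records = [{"site": "B", "t": "2006-07-01T00:00Z",
                 "species": "ozone", "conc": 38.0}]

ozone = collect([CsvWrapper(csv_text), DictWrapper(dict_records)], "ozone")
```

Downstream services such as quality assurance or aggregation can then be written once against `Observation` and reused for every wrapped dataset.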

Quality Assurance

(This is just a placeholder. Need help from WMO. Here we need to explain the quality assurance steps that are needed to prepare the HTAP Integrated Dataset. For true quality assurance and for data homogenization, the data flow channels for individual datasets need to be evaluated separately, as well as in the context of other data. QA should be a ubiquitous process occurring throughout the data flow and processing. This means that the IS needs to provide channels for QA feedback loops to the data providers.)

Homogenization and Integration

The HTAP Integrated Dataset will be used to compare models to observations. The dataset will be created from the proper combination of surface, upper air and satellite observations. Monitoring data for atmospheric constituents are now available from a variety of sources, not all of which are suitable for the integrated HTAP dataset. Several criteria for data selection are given below.

- The suitability criteria may include the measured parameters, the spatial extent and coverage density, as well as the time range and sampling frequency with major emphasis on data quality.

- Initially, focus should be on PM and ozone, including their gaseous precursors. Subsequently, data collection should also include atmospheric mercury and persistent organic pollutants (POP).

- In order to cover hemispheric transport, the dataset should accept and utilize data from outside the geographical scope of EMEP.

- Special emphasis should be placed on the collection of suitable vertical profiles from aircraft measurements as well as from surface and space-borne lidars.

- The data gathering should begin with the existing databases in Europe (RETRO, TRADEOFF, QUANTIFY, CRATE, AEROCOM) and virtual federated systems in the US (DataFed, Giovanni, NEISGEI, and others).

- TF data integration should also contribute to and benefit from other ongoing data integration efforts, most notably the ACCENT project in Europe and similar efforts in the US.

However, before inclusion into the HTAP Integrated Dataset (HID), each dataset will need to be scrutinized to make it suitable for model comparison. The scrutiny may include filtering, aggregation and possibly fusion operations.

A good example is the 400-station AIRNOW network reporting hourly ozone and PM2.5 concentrations over the US. The network includes urban sites that are strongly influenced by local sources. These need to be removed from or flagged in the integrated dataset since they are not appropriate for comparison with coarse resolution global models. Developing the specific criteria and procedures for the HTAP integrated dataset will require the attention of an HTAP subgroup.
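A flagging step of this kind could look like the following Python sketch. The `setting` field and its values are hypothetical metadata, not the actual AIRNOW schema, and the rule "urban means not regionally representative" is only a stand-in for the criteria that the HTAP subgroup would have to define. Flagging is used rather than removal so that the decision stays reversible.

```python
from typing import Any, Dict, FrozenSet, Iterable, List

Site = Dict[str, Any]


def flag_sites(sites: Iterable[Site],
               local_settings: FrozenSet[str] = frozenset({"urban"})) -> List[Site]:
    """Return copies of the site records with a representativeness flag.

    Sites whose (hypothetical) 'setting' is in local_settings are marked as
    unsuitable for comparison with coarse resolution global models.
    """
    return [{**site,
             "regionally_representative": site["setting"] not in local_settings}
            for site in sites]


# Illustrative site metadata (made-up identifiers).
sites = [
    {"id": "S1", "setting": "urban"},
    {"id": "S2", "setting": "rural"},
    {"id": "S3", "setting": "suburban"},
]

flagged = flag_sites(sites)
usable = [s["id"] for s in flagged if s["regionally_representative"]]
```

Passing a different `local_settings` set, for example one that also contains "suburban", changes the selection without touching the underlying data.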

Given the limited scope and resources of HTAP, it will be necessary to select a suitable subset of the available observational data for the preparation of the 2009 assessment. The evaluation of suitable observational datasets for model validation and fusion will require close interaction between the modeling and observational communities. A wiki workspace for open collaborative evaluation of different datasets is desirable.

Interoperability Challenges

See the wiki page Air_quality_interoperability_challenges.

Dimensions of AQ Infosystem Description

- Interoperability Stack

- Spatial

- Value Chain

- Data Access, Processing, Delivery

Data System Implementation

HTAP Information Network

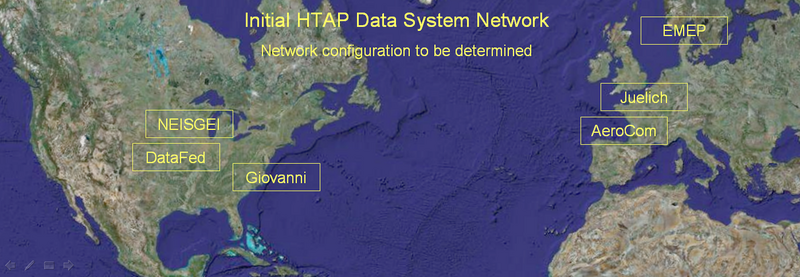

The architecture described above needs to be implemented as soon as possible so that the HTAP integrated dataset can be created and the model-data comparisons can commence. An important (though incomplete) initial set of nodes for the HTAP information network already exists, as shown in Figure 3. Each of these nodes is, in effect, a portal to an array of datasets that it exposes through its own interface. Thus, connecting these existing data portals would provide an effective initial approach to incorporating a large fraction of the available observational and model data into the HTAP network. The US nodes DataFed, NEISGEI and Giovanni are already connected through standard (or pseudo-standard) data access services. In other words, data mediated through one of the nodes can be accessed and utilized in a companion node. Similar connectivity is being pursued to the European data portals Juelich, AeroCom, EMEP and others.

Figure 3. Initial HTAP Information Network Configuration

(Here we could say a few words about each of the main provider nodes) Federated Data System DataFed; NASA Data System Giovanni; Emission Data System NEISGEI; Juelich Data System; AeroCom; EMEP.

HTAP Datasets - need list of others

See 20 selected datasets in the federated data system DataFed, grouped here by type:

- Satellite: TOMS_AI_G, SEAW_US, SCIAMACHYm, OnEarth JPL, OMId, MOPITT Day, MODISd G, MODIS Global Fire, MISRm G, GOMEm G, CALIPSO

- Surface: SURF_MET, AIRNOW, VIEWS OL, AERONETd

- Emission: RETRO ANTHRO, EDGAR

- Model: NAAPS GLOBAL, GOCART G OL, AEROCOM LOA

Organizational Aspects

Relationship to GEO and GEOSS

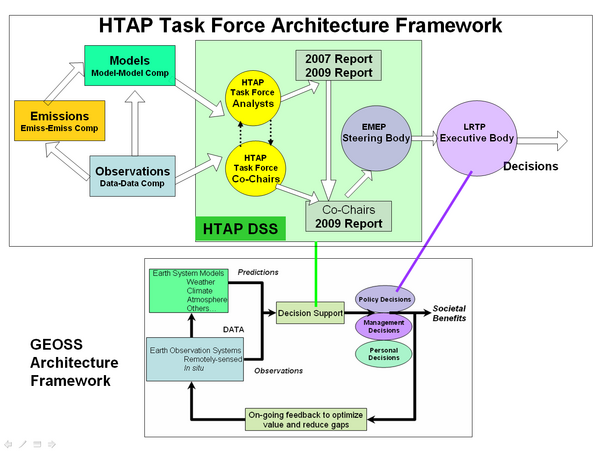

There is an outstanding opportunity to develop a mutually beneficial and supportive relationship between the activities of the HTAP Task Force and those of the Group on Earth Observations (GEO). The national and organizational members of GEO have adopted a general architectural framework for turning Earth observations into societal benefits. The three main components of this architecture are models and observations, which feed into decision support systems for a variety of societal decision-making processes.

Figure 4. Architecture Framework for HTAP and GEOSS. Rectangular boxes represent machine processors while the circular entities are human "processors" or decision-makers.

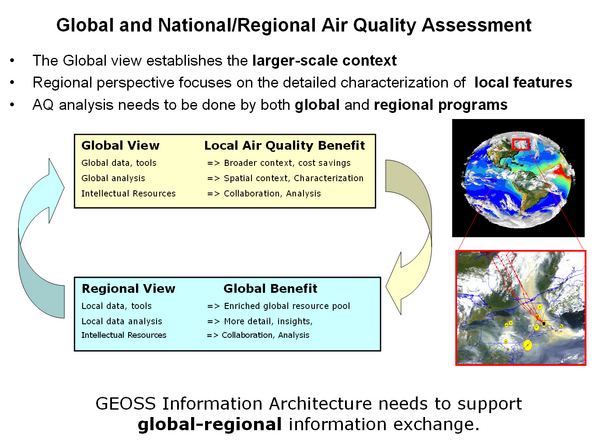

Figure 5. Global-Regional Interaction of Information Systems

This general GEO framework is well suited as an architectural guide to the HTAP program. However, it is void of specific guidelines and details that are needed for application areas such as HTAP. The HTAP program provides an opportunity to apply and extend the GEO framework. In the case of HTAP, the major activities are shown in the architectural diagram of Figure 4. The modeling is conducted through global scale chemical transport models. The observations arise from satellite, ground-based and airborne observations of chemical constituents and their hemispheric transport. In the case of HTAP, a third input data stream is needed for emissions, which is neither an observation nor a model.

The HTAP decision support system consists primarily of humans. They need to be supported by an IT infrastructure and a set of enabling tools.

The first cluster is composed of the analysts who are the members of the HTAP task force. Their products are the 2007 and 2009 assessment reports submitted to the HTAP co-chairs and to EMEP. A second, shorter report is prepared and submitted to the EMEP executive body, which is the decision making body of the LRTAP convention. (Terry, Andre this description of the HTAP DSS needs your help).

Developing a higher resolution design chart for the HTAP DSS is an important task because it can guide the design and implementation of the supporting information system. Furthermore, the detailed DSS architectural map may also serve as a communications channel for the interacting system of systems components. The insights gained in developing the HTAP DSS may also help the DSS design in similar applications.

The implementation of the GEO framework utilizes the concept of the Global Earth Observation System of Systems (GEOSS). Traditionally, Earth observations were performed by well defined systems such as specific satellites and monitoring networks which were designed and operated using systems engineering principles. However, GEO recognized that the understanding of the Earth system requires the utilization and integration of the individual, autonomous systems.

While system science is a well developed engineering and scientific discipline, the understanding and development of System of Systems is in its infancy. The work of HTAP TF may provide an empirical testbed for the study of this new and promising integration architecture. Since the HTAP TF activity encompasses virtually all aspects of GEOSS system of systems integration, it is an attractive "near-term opportunity" to demonstrate the GEOSS concept. An initial low-key demonstration could be accomplished as part of the HTAP TF 2009 assessment. Such a GEOSS demonstration is particularly timely since the data resources, data mediators and the connectivity infrastructure are nearly ready to be connected into a system of systems. Also, there are strong societal drivers to extend and update the LRTAP convention to incorporate the air pollution impacts of one continent on another.

An HTAP-GEOSS demonstration would also show the System of Systems approach implemented not through stovepipes but through a dynamic network.

This sequence of activities constitutes an end-to-end approach that turns observations and models into actionable knowledge for societal decision making. One could say that this is an orthogonal approach to the more deliberate, step-by-step development of GEOSS.

Relationship to IGACO

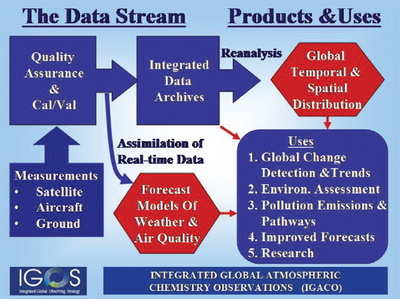

The HTAP program can also have a mutually beneficial interaction with the Integrated Global Atmospheric Chemistry Observations (IGACO) program. IGACO is part of the Integrated Global Observing Strategy (IGOS). IGACO proposes a specific framework for combining observational data and models. It also specifies the higher level information products that can be used for creating social benefit.

In the IGACO framework, the data products from satellite, surface, and aircraft observations are collected for inclusion into an integrated data archive. A key component in the IGACO data flow is the mandatory quality assurance and quality control (QA/QC) that precedes the archiving. This is particularly important for HTAP where multi-sensor data from many different providers are to be combined into an integrated database. In the IGACO framework, a key output from the data system is a highly integrated spatio-temporal dataset which can be used in a multitude of applications that produce social benefit. The goal of creating such an integrated dataset is shared by the IGACO and the HTAP programs. (?? Len, this section could benefit from your input)

HTAP Relationship to other Programs

The Hemispheric Transport of Air Pollutants Program draws most of its resources from a variety of other programs. Conversely, the HTAP activity and its outcome can have significant benefits to other related programs. In the US, the Web Services Chaining experiments for connecting the core HTAP network will be conducted through the Earth Science Information Partners (ESIP) partnership. The interoperability experiment for the standard data query language, OGC Web Coverage Service (WCS), will be conducted in conjunction with the GALEON II interoperability experiments. It is anticipated that the core HTAP network will be one of the OGC Networks demonstrating interoperability. (need more examples of HTAP links to other activities - how about European projects??)

Links

Chapter 6: Initial Answers to Policy-Relevant Science Questions

- How does the intercontinental or hemispheric transport of air pollutants affect air pollution concentration or deposition levels in the Northern Hemisphere for ozone and its precursors; fine particles and their precursors; and compounds that contribute to acidification and eutrophication?

- More specifically, for each region in the Northern Hemisphere, can we define source-receptor relationships and the influence of intercontinental transport on the exceedance of established standards or policy objectives for the pollutants of interest?

- How confident are we of our ability to predict these source-receptor relationships? What is our best estimate of the quantitative uncertainty in our estimates of current source contributions or our predictions of the impacts of future emissions changes?

- For each country in the Northern Hemisphere, how will changes in emissions in each of the other countries in the Northern Hemisphere change pollutant concentrations or deposition levels and the exceedance of established standards or policy objectives for the pollutants of interest?

- How will these source-receptor relationships change due to expected changes in emissions over the next 20 to 50 years?

- How will these source-receptor relationships be affected by changes in climate or climate variability?

- What efforts need to be undertaken to develop an integrated system of observational data sources and predictive models that addresses the questions above and leverages the best attributes of all components?

- Improving the modeling of transport processes using existing and new field campaign data.

- Improving emissions inventories using local information and inverse modeling.

- Identifying and explaining long-term trends by filling gaps in the observing system and improving model descriptions.

- Developing a robust understanding of current source-receptor relationships using multiple modeling techniques and analyses of observations.

- Estimating future source-receptor relationships under changing emissions and climate.

- Improving organizational relationships and information management infrastructures to facilitate necessary research and analysis.

7.4 The Continuing Role of the TF HTAP

There are various methods of source apportionment, and these need to be compared: the receptor-oriented methods with one another, and the receptor-oriented methods reconciled with the forward-oriented methods.

- Exceptional Event analysis/management: Identify the (1) info needs, (2) potential providers and (3) info paths/processes/technologies between

- Hemispheric Transport: Identify the (1) info needs, (2) potential providers and (3) info paths/processes/technologies between

- Find a union of the two (1) info needs, (2) potential providers and (3) info paths/processes/technologies between

- Seek a core network, starting with the Union

Rhusar 01:20, 24 September 2007 (EDT)