Example SQL for Stations

Back to WCS Access to netCDF Files

A Real life example how to serve station timeseries point data through WCS data access protocol.

AIRNOW

Daily PM 2.5, PM 10 and Ozone data can be downloaded from date encoded folders. The data and location tables are in text files.

Folder for 2011-07-05: http://www.epa.gov/airnow/2011/20110705/

PM 10 observations: http://www.epa.gov/airnow/2011/20110705/pm10_070511.obs

Active sites: http://www.epa.gov/airnow/2011/20110705/active-sites.dat

Below is the description of the public database interface.

The Design of the SQL Database

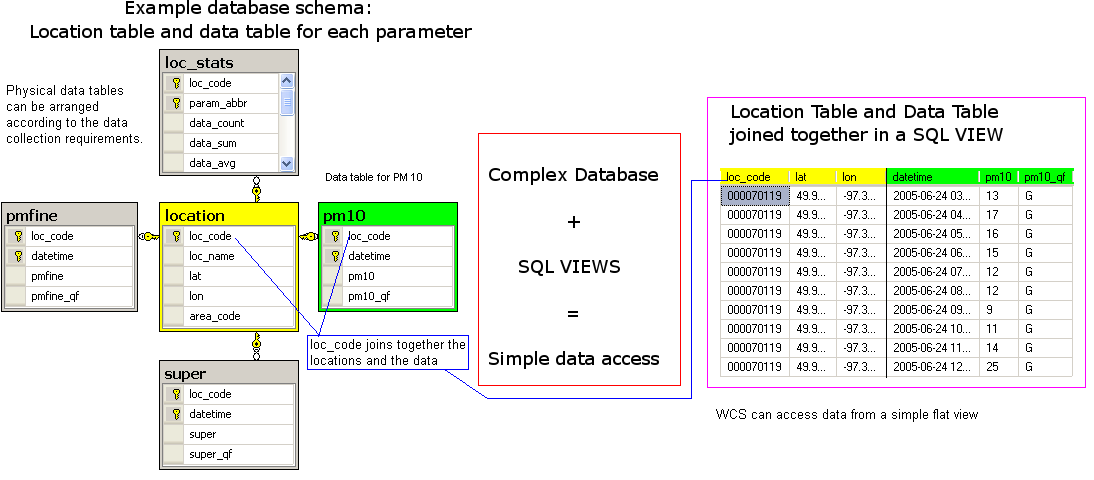

The most flexible method of storing station time series point data is an SQL database. Typically, the collector of the data designs a schema, that captures all the measured data, metadata of the measurements, station instrument information etc. This typically requires a database with lots of tables and relationships between them. There is no one-size-fits-all design around, because requirements for data collecting systems are different.

Fortunately, relational databases were invented just to solve this standardization problem:

The complex database on the left is the real data storage, which is not an easy thing to understand.

On the right, is the requirement for the WCS: a flat, simple table listing place, time and data value.

The tool that brings these two together is an SQL View. It allows the administrator to create a view, a virtual table, that can be queried with a simple filter, taking the complexity off from the WCS point access system.

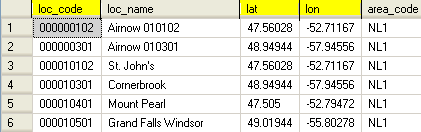

Location table

The Location table needs at least 3 columns: loc_code, lat and lon

The names are fixed. This goes with fairly popular design philosophy, convention over configuration. Rather than allowing any name, longitude or längengrad, and then mark it to be the longitude column somewhere else, the field name is fixed to lon. This is done at the SQL View level, renaming the fields.

The fixed field names are loc_code, loc_name (optional), lat, lon, elev (optional). It is important to use these names, since client software, like datafed browser, expects them.

Any other fields can be added. The system will just copy the additonal data to output.

So it there is a raw location table:

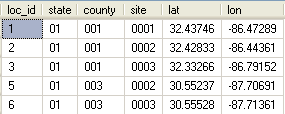

The location table has no loc_code. The primary key contains three fields: state, county and site codes. The loc_id is a surrogate key, automatically generated number and carries no information by itself.

In this case, the location view can make the loc_code in two ways. It can either concatenate the 3 numeric codes into a 9-character loc_code field, or use loc_id as a code. If loc_id is used as a loc_code, it has the drawback that it must be maintained forever, where as state, county, and site ID's are given from outside.

Parameter Views

Similarly, parameters can be published as views. The data view picture above shows a table with columns loc_code, lat, lon, datetime and pm10. It could also contain data quality metadata for pm10.

Again, field names are standardized. datetime is the standard name for observation time column. The parameter column names can be anything, as well as the metadata flag columns.

It is to be noted, that the parameter view is a result of a join, and therefore properly normalized. There is no denormalized table, that repeats the loc_code, lat and lon columns over and over again.

Fact - Data Table

Data Views in SQL

the importance of views, flexibility of SQL, snapshot views

creating data views

calculating statistics to filter the location table

Configuring the WCS Service and WFS Service for point data

WFS for locations

WCS for data

using python dictionaries ??