Applications of Semantic Web for Earth Science

Introduction

Semantic web technology is becoming ever more important in Earth Science applications, where it plays a number of diverse roles. It is likely to become an even more important enabler as ambitious data science efforts, such as the EarthCube initiative and ESIP's own Earth Science Collaboratory, move forward. These enterprises seek to make it easier to bring together disparate datasets, disciplines, and even communities, in an effort to leverage our burgeoning data in the service of understanding the Earth as a system. As these resources and the communities leveraging them diversify, the need for semantic technology to help users navigate the sea of resources becomes more apparent. Indeed, this role in discovery is acknowledged in the key capabilities determined through the first EarthCube Charrette.

However, we should not neglect the important role semantic technology can and does play in other aspects of data for Earth Sciences. For instance, semantic technology plays a key role in several other areas noted in the EarthCube Charrette capabilities:

- Automated Quality Assurance and Quality Control

- Provenance capture and interpretation

- Workflow construction

- Data fusion

Many such applications are underpinned by semantic technology, with the result that its value is not always readily apparent. In this short white paper, we discuss several ongoing or completed projects and applications that use the semantic web as an underpinning, in order to raise awareness of this critical technology.

Data Quality Screening Service

by Christopher Lynnes, NASA/GSFC

The Data Quality Screening Service (DQSS) is designed to help automate the filtering of remote sensing data on behalf of science users. Today this process involves extensive research through quality documents, followed by laborious coding. The DQSS instead acts as a Web Service that provides users with data pre-filtered to their particular criteria, while guiding them with the filtering recommendations of the cognizant data experts. Data that do not pass the criteria are replaced with fill values, resulting in a file that has the same structure and is usable in the same ways as the original (Fig. 1).
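The fill-value replacement described above can be illustrated with a minimal Python sketch. This is not the DQSS implementation: the function name `screen`, the fill value of -9999.0, and the convention that lower quality flags are better are all assumptions made for illustration.

```python
from typing import List, Sequence

# Hypothetical fill value; real data products define their own.
FILL_VALUE = -9999.0


def screen(values: Sequence[float], quality: Sequence[int],
           max_quality: int, fill: float = FILL_VALUE) -> List[float]:
    """Return a copy of `values` in which every point whose quality flag
    exceeds `max_quality` is replaced by `fill`.

    The output has the same length as the input, mirroring the idea that
    the screened file keeps the structure of the original.
    """
    if len(values) != len(quality):
        raise ValueError("values and quality must have the same length")
    return [v if q <= max_quality else fill for v, q in zip(values, quality)]


# Assumed flag convention for this sketch: 0 = best, higher = worse.
data = [281.5, 279.0, 300.2, 275.4]
flags = [0, 2, 1, 3]
screened = screen(data, flags, max_quality=1)
```

Because rejected points are filled rather than dropped, downstream code that reads the original array layout can read the screened array without changes.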

At the core of DQSS is an ontology that describes data fields, the quality fields for applying quality control and the interpretations of quality criteria. This allows a generalized code base that can nonetheless handle both a variety of datasets and a variety of quality control schemes. Indeed, a data collection can be added to the DQSS simply by registering instances in the ontology if it follows a quality scheme that is already modeled in the ontology. This will allow DQSS to scale to more data products with minimal cost.

For more on DQSS, see http://disc.sci.gsfc.nasa.gov/services/data-quality-screening-service.

Earth and Space Science Informatics Linked Open Data

by Tom Narock (University of Maryland, Baltimore County) and Eric Rozell (Rensselaer Polytechnic Institute)

Linked Open Data (LOD) is a data publishing methodology built on four simple principles:

- Use unique identifiers (URIs) as names

- Make those identifiers dereferenceable via HTTP

- Dereferencing an identifier should return RDF (the data representation language of the semantic web)

- Include links to other data sets

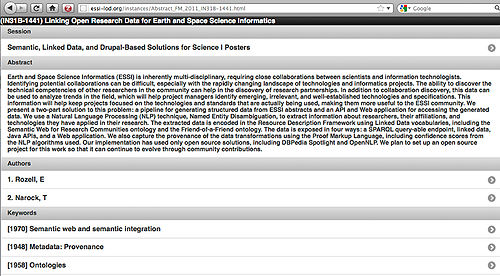

Following these principles results in structured data with explicit semantics. As a result, data from different sources can be connected and queried. Earth and Space Science Informatics Linked Open Data (ESSI-LOD) is a project aimed at creating Linked Open Data within the Earth and Space Sciences. Initial data sets in this project include historical conference data from the American Geophysical Union (AGU) as well as membership and meeting data from the Federation of Earth Science Information Partners (ESIP). Many members of the Earth science community participate in both AGU and ESIP, and there are many implicit relationships between the groups. However, answering questions across the two organizations has been difficult because the data are stored in proprietary and non-interoperable formats.

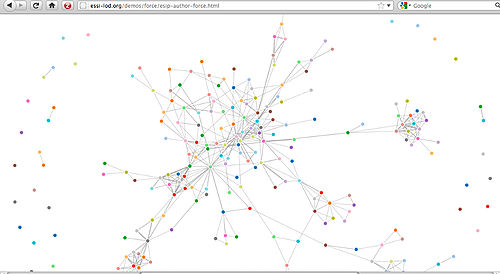

ESSI-LOD created Linked Open Data to alleviate these limitations. We converted 7 years of AGU conference data (2005-2010) and 5 years of ESIP membership/meeting data (2007-2011) into RDF and exposed it as Linked Open Data. Links between the data sets were made at the person level, where we matched AGU authors to ESIP members. Exposing LOD has opened the data to cross-organizational question answering, collaboration discovery, analysis of trends, and insight into the underlying social network. Moreover, LOD is scalable and makes it easy for other organizations to link their own data to ESSI-LOD, thus further extending data fusion and querying capabilities.
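As a rough illustration of person-level linking, the Python sketch below emits N-Triples that connect two identifiers for the same person. It is not the ESSI-LOD method: the source does not say how identities were matched or which predicate was used, so the naive name comparison, the choice of `owl:sameAs`, the `example.org` URIs, and the names are all assumptions.

```python
from typing import Dict, List

# Standard OWL predicate stating that two URIs denote the same individual.
OWL_SAME_AS = "http://www.w3.org/2002/07/owl#sameAs"


def normalize(name: str) -> str:
    """Lower-case a name and collapse whitespace (a deliberately naive match key)."""
    return " ".join(name.lower().split())


def same_as_triples(agu: Dict[str, str], esip: Dict[str, str]) -> List[str]:
    """Given URI -> name maps for two data sets, return N-Triples lines
    linking URIs whose normalized names are identical."""
    esip_by_name = {normalize(name): uri for uri, name in esip.items()}
    triples = []
    for uri, name in sorted(agu.items()):
        match = esip_by_name.get(normalize(name))
        if match is not None:
            triples.append(f"<{uri}> <{OWL_SAME_AS}> <{match}> .")
    return triples


# Hypothetical identifiers and fictional people, for illustration only.
agu_authors = {
    "http://example.org/agu/person/1": "Ada Example",
    "http://example.org/agu/person/2": "Ben Sample",
}
esip_members = {
    "http://example.org/esip/member/9": "ada  example",
}
links = same_as_triples(agu_authors, esip_members)
```

Matching on names alone would conflate different people who share a name, so a real linking step would need additional evidence such as affiliation or e-mail address.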

The benefits of ESSI-LOD have been enabled by simple semantic web principles and reusable vocabularies. These semantic web technologies have facilitated rapid browsing (Figure 1), querying, visualizing (Figure 2), and extensibility not easily obtainable with the original data sets.

For more information on ESSI-LOD visit: http://essi-lod.org/.

OPeNDAP's Hyrax Data Server Provides RDF

by James Gallagher and Nathan Potter, OPeNDAP, Inc.

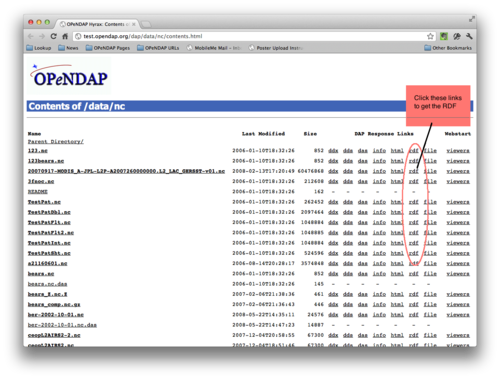

OPeNDAP's Hyrax Data Server produces RDF for each of the datasets it serves. Because the RDF response document is produced on-the-fly from the DAP2 XML representation of the dataset, this feature is available for any dataset served by Hyrax without any configuration by the data provider.

The RDF document may be accessed using the HTML 'directory' pages produced by the server (see Figure 1), or by appending the suffix .rdf to the pathname component of the dataset's URL. For example, the service endpoint URL for the COADS Climatology dataset is http://test.opendap.org/dap/data/nc/coads_climatology.nc, and appending .rdf gives the RDF document describing that dataset: http://test.opendap.org/dap/data/nc/coads_climatology.nc.rdf. In fact, you can get lots of information from the service endpoint URL by appending different suffixes such as .ddx or .html (Try it, it's fun!).
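The suffix convention can be sketched in a few lines of Python. The helper name `with_suffix` is hypothetical; the sketch only builds the URLs and does not contact the server. It appends the suffix to the path component, so any query string on the URL is left in place after it.

```python
from urllib.parse import urlsplit, urlunsplit


def with_suffix(dataset_url: str, suffix: str) -> str:
    """Append `suffix` (for example ".rdf") to the pathname component of a
    dataset URL, leaving scheme, host, query, and fragment untouched."""
    parts = urlsplit(dataset_url)
    return urlunsplit(parts._replace(path=parts.path + suffix))


# Service endpoint URL quoted in the text above.
BASE = "http://test.opendap.org/dap/data/nc/coads_climatology.nc"

rdf_url = with_suffix(BASE, ".rdf")    # RDF description of the dataset
ddx_url = with_suffix(BASE, ".ddx")    # DDX response
html_url = with_suffix(BASE, ".html")  # HTML page for the dataset
```

The resulting URLs can be opened in a browser or fetched with any HTTP client.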

This RDF output is used in our experimental WCS servlet and was developed as a general mechanism to perform dataset attribute translation based on ontologies. The RDF uses the default namespace http://xml.opendap.org/ns/DAP/3.2#; the schema for DAP can be found at http://xml.opendap.org/dap/dap3.2.xsd. An ontology for DAP2 can be found at: http://xml.opendap.org/ontologies/opendap-dap-3.2.owl.

Sea Ice Ontology Browsing at NSIDC

The Sea Ice Interoperability Initiative is an NSF-funded effort whose goal is to enhance the interoperability of sea ice data and establish a network of practitioners working to enhance the interoperability of all Arctic data. As a part of that project, NSIDC is developing detailed, yet broad, sea ice ontologies linked to relevant marine, polar, atmospheric, and global ontologies and semantic services. Our overall goal is to improve the interoperability, usefulness, and understanding of Arctic sea ice data using Semantic Web approaches and technologies.

We've been working with two use cases: one involves assessing sea ice conditions in the Arctic from a transportation safety viewpoint, and the other aims to improve the characterization of albedo for models. We also hope to explore how to incorporate the knowledge and perspectives of Arctic residents in later stages of the project.

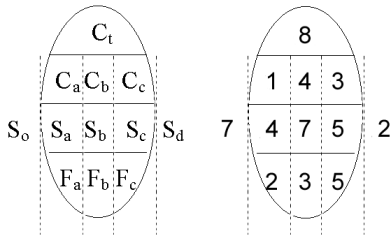

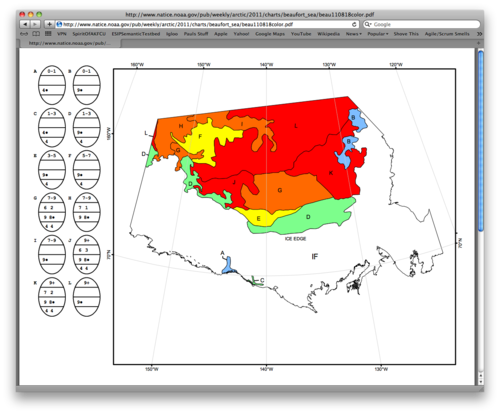

Over the first year of the project we've concentrated on the transportation use case. In particular, we have focused on the "egg codes" (see Fig. 1) that national ice services around the world use on ice charts to communicate the state of the ice, and on the Sigrid 3 data format that is used to transfer this knowledge digitally between services and end users. Towards that end, we've developed a number of ontologies that model some aspects of the sea ice terminology of the World Meteorological Organization (WMO), as well as "egg code" and "Sigrid" ontologies that allow NSIDC's Sigrid 3 formatted data to be transformed into linked data.

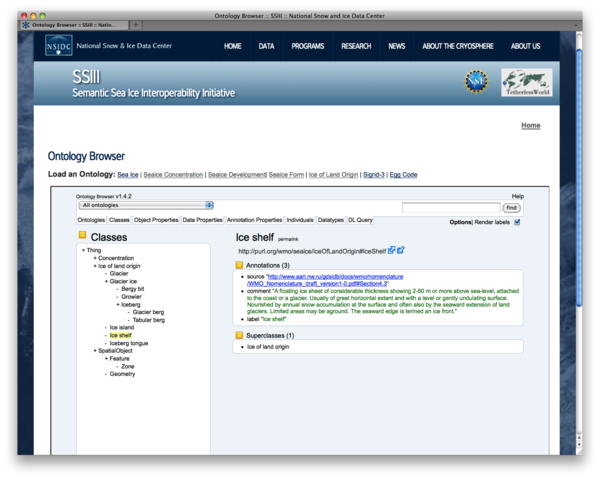

One of the main issues with the semantic web is the need for developers to capture and model information from experts within a field, and then to display it in a manner that allows those or other experts to review and comment on the resulting semantic models without having to learn XML, OWL, or any other semantic technology. This is particularly true in our case, where the expertise is literally distributed worldwide. To facilitate this review, NSIDC installed and tailored a copy of the Manchester Ontology Browser.