On Decision-Support System Architectures

Decision Making and Decision Support Systems

- AQ-relevant decisions are made by people, technical analysts,

- AQ decision makers are informed analysts, managers, lawyers, and other people.

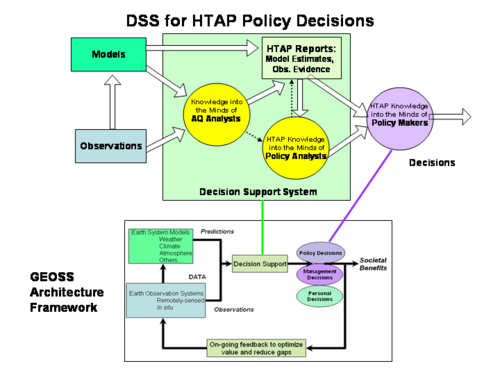

DSS for Hemispheric Transport of Air Pollutants

Air quality managers need quantitative information to justify their decisions and actions. Such information is provided by the decision support system. A typical air quality decision support system consists of several active participants. Models and observations are interpreted by experienced Technical Analysts, who summarize their findings in 'just in time' reports.

Often these reports are also evaluated and augmented by Regulatory Analysts who then inform the decision-making managers. With actionable knowledge in hand, decision makers act in response to the pollution situation.

While the arrows indicate unidirectional flow of information, each interaction generally involves considerable iteration. For example, analysts explore and choose from numerous candidate datasets. Also, most reports are finalized only after considerable feedback.

Note that the key users of formal information systems are the technical analysts. Hence, the system needs to be tailored primarily to the analysts' needs.

- The decision support system for hemispheric transport assessment performs both model-to-model and model-to-observation comparisons.

- The reports of analyses are submitted to policy analysts in the hemispheric transport Task Force.

- The policy makers decide whether and in what way hemispheric pollutant transport should be included in International Long-Range Transport Protocols.
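The model-to-model and model-to-observation comparisons mentioned in the list above can be illustrated with two common summary statistics, mean bias and root-mean-square error. This is a minimal sketch, not the Task Force's actual method: the function names, the choice of statistics, and all concentration values are assumptions made for illustration.

```python
import math

def bias(predicted, reference):
    """Mean difference (predicted - reference) over paired values."""
    diffs = [p - r for p, r in zip(predicted, reference)]
    return sum(diffs) / len(diffs)

def rmse(predicted, reference):
    """Root-mean-square error over paired values."""
    squares = [(p - r) ** 2 for p, r in zip(predicted, reference)]
    return math.sqrt(sum(squares) / len(squares))

# Hypothetical concentrations at four co-located sites and times.
model_a = [10.0, 12.0, 14.0, 16.0]
model_b = [11.0, 12.0, 15.0, 18.0]
observed = [9.0, 13.0, 14.0, 17.0]

# The same statistics serve both kinds of comparison.
model_to_model = {"bias": bias(model_a, model_b), "rmse": rmse(model_a, model_b)}
model_to_obs = {"bias": bias(model_a, observed), "rmse": rmse(model_a, observed)}
```

Because both comparisons reduce to paired series, the only difference is which series plays the role of the reference.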

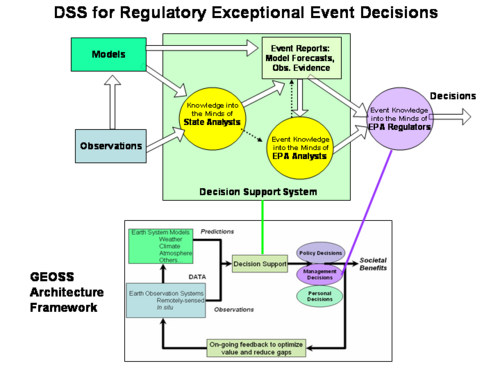

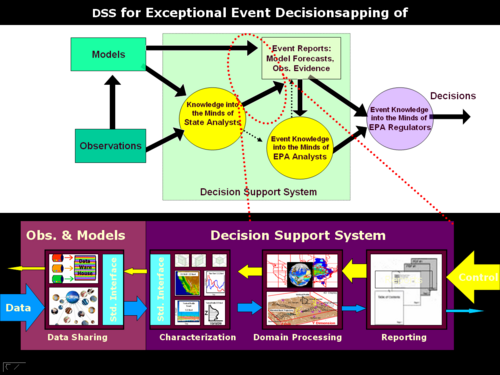

DSS for Exceptional Event Rule Implementation

- In the case of natural exceptional pollution events, such as forest fire smoke, the air quality analysts are typically affiliated with the impacted states.

- They submit their reports to the federal EPA as part of their request for exceptional event waivers.

- EPA analysts review the requests and make their recommendations to the EPA regulatory decision makers.

- In this case, the DSS needs to support both the state and the federal analysts.

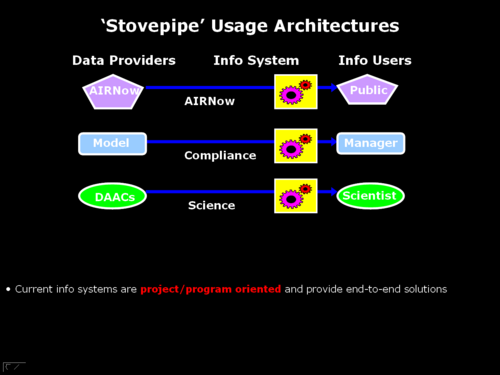

Traditional air quality information systems provide end-to-end solutions through dedicated ‘Stovepipe’ processing streams.

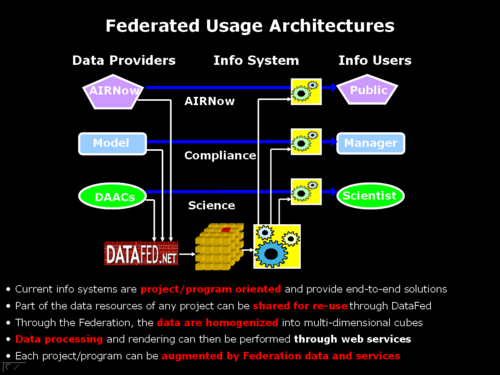

However, many formerly closed data systems are now opening up and sharing their data through DataFed or other mediators.

In a federated approach, data are homogenized and augmented with services and tools streams…

The federated resources in turn can be fed back into individual project processing streams.

The transition from the stovepipe to the networked, service-oriented architecture can be gradual and non-disruptive.
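The homogenization step in a federated approach can be sketched as a set of thin wrappers that map each provider's records onto one shared schema. Everything below is hypothetical: the two providers, their field names, the unit conversion, and the `Observation` schema are assumptions for illustration and do not describe DataFed's actual wrappers.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Observation:
    """Shared schema that every provider record is mapped onto."""
    site: str
    time: str        # ISO 8601 timestamp
    parameter: str
    value: float     # micrograms per cubic meter

def from_provider_a(row):
    # Hypothetical provider A: short field names, values already in ug/m3.
    return Observation(row["id"], row["t"], "PM25", float(row["pm25"]))

def from_provider_b(row):
    # Hypothetical provider B: verbose field names, values in mg/m3.
    return Observation(row["station"], row["timestamp"], "PM25",
                       float(row["pm25_mg_m3"]) * 1000.0)

WRAPPERS = {"a": from_provider_a, "b": from_provider_b}

def federate(sources):
    """Homogenize rows from all providers into one time-ordered list."""
    merged = []
    for provider, rows in sources.items():
        merged.extend(WRAPPERS[provider](row) for row in rows)
    return sorted(merged, key=lambda obs: (obs.time, obs.site))

merged = federate({
    "a": [{"id": "S1", "t": "2008-01-01T00:00Z", "pm25": "10.5"}],
    "b": [{"station": "S2", "timestamp": "2008-01-01T00:00Z", "pm25_mg_m3": "0.012"}],
})
```

Once records share one schema, the same list can be handed back to any individual project's processing stream without that project knowing which provider supplied each record.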

The intensely human decision system for air quality management can and needs to be augmented by computer and communication facilities.

Matching the mechanical support system to the human user needs is an art that is difficult to master.

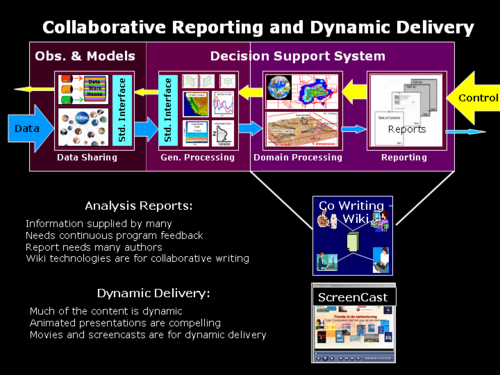

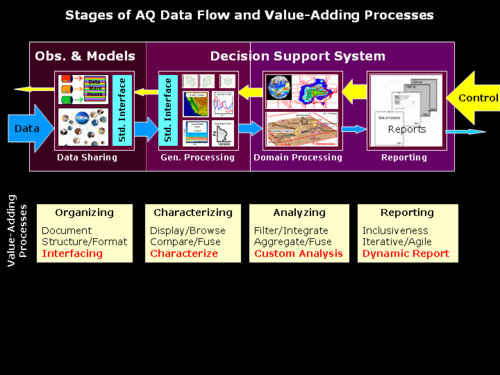

This figure represents an attempt to relate the human and machine-executed processes, represented by arrows.

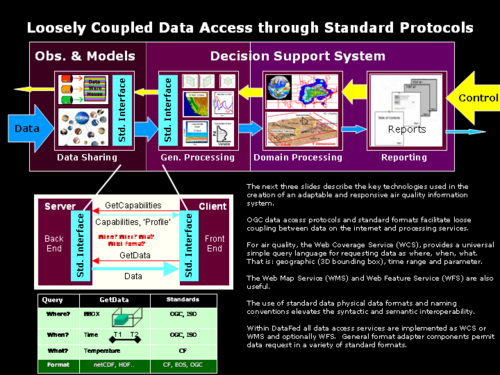

The first stage includes data access. Standardized data access services, such as those defined by the OGC standards, reduce impediments and improve the agility of the system.

Turning models and observations into informing or scientific knowledge is the next step. This step begins with characterization.

The second stage of analysis entails transforming the general knowledge into application-specific, actionable knowledge.

As the information flows from left to right, the nature of processing shifts from machine-dominated to human-dominated processing.

There are value-adding processes in each step of the information flow system.

Observational and model data are first organized and exposed to standard interfaces.

The next step is the general characterization of the pollutant by displaying, comparing, and fusing different datasets.

Subsequent custom analysis provides specific information needed for the particular decision process.

Reporting summarizes the analyses for the decision makers.

The next three slides describe the key technologies used in the creation of an adaptable and responsive air quality information system.

OGC data access protocols and standard formats facilitate loose coupling between data on the internet and processing services.

For air quality, the Web Coverage Service (WCS) provides a simple, universal query language for requesting data by where, when, and what; that is, by geographic extent (3D bounding box), time range, and parameter.
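The where, when, what pattern maps directly onto the key-value parameters of a WCS 1.0.0 `GetCoverage` request. The sketch below builds such a URL; the endpoint and coverage name are made up, the elevation range of the 3D bounding box is omitted for brevity, and the `start/end` form used for `TIME` is an assumption that a given server may or may not accept.

```python
from urllib.parse import urlencode

def wcs_getcoverage_url(endpoint, coverage, bbox, time_range,
                        width=360, height=180, fmt="NetCDF"):
    """Build a WCS 1.0.0 GetCoverage URL from what, where, and when."""
    min_lon, min_lat, max_lon, max_lat = bbox
    start, end = time_range
    params = {
        "SERVICE": "WCS",
        "VERSION": "1.0.0",
        "REQUEST": "GetCoverage",
        "COVERAGE": coverage,                                # what
        "CRS": "EPSG:4326",
        "BBOX": f"{min_lon},{min_lat},{max_lon},{max_lat}",  # where
        "TIME": f"{start}/{end}",                            # when (assumed form)
        "WIDTH": width,
        "HEIGHT": height,
        "FORMAT": fmt,
    }
    # Keep commas, colons, and slashes readable in the query string.
    return f"{endpoint}?{urlencode(params, safe=',:/')}"

# Hypothetical endpoint and coverage name, for illustration only.
url = wcs_getcoverage_url(
    "http://example.org/wcs",
    "pm25_surface",
    bbox=(-125.0, 24.0, -66.0, 50.0),
    time_range=("2008-01-01", "2008-01-31"),
)
```

Because the request is only a URL, any client that can issue an HTTP GET can ask for the same slice of data.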

The Web Map Service (WMS) and Web Feature Service (WFS) are also useful.

The use of standard physical data formats and naming conventions elevates the syntactic and semantic interoperability.

Within DataFed, all data access services are implemented as WCS or WMS, and optionally WFS. General format adapter components permit data requests in a variety of standard formats.
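The idea of a general format adapter can be sketched as a small registry that renders the same records in whichever format the client requests. This is an illustrative assumption about how such a component might look, not DataFed's implementation; the record fields and the two formats shown are hypothetical choices.

```python
import csv
import io
import json

def to_csv(records):
    """Render a list of dicts as CSV text with a header row."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=list(records[0]), lineterminator="\n")
    writer.writeheader()
    writer.writerows(records)
    return buffer.getvalue()

def to_json(records):
    """Render a list of dicts as a JSON array."""
    return json.dumps(records)

# Registry of adapters; supporting a new format means adding one entry.
ADAPTERS = {"csv": to_csv, "json": to_json}

def render(records, fmt):
    """Dispatch to the adapter registered for the requested format."""
    try:
        return ADAPTERS[fmt](records)
    except KeyError:
        raise ValueError(f"unsupported format: {fmt}") from None

records = [
    {"site": "S1", "time": "2008-01-01", "value": 10.5},
    {"site": "S2", "time": "2008-01-01", "value": 12.0},
]
csv_text = render(records, "csv")
json_text = render(records, "json")
```

Keeping the adapters separate from data access means the access service does not change when a new output format is added.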

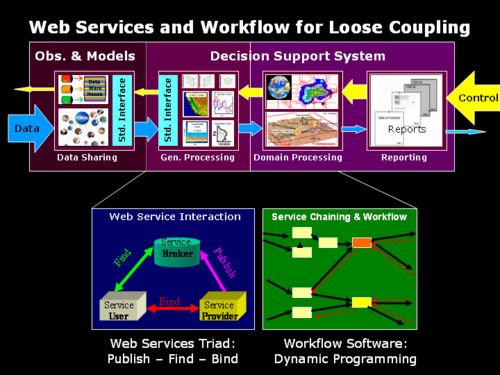

Workflow software is the technology that allows dynamic connection of loosely coupled work components called web services.

The ability to combine services offered by different providers into arbitrary workflow programs seems to be a revolutionary way of using the internet.

In DataFed, all the web services are accessible through both standard SOAP-WSDL and URL interfaces.

Consequently, web applications can be built either through SOAP-based chaining engines or through light-weight client languages, such as JavaScript and AJAX.
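Service chaining can be sketched locally by treating each service as a callable whose output becomes the next service's input. In the sketch below, plain Python functions stand in for web services; the three services, their names, and the canned data are all hypothetical, and a real chain would call the remote SOAP or URL interfaces instead.

```python
from functools import reduce

def data_access(query):
    # Stand-in for a data access service; returns canned (site, value) pairs.
    samples = {"PM25": [("A", 10.0), ("A", 14.0), ("B", 8.0)]}
    return samples[query["parameter"]]

def site_mean(rows):
    # Stand-in for a processing service: average the values per site.
    by_site = {}
    for site, value in rows:
        by_site.setdefault(site, []).append(value)
    return {site: sum(values) / len(values) for site, values in by_site.items()}

def text_report(means):
    # Stand-in for a rendering service: one line of text per site.
    return [f"{site}: {mean:.1f}" for site, mean in sorted(means.items())]

def chain(*services):
    """Compose services so each one consumes the previous one's output."""
    def workflow(payload):
        return reduce(lambda data, service: service(data), services, payload)
    return workflow

workflow = chain(data_access, site_mean, text_report)
report = workflow({"parameter": "PM25"})
```

Since the chain only relies on each service accepting the previous output, any stage can be swapped for one from a different provider without touching the others.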

A companion demonstration by Liping Di in this workshop shows the interoperability and mashing of DataFed and other service providers.

The preparation of reports and other human-human interactions can now be supported by “wiki” technologies.

Beyond unhindered collective document creation, wikis encourage and support content reuse in small granules.

Within DataFed, more and more of the human interaction takes place on the wiki.

Another interesting incremental technology is the screencast that is used to deliver this message.
