Difference between revisions of "Dust"


Revision as of 09:21, January 4, 2007

AeroCom wiki discussion entry

Go back to AeroCom/Working group structure

See also summary of AeroCom/Recommendations

AeroCom working group DUST

Participants for analysis

Ginoux, Balkanski, Mahowald, Schulz, Winker, Mann, Takemura, Miller?, Tegen?, Zender?

NEXT Telephone conference: 10th of January 17 UTC (= 18 Paris time, 12 ET, 10 Boulder Time?)

Goals

Investigate the possible impact of anthropogenic dust sources

Compare dust simulations to multiple observational datasets

Recommend properties for dust size and refractive index

Processes and possible Diagnostics

Dust erosion - wind frequency and speed ; threshold velocities; effective source fluxes; source size distribution

Dust settling - dry removal velocities per size bin;

Wet dust removal - wet scavenging efficiencies; vertical distribution; wet deposition of calcium, dust;

Scattering and absorption - refractive index; AOD; size; absorption

Short-term actions/experiments

Organise regular teleconferences

Prepare a natural and an anthropogenic dust source for a joint experiment

Follow up on ideas from earlier Mahowald publications.

Assemble observations

(Visibility, IDDI, MISR, MODIS, MODIS Deepblue, Deposition, Aeronet, Aeroce, Calipso, Asian dust observations, Ca concentrations in rain)

Retrieve from AeroCom database dust variables and wind speed fields

Eventually participate in dust conference in Italy

Data to look at

The AeroCom database has unanalysed data on dust deposition and wind speed statistics in some models.

Aeronet dust dominated sites

Refractive index and size in literature

Dust, Fe and Ca concentrations in air and precipitation

Satellite AOD in dust dominated regions (MISR, MODIS, TOMS, DEEPBLUE-MODIS, CALIPSO)

Fine mode AOD in dust regions?

Enter the DISCUSSION HERE

Michael Schulz: Dec. 14th 2006

Dear dust colleagues,

Having met Nathalie and Paul in Paris during the GEIA conference I am motivated again for suggesting joint dust work. I think there is a good chance to get a better 'dust analysis' going on the basis of the AeroCom data. But we probably need to do/propose some additional experiments.

During the AeroCom workshop we have said that a working group should be installed and Paul kindly agreed to lead it (for now). As suggested by the meeting we intend to maintain wiki pages for joint discussion and I have set up one with basic initial thoughts:

http://wiki.esipfed.org/index.php/Dust

However, Nathalie and I thought that a few telephone conferences would be really helpful to get things going. I have no real experience and can only propose Skype. Any better suggestion would be perfect!! I just tested: we could also upload images to the wiki page before or during a telecon. Not so complicated. I suggest you use the wiki page to enter proposals for a first teleconference.

Right now I am not sure we will manage to start before Christmas. But some reaction to this proposal would be nice,

have nice winter days! Michael

Ina Tegen: Dec. 15th 2006,

Dear colleagues, a dedicated AEROCOM-DUST analysis is a good idea and is quite timely considering the ever-increasing interest in dust aerosol, and I would be happy to contribute. I agree with Michael that some new experiments would be needed, which obviously have to be carefully designed. To have Paul lead a working group on this is great. I suggest to also include Stephanie Woodward from the Hadley Center in this exercise. (stephanie.woodward@metoffice.gov.uk).

After having looked at the WIKI page, here are some additional comments:

- What is the best choice for an anthropogenic dust source? While the estimates of the anthropogenic contribution to total dust are now considerably lower than the IPCC 2001 number, this emission and its geographical distribution are still very uncertain. In addition to ‘direct’ anthropogenic dust sources such as agricultural soil disturbance, it would be nice to have a ‘second order’ anthropogenic dust source that is caused by anthropogenic climate change, i.e. present minus preindustrial sources, taking into account any changes in surface wind and precipitation as well as vegetation, such as estimated recently by Natalie. In addition to her results it would be useful to have estimates from other models to minimize a possible model dependence of these results. Putting together a map of vegetation changes from different vegetation model results for the preindustrial to present may be helpful.

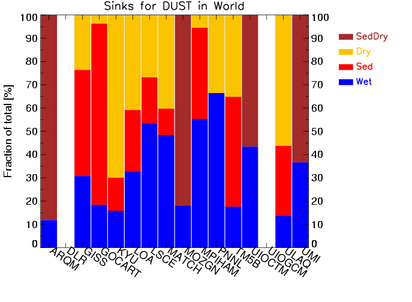

- A while ago I looked at the AEROCOM results for dust. It seemed that the differences between the results for Experiment B (fixed sources) were larger than would be expected from differences in deposition schemes alone (although these may be considerable too). Indeed, while everyone used the same emission fluxes, they were distributed quite differently over the particle sizes because of the different treatments of size distribution in the models (mode vs bin schemes). Of course, if the same mass is put into larger size bins, the atmospheric load depletes more quickly due to higher gravitational settling velocities, and the importance of the different deposition processes (as seen in the Figure included in the Wiki page) partly differs simply because of this. It is crucial to pay attention to this in any new runs comparing results with identical dust source fluxes: if the effects of the different transport and deposition schemes are to be compared, it has to be ensured that the sources are in fact identical, including an identical initial size distribution.

- The choice of the dust refractive index is of course important for computing radiative flux changes, but to my knowledge no model yet uses refractive indices which vary with the source region to account e.g. for hematite/goethite content (my apologies if I have overlooked something here). Also, I see that you intend to compare Ca contents of the dust; this should also vary for the different source regions. So I am not sure if we can make any real progress by model intercomparison, apart from compiling appropriate literature data. BTW, the German SAMUM dust experiment that took place in Morocco this past summer should have some very good data on dust optical properties by next year (the measurement results are currently still being analysed).

- Which should be the focus year(s) for those models which are driven by reanalysis fields or nudged meteorology? For the AEROCOM years I suggest concentrating on 2001, which had ACE-Asia and some nice Saharan dust events. If additional years are simulated, I suggest 2006 of course, if we want to make use of Calipso data. Also, for this year some results from the SAMUM and AMMA field campaigns will eventually become available.

- If possible, it would be useful to perform an emissions-only experiment in addition to the full atmospheric dust run to systematically compare the performance of the different schemes, e.g. computing dust emissions for the different schemes with identical surface wind speeds in addition to computing the fluxes with their own wind fields. Of course it will be difficult to decide on the ‘best’ emission scheme this way, as there are no global-scale dust emission flux data, but the performance could possibly be tested in terms of modelled ‘emission events’ compared to meteorological dust storm data, IDDI, and TOMS AI > 0.7 (?) events (any other ideas?).
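To illustrate the point above about larger size bins depleting faster, here is a minimal Python sketch of the Stokes settling velocity of a spherical particle. It is only a rough guide: the particle density (2650 kg/m3, roughly quartz), the air viscosity and the neglect of air density, slip correction and particle shape are all assumptions made here, not values used by any AEROCOM model.

```python
def stokes_settling_velocity(diameter_um, particle_density=2650.0,
                             air_viscosity=1.8e-5, g=9.81):
    """Stokes terminal velocity in m/s for a sphere of the given diameter.

    Assumed values: particle density in kg/m3, dynamic viscosity of air
    in Pa s. Air density and the slip correction are neglected.
    """
    radius_m = 0.5 * diameter_um * 1e-6
    return 2.0 * particle_density * g * radius_m ** 2 / (9.0 * air_viscosity)


if __name__ == "__main__":
    # The velocity scales with the square of the diameter, so the same
    # mass placed in a larger bin is removed much faster.
    for d in (1.0, 5.0, 10.0):
        v = stokes_settling_velocity(d)
        print(f"diameter {d:5.1f} um   settling velocity {v * 100.0:.4f} cm/s")
```

Because the velocity grows with the square of the diameter, two models given the same total emission but different initial size distributions will produce different loads before any difference in deposition scheme comes into play.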

Regarding a telephone conference, sometime January would be fine. Having never set up one myself, I cannot offer any other suggestions on the best way to do this, sorry.

A happy holiday season to all of you, Best wishes, Ina

Dr. Ina Tegen Leibniz Institute for Tropospheric Research Permoserstr. 15 04318 Leipzig Germany

Tel.: +49 (0)341 235 2146 Fax: +49 (0)341 235 2139 Email: itegen@tropos.de

Stefanie Woodward: Dec. 18th 2006,

Dear Ina et al,

I think a dedicated AEROCOM dust intercomparison would definitely be a good idea, and I'd be interested in being involved, if possible.

I agree with the points that Ina makes. The idea of a comparison of emissions schemes is particularly interesting, as they must account for a large part of the differences between models as they are actually run. It could be a challenge to set up a level comparison, though, as emissions schemes tend to be tuned to the GCM they're run in (intentionally or otherwise), so if the prescribed wind fields etc vary significantly from those in the "parent" GCM, dust emissions could be very different from what the scheme normally produces. As to looking at "second order anthropogenic dust", I have some data which might be useful: fields from HadAM3 simulations run with vegetation fractions and SSTs taken at 2000 and 2100 from a coupled climate - carbon-cycle simulation, which allowed the vegetation changes to feed back on the climate.

I don't know what the projected timescale for an AEROCOM dust analysis is, but we'd be in a much better position to take part in something later in the year. Our latest climate model is still in the process of being tuned, though this should, hopefully, be completed fairly early next year. Also, we don't at the moment have the facility for running the model for specific time periods: this should be available later in the year.

Best wishes,

Steph

Paul Ginoux: Dec. 18th 2006

Hi Stephanie,

It seems that we are in the same boat, I mean tuning our GCM for IPCC AR-5.

Michael's idea was to have a working group on dust within AEROCOM to address some specific issues. One element is dust emission, and for the comparison of the different sources and emission parameterizations, Reha Cakmur and Ron Miller have developed and published a method that I would suggest we follow to start. The idea would follow the template of AEROCOM-A: each dust model provides a set of defined fields (used by Cakmur-Miller's code), these fields are compared with different sets of observations, and by varying the weighting of each dataset different error analyses are performed (Cakmur et al., JGR, 2006). The fields are generally archived by models, so the models would not need to be re-run. Later, once the ball is rolling, we could propose AEROCOM_B type experiments.

Ina has suggested some interesting ideas and as others would like to add also their ideas, the best would be to use a wiki page, as suggested by Michael. Meanwhile, I will contact Ron to ask for his participation and thoughts about this.

Happy holidays,

Paul.

Ron Miller: Dec 19th 2006

Hello,

Paul's suggestion is a good one.

I originally talked with Stefan at the Los Alamos Aerosol Workshop in July, and subsequently with Natalie and Michael, about applying the Cakmur et al method to the original AEROCOM models. However, the obs comparison would work just as well on the new models.

In particular, we would like not only to measure the simulation quality with the observations, but also see to what extent the simulation can be improved by tuning.

Here is the background (Paul, Ina, and Charlie were co-authors, and Natalie was a reviewer so they can skip this next paragraph.)

Background: One of the big uncertainties in global dust modeling is how to calculate the emission for a given wind speed: i.e. if E = C u^2 (u - u_T ) where E=emission, u=surface wind speed, and u_T=threshold for emission, what is C? In practice, people tune the coefficient C so that their model output is in rough agreement with a particular data set, e.g. concentration measured at Barbados. In Cakmur et al, we decided to tune to match simultaneously to a number of data sets representing a worldwide array of observations. There were a few motivations for this: i) we wanted to tune quantitatively, ii) we wanted to be explicit about the data and criteria we used for tuning, and iii) we wanted to use as many obs as possible with the hope that our tuning would be robust, and not dependent upon our choice of data sets. As a result of this tuning, we derived a global emission that was in optimal agreement with the observations. We also found that the minimum model error with respect to the obs was not sharply defined; thus, in spite of all our input data, the optimal emission actually had a large uncertainty (and ranged between 1000 and 3000 Tg). Most of this uncertainty came from our choice of dust source region.
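As a minimal sketch of the emission relation quoted above, E = C u^2 (u - u_T), the following Python snippet shows why tuning C amounts to rescaling the whole emission. Setting emission to zero below the threshold is an assumption made here, and the wind speeds, threshold and values of C are hypothetical illustration values, not taken from Cakmur et al or from any model.

```python
def dust_emission(u, u_t, c):
    """Emission E = C u^2 (u - u_T); assumed to be zero at or below the threshold."""
    if u <= u_t:
        return 0.0
    return c * u * u * (u - u_t)


def total_emission(wind_speeds, u_t, c):
    """Sum of the emission over a series of surface wind speeds."""
    return sum(dust_emission(u, u_t, c) for u in wind_speeds)


if __name__ == "__main__":
    winds = [3.0, 6.0, 9.0, 12.0]   # hypothetical surface wind speeds, m/s
    threshold = 6.5                 # hypothetical threshold u_T, m/s
    for c in (1.0, 2.0):
        print(f"C = {c}: total emission = {total_emission(winds, threshold, c)}")
```

Since E is linear in C, doubling C doubles the emission at every time and place, which is why the tuning can be applied to archived output without re-running the model.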

Plan: Stefan, Michael, Natalie and I discussed extending this to the AEROCOM models. There would be two benefits i) we could bring each model into optimal agreement with the obs by rescaling all the variables on the dust cycle (equivalent to tuning C) and see how far the submitted model emission is from optimal agreement with obs. In other words, we could see to what extent each model benefits from tuning. ii) we could see what range of calculated emission results among the suite of models.

Method: This would require someone (most likely me in all my free time!) extracting the necessary diagnostics from each AEROCOM model and running it through Reha Cakmur's optimization program. A major assumption is that all the model output is in a single `easy to access' format such as netcdf. For each model, we can calculate the improved agreement with obs that results from tuning, along with the optimal emission (and other dust cycle variables like load). We can also calculate which obs are the strongest constraint on each model (as in Figure A3 in Cakmur et al). I also plan to expand the range of datasets to benefit from more recent obs, such as MODIS and MISR AOT for example, and possibly a deposition dataset from Francois Dulac that I have heard rumors about. (When Reha and I started this project for the GISS model, the MODIS and MISR records were too short to offer a stable climatology, but this is no longer the case.)
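The rescaling step can be sketched in a few lines of Python. This is a plain weighted least-squares illustration under stated assumptions, not Reha Cakmur's optimization program: the error measure, the dataset weights and all numbers below are hypothetical, and the actual method in Cakmur et al may normalize and combine the datasets differently.

```python
def optimal_scale(datasets):
    """Scale factor s minimizing sum of w * (s * model - obs)^2.

    datasets is a list of (weight, model_values, obs_values) tuples,
    one per observational dataset.
    """
    numerator = 0.0
    denominator = 0.0
    for weight, model, obs in datasets:
        for m, o in zip(model, obs):
            numerator += weight * m * o
            denominator += weight * m * m
    return numerator / denominator


def weighted_error(scale, datasets):
    """Weighted squared error of the rescaled model against all datasets."""
    return sum(weight * (scale * m - o) ** 2
               for weight, model, obs in datasets
               for m, o in zip(model, obs))


if __name__ == "__main__":
    # Hypothetical model/observation pairs for two datasets.
    aot = (1.0, [0.2, 0.4], [0.3, 0.6])
    deposition = (1.0, [1.0, 2.0], [1.0, 2.2])
    s = optimal_scale([aot, deposition])
    print("optimal scale:", s)
    print("error before tuning:", weighted_error(1.0, [aot, deposition]))
    print("error after tuning:", weighted_error(s, [aot, deposition]))
```

Changing the weights shifts the optimal scale toward the datasets that are trusted more, which is one simple way to see which observations constrain a given model most strongly.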

To start, I would need to get some AEROCOM model output, and work on extracting the necessary fields. A longer term dream would be to create a web interface at the AEROCOM site where users could upload their model output and have their model tuned on the spot (providing an optimal emission) using a subset (or entirety) of the available data. This might aid model development for individual groups. However, I currently have no web programming skills, so I would need some guidance if we got this far. A more pressing issue is me finding time to tune the AEROCOM models, but Perugia might be a good incentive.

One issue is getting permission to use the University of Miami surface concentration observations, but Joe Prospero has been generous about this and I don't think he would object.

Comments welcome!

-Ron.

P.S. For what it is worth, GISS is also still tuning our AR-5 model.

Ron Miller

NASA Goddard Institute for Space Studies 2880 Broadway New York, NY 10025

Armstrong 550 Dept of Applied Physics and Applied Math Columbia University New York NY 10027

ph : (212) 678-5577 FAX: (212) 678-5552 email: rlm15@columbia.edu

rmiller@giss.nasa.gov

Paul Ginoux: Dec. 19th 2006

Hi Ron,

I am glad to hear directly from you that you are willing to participate and share your analysis code, but I would not like you to carry the whole burden of the work. Would it not be simpler for you if we provided the fields required by your code in netcdf format? If I remember correctly a discussion with Michael, your "dream" is possible (if not immediately, then in the near future). The problem will be the manpower: we are hiring a programmer who could work in part on setting up the interface code/web and the post-analysis with IDL (or another graphics program), but ... we are still searching for someone.

If you are OK with running the code multiple times on files with exactly the same format but different values, and if the modelers are OK with providing the netcdf files, this could be a nice first test.

I was in NY this morning, and thought to stop by GISS to discuss this with you, but I was afraid to disturb you. However, I could pass by another day.

Regards,

Paul.

Michael Schulz: Dec. 20th 2006

Hi Ron and Paul,

Christmas is approaching fast for me, but I can promise to pull the available data out of the AeroCom database in January: netcdf, pretty harmonised. I think that's the minimum service from our side. So don't worry about that.

In the near future we will also give out accounts and hopefully have an automated tool. But let's start with pulling out data and then analysing them in the old-fashioned way.

If I remember well, the Cakmur analysis optimised the ratio between silt and clay fractions. This will not be available for most of the model output. But we may try to get model fields by varying the total load in accordance with several observations; that we should try.

Ron, for now let's try to fix a list of observations, so that we know what I should pull out of the AeroCom database. ...eventually use the wiki for that???

Season's greetings, Michael

Paul Ginoux: Dec. 20th 2006

Hi Michael,

Thanks, Michael, for offering your help. Ron: would it not be simpler for you if the modelers provided, in netcdf format, the monthly climatological mean fields (after you have specified independent and dependent field names, units, grid)?

- column integrated size distribution at the dusty AERONET sites
- surface concentration of dust at the U. of Miami sites
- dust deposition at the DIRTMAP sites
- dust deposition at the Ginoux_2001 sites
- AOD at 550 on the grid you specify

Would you specify all this information?

Modelers: Would it be a big burden to provide this information to Ron in netcdf format within 2 months?

Could the Ron-Reha method be extended to other aerosols?

For the datasets, next year will bring fantastic satellite data for dust models: MODIS Deep Blue (February 2007), Calipso. There are some interesting data, although in their infancy, to consider: 3D dust retrievals from AIRS by a French group (I forget the acronym of the lab but Cyril Cervoisier is here at Princeton and will provide more info). Of course AMMA, and some upcoming field campaigns. I would like to propose the TOMS AI technique developed by Omar Torres and myself. This will require someone next year to ingest all these data, but as Michael said, let's start by getting what we have: Ron-Reha's code.

Thanks,

Paul.

Yves Balkanski: Dec. 20th 2006

Hello all,

This list is getting active and we should do it in Wiki. This way we can keep a nice history of our suggestions and can easily include them in the protocol for the run(s).

I would strongly suggest that we keep track of the size distribution in the different models from the emission regions to the receptor sites. If we do not, the integrated deposition will be very hard to interpret.

Best,

Yves

Ron Miller: Dec. 20th 2006

Response to Paul, Michael, and Yves.

(PAUL)

> If you are OK to run multiple times the code with the exact same format but different values, this could be a first nice test, and if the modelers are OK to provide the netcdf file.

Yes, uniform netcdf output would help greatly. My assumption is that previous AEROCOM output is in a uniform format, which Michael seems to confirm in his subsequent email:

(MICHAEL)

> Christmas is approaching fast for me - but I can promise for January to pull out the available data from the AeroCom data base. netcdf, pretty harmonised. I think that's the minimum service from our side. So don't worry on that.

> If I remember well - the Cakmur analysis optimised the ratio between silt and clay fractions. This will not be available for most of the model output. But we may try to get model fields through variation of the total load in accordance with several observations, that we should try.

Yes, my plan for the old output is simply to optimize the total emission, and not the silt and clay separately. (Sorry for overlooking this point.) This would be simpler.

> Ron, for now let's try to fix a list of observations, so that we know what I should pull out of the AeroCom database. ...use eventually wiki for that???

Yes, I should learn to use wiki.

In the meantime....

As for Cakmur et al, we used:

AOT at 550 nm (TOMS, AVHRR, AERONET).

Surface Concentration (U Miami)

Deposition (Literature values, esp Ginoux et al 2001; DIRTMAP from Karen Kohfeld and Ina)

Size Distribution (AERONET from Paul and Oleg Dubovik, DIRTMAP from Karen Kohfeld and Ina)

Since we started our study, MODIS and MISR have accumulated enough data to infer climatologies. Also, many AERONET station records have become long enough, so we could augment our list of stations.

We would like to get climatological values of model output in netcdf format (as Paul notes in his subsequent email), with the lon-lat grid in addition to the physical variable. Then, we could extract the locations for comparison to obs automatically.
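The automatic extraction mentioned above can be sketched as a nearest-grid-point lookup. This is only an illustration in plain Python under stated assumptions: the field is assumed to have already been read from the netcdf file into nested lists, the grid, field values and site coordinates (approximate for Barbados, invented for the second site) are hypothetical, and a real comparison might interpolate rather than take the nearest cell.

```python
def nearest_index(coords, value, period=None):
    """Index of the grid coordinate closest to value.

    If period is given (360 for longitude), distance is measured
    around the circle so that 359 and 1 degrees are 2 degrees apart.
    """
    def distance(c):
        d = abs(c - value)
        if period is not None:
            d = d % period
            d = min(d, period - d)
        return d
    return min(range(len(coords)), key=lambda i: distance(coords[i]))


def sample_at_sites(field, lats, lons, sites):
    """Model value at the grid cell nearest to each site.

    field[j][i] is the value at latitude lats[j] and longitude lons[i];
    sites maps a site name to a (lat, lon) pair in degrees.
    """
    values = {}
    for name, (lat, lon) in sites.items():
        j = nearest_index(lats, lat)
        i = nearest_index(lons, lon, period=360.0)
        values[name] = field[j][i]
    return values


if __name__ == "__main__":
    lats = [-10.0, 0.0, 10.0, 20.0]
    lons = [0.0, 90.0, 180.0, 270.0]
    # Hypothetical field: value encodes the row and column index.
    field = [[10 * j + i for i in range(len(lons))] for j in range(len(lats))]
    sites = {"Barbados": (13.2, -59.5), "TestSite": (1.0, 350.0)}
    print(sample_at_sites(field, lats, lons, sites))
```

Treating longitude as periodic lets the same code handle grids on 0 to 360 and on -180 to 180 degrees, which is a common source of mismatches when output from several models is combined.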

(PAUL)

> Modelers: Would it be a big burden to provide this information to Ron in a netcdf format within 2 months?

2 months?!? Your optimism is exemplary! (I'm going to be swamped with USCCSP duties in the next month or two so progress will be incremental.) The main deadline I see looming is the Perugia meeting in July. Is there an earlier deadline?

> Could the Ron-Reha method be extended to other aerosols?

Yes, this is quite simple, if people can decide on what obs they want to compare.

> For the datasets, next year will bring fantastic satellite data for dust models: MODIS Deep Blue (February 2007), Calipso. There are some interesting data, although in their infancy, to consider: 3D dust retrievals from AIRS by a French group (I forget the acronym of the lab but Cyril Cervoisier is here at Princeton and will provide more info). Of course AMMA, and some upcoming field campaigns. I would like to propose the TOMS AI technique developed by Omar Torres and myself. This will necessitate someone next year to ingest all these data, but as Michael said let's start by getting what we have: Ron-Reha's code.

Yes, these are exciting, and I believe that we can add them to the comparison quite easily (so long as modelers archive the necessary variables.)

(YVES)

> This list is getting active and we should do it in Wiki. This way we can keep a nice history of our suggestions and can easily include them in the protocol for the run(s).

Good point. I will have to make an effort to learn how to use Wiki.

> I would strongly suggest that we keep track of the size distribution in the different models from the emission regions to the receptor sites. If we do not, the integrated deposition will be very hard to interpret.

Yes, at the very least, we currently compare to AERONET size retrievals.

Cheers,

-Ron.


Mian Chin: Dec. 22nd 2006

Dear all,

I've seen a lot of enthusiasm in these email exchanges! I just managed to catch up with your conversations. Here are my two cents (ignore whatever you think is wrong):

1. AEROCOM dust experiments: I totally support this idea and would be very much interested in participating. I am particularly interested in using different dust sources in our model. Of course a lot can be learned from the existing AEROCOM phase A, since it has already accumulated a rich ensemble of output from several models with concentrations, AOD, size bins, etc. in a uniform netcdf format. These models use different dust sources (including Ginoux, Tegen, Marticorena, and probably Zender), so some nice analyses can be done from the existing results. New AEROCOM modeling experiments could be leveraged with other planned experiments, such as HTAP. If you have seen Michael's email about HTAP experiments, we are looking for results from dust source-receptor experiments (SR6 and SR6d) to be submitted by April 15, 2007. With some careful planning, SR6 and 6d can be a part of more comprehensive dust experiments.

2. Dust emission: I think the most misleading oversimplification in most dust model intercomparison articles (probably including AEROCOM) is the disconnect between the total mass emitted and the size range included. For example, in our model using GEOS-3 winds with dust sizes from 0.1 to 6 micron (same size bins as in Ginoux et al. 2001), total dust emission is 2060 Tg/yr for 2001; but emission increases by more than 25% when we add another coarse bin (6-10 micron). Imagine if we had extended the emission size to 100 micron! Therefore we should not talk about "large discrepancies in dust emission" unless the size ranges are similar.

3. On the other hand, large particles have a short lifetime, so they don't travel far enough from their sources to contribute much to total dust burden and AOD. Optimizing dust emission from AOD or surface measurements over oceans applies to certain size ranges, such as Cakmur et al's paper for dust emissions with sizes smaller than 8 micron. In addition, the mass extinction coefficient that converts mass to AOD could be quite different among the AEROCOM models. Caution should be taken when the same optimization code is used for different AEROCOM models.

4. Using satellite/AERONET data to constrain dust emissions: Be very careful. There are actually very few AERONET sites where dust dominates all the time, and satellite retrievals over land usually have large error bars. Also, different satellite data can differ by a factor of 2 in AOD. Besides, emission is not the only problem: the removal process is just as important but is very difficult to evaluate.

5. Thinking ahead, we may also design some dedicated experiments for biomass burning aerosol only, that would deal with a suite of parameters used in estimating biomass burning emissions...
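Point 2 above can be made concrete with a small Python sketch that compares emission only over a size range common to both models. The 2060 Tg/yr total for 0.1 to 6 micron is the figure quoted above; the value for the extra 6-10 micron bin, the model names and the representation of bins as (lower, upper, emission) tuples are hypothetical, chosen only to illustrate the bookkeeping.

```python
def emission_in_range(bins, d_min, d_max):
    """Sum the emission of the bins lying entirely inside [d_min, d_max].

    bins is a list of (lower_diameter_um, upper_diameter_um, emission_tg_per_yr).
    Bins straddling the limits are skipped rather than split.
    """
    return sum(e for lo, hi, e in bins if lo >= d_min and hi <= d_max)


if __name__ == "__main__":
    model_a = [(0.1, 6.0, 2060.0)]
    # Same fine range plus a hypothetical extra coarse bin.
    model_b = [(0.1, 6.0, 2060.0), (6.0, 10.0, 600.0)]

    for name, bins in (("A", model_a), ("B", model_b)):
        total = sum(e for _, _, e in bins)
        common = emission_in_range(bins, 0.1, 6.0)
        print(f"model {name}: total {total} Tg/yr, 0.1-6 um only {common} Tg/yr")
```

In this made-up case the totals differ by several hundred Tg/yr while the emission over the shared size range is identical, so the apparent discrepancy comes entirely from the size range reported.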

Happy holidays and have a productive new (aerosol) year,

Mian

Ron Miller: Dec. 20th 2006

Hi Mian,

Thanks for your thoughtful comments. As you note, Cakmur et al make a number of assumptions, which means that their method has to be applied judiciously with a recognition of its assumptions and limitations.

re 2): Zender et al (Eos 2004) advocated that emission be compared across a common size range (with diameter limited to less than roughly 10um). One remaining challenge is that authors do not always state whether their specified size range refers to particle radius or diameter! As you note, load is less sensitive to size range because the larger particles (whose inclusion varies most among models) fall out faster. We (and AEROCOM) might do better to focus on load instead of emission to be less vulnerable to ambiguously documented size categories. This might also be justified because we have many more observational constraints upon load than emission.

3) Incorrect optical parameters are a source of AOT error that can't be fixed by tuning the emission. Uncertainties in the obs themselves are also a problem, but we could in principle weight measurements in which we have greater confidence, such as ocean AOT (vis-a-vis land).

4) In Cakmur et al, we tried to estimate the contribution of other aerosols to AOT by using a modeled multi-aerosol distribution. Unfortunately, we found that the optimal dust emission was sensitive to this (highly uncertain) contribution, which supports your point. Possibly, separate retrievals for the fine and coarse fraction by MODIS and MISR could offer some guidance, at least over the ocean.

Your comment about removal uncertainty is also apt. The tuning by Cakmur et al assumes that the biggest source of model uncertainty is the coefficient linking emission to wind speed. Obviously, this has to be tested (laboriously...) by each model. Parenthetically, we did calculate the sensitivity of the error to emission of different size classes, and found that the model error was nearly unchanged by variations in the larger categories. The obs provide the strongest constraint on particles with radii less than about 5 um diameter.

Despite these uncertainties, we feel that the Cakmur et al methodology is useful because modelers often tune emission, even if only qualitatively. We feel it would be useful to do this tuning explicitly (while documenting the criteria). Obviously, we need to note all the caveats, but even without any tuning of emission, the error criterion provides a semi-objective way of characterizing the models. We will solicit advice from the mailing list when constructing our error measure.

Cheers and happy holidays,

-Ron.