RDA Big Data/Analytics

Big Data Definitions

Gartner’s big data definition: “Big data” is high-volume, -velocity and -variety information assets that demand cost-effective, innovative forms of information processing for enhanced insight and decision making.

- Variety: companies are digging out amazing insights from text, locations or log files (multi-sensor data for science).

- Velocity is the most misunderstood data characteristic: it is frequently equated to real-time analytics. Yet velocity is also about the rate of change, about linking data sets that arrive at different speeds, and about bursts of activity (data fusion issues: time, space and resolution).

- Volume is about the number of big data mentions in the press and social media.

Jim Frew:

My favorite definition is: You can't move it; if you want to use it, you have to go where it is (kind of like a pipe organ...)

For data generally, that means it has to be housed in a system that can do everything you'd want to do to it.

I.e., you send the problem to the data, not vice versa.

For science data specifically, this means the data has to live in a reasonably complex processing environment.

Kuo: In the context of scientific research

- I think we can all agree that “Big Data” has to do with more than just "large data volume," because "large" is vague and relative.

- The existence of “Big-Data problems” implies the existence of “Small Data,” which apparently is not much of a problem.

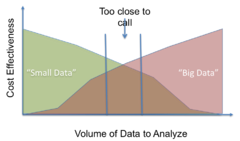

- I believe the definition of "Big Data," or more precisely its distinction from "Small Data," should rely on "cost effectiveness": that is, on which kind of technique, "Big-Data" or "Small-Data," is more cost effective at a given scale. The reason is that, just because “Small Data” do not pose much of a problem, it does not necessarily mean that the way we are dealing with them is cost effective.

- How do we define “cost effectiveness” in this context? I would like to propose the “cost to effective FLOPS” ratio.

- “Cost” should include all the costs incurred, for example, not only costs of hardware and software but also data management as well as administration and maintenance of the compute facility. Similarly, “Effective FLOPS” should take into account all computation overheads, such as I/O.

- Although the real, total cost is hard to estimate, I suspect we can all agree that when the volume of data to analyze is below a threshold (a range, really) it is more cost effective to use “Small Data” approaches, and when the volume is above that range it is more cost effective to adopt “Big Data” approaches. Within the range itself there is no obvious “winner,” i.e. neither approach has an edge over the other.

- The above point implies the distinguishing characteristic of "Small Data" versus "Big Data" techniques: in general, the cost-effectiveness of "Small-Data techniques" decreases as the data volume increases, while that of "Big-Data techniques" increases with the data volume.

- This may be best summarized and illustrated by the graph at right:

- It should be understood that the curves are problem and technology dependent.
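The crossover argument above can be sketched numerically. The following is only a toy illustration: the cost figures, peak FLOPS, and overhead models are hypothetical placeholders invented for this sketch, not measurements, and the function names are not part of the original proposal. It computes the proposed "cost to effective FLOPS" ratio (lower is more cost effective) for a notional "Small-Data" and a notional "Big-Data" setup, and finds the first data volume at which the latter wins.

```python
def cost_per_effective_flops(total_cost, peak_flops, overhead_fraction):
    """Return total cost divided by effective FLOPS (lower is better).

    overhead_fraction is the share of peak FLOPS lost to overheads such as I/O.
    """
    effective_flops = peak_flops * (1.0 - overhead_fraction)
    return total_cost / effective_flops


def small_data_ratio(volume_tb):
    # Hypothetical: a cheap workstation whose I/O overhead grows with volume.
    overhead = min(0.99, 0.05 + 0.009 * volume_tb)
    return cost_per_effective_flops(10_000.0, 1e11, overhead)


def big_data_ratio(volume_tb):
    # Hypothetical: an expensive cluster whose fixed overhead is amortized
    # as the data volume grows.
    overhead = max(0.10, 0.95 - 0.01 * volume_tb)
    return cost_per_effective_flops(1_000_000.0, 1e14, overhead)


def first_volume_where_big_wins(volumes_tb):
    """Return the first volume at which the Big-Data ratio is no worse."""
    for volume in volumes_tb:
        if big_data_ratio(volume) <= small_data_ratio(volume):
            return volume
    return None


if __name__ == "__main__":
    crossover = first_volume_where_big_wins(range(0, 101))
    print("Toy crossover volume (TB):", crossover)
```

With different (equally hypothetical) parameters the crossover moves, which is the sense in which the curves are problem and technology dependent.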

Use Case Template

Science Driver = [ Short Description including field(s) of application, user needs ]

Data Characteristics

- Data Set Names

- Volume = [Approximate size TB/PB]

- Data Type = [Array, Tabular, Text etc]

- Heterogeneity = [Spatial, Temporal scale mismatch etc]

- Format = [bin, ascii, netCDF etc]

Analysis Needs
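For contributors who want to collect use cases in machine-readable form, the template above could be captured as a small data structure. This is a minimal sketch only: the class and field names are assumptions chosen to mirror the template headings, and the example values are placeholders rather than a real use case.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class DataCharacteristics:
    data_set_names: List[str]
    volume: str          # approximate size, e.g. in TB or PB
    data_type: str       # e.g. "Array", "Tabular", "Text"
    heterogeneity: str   # e.g. "Spatial, temporal scale mismatch"
    data_format: str     # e.g. "bin", "ascii", "netCDF"


@dataclass
class UseCase:
    science_driver: str  # short description: field(s) of application, user needs
    data: DataCharacteristics
    analysis_needs: List[str] = field(default_factory=list)


# Placeholder entry showing how the template fields map onto the classes.
example = UseCase(
    science_driver="[Short description]",
    data=DataCharacteristics(
        data_set_names=["[Data set name]"],
        volume="[Approximate size TB/PB]",
        data_type="Array",
        heterogeneity="Spatial, temporal scale mismatch",
        data_format="netCDF",
    ),
    analysis_needs=["[Analysis need]"],
)
```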

Science Use Cases

Use Case 1: Event Analysis ( Dr. Tom Clune, Dr. Kuo GSFC/NASA)

An Earth Science event (ES event) is defined here as an episode of an Earth Science phenomenon (ES phenomenon). A cumulus cloud, a thunderstorm shower, a rogue wave, a tornado, an earthquake, a tsunami, a hurricane, or an El Niño, is each an episode of a named ES phenomenon, and, from the small and insignificant to the large and potent, all are examples of ES events. An ES event has a finite duration and an associated geo-location as a function of time; it is therefore an entity in four-dimensional (4D) spatiotemporal space.

The interests of Earth scientists typically rivet on Earth Science phenomena with potential to cause massive economic disruption or loss of life. But, broader scientific curiosity also drives the study of phenomena that pose no immediate danger, such as land/sea breezes. Due to Earth System’s intricate dynamics, we are continuously discovering novel ES phenomena.

We generally gain understanding of a given phenomenon by observing and studying individual events. This process usually begins by identifying the occurrences of these events. Once representative events are identified or found, we must locate associated observed or simulated data prior to commencing analysis and concerted studies of the phenomenon. Knowledge concerning the phenomenon can accumulate only after analysis has started. However, except for a few high-impact phenomena, such as tropical cyclones and tornadoes, finding events and locating associated data currently may take a prohibitive amount of time and effort on the part of an individual investigator. And even for these high-impact phenomena, the availability of comprehensive records is still only a recent development.

The lack of comprehensive records for most ES phenomena is mainly due to the perception that they do not pose an immediate and/or severe threat to life and property. Thus they are not consistently tracked, monitored, and catalogued. Many phenomena even lack commonly accepted defining criteria. Moreover, Earth Science observations and data have accumulated to a previously unfathomable volume; the NASA Earth Observing System Data and Information System (EOSDIS) alone archives several petabytes (PB) of satellite remote sensing data, and its holdings are steadily increasing. All of these factors contribute to the difficulty of methodically identifying events corresponding to a given phenomenon and significantly impede systematic investigations.

In the following we present a couple of motivating scenarios, demonstrating the issues faced by Earth scientists studying ES phenomena.

Heat Wave

“Heat kills by taxing the human body beyond its abilities. In a normal year, about 175 Americans succumb to the demands of summer heat. Among the large continental family of natural hazards, only the cold of winter—not lightning, hurricanes, tornadoes, floods, or earthquakes—takes a greater toll.” (National Weather Service web site)

Heat waves pose a serious public health threat, yet a standard definition of “heat wave” does not exist. Many researchers argue for a changing threshold in heat wave definition (Robinson 2001; Abaurrea et al 2005, 2006). In hot and humid regions, physical, social, and cultural adaptations will require higher thresholds to ensure that only those events perceived as stressful are identified.

A researcher interested in understanding heat waves will first have to devote a great deal of time to research before associated, concurrent observations (ground-based or remote sensing) can be obtained and analyzed. A literature search for heat wave definitions will yield multiple conflicting definitions. Some decision on what qualifies as a heat wave would need to be made, followed by a search for the incidences that satisfy the definition.

Each investigator who is not in collaboration or association with other researchers of like interest must repeat a similar process. Intense and prominent episodes of heat waves are easier to find and not likely to raise questions of definition. Thus, a few intense cases are likely studied repeatedly with great scrutiny while the less intense cases are largely ignored or never identified. An intriguing possibility with undesirable consequences is that emphasis on intense cases (or cases that impact populated regions) has been known to induce biases in past investigations of some phenomena. A systematic treatment of events could thus be an important mechanism for eliminating such bias in certain types of investigation.

Additionally, the criteria for heat waves perhaps should be defined depending on the purpose of the investigation. If the purpose concerns the physiological effect of excessive heat, aspects such as physical, social, and cultural adaptations should be taken into account. In contrast, for heat waves as a purely physical phenomenon, latitude-dependent absolute thresholds would be more appropriate.

Blizzard

According to the National Weather Service glossary: “A blizzard means that the following conditions are expected to prevail for a period of 3 hours or longer: 1) sustained wind or frequent gusts to 35 miles an hour or greater; and 2) considerable falling and/or blowing snow (i.e., reducing visibility frequently to less than 1/4 mile).” Consequently, blizzard is also an ES phenomenon that does not have an unambiguous definition, because both “considerable” and “frequently” are vague and not quantified. There exists an opportunity to explore a more definitive specification.

The blizzard entry of Wikipedia provides a list of well-known historic blizzards in the United States as well as one instance in Iran in 1972, but is obviously far from complete, especially from a global perspective. Although reanalysis data may not contain a visibility field and usually have a temporal resolution coarser than 3 hours, one might still use the wind and falling snow criteria to find a collection of incidences that contains (i.e., is a superset of) the great majority of blizzard events. The spatiotemporal coordinates obtained through this mechanism can in turn aid in the discovery of concurrent, associated observations to determine the qualified cases of blizzards.
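The superset search described above can be sketched for a single grid point as a scan for sustained runs of time steps that meet the wind and snow criteria. This is a minimal sketch under stated assumptions: the function name, the snowfall threshold (the glossary does not quantify "considerable"), and the sample values are hypothetical, and real reanalysis data would be gridded in space as well as time.

```python
def find_blizzard_candidates(wind_mph, snowfall, step_hours,
                             min_wind_mph=35.0, min_snowfall=0.0,
                             min_duration_hours=3.0):
    """Return (start, end) index pairs, end exclusive, of candidate events.

    A candidate is a run of consecutive time steps with wind at or above
    min_wind_mph and snowfall above min_snowfall (an assumed stand-in for
    "considerable" snow) lasting at least min_duration_hours.
    """
    candidates = []
    run_start = None
    n_steps = min(len(wind_mph), len(snowfall))
    for i in range(n_steps):
        meets = wind_mph[i] >= min_wind_mph and snowfall[i] > min_snowfall
        if meets and run_start is None:
            run_start = i
        elif not meets and run_start is not None:
            if (i - run_start) * step_hours >= min_duration_hours:
                candidates.append((run_start, i))
            run_start = None
    # Close a run that is still open at the end of the series.
    if run_start is not None:
        if (n_steps - run_start) * step_hours >= min_duration_hours:
            candidates.append((run_start, n_steps))
    return candidates


if __name__ == "__main__":
    # Made-up hourly series, for illustration only.
    wind = [20, 40, 45, 38, 10, 36, 20]
    snow = [0, 1, 2, 1, 0, 0.5, 0]
    print(find_blizzard_candidates(wind, snow, step_hours=1))
```

When the time step is coarser than 3 hours, a single qualifying step already satisfies the duration test, so the result errs on the side of including too many candidates, which is the intended superset behaviour.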

Use case 2 from the University of Cambridge (just one example, many more available) (Michael Simmons and Charles Boulton, University of Cambridge)

Particle physics and radiotherapy: the Accel-RT project

The High Energy Physics research group in the Cavendish Laboratory is a Tier 2 centre on the CERN Grid. The organisation’s existing European data centres currently manage up to 15 petabytes of data a year over 100,000 CPUs, but that only represents 20% of the total data generated by its Large Hadron Collider (LHC) accelerator. The LHC’s four major experiments generate around a petabyte of raw data per second, but only about one percent of that is stored.

The particle physics community has developed tools and systems to store, tag, retrieve and manipulate vast quantities of particle collision data which is produced as images. At Cambridge we are applying computational techniques from particle physics to problems in radiotherapy imaging and treatment.

High precision radiotherapy treatment has major image handling and manipulation issues. Such treatment comes at a cost, in terms of manual, labour-intensive treatment planning. Before a patient can be treated, a radiation oncologist has to analyse the results of imaging with multiple modalities (CT scans, X-rays, MRI scans and PET images) and delineate the tumour and all adjacent anatomical structures before the planning physicist can calculate the optimal selection of beam angles and intensities. They must also take account of the movement of tumours and organs during radiotherapy. Furthermore, as tumour volume and position will change over time, this analysis would ideally be repeated for each radiotherapy session.

To translate the research successes in novel radiotherapy into routine, medical practice, we need to provide computing support for the treatment planning process, building upon recent advances in image analysis and the progress made in metadata-driven data management and integration.

In multimodal imaging for radiotherapy, the data throughput for treatment planning objects is modest: a typical image data set for one patient receiving high precision skull base radiotherapy requires 300 MB of storage space; if the patient is undergoing daily imaging to verify the correct position of the tumour target, the verification image data for one patient is 2.3 GB. For a facility treating 600 patients per year with high precision image-guided radiotherapy, this would generate a data set of 1.4 TB per year for each institution. Performing a complete multiple-timepoint image registration for a dataset of this size in near real time requires 16 teraflops of processing power (approximately 100 times the power of a standard PC workstation). Undertaking this kind of processing in a clinical environment, along with the refinement and optimization of the algorithms involved, will require the application of techniques developed for Grid computing. The partnership with the HEP group in the Cavendish will be the key to this. In due course, these algorithms will be implemented on the newer generation of multi-core workstations and GPGPUs.
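The figures in the preceding paragraph can be checked with a few lines of arithmetic. This sketch assumes decimal units (1 TB = 1000 GB) and uses only the numbers quoted above; the variable names are illustrative. The quoted annual total appears to count the daily verification images only, since adding the planning data set for each patient would give a somewhat larger figure.

```python
PATIENTS_PER_YEAR = 600
VERIFICATION_GB_PER_PATIENT = 2.3   # daily verification imaging, per patient
REQUIRED_TFLOPS = 16                # near-real-time registration
WORKSTATION_RATIO = 100             # "approximately 100 times" a standard PC

# Annual verification image volume per institution, in TB (decimal units).
annual_volume_tb = PATIENTS_PER_YEAR * VERIFICATION_GB_PER_PATIENT / 1000

# Workstation performance implied by the quoted ratio, in teraflops.
implied_workstation_tflops = REQUIRED_TFLOPS / WORKSTATION_RATIO

if __name__ == "__main__":
    print(f"Annual volume: about {annual_volume_tb:.1f} TB")
    print(f"Implied workstation: about {implied_workstation_tflops:.2f} teraflops")
```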

See www.accel-rt.org

Accel-RT is funded by the UK Science and Technology Facilities Council, www.stfc.ac.uk

Michael Simmons, Knowledge Exchange Co-ordinator, Cavendish Laboratory, www.phy.cam.ac.uk/research/KE

- Use Case 3: spatio-temporal data in the Earth sciences (Pebau (talk) 07:56, 10 May 2013 (MDT))

- Note: there will be a tutorial at the ESIP summer meeting: Using OGC Standards for Big Earth Data Analytics: the EarthServer Initiative

Methodology Classification

Databases have traditionally served "Big Data" with a large toolkit for improving both performance and convenience: declarative queries, indexing, replication, parallelization, etc. Still, for many recent applications their table-oriented paradigm is too limited. In terms of data structures, two core contributors to today's Big Data are graph data and multi-dimensional arrays, according to database vendors like Oracle and IBM. "NewSQL" databases may help here ("NewSQL" being a buzzword for extending databases to meet new needs without giving up their existing strengths).

Pebau (talk) 07:57, 10 May 2013 (MDT)