Difference between revisions of "Example SQL for Stations"

(Reverted edits by 157.55.35.34 (talk) to last revision by Hoijarvi) |

|||

| (69 intermediate revisions by 3 users not shown) | |||

| Line 7: | Line 7: | ||

The Locations, Times and Parameters constitute the dimensions of the Station-Point data space. | The Locations, Times and Parameters constitute the dimensions of the Station-Point data space. | ||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

== Airnow data source == | == Airnow data source == | ||

| − | Daily PM 2.5, PM 10 and Ozone data can be downloaded from date encoded folders. The data and location tables are in | + | Daily PM 2.5, PM 10 and Ozone data can be downloaded from date encoded folders on the EPA web server. The data and location tables are in text files, one for each day. |

* Folder for 2011-07-05: http://www.epa.gov/airnow/2011/20110705/ | * Folder for 2011-07-05: http://www.epa.gov/airnow/2011/20110705/ | ||

| Line 74: | Line 19: | ||

* Active monitoring sites: http://www.epa.gov/airnow/2011/20110705/active-sites.dat | * Active monitoring sites: http://www.epa.gov/airnow/2011/20110705/active-sites.dat | ||

| − | These constitute the raw input data in their native formats. These are then transferred to a SQL database. The schema to the database is totally up to the implementers. The WCS server uses specific, well defined | + | These constitute the raw input data in their native formats. These are then transferred to a SQL database. The schema to the database is totally up to the implementers. The WCS server uses specific, well defined SQL views that are created for WCS. See SQL view descriptions below. |

| − | View in [http://webapps.datafed.net/Core.uFIND?dataset=airnow CORE data catalog] and [http://webapps.datafed.net/datafed.aspx?wcs=http://data1.datafed.net:8080/AIRNOW&coverage=AIRNOW&field=pmfine datafed browser] | + | View AIRNOW in the [http://webapps.datafed.net/Core.uFIND?dataset=airnow CORE data catalog] and [http://webapps.datafed.net/datafed.aspx?wcs=http://data1.datafed.net:8080/AIRNOW&coverage=AIRNOW&field=pmfine datafed browser] |

== The Design of the SQL Database == | == The Design of the SQL Database == | ||

| Line 85: | Line 30: | ||

Fortunately, relational databases were invented just to solve this problem: | Fortunately, relational databases were invented just to solve this problem: | ||

| − | === Physical | + | === Physical Tables and Virtual SQL Views === |

The '''physical''' database on the left is the real data storage, which contains multiple tables and typical relationships of a fully normalized schema. | The '''physical''' database on the left is the real data storage, which contains multiple tables and typical relationships of a fully normalized schema. | ||

| − | On the right is the '''virtual''' WCS data access view, a flat, simple data source to select data from. The WCS does not have to understand the physical structure at all. The real configuration of the WCS system is in the making of these SQL views. | + | On the right is the '''virtual''' WCS data access SQL view, a flat, simple data source to select data from. The WCS does not have to understand the physical structure at all. The real configuration of the WCS system is in the making of these SQL views. |

| − | + | [[Image:SQL_views.png|400px|thumb]] | |

| − | |||

| − | [[Image:SQL_views.png]] | ||

* Physical database: | * Physical database: | ||

| Line 102: | Line 45: | ||

*** data columns value and quality flag. | *** data columns value and quality flag. | ||

| − | * Virtual View | + | * Virtual SQL View |

** loc_code, lat and lon is selected from the location table | ** loc_code, lat and lon is selected from the location table | ||

** datetime, pm10 and pm10 quality flag is selected from the pm10 data table | ** datetime, pm10 and pm10 quality flag is selected from the pm10 data table | ||

** loc_code is used to join location to the data | ** loc_code is used to join location to the data | ||

| − | ** the result is a flat view with six columns | + | ** the result is a flat SQL view with six columns |

| − | + | In the particular case of AIRNOW encoding of PM 10 data in the datafed SQL Server, the SQL view definition is below. The actual SQL operation that joins the location and data tables for any other implementation will depend on the local schema of that server. | |

CREATE VIEW pm10_data AS | CREATE VIEW pm10_data AS | ||

| Line 115: | Line 58: | ||

FROM location | FROM location | ||

INNER JOIN pm10 ON location.loc_code = pm10.loc_code | INNER JOIN pm10 ON location.loc_code = pm10.loc_code | ||

| + | |||

| + | The output of the SQL view will need to follow the content and format shown below. | ||

| + | |||

| + | [[Image:Data_view.png|400px|thumb]] | ||

The WCS point data is published using a fairly popular design philosophy called ''convention over configuration''. Rather than allowing any name, | The WCS point data is published using a fairly popular design philosophy called ''convention over configuration''. Rather than allowing any name, | ||

longitude or längengrad, and then mark it to be the longitude column somewhere else, the field name is fixed to '''lon'''. On the other hand the data and metadata column names can be anything. | longitude or längengrad, and then mark it to be the longitude column somewhere else, the field name is fixed to '''lon'''. On the other hand the data and metadata column names can be anything. | ||

| − | === Location View === | + | === Location SQL View === |

| − | The Location view needs at least 3 columns: '''loc_code''', '''lat''' and '''lon''' | + | The Location SQL view needs at least 3 columns: '''loc_code''', '''lat''' and '''lon'''. These columns are joined with the data table in the WCS data queries. |

| − | The standard field names are ''loc_code'', ''loc_name'' (optional), ''lat'', ''lon'', ''elev'' (optional). It is important to use these names, since client software, like datafed browser, expects them. Any other fields can be added. The system will just copy the additional data to output. | + | The standard field names are ''loc_code'', ''loc_name'' (optional), ''lat'', ''lon'', ''elev'' (optional). It is important to use these names, since client software, like datafed browser, expects them. Any other fields can be added. The system will just copy the additional data to output. |

| − | [[Image:Location_table.png]] | + | [[Image:Location_table.png|300px|thumb]] |

There are two reasons to use an SQL view also for the location table. | There are two reasons to use an SQL view also for the location table. | ||

| − | * The location data may be distributed over several tables. In this case, the view can hide the SQL joins. | + | * The location data may be distributed over several tables. In this case, the SQL view can hide the SQL joins. |

| − | * The location table most likely has different names for the columns. In this case, the view may do nothing else but rename ''SiteCode'' to ''loc_code'' and ''latitude'' to ''lat'' | + | * The location table most likely has different names for the columns. In this case, the SQL view may do nothing else but rename ''SiteCode'' to ''loc_code'' and ''latitude'' to ''lat''. |

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

=== Notes on the Physical Database Schema Possibilities === | === Notes on the Physical Database Schema Possibilities === | ||

| Line 155: | Line 91: | ||

There is no universally best solution, pros and conses must be weighted for your case. | There is no universally best solution, pros and conses must be weighted for your case. | ||

| − | The main point is '''Physical - Logical Separation!''' Whatever is your physical schema, the flat views are used for data access. The view system allows you to change the physical schema completely without changing the WCS configuration. | + | The main point is '''Physical - Logical Separation!''' Whatever is your physical schema, the flat SQL views are used for data access. The SQL view system allows you to change the physical schema completely without changing the WCS configuration. |

| + | |||

| + | == Client-side browser view of AIRNow WCS == | ||

| + | |||

| + | [[image:AirNOw_WCS_Query.png|100px|thumb]] | ||

| + | |||

| + | Map Query for large area and single time instance: Identifier=AIRNOW RangeSubset=pmfine TimeSequence=2011-07-01T18:00:00 BoundingBox=-90,35,-70,45] . The actual WCS getCoverage call is: | ||

| + | |||

| + | http://data1.datafed.net:8080/AIRNOW?Service=WCS&Version=1.1.2&Request=GetCoverage&Identifier=AIRNOW&Format=text/csv&Store=true&TimeSequence=2011-07-01T18:00:00&RangeSubset=pmfine&BoundingBox=-90,35,-70,45,urn:ogc:def:crs:OGC:2:84 | ||

| + | |||

| + | Time Series Query for a time range and a single location: Identifier=AIRNOW RangeSubset=pmfine[location[420010001]] TimeSequence=2005-06-01/2011-09-01/PT1H . The actual WCS getCoverage call is: | ||

| + | |||

| + | <html><a href="http://data1.datafed.net:8080/AIRNOW?Service=WCS&Version=1.1.2&Request=GetCoverage&Identifier=AIRNOW&Format=text/csv&Store=true&TimeSequence=2005-06-01/2011-09-01/PT1H&RangeSubset=pmfine[location[420010001]]">http://data1.datafed.net:8080/AIRNOW?Service=WCS&Version=1.1.2&Request=GetCoverage&Identifier=AIRNOW&Format=text/csv&Store=true&TimeSequence=2005-06-01/2011-09-01/PT1H&RangeSubset=pmfine[location[420010001]]</a></html> | ||

| + | |||

| + | The syntax ''RangeSubset=pmfine[location[420010001]]'' is standard as documented in [http://www.opengeospatial.org/standards/wcs WCS 1.1.2 Standards]. It specifies the ''pmfine'' parameter, filters by dimension ''location'' selecting loc_code = ''420010001''. | ||

| − | = | + | TimeSequence=2005-06-01/2011-09-01/PT1H has ''time_min/time_max/periodicity''. [http://en.wikipedia.org/wiki/ISO_8601#Durations ISO 8601 definition], PT1H is hourly, P1D is daily. |

| − | + | Using the location table. | |

| + | Web Coverage Service, WCS, was originally designed to serve gridded data, and in the WCS DescribeCoverage response there is no convenient way to encode the location dimension. Web Feature Service, WFS, on the other hand, was designed to serve static geographical features, and matches well for the job. | ||

| + | Location table query for AIRNOW | ||

| − | http://data1.datafed.net:8080/AIRNOW? | + | http://data1.datafed.net:8080/AIRNOW?service=WFS&Version=1.0.0&Request=GetFeature&typename=AIRNOW&outputFormat=text/csv |

| + | The WFS standard is defined in [http://www.opengeospatial.org/standards/wfs WFS 1.0.0]. | ||

| − | + | == AIRNow registered in GEO Air Quality Community Catalog == | |

| + | [[Image:AQ_ComCat.png|300px|thumb]] | ||

| + | Once the AIRNow WCS service is available as a tested and functioning web service, it is ready to be '''publish'''ed in a service catalog(s) where the potential clients can '''find''' it and access ('''bind''' to) it. In other words, it is ready to be included in a network following the publish-find-bind triad of Service Oriented Architecture. | ||

| − | + | Unfortunately, a general registry and catalog of OGC W*S services does not exist. The task of identifying and organizing the available WCS data access services then falls on the communities within specific domains. For the Air Quality domain, a subset of the available interoperable services are gathered in the [[GEO Air Quality Community Catalog]], which is harvested by the GEO Clearinghouse. The service can be registered in multiple catalogs. | |

| − | + | View AIRNow in [http://webapps.datafed.net/Core.uFIND?dataset=airnow&preview=Originators in the AQ Community Catalog]. Fore the selected Dataset=AIRNow , it shows three observation parameters: PM10, pmfine and super (ozone). For each parameter, the remaining discovery metadata are selected from a specific vocabulary, and encoded in ISO 19115 spatial metadata records. | |

| − | + | Also note that for each observation parameter, there is a Browse Data button. This leads to a general purpose [[http://webapps.datafed.net/datafed.aspx?wcs=http://data1.datafed.net:8080/AIRNOW&coverage=AIRNOW&field=pmfine data browser] (the DataFed Browser) which can access and browse any of the registered WCS data parameters, regardless of the server location or type. | |

| − | + | == Configuring the WFS Service and WCS Service for point data == | |

| − | |||

| − | |||

| − | + | In SQL there is not enough metadata to automatically configure the WCS/WFS pair. But with SQL views in place, you only need to tell the servers the SQL view names and column names. In the AIRNOW case, WFS configuration for locations: | |

| − | + | view_alias : location | |

| + | columns : loc_code, loc_name, lat, lon | ||

| − | The data configuration needs the view and some basic metadata. Configuring the PM 10: | + | The data configuration needs the SQL view and some basic metadata. Configuring the PM 10: |

pm10: | pm10: | ||

| Line 192: | Line 146: | ||

columns : loc_code, lat, lon, datetime, pm10, pm10_qf | columns : loc_code, lat, lon, datetime, pm10, pm10_qf | ||

| − | + | The WCS/WFS server can now compile SQL queries against the views and execute them. | |

| + | |||

| + | The location SQL view is easy to query. The WFS server takes the location SQL view name and the configured columns. For extra security, the ''distinct'' keyword removes duplicate rows. | ||

| + | |||

| + | select distinct loc_code, loc_name, lat, lon | ||

| + | from location | ||

| + | |||

| + | The WCS service has more possibilities and therefore the query generation is more complicated. First, if the queried parameter is pm10, | ||

| + | |||

| + | RangeSubset=pm10 | ||

| + | |||

| + | then the WCS processor gets the SQL view and the fields from the configuration | ||

| + | |||

| + | select loc_code, lat, lon, datetime, pm10, pm10_qf | ||

| + | from pm10_data | ||

| + | |||

| + | Then the WCS processor translates the WCS filter parameters to SQL | ||

| + | |||

| + | <html> | ||

| + | <table border="1" cellpadding="2" cellspacing="1"> | ||

| + | <tr> <td><h5>WCS Filter</h5></td> <td><h5>SQL Translation</h5></td> </tr> | ||

| + | <tr> <td>BoundingBox=-90,35,-70,45</td> <td>(lat between 35 and 45) and (lon between -90 and -70)</td> </tr> | ||

| + | <tr> <td>TimeSequence=2011-07-01T18:00:00</td> <td>[datetime] = '2011-07-01T18:00:00'</td> </tr> | ||

| + | <tr> <td>RangeSubset=pm10[location[421330008]]</td> <td>loc_code = '421330008' </td> <td>RangeSubset=pm10 is handled before</td> </tr> | ||

| + | <tr> <td>TimeSequence=2005-06-01/2011-09-01</td> <td>[datetime] between '2005-06-01' and '2011-09-01'</td> </tr> | ||

| + | <tr> <td>TimeSequence=2005-06-01/2011-09-01/PT1H</td> <td>[datetime] between '2005-06-01' and '2011-09-01'</td> <td>periodicity is ignored.</td> </tr> | ||

| + | <tr> <td><h5>datafed extensions</h5></td> </tr> | ||

| + | <tr> <td>months=Dec,Jan,Feb</td> <td>(datepart(month, [datetime]) in (12, 1, 2))</td></tr> | ||

| + | <tr> <td>days_of_week=Sat,Sun,Mon</td> <td>(datepart(weekday, [datetime]) in (5, 6, 0))</td> </tr> | ||

| + | <tr> <td>hours_of_day=11,12,13</td> <td>(datepart(hour, [datetime]) in (11,12,13))</td> </tr> | ||

| + | </table> | ||

| + | </html> | ||

| − | + | Final query for single location timeseries: | |

| − | [ | + | select loc_code, lat, lon, datetime, pm10, pm10_qf |

| + | from pm10_data | ||

| + | where | ||

| + | ([datetime] between '2005-06-01' and '2011-09-01') | ||

| + | and (loc_code = '421330008') | ||

== External Examples == | == External Examples == | ||

Latest revision as of 10:05, September 19, 2012

Back to WCS Access to netCDF Files

This is an example on how to serve Station-Point data through WCS data access protocol. The basis of the use case is the [http:AIRNow.gov AIRNow] dataset that represents hourly near-real-time surface observations of PM10, PM2.5 and ozone over the US. This example use may be applicable to all datasets where:

- Locations: the monitoring is performed a fixed geographic points, i.e. monitoring stations

- Times: the sampling is over a fixed time range (e.g. hour or day) and fixed periodicity (every six hour or every third day)

- Parameters: the number of observed Earth Observation parameters is finite and known

The Locations, Times and Parameters constitute the dimensions of the Station-Point data space.

Airnow data source

Daily PM 2.5, PM 10 and Ozone data can be downloaded from date encoded folders on the EPA web server. The data and location tables are in text files, one for each day.

- Folder for 2011-07-05: http://www.epa.gov/airnow/2011/20110705/

- PM 10 observations: http://www.epa.gov/airnow/2011/20110705/pm10_070511.obs

- PM 2.5 observations: http://www.epa.gov/airnow/2011/20110705/pmfine_070511.obs

- Ozone observations: http://www.epa.gov/airnow/2011/20110705/super_070511.obs

- Active monitoring sites: http://www.epa.gov/airnow/2011/20110705/active-sites.dat

These constitute the raw input data in their native formats. These are then transferred to a SQL database. The schema to the database is totally up to the implementers. The WCS server uses specific, well defined SQL views that are created for WCS. See SQL view descriptions below.

View AIRNOW in the CORE data catalog and datafed browser

The Design of the SQL Database

The most common and flexible method of storing station time series point data is an SQL database. The data collector designs the schema, which captures all the measured data and metadata. This very often requires a database with lots of tables, there is no one-size-fits-all design around because of differing requirements.

Fortunately, relational databases were invented just to solve this problem:

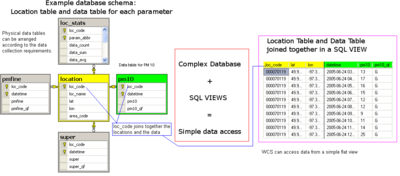

Physical Tables and Virtual SQL Views

The physical database on the left is the real data storage, which contains multiple tables and typical relationships of a fully normalized schema.

On the right is the virtual WCS data access SQL view, a flat, simple data source to select data from. The WCS does not have to understand the physical structure at all. The real configuration of the WCS system is in the making of these SQL views.

- Physical database:

- The center of this database is the location table.

- Each location has a unique loc_code as the key column.

- Each measure parameter has its own data table

- loc_code for the location of the station

- datetime for the observation time

- data columns value and quality flag.

- Virtual SQL View

- loc_code, lat and lon is selected from the location table

- datetime, pm10 and pm10 quality flag is selected from the pm10 data table

- loc_code is used to join location to the data

- the result is a flat SQL view with six columns

In the particular case of AIRNOW encoding of PM 10 data in the datafed SQL Server, the SQL view definition is below. The actual SQL operation that joins the location and data tables for any other implementation will depend on the local schema of that server.

CREATE VIEW pm10_data AS SELECT location.loc_code, lat, lon, datetime, pm10, pm10_qf FROM location INNER JOIN pm10 ON location.loc_code = pm10.loc_code

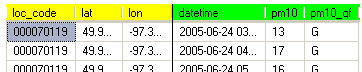

The output of the SQL view will need to follow the content and format shown below.

The WCS point data is published using a fairly popular design philosophy called convention over configuration. Rather than allowing any name, longitude or längengrad, and then mark it to be the longitude column somewhere else, the field name is fixed to lon. On the other hand the data and metadata column names can be anything.

Location SQL View

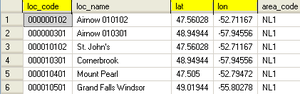

The Location SQL view needs at least 3 columns: loc_code, lat and lon. These columns are joined with the data table in the WCS data queries.

The standard field names are loc_code, loc_name (optional), lat, lon, elev (optional). It is important to use these names, since client software, like datafed browser, expects them. Any other fields can be added. The system will just copy the additional data to output.

There are two reasons to use an SQL view also for the location table.

- The location data may be distributed over several tables. In this case, the SQL view can hide the SQL joins.

- The location table most likely has different names for the columns. In this case, the SQL view may do nothing else but rename SiteCode to loc_code and latitude to lat.

Notes on the Physical Database Schema Possibilities

There are numerous ways to organize the physical data tables, and all of these ways have pros and cons. The AIRNOW database is organized by creating a physical data table for each parameter separately. This arrangement has a some advantages: fastest to query single parameters and no need for NULLs

Other possibilies are:

Big Wide table: One big table with columns loc_code datetime pmfine pm10 super. In this case, querying multiple parameters at the same time requires no joins. Unfortunately, missing data must be expressed with NULLs.

Long and skinny table: loc_code datetime param_code param_value. In this case each row contains two data columns: the parameter code, telling what the measurement actually is, and the parameter value. In this case new parameter codes can be added any time without changing the database schema.

There is no universally best solution, pros and conses must be weighted for your case.

The main point is Physical - Logical Separation! Whatever is your physical schema, the flat SQL views are used for data access. The SQL view system allows you to change the physical schema completely without changing the WCS configuration.

Client-side browser view of AIRNow WCS

Map Query for large area and single time instance: Identifier=AIRNOW RangeSubset=pmfine TimeSequence=2011-07-01T18:00:00 BoundingBox=-90,35,-70,45] . The actual WCS getCoverage call is:

Time Series Query for a time range and a single location: Identifier=AIRNOW RangeSubset=pmfine[location[420010001]] TimeSequence=2005-06-01/2011-09-01/PT1H . The actual WCS getCoverage call is:

The syntax RangeSubset=pmfine[location[420010001]] is standard as documented in WCS 1.1.2 Standards. It specifies the pmfine parameter, filters by dimension location selecting loc_code = 420010001.

TimeSequence=2005-06-01/2011-09-01/PT1H has time_min/time_max/periodicity. ISO 8601 definition, PT1H is hourly, P1D is daily.

Using the location table.

Web Coverage Service, WCS, was originally designed to serve gridded data, and in the WCS DescribeCoverage response there is no convenient way to encode the location dimension. Web Feature Service, WFS, on the other hand, was designed to serve static geographical features, and matches well for the job.

Location table query for AIRNOW

The WFS standard is defined in WFS 1.0.0.

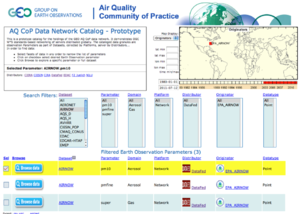

AIRNow registered in GEO Air Quality Community Catalog

Once the AIRNow WCS service is available as a tested and functioning web service, it is ready to be published in a service catalog(s) where the potential clients can find it and access (bind to) it. In other words, it is ready to be included in a network following the publish-find-bind triad of Service Oriented Architecture.

Unfortunately, a general registry and catalog of OGC W*S services does not exist. The task of identifying and organizing the available WCS data access services then falls on the communities within specific domains. For the Air Quality domain, a subset of the available interoperable services are gathered in the GEO Air Quality Community Catalog, which is harvested by the GEO Clearinghouse. The service can be registered in multiple catalogs.

View AIRNow in in the AQ Community Catalog. Fore the selected Dataset=AIRNow , it shows three observation parameters: PM10, pmfine and super (ozone). For each parameter, the remaining discovery metadata are selected from a specific vocabulary, and encoded in ISO 19115 spatial metadata records.

Also note that for each observation parameter, there is a Browse Data button. This leads to a general purpose [data browser (the DataFed Browser) which can access and browse any of the registered WCS data parameters, regardless of the server location or type.

Configuring the WFS Service and WCS Service for point data

In SQL there is not enough metadata to automatically configure the WCS/WFS pair. But with SQL views in place, you only need to tell the servers the SQL view names and column names. In the AIRNOW case, WFS configuration for locations:

view_alias : location columns : loc_code, loc_name, lat, lon

The data configuration needs the SQL view and some basic metadata. Configuring the PM 10:

pm10:

title : Particulate Matter 10

datatype : float

units : ug/cm^3

data_view :

view_alias : pm10_data,

columns : loc_code, lat, lon, datetime, pm10, pm10_qf

The WCS/WFS server can now compile SQL queries against the views and execute them.

The location SQL view is easy to query. The WFS server takes the location SQL view name and the configured columns. For extra security, the distinct keyword removes duplicate rows.

select distinct loc_code, loc_name, lat, lon from location

The WCS service has more possibilities and therefore the query generation is more complicated. First, if the queried parameter is pm10,

RangeSubset=pm10

then the WCS processor gets the SQL view and the fields from the configuration

select loc_code, lat, lon, datetime, pm10, pm10_qf from pm10_data

Then the WCS processor translates the WCS filter parameters to SQL

WCS Filter | SQL Translation | |

| BoundingBox=-90,35,-70,45 | (lat between 35 and 45) and (lon between -90 and -70) | |

| TimeSequence=2011-07-01T18:00:00 | [datetime] = '2011-07-01T18:00:00' | |

| RangeSubset=pm10[location[421330008]] | loc_code = '421330008' | RangeSubset=pm10 is handled before |

| TimeSequence=2005-06-01/2011-09-01 | [datetime] between '2005-06-01' and '2011-09-01' | |

| TimeSequence=2005-06-01/2011-09-01/PT1H | [datetime] between '2005-06-01' and '2011-09-01' | periodicity is ignored. |

datafed extensions | ||

| months=Dec,Jan,Feb | (datepart(month, [datetime]) in (12, 1, 2)) | |

| days_of_week=Sat,Sun,Mon | (datepart(weekday, [datetime]) in (5, 6, 0)) | |

| hours_of_day=11,12,13 | (datepart(hour, [datetime]) in (11,12,13)) |

Final query for single location timeseries:

select loc_code, lat, lon, datetime, pm10, pm10_qf

from pm10_data

where

([datetime] between '2005-06-01' and '2011-09-01')

and (loc_code = '421330008')

External Examples

TODO: separate page for CIRA, more infor about EBAS