Difference between revisions of "Standards and Conventions for WCS Server"


{{HTAP Report Backlinks}}

''"What few things must be the same so that everything else can be different?"''

--Elliot Christian

==Interoperability==

This section reviews the relevant standards and conventions used in the HTAP Data Network Pilot, the combination of the standards and conventions to enable interoperability between the data systems and the networking of resulting .

Interoperability of scientific data systems is difficult. The AQ field, in particular, requires many data types generated by surface-based in situ sensors, emission monitors, remote sensors and air quality model outputs. There is tremendous variety among the servers, clients, applications, and languages that need to interoperate.

It has been a long journey: DataFed since 2000, within HTAP since 2005. The first experience of interoperability was that it required interoperability of people:
* WCS*S: OGC at the protocol level; [http://www.delicious.com/rhusar/interoperability+GALEO GALEON] (Ben Domenico) at the netCDF level; AQ_CoP (Kari & Michael) at the server level
* Data Structure: Unidata at the data model; Cristiane & Martin on the CF naming convention; AQ_CoP (Kari & Michael) at the server
* Metadata: Ted Haberman and Erin at ISO; Cristiane, Martin & Aasmund on the CF naming convention; AQ_CoP (Kari & Michael) at the server
* [http://www.delicious.com/rhusar/interoperability+mediator Mediator]

Each standard (netCDF, CF, WCS) was developed by a different group. These have to fit together semantically and syntactically in the interoperability stack, and a good fit is not guaranteed.

==Interoperability Stack==

''Synopsis: Interoperability in SOA requires multiple layer interoperability''

Effective data 'sharing' hinges on the smooth interoperability between the servers and the clients for the offered services. Interoperability between the client and the server has many requirements: that the client's request is received and understood, that the server properly executes the request, and that the returned response is also received and understood by the client. Thus, interoperability in a network requires satisfying a 'stack' of conditions, depicted in the interoperability stack, Figure 3.1.

==netCDF Data Model==

The lowest layer in the interoperability stack is the physical layer where the data are stored. [http://www.unidata.ucar.edu/presentations/Rew/esri-netcdf.pdf NetCDF] is a data model for scientific data consisting of:
* Variables: name, shape (list of Dimensions), type, attributes, values
* Dimensions: name, length
* Attributes: name, type, value(s)

The netCDF data files are manipulated via a set of libraries for data access (C, Fortran, C++, Java, Perl, Python, Ruby, Matlab, IDL, ...). The data format is portable binary data that supports direct access, metadata, appending new data, and shared access (users need not know anything about the format). Applications for netCDF include gridded output from models (forecast, climate, ocean, atmospheric chemistry), observational data (surface, soundings, satellite), trajectory data, etc.
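The three building blocks of the data model can be illustrated with a small sketch. This is not the netCDF library API; the class names, the example variable and its attribute values are assumptions chosen only to show how Variables, Dimensions and Attributes relate to each other.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, List, Tuple


@dataclass
class Dimension:
    """A named axis with a fixed length."""
    name: str
    length: int


@dataclass
class Variable:
    """A named array whose shape is given by an ordered list of Dimensions."""
    name: str
    dimensions: List[Dimension]
    dtype: str                                   # e.g. "float32"
    attributes: Dict[str, Any] = field(default_factory=dict)
    values: List[float] = field(default_factory=list)

    @property
    def shape(self) -> Tuple[int, ...]:
        return tuple(d.length for d in self.dimensions)

    @property
    def size(self) -> int:
        n = 1
        for length in self.shape:
            n *= length
        return n


# Hypothetical example: a temperature field on a 2 x 3 x 4 (time, lat, lon) grid.
time = Dimension("time", 2)
lat = Dimension("lat", 3)
lon = Dimension("lon", 4)

temperature = Variable(
    name="temperature",
    dimensions=[time, lat, lon],
    dtype="float32",
    attributes={"long_name": "air temperature", "units": "K"},
    values=[273.15] * (2 * 3 * 4),
)

print(temperature.shape, temperature.size)
```

In a real file the same information is read and written through one of the netCDF libraries listed above; the sketch only mirrors the structure.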

NetCDF (network Common Data Form) is used to store and communicate multidimensional data, such as those arising from Earth observations and models. The NetCDF data model is particularly well suited for storing related arrays containing atmospheric and oceanic data and model output. The Climate and Forecast Metadata Conventions (CF) are used in conjunction with NetCDF as a means of specifying the semantic information. The semantic metadata is conveyed internally within the NetCDF datasets, which makes NetCDF self-documenting. This means that it can associate various physical quantities (such as location, pressure and temperature) with spatio-temporal locations (such as points at specific latitudes, longitudes, vertical levels, and times).

For the past two decades, with the help of the U.S. National Science Foundation, netCDF has been [http://www.unidata.ucar.edu/software/netcdf/ maintained and actively supported by the University Corporation for Atmospheric Research (UCAR)]. NetCDF is a set of software libraries and machine-independent data formats that support the creation, access, and sharing of array-oriented scientific data. NetCDF has a strong [http://www.unidata.ucar.edu/software/netcdf/docs/faq.html#maillist user community]. Unidata has been supported by the U.S. National Science Foundation for the past 25 years.

==CF Conventions==

(see also: '''[http://wiki.esipfed.org/index.php/CF_Naming_Conventions AQ standard names] workspace''')

The CF metadata conventions support interoperability for earth science data from different sources containing model output or observational datasets. They were developed for encoding Climate and weather Forecast metadata in netCDF files but are now [http://cf-pcmdi.llnl.gov/projects-and-groups-adopting-the-cf-conventions-as-their-standard applied to many domains] of Earth Science. Besides setting standards for [http://wiki.esipfed.org/index.php/CF_Coordinate_Conventions defining the dimensions and coordinates] of data sets as well as various other data set properties ([http://cf-pcmdi.llnl.gov/documents/cf-conventions/latest-cf-conventions-document-1 see latest CF Conventions document]), arguably the most important contribution of CF to the field of air quality is the definition of [http://cf-pcmdi.llnl.gov/documents/cf-standard-names/ standard names], which provide unique names for chemical constituents and their physical properties. CF standard_names therefore represent an important step towards the definition of a universal air quality vocabulary or name space.

The CF conventions are still evolving and require community participation (see the [http://cf-pcmdi.llnl.gov/discussion/index_html CF discussion page]). Further contributions are sought on: meshing of the CF convention with the WCS protocol and with the data models (e.g. netCDF or relational SQL tables); extending the CF naming to point monitoring data; and refined definition of aerosol components (including size classes) and emission fluxes (in particular with respect to emission sector definitions). The [http://wiki.esipfed.org/index.php/CF_Naming_Conventions AQ standard names] wiki pages are used as a work space to prepare and discuss such additions to the CF standard prior to bringing them to the official [http://cf-pcmdi.llnl.gov/discussion/index_html CF discussion page].

Data in [http://wiki.esipfed.org/index.php/CF-netCDF CF-netCDF] format, in conjunction with the OGC WCS data access protocol, provide a complete specification for loosely coupled networking of distributed air quality data, whereas the netCDF data model by itself is inadequate to unambiguously define the data structure and its meaning. CF-netCDF has been formally recognized by the US Government NASA and NOAA standards bodies. The CF naming conventions are also being adopted by the Global Earth Observation System of Systems (GEOSS), [http://www.earthobservations.org/documents/tasksheets/latest/DA-09-02d.pdf GEO Task DA-09-02-d]: Atmospheric Model Evaluation Network for the application of Earth observations from distributed archives using standardized approaches to evaluate and improve model performance. See also [http://wiki.esipfed.org/index.php/GEO_AQ_CoP GEO Air Quality Community of Practice AQ CoP] for further details.

Recently, UCAR has introduced NetCDF as a candidate OGC standard to encourage broader international use and greater interoperability among clients and servers interchanging data in binary form. This will enable standard delivery of data in binary form via several OGC service interface standards, including the OGC Web Coverage Service (WCS), Web Feature Service (WFS), and Sensor Observation Service (SOS) Interface Standards.

Through the [http://cf-pcmdi.llnl.gov/ CF Conventions], netCDF data files become self-describing, which enables software tools to display data and perform operations on specified subsets with no user intervention. It is equally important that the coordinate data are easy for human users to write and to understand. These conventions enable programs like [http://wiki.esipfed.org/index.php/Datafed_Browser Datafed Browser] or the [http://ogc-interface.icg.kfa-juelich.de:50080 Juelich WCS web interface] to work on CF-netCDF data without configuration.

Each variable in the file has an associated description of what it represents. Each value can be located in space and time. The convention does not standardize any variable or dimension names. Within the netCDF files, all the CF metadata are written in attributes. Important attributes include: [http://cf-pcmdi.llnl.gov/documents/cf-standard-names/ '''standard_name'''], which provides a unique semantic description of the variable; '''long_name''', a human readable, non-standardized variable description which can be used, for example, for labelling plots; and '''units''', the human readable physical dimension of the variable. For variables with a '''standard_name''' the '''units''' attribute is also standardized. Other variables should use the same units where applicable, or at least units that are compatible with the [http://www.unidata.ucar.edu/software/udunits/ udunits] software package.
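How a client might use these three attributes can be sketched as follows. The helper names and the example attribute values (including the units string) are assumptions made for illustration; the code works on plain dictionaries and does not call any netCDF or CF library.

```python
# Attributes a CF-aware client would typically look for on a data variable.
EXPECTED_ATTRIBUTES = ("standard_name", "long_name", "units")


def missing_cf_attributes(attributes, expected=EXPECTED_ATTRIBUTES):
    """Return the expected attribute names that the variable does not carry."""
    return [key for key in expected if key not in attributes]


def plot_label(variable_name, attributes):
    """Build an axis label: prefer long_name, fall back to the variable name."""
    label = attributes.get("long_name", variable_name)
    units = attributes.get("units")
    return f"{label} [{units}]" if units else label


# Hypothetical attributes of a carbon monoxide variable named "co".
co_attributes = {
    "standard_name": "mole_fraction_of_carbon_monoxide_in_air",
    "long_name": "CO volume mixing ratio",
    "units": "1e-9",   # illustrative units string, not taken from the source
}

print(plot_label("co", co_attributes))
print(missing_cf_attributes({"units": "K"}))
```

Because the variable name itself is not standardized, the sketch never interprets "co"; the meaning comes from standard_name and the label from long_name.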


===CF Naming Conventions for Atmospheric Chemistry===

A central element of the CF Conventions is the [http://cf-pcmdi.llnl.gov/documents/cf-standard-names Standard Name Table], which uniquely associates each standard name with a geophysical parameter, named according to the [http://cf-pcmdi.llnl.gov/documents/cf-standard-names CF Naming Guidelines]. These can be used to find or compare datasets in catalog browsers such as [http://wiki.esipfed.org/index.php/AQ_uFIND AQ_uFIND]. As of 22 July 2011, there are [http://cf-pcmdi.llnl.gov/documents/cf-standard-names 693 CF-registered Atmospheric Chemistry names] contributed by the community, covering mostly model-generated parameters. The standard naming of atmospheric chemistry observation variables is in need of community contributions.


'''History.''' The AQ naming activity was initiated in 2006 and coordinated by Christiane Textor. An ad hoc virtual workgroup constructed and discussed a first set of CF standard_names for air chemistry and aerosols using a [http://wiki.esipfed.org/index.php/CFpeople Wiki workspace]. The names were submitted to the CF Community for approval and added to the CF Names table in 2008. Later additions concerned standard names for stratospheric chemical constituents, other individual additions, and refined definitions.

A recent development within the CF group discussions is the general acceptance of [http://www.met.reading.ac.uk/~jonathan/CF_metadata/14.1/ grammar rules] which greatly facilitate the process of adding new entries to the standard_name table. New standard_name proposals must be submitted to the CF mailing list for approval. As long as these new names follow the accepted grammar and consist of previously defined terms or obvious modifications to these terms, acceptance of new names is generally easy. When new concepts are introduced, the CF mailing list discussions can at times become quite extensive and will require some involvement over an extended time period.

'''CF Naming Examples''': Typical AQ standard names describe volume mixing ratios ("mole_fraction"), concentrations, mass mixing ratios, column densities, or tendencies of atmospheric constituents. A few examples copied from the [http://cf-pcmdi.llnl.gov/documents/cf-standard-names/standard-name-table/18/cf-standard-name-table.html standard name table] are "mass_concentration_of_ammonium_dry_aerosol_in_air", "mole_concentration_of_alpha_pinene_in_air", "mole_fraction_of_carbon_monoxide_in_air", "tendency_of_atmosphere_mass_content_of_carbon_monoxide_due_to_emission" and "tendency_of_atmosphere_mass_content_of_formic_acid_due_to_wet_deposition". Most standard names are associated with a detailed definition that can be accessed by clicking on the standard_name entry. There are also standard names for a few species groups ("lumped" compounds) that are often used in models (for example "..._of_nmvoc_..."). Here, the CF group advises providing a full list of compounds or a specific description of the lumping in a comment attribute.
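The regular structure of names such as those quoted above can be exploited by client software, for example to group datasets by species. The sketch below splits names of the form "<quantity>_of_<species>_in_air" with a regular expression. The pattern is an assumption made for this illustration; it is not the official CF grammar, and it deliberately leaves other name forms (such as the "tendency_of_..." names) unparsed.

```python
import re

# Illustrative pattern only: <quantity>_of_<species>_in_air
_IN_AIR_NAME = re.compile(
    r"^(?P<quantity>[a-z_]+?)_of_(?P<species>[a-z0-9_]+)_in_air$"
)


def split_in_air_name(standard_name):
    """Return (quantity, species) for '..._of_..._in_air' names, else None."""
    match = _IN_AIR_NAME.match(standard_name)
    if match is None:
        return None
    return match.group("quantity"), match.group("species")


for name in (
    "mole_fraction_of_carbon_monoxide_in_air",
    "mass_concentration_of_ammonium_dry_aerosol_in_air",
    "tendency_of_atmosphere_mass_content_of_carbon_monoxide_due_to_emission",
):
    print(name, "->", split_in_air_name(name))
```

A production client would more likely look names up in the standard name table than parse them, since the table is the authoritative source.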

For ongoing discussions concerning AQ standard name additions, consult the [http://wiki.esipfed.org/index.php/CF_Naming_Conventions AQ standard names] wiki pages.

===Projects/Programs Using CF Chemistry Names===

The CF convention for naming atmospheric chemicals and aerosols has been adopted by the following model intercomparisons (as of July 2008):

* [http://www.htap.org/ Task Force on Hemispheric Transport of Air Pollution (TF HTAP)]
* [http://www.pa.op.dlr.de/CCMVal/ SPARC Chemistry-Climate Model Validation Activity (CCMVal)]
* [http://www.igac.noaa.gov/ACandC.php IGAC-SPARC Atmospheric Chemistry & Climate Initiative (AC&C)]
* [http://nansen.ipsl.jussieu.fr/AEROCOM/aerocomhome.html Aerosol Comparisons between Observations and Models (AeroCOM)]

===CF Coordinate Conventions===

The CF Conventions offer strong support for the definition of dimensions and coordinates of Earth observations. Through dimensions and coordinates, the extent and structure of observation elements can be defined unambiguously. Four coordinates get special treatment in CF conventions: the three physical directions and time.

Spatial Coordinates: standard_name=latitude, and optionally axis=Y, marks the latitude variable. This can be used in projections that have an orthogonal latitude axis. Similarly, the longitude coordinate variable must have the same name as the longitude dimension and may have axis=X. Longitude coordinate values typically run from -180 to 180 or from 0 to 360, but that is not part of the convention. If the latitude-longitude coordinates are not Cartesian, two-dimensional coordinate variables can be used.
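Since the longitude range is not fixed by the convention, a client that combines data from several servers may need to bring longitudes into one range first. The following is a minimal sketch; the function names and the attribute dictionary are assumptions for illustration, and the half-open ranges [-180, 180) and [0, 360) are a design choice of this sketch rather than something the convention prescribes.

```python
def to_minus180_180(lon):
    """Map any longitude in degrees into the half-open range [-180, 180)."""
    return ((lon + 180.0) % 360.0) - 180.0


def to_0_360(lon):
    """Map any longitude in degrees into the half-open range [0, 360)."""
    return lon % 360.0


# Attributes that mark a longitude coordinate variable, following the text above.
longitude_attributes = {"standard_name": "longitude", "axis": "X"}

eastward = [0.0, 90.0, 190.0, 350.0]            # values on a 0..360 grid
print([to_minus180_180(v) for v in eastward])    # same points on a -180..180 grid
print([to_0_360(v) for v in (-170.0, -10.0)])    # and back again
```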

==WCS Standard==

For atmospheric air quality data and models, the most relevant international standards are the suite of OGC web services: WMS, WCS and WFS.

The WCS may be compared to the OGC Web Map Service (WMS) and the Web Feature Service (WFS); like them, it allows clients to choose portions of a server's information holdings based on spatial constraints and other criteria. Unlike the WMS [OGC 06-042], which portrays spatial data to return static maps (rendered as pictures by the server), the Web Coverage Service provides available data together with their detailed descriptions; defines a rich syntax for requests against these data; and returns data with its original semantics (instead of pictures), which may be interpreted, extrapolated, etc., and not just portrayed. Unlike WFS [OGC 04-094], which returns discrete geospatial features (e.g. roads over a specified region), the Web Coverage Service returns coverages representing space- and time-varying phenomena that relate a spatio-temporal domain to a (possibly multidimensional) range of properties. In short, WCS is designed for the delivery of 'coverages', i.e. numerical data that cover a certain spatial and temporal domain, together with the additional semantics (e.g. dimensions, coordinates) needed to interpret them.

Figure 3.2 Client-server interaction diagram for WCS.

* [http://datafedwiki.wustl.edu/index.php/2006-01-11_Data_Flow_%26_Interoperability_in_DataFed_Service-based_AQ_Analysis_System Data Flow & Interoperability in DataFed Service-based AQ Analysis System]
* [http://datafedwiki.wustl.edu/index.php/2006-03-14_WG_on_HTAP-Relevant_IT_Techniques,_Tools_and_Philosophies:_DataFed_Experience_and_Perspectives HTAP/WCS Slides]
* [http://capita.wustl.edu/capita/capitareports/061024_DataFed_WCS/Screencast/DataFed_WCS/DataFed_WCS.html WCS Screencast]

Grid coverages have a domain comprised of regularly spaced locations along 0, 1, 2, or 3 axes of a spatial coordinate reference system. Their domain may also have a time dimension, which may be regularly or irregularly spaced. A coverage defines, at each location in the domain, a set of fields that may be scalar-valued (such as elevation), or vector-valued (such as brightness values in different parts of the electromagnetic spectrum). These fields (and their values) are known as the range of the coverage.

The WCS interface specifies three operations that may be requested by a WCS client and performed by a WCS server:

'''GetCapabilities''' (required implementation by servers): This operation allows a client to request the service metadata (or Capabilities) document. This XML document describes the abilities of the specific server implementation, usually including brief descriptions of the coverages available on the server. This operation also supports negotiation of the specification version being used for client-server interactions. Clients would generally request the GetCapabilities operation and cache its result for use throughout a session, or reuse it for multiple sessions. When the GetCapabilities operation does not return descriptions of its available coverages, that information must be available from a separate source, such as an image catalog.

'''DescribeCoverage''' (required implementation by servers): This operation allows a client to request full descriptions of one or more coverages served by a particular WCS server. The server responds with an XML document that fully describes the identified coverages.

'''GetCoverage''' (required implementation by servers): This operation allows a client to request a coverage comprised of selected range properties at a selected set of geographic locations. The server extracts the response data from the selected coverage, and encodes it in a known coverage format. The GetCoverage operation is normally run after GetCapabilities and DescribeCoverage operation responses have shown what requests are allowed and what data are available.
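The usual sequence of the three operations can be sketched as key-value-pair request URLs. Everything specific here is an assumption for illustration: the server address, the coverage identifier "o3", the version number, the bounding box and the output format, as well as the parameter spellings, which follow the WCS 1.1 style and should be checked against the Capabilities document of the actual server. The sketch only builds the URLs; it does not contact any server.

```python
from urllib.parse import urlencode

BASE_URL = "http://example.org/wcs"   # hypothetical server endpoint


def wcs_url(base_url, request, **params):
    """Build a WCS key-value-pair request URL for the given operation."""
    query = {"service": "WCS", "version": "1.1.2", "request": request}
    query.update(params)
    # Keep commas, colons and slashes readable in bounding boxes and formats.
    return base_url + "?" + urlencode(query, safe=",:/")


# 1. Ask the server what it offers.
capabilities = wcs_url(BASE_URL, "GetCapabilities")

# 2. Ask for the full description of one coverage.
description = wcs_url(BASE_URL, "DescribeCoverage", identifiers="o3")

# 3. Request the data for a region and a time.
coverage = wcs_url(
    BASE_URL,
    "GetCoverage",
    identifier="o3",
    BoundingBox="-180,-90,180,90,urn:ogc:def:crs:OGC:2:84",
    TimeSequence="2001-06-01",
    format="application/x-netcdf",
)

for url in (capabilities, description, coverage):
    print(url)
```

A client would normally cache the response to the first request and derive the identifiers, valid bounding boxes and supported formats for the later requests from it, rather than hard-coding them as done here.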

==ISO 19115==

A convergence is apparent among leaders in the effort to document data consistently. The World Meteorological Organization, the NextGen Project (FAA and NOAA/NWS), the GOES-R Project (NASA, NOAA/NESDIS, NOAA/NWS), the FGDC, and ESDIS (NASA) have all adopted, or are considering adopting, ISO documentation standards. The European Union is mandating ISO 19115 documentation of available spatial data. Because of this wide adoption, the AQ Community chose the ISO 19115 Metadata for Geospatial Data Standard for describing air quality datasets.

ISO 19115 is suitable because its structure accounts not only for traditional data access and discovery metadata, but also for usage, lineage and other metadata needed for understanding the data. The AQ Information System incorporates the structured metadata along the data usage chain using ISO 19115. The AQ Record includes the ISO 19115 Core, ISO 19119 metadata for describing the geospatial services, and AQ-specific metadata for finding datasets. The Community Record for finding and accessing AQ data is [http://geo-aq-cop.org/isodiscussion continuing to evolve] with participation and input from community members and experience in relevant AQ projects.

Metadata needed for Data Binding (purple box) is encoded in ISO 19119 Service metadata. This metadata identifies what kinds of services are available and lists links to GetCapabilities, DescribeCoverage and example GetCoverage or GetMap requests. The red and yellow boxes for OGC CSW Core Queryable/Returnable fields and the ISO 19115 Metadata CSW Profile are all fields included for finding metadata within the GEOSS GeoNetwork Clearinghouse, which operates using CSW service interfaces and an ISO 19115 profile. The blue box is the Air Quality-specific metadata, which currently only includes the keywords needed for the AQ Community faceted search engine, uFIND.

The facets are: Dataset, Parameter, Instrument, Platform, Domain, Topic Category, Temporal Resolution, Vertical, DataType, Data Distributor, Data Originator. These facets are included so that the AQ user can easily find the data. This additional metadata allows sharp queries in parameter space, time, and physical space. Another feature of the user-centric system is that, using web analytics, additional metadata is attached to each dataset in order to provide information about dataset usage characteristics.

ISO 19115 Records are made by the ISO 19115 Maker service. This service creates an ISO 19115 record from a WCS or WMS service. It gets the metadata either from keywords in the capabilities document or from the calling URL. The resulting ISO records can be submitted to the [http://wiki.esipfed.org/index.php/GEOSS_Clearinghouse GEOSS Clearinghouse].

Latest revision as of 13:24, October 5, 2011


"What few things must be the same so that everything else can be different?"

--Elliot Christian

Interoperability

This section reviews the relevant standards and conventions used in the HTAP Data Network Pilot, and how they combine to enable interoperability between the data systems and their networking.

The first impediment is that clients are not aware of available services. Assuming that clients and servers are aware of each other, their 'interoperability' faces many hurdles:

- The server needs to publish and advertise its data holdings and capabilities.
- The client needs to express its data needs in the context of the server capabilities.
- The server has to respond to the client's query by extracting and preparing the payload precisely as requested.
- The client then has to understand the content of the data package.

In the past, these requirements for interoperability between data providers and users were met through person-to-person communication. Available datasets were published in catalogs and data structures were described in 'readme' files. The clients then 'downloaded' the desired files and, with the help of the 'readme' files, prepared a hand-crafted procedure for importing the data into their data processing tools. Frequently, the processing was performed using dedicated programs written in procedural languages (Fortran, C, Java, etc.).

Service-Oriented Architecture offers an opportunity to mechanize most of these processes.

Interoperability of scientific data systems is difficult. The AQ field, in particular, requires many data types generated by surface-based in situ sensors, emission monitors, remote sensors and air quality model outputs. There is a tremendous variability of servers, clients, applications, and languages that need to be interoperable.

Long journey: DataFed since 2000, within HTAP since 2005. First experience of interoperability: it required interoperability of people.

- WCS: OGC at the protocol level; GALEON (Ben Domenico) at the netCDF level; AQ_CoP (Kari & Michael) at the server level.
- Data structure: Unidata at the data model; Christiane & Martin on the CF naming convention; AQ_CoP (Kari & Michael) at the server.
- Metadata: Ted Haberman and Erin at ISO; Christiane, Martin & Aasmund on the CF naming convention; AQ_CoP (Kari & Michael) at the server.

Mediator

Each standard (netCDF, CF, WCS) was developed by a different group. They have to fit together semantically and syntactically in the interoperability stack, and a good fit is not guaranteed.

Interoperability Stack

Synopsis: Interoperability in SOA requires interoperability at multiple layers.

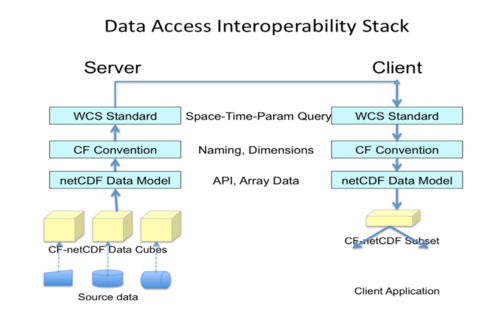

Effective data 'sharing' hinges on the smooth interoperability between the servers and the clients for the offered services. Interoperability between the client and the server has many requirements: that the client's request is received and understood, that the server properly executes the request, and that the returned response is also received and understood by the client. Thus, interoperability in a network requires satisfying a 'stack' of conditions, depicted in the interoperability stack, Figure 3.1.

Figure 3.1 Interoperability stack for WCS.

The interoperability stack is composed of a stack of layers. Each layer in the stack constitutes a standard or convention, i.e. a constraint. In the above example, the lowest layer is the netCDF data layer with the array data model. The array data model is flexible and places few constraints on the structure and content of the netCDF files. While netCDF data are fully accessible through the standard API, clients cannot fully understand the content of the netCDF files. In the next layer, the CF Conventions, semantic constraints are added, including standard definitions of array dimensions and standard names of the variables. Files following the CF-netCDF convention are self-describing and understandable by the clients. The WCS layer imposes standards-based subsetting of CF-netCDF files based on space-time constraints specified by the client.

In order to be interoperable, network nodes need to be interoperable within each layer of the interoperability stack. In other words, servers and clients need to share the same data model, the same naming conventions and a common subsetting query language. Achieving such interoperability has been the goal of many initiatives over the past decade, but the successes have been few.

netCDF Data Model

The lowest layer in the interoperability stack is the physical layer where the data are stored. NetCDF is a data model for scientific data consisting of:

- Variables: name, shape (list of Dimensions), type, attributes, values
- Dimensions: name, length
- Attributes: name, type, value(s)

The netCDF data files are manipulated via a set of libraries for data access (C, Fortran, C++, Java, Perl, Python, Ruby, Matlab, IDL, ...). The data format is portable binary data that supports direct access, metadata, appending new data, and shared access (users need not know anything about the format). Applications for netCDF include gridded output from models (forecast, climate, ocean, atmospheric chemistry), observational data (surface, soundings, satellite), trajectory data, etc.
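The three elements of the data model can be pictured with a small sketch. This is only an illustration in plain Python, not the netCDF library API; the class names and the example variable `o3` are assumptions made for this example.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, List


@dataclass
class Dimension:
    """A named axis with a fixed length."""
    name: str
    length: int


@dataclass
class Variable:
    """A named array whose shape is given by a list of dimensions."""
    name: str
    dimensions: List[Dimension]
    dtype: str
    attributes: Dict[str, Any] = field(default_factory=dict)
    values: List[Any] = field(default_factory=list)

    @property
    def shape(self) -> tuple:
        # The shape follows directly from the lengths of the dimensions.
        return tuple(d.length for d in self.dimensions)


# Hypothetical example: an ozone field on a time x lat x lon grid.
time = Dimension("time", 2)
lat = Dimension("lat", 3)
lon = Dimension("lon", 4)
ozone = Variable(
    name="o3",
    dimensions=[time, lat, lon],
    dtype="float32",
    attributes={"long_name": "ozone"},
)
print(ozone.shape)
```

Nothing in this model says what `o3` means or which dimension is latitude; that gap is what the CF Conventions layer fills.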

NetCDF (network Common Data Form) is used to store and communicate multidimensional data, such as those arising from Earth observations and models. The NetCDF data model is particularly well suited for storing related arrays containing atmospheric and oceanic data and models. Climate and Forecast Metadata Conventions (CF) are used in conjunction with NetCDF as a means of specifying the semantic information. The semantic metadata is conveyed internally within the NetCDF datasets, which makes NetCDF self-documenting. This means that it can associate various physical quantities (such as location, pressure and temperature) with spatio-temporal locations (such as points at specific latitudes, longitudes, vertical levels, and times).

For the past two decades, with the help of the U.S. National Science Foundation, netCDF has been maintained and actively supported by the University Corporation for Atmospheric Research (UCAR). NetCDF is a set of software libraries and machine-independent data formats that support the creation, access, and sharing of array-oriented scientific data. NetCDF has a strong user community. Unidata has been supported by the U.S. National Science Foundation for the past 25 years.

CF Conventions

(see also: AQ standard names workspace)

CF metadata conventions support interoperability for Earth science data from different sources containing model output or observational datasets. They were developed for encoding Climate and weather Forecast metadata in netCDF files but are now applied to many domains of Earth science. Besides setting standards for defining the dimensions and coordinates of data sets as well as various other data set properties (see the latest CF Conventions document), arguably the most important contribution of CF to the field of air quality is the definition of standard names, which provide unique names for chemical constituents and their physical properties. CF standard_names therefore represent an important step towards the definition of a universal air quality vocabulary or name space.

The CF conventions are still evolving and require community participation (see the CF discussion page). Further contributions are sought on:

- Mashing of the CF convention with the WCS protocol and with the data models (e.g. netCDF or relational SQL tables);
- Extending the CF naming to point monitoring data;
- Refined definition of aerosol components (including size classes) and emission fluxes (in particular with respect to emission sector definitions).

The AQ standard names wiki pages are used as a work space to prepare and discuss such additions to the CF standard before bringing them to the official CF discussion page.

Data in CF-netCDF format, in conjunction with the OGC WCS data access protocol, provide a complete specification for loosely coupled networking of distributed air quality data, whereas the netCDF data model by itself is inadequate to unambiguously define the data structure and its meaning. CF-netCDF has been formally recognized by the US Government's NASA and NOAA standards bodies. The CF naming conventions are also being adopted by the Global Earth Observation System of Systems (GEOSS) in GEO Task DA-09-02-d, the Atmospheric Model Evaluation Network, for the application of Earth observations from distributed archives using standardized approaches to evaluate and improve model performance. See also the GEO Air Quality Community of Practice (AQ CoP) for further details. Recently, UCAR has introduced NetCDF as a candidate OGC standard to encourage broader international use and greater interoperability among clients and servers interchanging data in binary form. This will enable standard delivery of data in binary form via several OGC service interface standards, including the OGC Web Coverage Service (WCS), Web Feature Service (WFS), and Sensor Observation Service (SOS) Interface Standards.

Through the CF Conventions, netCDF data files become self-describing, which enables software tools to display data and perform operations on specified subsets with no user intervention. It is equally important that the coordinate data are easy for human users to write and to understand. These conventions enable programs like the DataFed Browser or the Juelich WCS web interface to work on CF-netCDF data without configuration.

Each variable in the file has an associated description of what it represents, and each value can be located in space and time. The convention does not standardize any variable or dimension names. Within the netCDF files, all the CF metadata are written in attributes. Important attributes include:

- standard_name, which provides a unique semantic description of the variable;
- long_name, a human-readable, non-standardized variable description which can be used, for example, for labelling plots;
- units, the human-readable physical dimension of the variable.

For variables with a standard_name, the units attribute is also standardized. Other variables should use the same units where applicable, or at least have units that are compatible with the udunits software package.
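As a minimal sketch of how a client might use these attributes, the snippet below checks whether a variable carries the three descriptive attributes named above. The helper `missing_cf_attributes` and the example attribute values are hypothetical; this is not a CF compliance checker.

```python
# The three descriptive attributes discussed in the text.
DESCRIPTIVE_ATTRIBUTES = ("standard_name", "long_name", "units")


def missing_cf_attributes(attributes):
    """Return the descriptive attribute names that are absent or empty."""
    return [name for name in DESCRIPTIVE_ATTRIBUTES if not attributes.get(name)]


# A carbon monoxide variable described with a registered standard name.
co_attributes = {
    "standard_name": "mole_fraction_of_carbon_monoxide_in_air",
    "long_name": "Carbon monoxide mole fraction",  # free text, e.g. for plot labels
    "units": "1",  # mole fractions are dimensionless
}

# A variable that only has a free-text label.
incomplete_attributes = {"long_name": "CO"}

print(missing_cf_attributes(co_attributes))
print(missing_cf_attributes(incomplete_attributes))
```

A client that finds `standard_name` and `units` present can interpret the variable without consulting a 'readme' file; if they are missing, it has to fall back on human interpretation.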

CF Naming Conventions for Atmospheric Chemistry

A central element of the CF Conventions is the Standard Name Table, which uniquely associates each standard name with a geophysical parameter, named according to the CF Naming Guidelines. These can be used to find or compare datasets in catalog browsers such as AQ_uFIND. As of 22 July 2011, there are 693 CF-registered Atmospheric Chemistry names contributed by the community, covering mostly model-generated parameters. The standard naming of atmospheric chemistry observation variables is in need of community contributions.

History. The AQ naming activity was initiated in 2006 and coordinated by Christiane Textor. An ad hoc virtual workgroup constructed and discussed a first set of CF standard_names for air chemistry and aerosols using a Wiki workspace. The names were submitted to the CF Community for approval and added to the CF Names table in 2008. Later additions concerned specifically standard names for stratospheric chemical constituents, as well as other individual additions and refined definitions.

A recent development within the CF group discussions is the general acceptance of grammar rules which greatly facilitate the process of adding new entries to the standard_name table. New standard_name proposals must be submitted to the CF mailing list for approval. As long as these new names follow the accepted grammar and consist of previously defined terms or obvious modifications to these terms, acceptance of new names is generally easy. When new concepts are introduced, the CF mailing list discussions can at times become quite extensive and will require some involvement over an extended time period.

CF Naming Examples: Typical AQ standard names describe volume mixing ratios ("mole_fraction"), concentrations, mass mixing ratios, column densities, or tendencies of atmospheric constituents. A few examples copied from the standard name table are "mass_concentration_of_ammonium_dry_aerosol_in_air", "mole_concentration_of_alpha_pinene_in_air", "mole_fraction_of_carbon_monoxide_in_air", "tendency_of_atmosphere_mass_content_of_carbon_monoxide_due_to_emission" and "tendency_of_atmosphere_mass_content_of_formic_acid_due_to_wet_deposition". Most standard names are associated with a detailed definition that can be accessed by clicking on the standard_name entry. There are also standard names for a few species groups ("lumped" compounds) that are often used in models (for example "..._of_nmvoc_..."). Here, the CF group advises providing a full list of compounds or a specific description of the lumping in a comment attribute.
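Because the names follow a regular pattern, simple ones can be taken apart mechanically. The sketch below handles only the "<quantity>_of_<species>_in_<medium>" form for four quantities; it is an illustrative simplification, not the CF grammar, and the function name `split_standard_name` is an assumption of this example.

```python
import re

# Simplified pattern: <quantity>_of_<species>_in_<medium>.
# Only a few quantities are listed; tendencies and other forms are not covered.
_SIMPLE_NAME = re.compile(
    r"^(?P<quantity>mole_fraction|mass_fraction|mole_concentration|mass_concentration)"
    r"_of_(?P<species>[a-z0-9_]+)_in_(?P<medium>[a-z_]+)$"
)


def split_standard_name(name):
    """Split a simple standard name into its parts, or return None."""
    match = _SIMPLE_NAME.match(name)
    return match.groupdict() if match else None


print(split_standard_name("mole_fraction_of_carbon_monoxide_in_air"))
print(split_standard_name("mass_concentration_of_ammonium_dry_aerosol_in_air"))
# Names outside the simplified pattern are not recognized.
print(split_standard_name(
    "tendency_of_atmosphere_mass_content_of_carbon_monoxide_due_to_emission"
))
```

A catalog browser could use such parts to group datasets by species or by quantity, although a real implementation would need to follow the full CF Naming Guidelines.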

For ongoing discussions concerning AQ standard name additions, consult the AQ standard names wiki pages.

Projects/Programs Using CF Chemistry Names

The CF convention for naming atmospheric chemicals and aerosols has been adopted by the following model intercomparisons (as of July 2008):

- Task Force on Hemispheric Transport of Air Pollution (TF HTAP)

- SPARC Chemistry-Climate Model Validation Activity (CCMVal)

- IGAC-SPARC Atmospheric Chemistry & Climate Initiative (AC&C)

- Aerosol Comparisons between Observations and Models (AeroCOM)

CF Coordinate Conventions

The CF Conventions offer strong support for the definition of dimensions and coordinates of Earth observations. Through dimensions and coordinates, the extent and structure of observation elements can be defined unambiguously. Four coordinates get special treatment in the CF conventions: the three physical directions and time.

Spatial Coordinates: standard_name=latitude and optionally axis=Y mark the latitude variable. This can be used in projections that have an orthogonal latitude axis. Similarly, the longitude coordinate variable must have the same name as the longitude dimension and may have axis=X. Longitude values typically run from -180 to 180 or from 0 to 360, but the range is not part of the convention. If the latitude-longitude coordinates are not Cartesian, two-dimensional coordinate variables can be used.
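Since the longitude range is not fixed by the convention, a client usually has to detect the coordinate variables and bring longitudes into one range before comparing datasets. The following is a minimal sketch under that assumption; the helper names are hypothetical and the detection relies on the standard_name attribute only.

```python
def is_latitude(attributes):
    """True if the variable's attributes mark it as the latitude coordinate."""
    return attributes.get("standard_name") == "latitude"


def is_longitude(attributes):
    """True if the variable's attributes mark it as the longitude coordinate."""
    return attributes.get("standard_name") == "longitude"


def to_minus180_180(lon):
    """Map a longitude in degrees to the half-open range [-180, 180)."""
    return (lon + 180.0) % 360.0 - 180.0


# A server using 0..360 longitudes and a client expecting -180..180.
server_longitudes = [0.0, 90.0, 270.0, 350.0]
print([to_minus180_180(lon) for lon in server_longitudes])
print(is_longitude({"standard_name": "longitude", "axis": "X"}))
```

Note that with this mapping a longitude of exactly 180 becomes -180, which describes the same meridian.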

WCS Standard

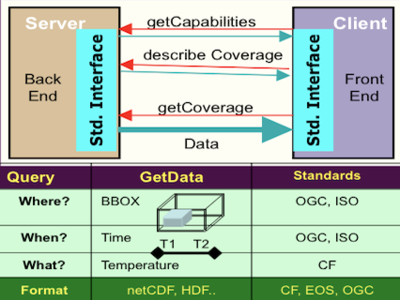

For atmospheric air quality data and models, the most relevant international standards are the suite of OGC web services: WMS, WCS and WFS. The WCS may be compared to the OGC Web Map Service (WMS) and the Web Feature Service (WFS); like them, it allows clients to choose portions of a server's information holdings based on spatial constraints and other criteria. Unlike the WMS [OGC 06-042], which portrays spatial data to return static maps (rendered as pictures by the server), the Web Coverage Service provides available data together with their detailed descriptions; defines a rich syntax for requests against these data; and returns data with its original semantics (instead of pictures), which may be interpreted, extrapolated, etc., and not just portrayed. Unlike WFS [OGC 04-094], which returns discrete geospatial features, the Web Coverage Service returns coverages representing space-time-varying phenomena that relate a spatio-temporal domain to a (possibly multidimensional) range of properties.

In short:

- The Web Map Service (WMS) portrays spatial data and returns static, rendered maps.
- The Web Feature Service (WFS) returns discrete geospatial features, e.g. roads over a specified region.
- The Web Coverage Service (WCS) is designed for the delivery of 'coverages', i.e. numerical data that cover a certain spatial and temporal domain. It returns data (instead of pictures) with additional semantics (e.g. dimensions, coordinates) which may be interpreted, extrapolated, etc.

In air quality and atmospheric sciences, WMS is best suited for data browser applications where the numerical data values are not crucial. WFS is appropriate for delivering and describing fixed air quality monitoring stations. WCS is particularly well suited for delivering semantically rich observations of the 'fluid Earth' including the atmosphere and the oceans. With these three standards, virtually all the essential air quality-related Earth Observations can be encoded and shared through a network of distributed servers and clients.

When these standards-based services are embedded in suitable workflow software in client applications, they represent the fundamental building blocks for agile application development using Service Oriented Architecture (SOA). Both HTAP and GEO are promoting SOA for implementing the data sharing infrastructure. Beyond service orientation, a key requirement for SOA is loose coupling between the services, i.e. seamless interoperability among the services.


Figure 3.2 Client-server interaction diagram for WCS.

- [http://datafedwiki.wustl.edu/index.php/2006-01-11_Data_Flow_%26_Interoperability_in_DataFed_Service-based_AQ_Analysis_System Data Flow & Interoperability in DataFed Service-based AQ Analysis System]

- HTAP/WCS Slides

- WCS Screencast

Grid coverages have a domain comprised of regularly spaced locations along 0, 1, 2, or 3 axes of a spatial coordinate reference system. Their domain may also have a time dimension, which may be regularly or irregularly spaced. A coverage defines, at each location in the domain, a set of fields that may be scalar-valued (such as elevation), or vector-valued (such as brightness values in different parts of the electromagnetic spectrum). These fields (and their values) are known as the range of the coverage. The WCS interface, while limited in this version to regular grid coverages, is designed to extend in future versions to other coverage types defined in OGC Abstract Specification Topic 6, "The Coverage Type".

The WCS interface specifies three operations that may be requested by a WCS client and performed by a WCS server:

GetCapabilities: (required implementation by servers) – This operation allows a client to request the service metadata (or Capabilities) document. This XML document describes the abilities of the specific server implementation, usually including brief descriptions of the coverages available on the server. This operation also supports negotiation of the specification version being used for client-server interactions. Clients would generally request the GetCapabilities operation and cache its result for use throughout a session, or reuse it for multiple sessions. When the GetCapabilities operation does not return descriptions of its available coverages, that information must be available from a separate source, such as an image catalog.

DescribeCoverage: (required implementation by servers) – This operation allows a client to request full descriptions of one or more coverages served by a particular WCS server. The server responds with an XML document that fully describes the identified coverages.

GetCoverage: (required implementation by servers) – This operation allows a client to request a coverage comprised of selected range properties at a selected set of geographic locations. The server extracts the response data from the selected coverage, and encodes it in a known coverage format. The GetCoverage operation is normally run after GetCapabilities and DescribeCoverage operation responses have shown what requests are allowed and what data are available.
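The three operations are typically issued as key-value-pair HTTP requests. The sketch below builds such request URLs with the Python standard library. The server address, the coverage name `o3`, the version number and the output format are all assumptions for illustration, and the parameter names follow WCS 1.1-style KVP encoding; an actual server's Capabilities document determines what it accepts.

```python
from urllib.parse import urlencode

SERVER = "http://example.org/wcs"  # hypothetical WCS endpoint
VERSION = "1.1.2"                  # assumed protocol version


def wcs_url(request, **params):
    """Build a KVP request URL for the given WCS operation."""
    query = {"service": "WCS", "request": request}
    query.update(params)
    return SERVER + "?" + urlencode(query)


# 1. Ask the server what it offers.
capabilities_url = wcs_url("GetCapabilities")

# 2. Ask for the full description of one coverage.
describe_url = wcs_url("DescribeCoverage", version=VERSION, identifiers="o3")

# 3. Request a space-time subset of that coverage.
coverage_url = wcs_url(
    "GetCoverage",
    version=VERSION,
    identifier="o3",
    BoundingBox="-180,-90,180,90,urn:ogc:def:crs:OGC:2:84",
    TimeSequence="2008-07-01T00:00:00Z",
    format="image/netcdf",
)

for url in (capabilities_url, describe_url, coverage_url):
    print(url)
```

The order mirrors the session described above: the client reads the Capabilities document first, then the coverage description, and only then forms a GetCoverage query that the server is known to support.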

ISO 19115

The netCDF data model, the CF conventions and the OGC access service are frequently augmented by metadata. The primary purpose of this metadata is to facilitate finding and accessing the data as services. It includes intrinsic discovery metadata such as spatial and temporal extent, keywords and contact information for the provider, as well as distribution information for data access. Additionally, providers include various other information to help users once they are at their site. This approach is provider-driven and does not incorporate the user needs.

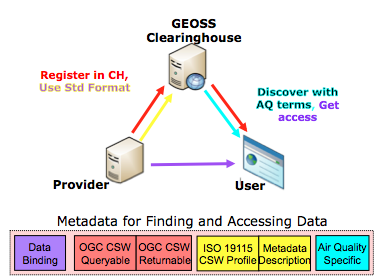

A convergence is apparent among leaders in the effort to document data consistently. The World Meteorological Organization, the NextGen Project (FAA and NOAA/NWS), the GOES-R Project (NASA, NOAA/NESDIS, NOAA/NWS), the FGDC, and ESDIS (NASA) have all adopted, or are considering adopting, ISO documentation standards. The European Union is mandating ISO 19115 documentation of available spatial data. Because of this wide adoption, the AQ Community chose the ISO 19115 Metadata for Geospatial Data Standard for describing air quality datasets.

ISO 19115 is suitable because its structure accounts not only for traditional data access and discovery metadata, but also for usage, lineage and other metadata needed for understanding the data. The AQ Information System incorporates the structured metadata along the data usage chain using ISO 19115. The AQ Record includes the ISO 19115 Core, ISO 19119 metadata for describing the geospatial services, and AQ-specific metadata for finding datasets. The Community Record for finding and accessing AQ data is continuing to evolve with participation and input from community members and experience in relevant AQ projects.

Figure 3.3 ISO19115 metadata developed by air quality Community of Practice.

Metadata needed for Data Binding (purple box) is encoded in ISO 19119 Service metadata. This metadata identifies what kinds of services are available and lists links to GetCapabilities, DescribeCoverage and example GetCoverage or GetMap requests. The red and yellow boxes, for the OGC CSW Core Queryable/Returnable fields and the ISO 19115 Metadata CSW Profile, contain the fields included for finding metadata within the GEOSS GeoNetwork Clearinghouse, which operates using CSW service interfaces and an ISO 19115 profile. The blue box is the Air Quality-specific metadata, which currently only includes the keywords needed for the AQ Community faceted search engine, uFIND. The facets are: Dataset, Parameter, Instrument, Platform, Domain, Topic Category, Temporal Resolution, Vertical, DataType, Data Distributor, Data Originator. These facets are included so that the AQ user can easily find the data. This additional metadata allows sharp queries in parameter space, time, and physical space. Another feature of the user-centric system is that, using web analytics, additional metadata is attached to each dataset to provide information about dataset usage characteristics.
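The idea of faceted search can be sketched in a few lines: each record carries a value for some of the facets listed above, and a query keeps the records that match every requested facet. This is only an illustration of the principle, not the uFIND implementation; the function name and the records are hypothetical.

```python
def facet_search(records, **facets):
    """Return the records whose facet values match all requested facets."""
    return [
        record for record in records
        if all(record.get(name) == value for name, value in facets.items())
    ]


# Hypothetical catalog entries described by a few of the facets.
records = [
    {"Dataset": "dataset_a", "Parameter": "o3", "Platform": "surface", "DataType": "point"},
    {"Dataset": "dataset_b", "Parameter": "o3", "Platform": "model", "DataType": "grid"},
    {"Dataset": "dataset_c", "Parameter": "co", "Platform": "model", "DataType": "grid"},
]

hits = facet_search(records, Parameter="o3", DataType="grid")
print([record["Dataset"] for record in hits])
```

Each additional facet narrows the result set, which is what makes the queries 'sharp'.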

ISO 19115 Records are made by the ISO 19115 Maker service. This service creates an ISO 19115 record from a WCS or WMS service. It gets the metadata either from keywords in the capabilities document or from the calling URL. The resulting ISO records can be submitted to the GEOSS Clearinghouse.