Encoding Relational Tables in NetCDF

Back to Questions and Comments about CF-1.6 Station Data Convention

Rational Behind this Proposal

The WCS service has potential to grow beyond regular, gridded coverages. Coverage types that need to be described are for example:

- station point data

- station trajectory data

- lidar data

- moving station data, like aeroplane or ship

With a relational system, it is easy to design a schema that captures all the data and the metadata.

The netCDF data format was designed to contain mainly gridded multidimensional data. The format does not have a special support for tables, which sounds like a severe restriction, but it's not.

- It's possible to agree about a simple way to encode tables in a netCDF file.

- Tables with multiple fields.

- Proper primary keys for tables.

- Foreign keys for referential integrity.

- Common relationships: many-to-many, 1-to-many, 1-to-1.

- It's possible to be efficient, the encoding does not have to be one size fits all.

- Sparse tables can be encoded row-by-row manner.

- Dense tables can be encoded as multidimensional hypercubes.

- Mix and match the two above at will.

- It's possible to be elegant.

- No mysterious indirections.

- No complex offset calculations.

- A reader-writer library can take care of following the rules, letting a programmer to operate on higher level.

In the examples, table names are omitted in attribute declaration for clarity.

Encoding Table Columns

Table is a collection of columns. These are indicated with table_name attribute.

Every table needs a primary key. It is indicated with primary_key = "T" attribute.

Example: A station table with three columns: station_code, lat and lon. There are ten rows with indexes 0..9

dimensions:

station = 10 ; // station table dimension

station_code_length = 4 ; // artificial dimension for station code, with max four character length.

variables:

char station_code(station, station_code_length) ;

table_name = "station";

primary_key = "T";

float lon(station) ;

table_name = "station";

float lat(station) ;

table_name = "station";

The dimension name station can be anything. It does not have to equal the table name station, but should be for readability. Same for station_code_length, it can be called anything.

A table can have multiple fields as a primary key.

Encoding Relations

A typical station data instance is a relation (station_code, time, temperature, humidity). The pair (station_code, time) is the primary key; data is recorded at a certain station, at a certain time. Temperature and humidity are the recorded parameters.

Encoding this into netCDF can be done in two ways, using either variable or a dimension.

Encoding Relation as a Record Array

We have now the station table, let's add the data table with humidity and temperature, with station and time as keys.

dimensions:

station = 10 ; // station table dimension

station_code_length = 4 ; // artificial dimension for station code, with max four character length.

data = UNLIMITED // each row in the data table.

variables:

char station_code(station, station_code_length) ;

table_name = "station";

primary_key = "T";

float lon(station) ;

table_name = "station";

float lat(station) ;

table_name = "station";

// The foreign key column: indexes to the station table

int data_station_code(data) ;

table_name = "data";

foreign_key = "station";

primary_key = "T";

// The second primary key column:

int time(data) ;

table_name = "data";

primary_key = "T";

units = "hours since 2000-01-01";

float temperature(data) ;

table_name = "data";

float humidity(data) ;

table_name = "data";

The variable data_station_code is marked foreign_key = "station", which means, that the variable is an index to the station table primary key, station_code, variable. Both are marked primary_key = "T" which means, that the combination of (data_station_code, time) is unique.

To read the full table (station_code, lat, lon, time, temperature, humidity), row by row:

for idx in range of data

read station:

read data_station_code(idx)

read variables station_code, lat and lon at that index

read time(idx), calculate "hours since 2000-01-01"

read temperature(idx)

read humidity(idx)

This is an space-efficient encoding for sparse tables.

Encoding Relation as a Multidimensional Array

This is identical to CF-1.6 encoding. Both variables are 2-dimensional cubes.

dimensions:

station = 10 ; // station table dimension

station_code_length = 4 ; // artificial dimension for station code, with max four character length.

time = UNLIMITED ; // we can again add one time slice at a time

variables:

char station_code(station, station_code_length) ;

table_name = "station";

primary_key = "T";

float lon(station) ;

table_name = "station";

float lat(station) ;

table_name = "station";

int time(time) ;

table_name = "time";

primary_key = "T";

units = "hours since 2000-01-01";

float temperature(time,station) ;

table_name = "data";

float humidity(time,station) ;

table_name = "data";

This declares two arrays, one for temperature and one for humidity. Both are two-dimensional. The dimensions time and station refer to the time and station variables. They implicitly became part of the primary key.

To find temperature at "ACAD" in "2004-07-22":

Find index for "ACAD" from the "station_code" array. Let's say it's 2.

2004-07-22 is 39936 hours since 2000-01-01.

find 39936 from time. Lets say it's at index 317.

Read temperature(317,2).

Read humidity(317,2).

This is a very space-efficient encoding, if most of the stations have data most of the time.

Summa Summarum

- A typical schema of any relational database can be encoded.

- If the data is sparse, foreign keys can be expressed as variables.

- If the data is dense, foreign keys can be expressed as dimensions, resulting in vastly smaller files than flat CSV would be.

- There can be any combination of the two, enabling the best of both worlds whatever your data will look like.

More Real World Examples

NILU / EBAS

Full Schema

The EBAS database schema is rather large, full schema outline looks like this:

Essential Schema

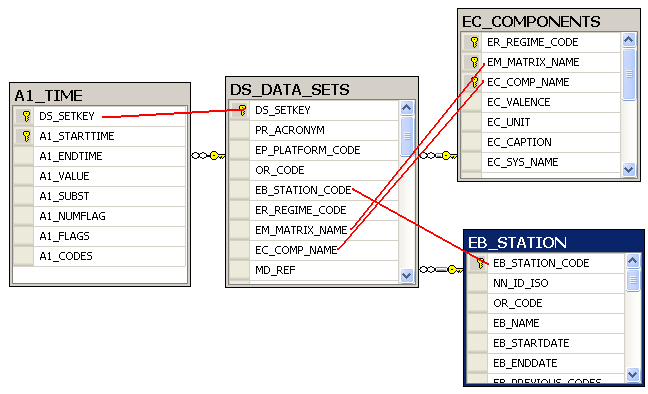

The essential schema is not that big, only four tables.

The Essential Schema Four Tables

- Table EB_STATION: This contains the essential of the station information.

- Field EB_STATION_CODE is the unique code.

- Fields EB_LATITUDE, EB_LONGITUDE, EB_ALTITUDE_ASL for the geographic location.

- More miscellaneous fields.

- Table EC_COMPONENTS: This table contains the measured parameters.

- Field EM_MATRIX_NAME defines which medium the parameter is measured in (e.g. precipitation, air, pm10, ...)

- Field EC_COMP_NAME is the name of the parameter (e.g. sulphate_total, ...)

- Various other fields, like EC_UNIT define more metadata.

- Table DS_DATA_SETS describes what is called a dataset in EBAS: One parameter measured at a certain station. You can imagine a dataset as an instrument on a fixed location measuring one parameter. This leads us to the main data table. The full version has some more complex dependencies on instrument, method etc.

- Fields EM_MATRIX_NAME and EC_COMP_NAME refer to the EC_COMPONENTS table.

- Fields DS_STARTDATE and DS_ENDDATE provide the timestamp of the first and last measurement in the timeseries.

- There are many other fields and references to many other tables.

- Table A1_TIME Contains all the observations, all the parameters from all the stations

- Field DS_SETKEY refers to the dataset.

- Fields A1_STARTTIME and A1_ENDTIME define the measurement time.

- Field A1_VALUE contains the data value

- The rest of the fields are metadata.

Reading EBAS Data

So a single data row from A1_TIME can be interpreted as follows:

- The observation value is A1_VALUE

- The observation time is (A1_STARTTIME to A1_ENDTIME)

- The observation dataset is found with DS_SETKEY from DS_DATA_SETS table.

- The DS_DATA_SETS contains EM_MATRIX_NAME and EC_COMP_NAME that define the observation parameter.

- Field EB_STATION_CODE is the unique code refers to the observation station.

Encoding a Subset of Fields in netCDF

This shows encoding a small subset of the essential schema.

dimensions:

A1_TIME = UNLIMITED

EB_STATION = 120

EB_STATION_CODE_length = 7

EB_NAME_length = 40

EC_COMPONENTS = 14

EM_MATRIX_NAME_length = 40

EC_COMP_NAME_length = 100

EC_UNIT_length = 8

DS_DATA_SETS = 530 // all the 120 * 14 datasets don't exist.

DS_SETKEY_length = 10

PR_ACRONYM_length = 10

variables:

// fields for table EB_STATION

char EB_STATION_CODE(EB_STATION,EB_STATION_CODE_length)

table_name = "EB_STATION"

primary_key = "T"

char EB_NAME(EB_STATION,EB_NAME_length)

table_name = "EB_STATION"

float EB_LONGITUDE(EB_STATION)

table_name = "EB_STATION"

float EB_LATITUDE(EB_STATION)

table_name = "EB_STATION"

etc...

// fields for table EC_COMPONENTS

char EM_MATRIX_NAME(EC_COMPONENTS,EM_MATRIX_NAME_length)

table_name = "EC_COMPONENTS"

primary_key = "T"

char EC_COMP_NAME(EC_COMPONENTS,EC_COMP_NAME_length)

table_name = "EC_COMPONENTS"

primary_key = "T"

char EC_UNIT(EC_COMPONENTS,EC_UNIT_length)

table_name = "EC_COMPONENTS"

etc...

// fields for table DS_DATA_SETS

char EC_COMPONENTS_DS_SETKEY(DS_DATA_SETS,DS_SETKEY_length)

table_name = "DS_DATA_SETS"

primary_key = "T"

char PR_ACRONYM(DS_DATA_SETS,PR_ACRONYM_length)

table_name = "DS_DATA_SETS"

// References to EB_STATION and EC_COMPONENTS

int DS_DATA_SETS_EB_STATION(DS_DATA_SETS)

table_name = "DS_DATA_SETS"

foreign_key = "EB_STATION"

int DS_DATA_SETS_EC_COMPONENTS(DS_DATA_SETS)

table_name = "DS_DATA_SETS"

foreign_key = "EC_COMPONENTS"

etc...

// Table A1_TIME

// Indexes to DS_DATA_SETS

int A1_TIME_DS_DATA_SETS(A1_TIME)

table_name = "A1_TIME"

primary_key = "T"

foreign_key = "DS_DATA_SETS"

int A1_STARTTIME(A1_TIME)

table_name = "A1_TIME"

primary_key = "T"

float A1_VALUE(A1_TIME)

table_name = "A1_TIME"

float A1_SUBST(A1_TIME)

table_name = "A1_TIME"

etc...

Creating writing and reading such netCDF files will require a high level library. We need to be able to create tables declaratively, fill the tables by writing collections of records to the tables, and read the tables, with an inner join from table to table.

CIRA / VIEWS

A generic client can now read tables and their relations, but unless more knowledge is available, there's no way to know what that thing actually means. The table encoding gives syntax, but not the semantics. The WCS conventions need to come up with standard schemas, that standardize how metadata is encoded.

The example here is CIRA/VIEWS where the schema is a typical star schema, and each measurement refer to several metadata tables:

- Parameter

- Aggregation

- Method

- DataSource

- Program

- Site

The encoding of such tables is straightforward. Using CIRA/VIEWS nomenclature

dimensions:

// dimensions for max name lengths

SiteCode_length = 4 ;

SiteName_length = 50 ;

ProgramCode_length = 8

etc...

// dimensions for metadata tables

Time = 800

Site = 80

Aggregation = 3 // just a few aggregation methods are available

Program = 5 // similarly, the program table is small

etc...

// main data dimension:

AirFact3 = UNLIMITED

variables:

// variables for metadata tables

char SiteCode(site, SiteCode_length) ;

table_name = "Site";

primary_key = "T";

float Longitude(site) ;

table_name = "Site";

float Latitude(site) ;

table_name = "Site";

about 10 more fields for Site...

char ProgramCode(Program, ProgramCode_length) ;

table_name = "Program";

char ProgramName(Program, ProgramName_length) ;

table_name = "Program";

five more fields....

six more tables...

float AirFact3_AirFact(AirFact3) ;

table_name = "AirFact3";

int AirFact3_Parameter(AirFact3) ; // refers to parameter table (Alf, SO4f, MT, Mf etc...)

table_name = "AirFact3";

foreign_key = "Parameter"

int AirFact3_Site(AirFact3) ; // refers to Site

table_name = "AirFact3";

foreign_key = "Site"

int AirFact3_ObsTime(AirFact3) ;

table_name = "AirFact3";

units = "days since 2000-01-01"

int AirFact3_Program(AirFact3) ; // refers to Program

table_name = "AirFact3";

foreign_key = "Program"

plenty more columns....

This kind of pure star-schema, with data table in the middle, and metadata tables related around it, would be a good way to export any metadata in an easily digested format.